MyzharBot v2

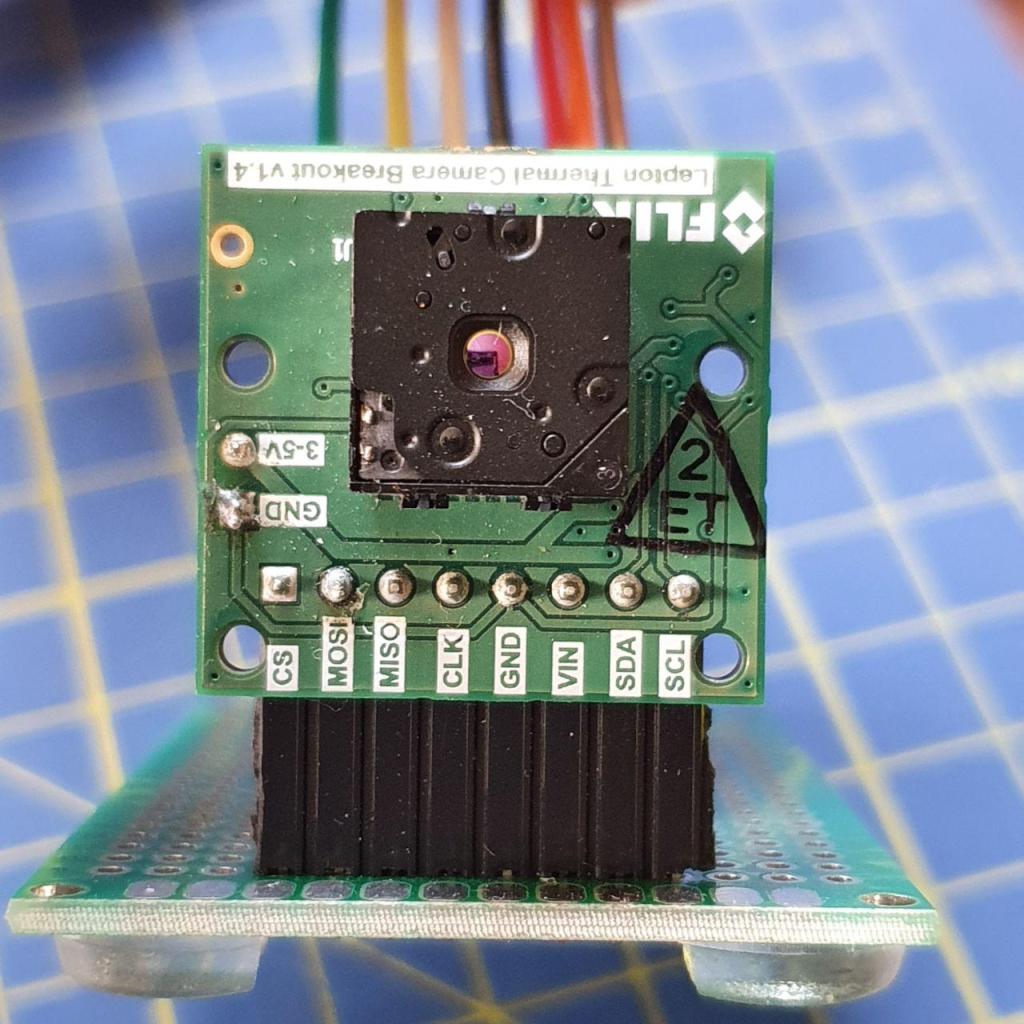

In late 2012, I began developing the second version of MyzharBot with the ambitious goal of adding autonomous navigation capabilities.

The first version had a critical limitation: insufficient space to accommodate a computer for autonomous navigation.

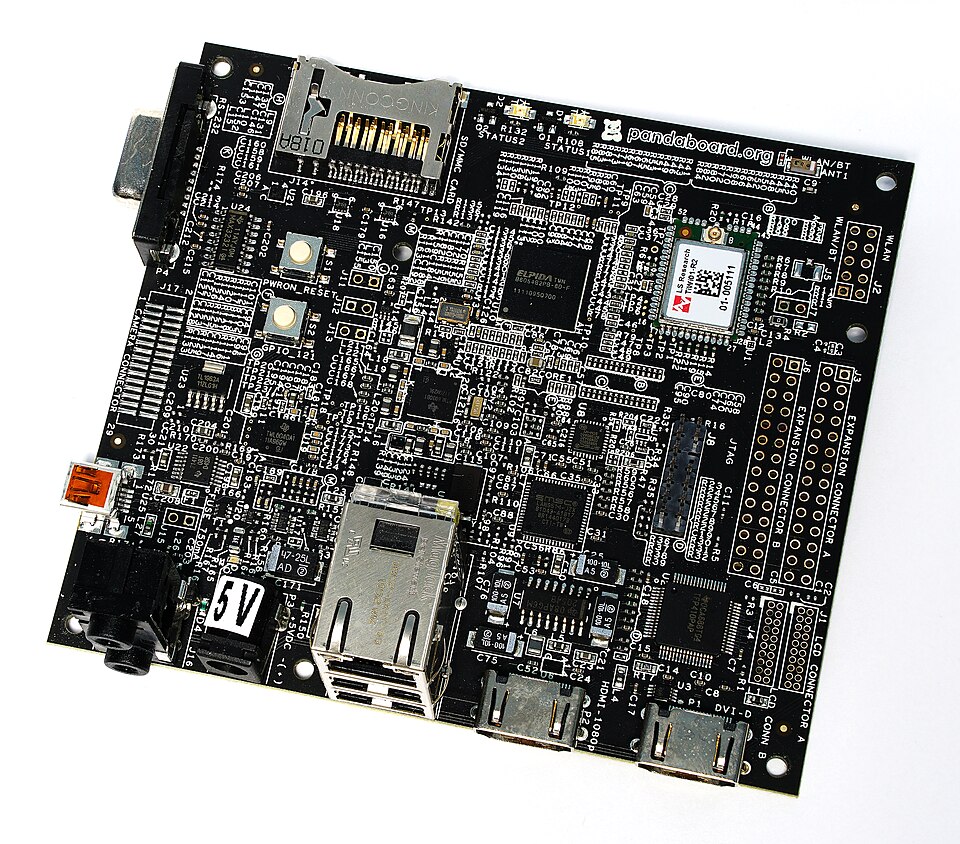

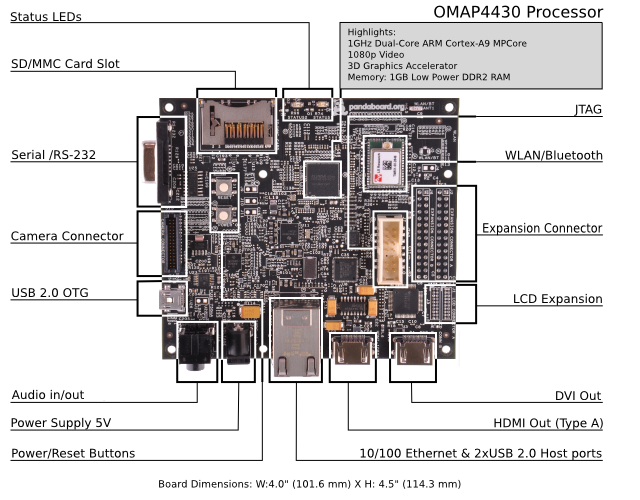

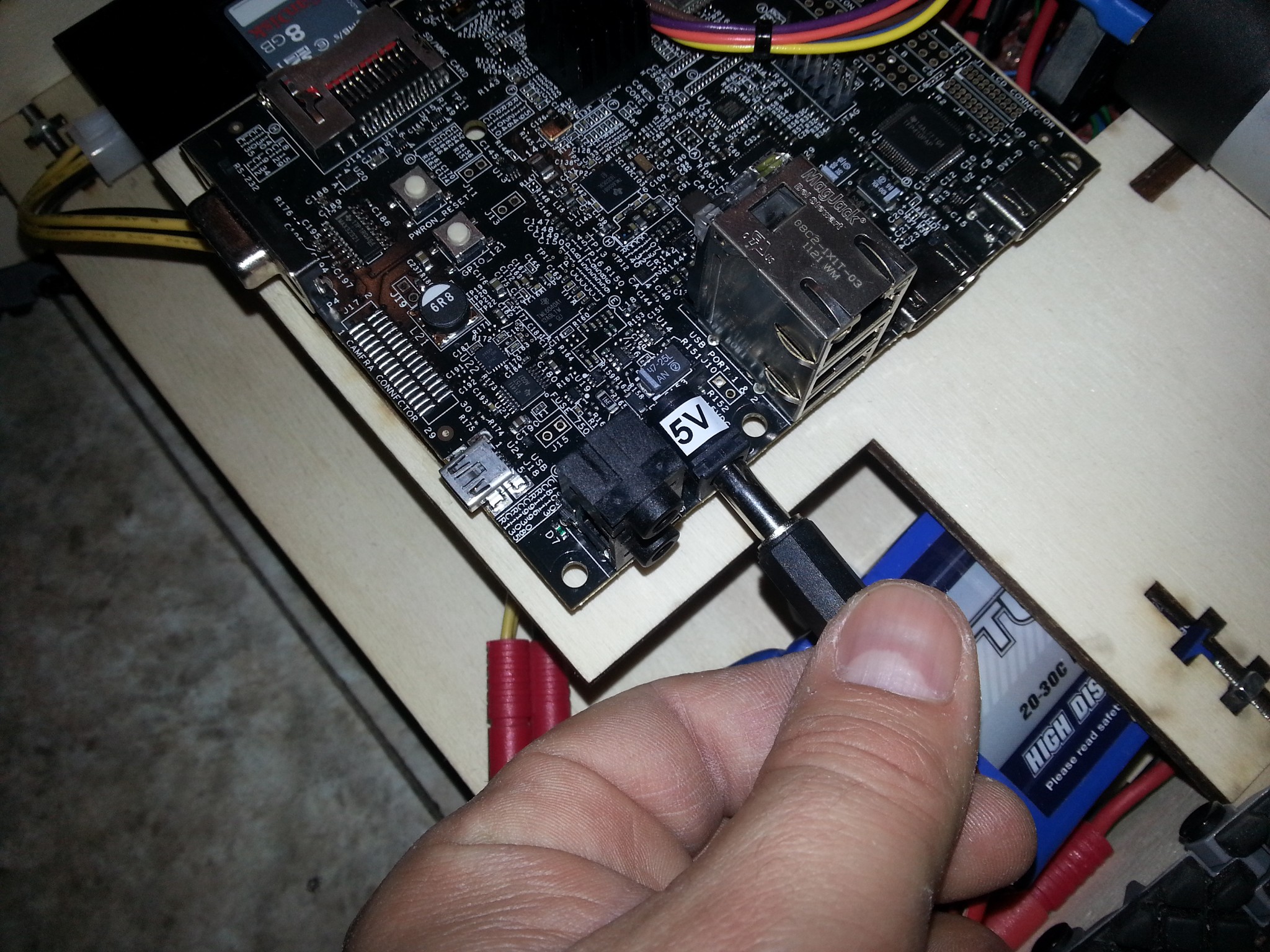

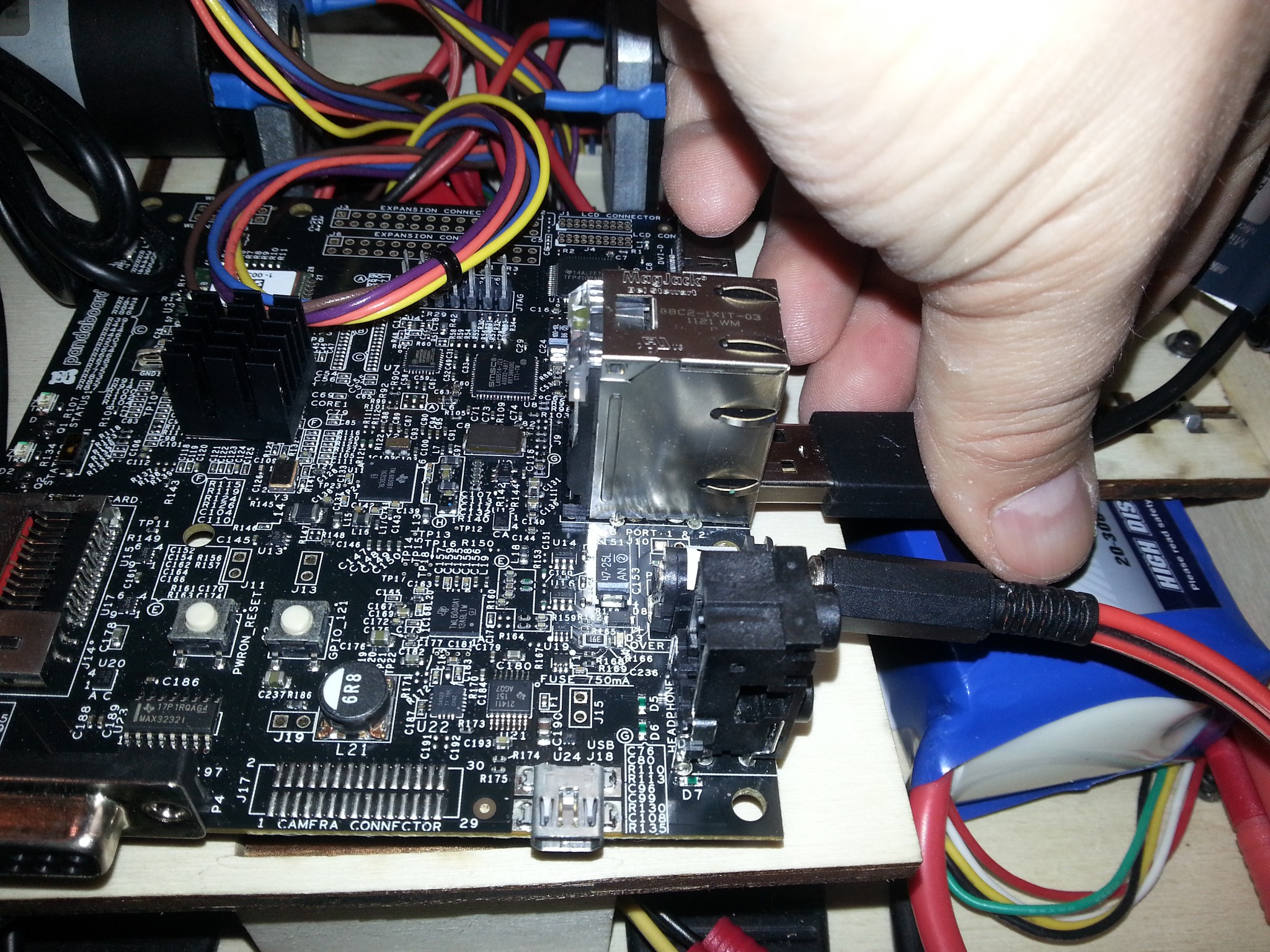

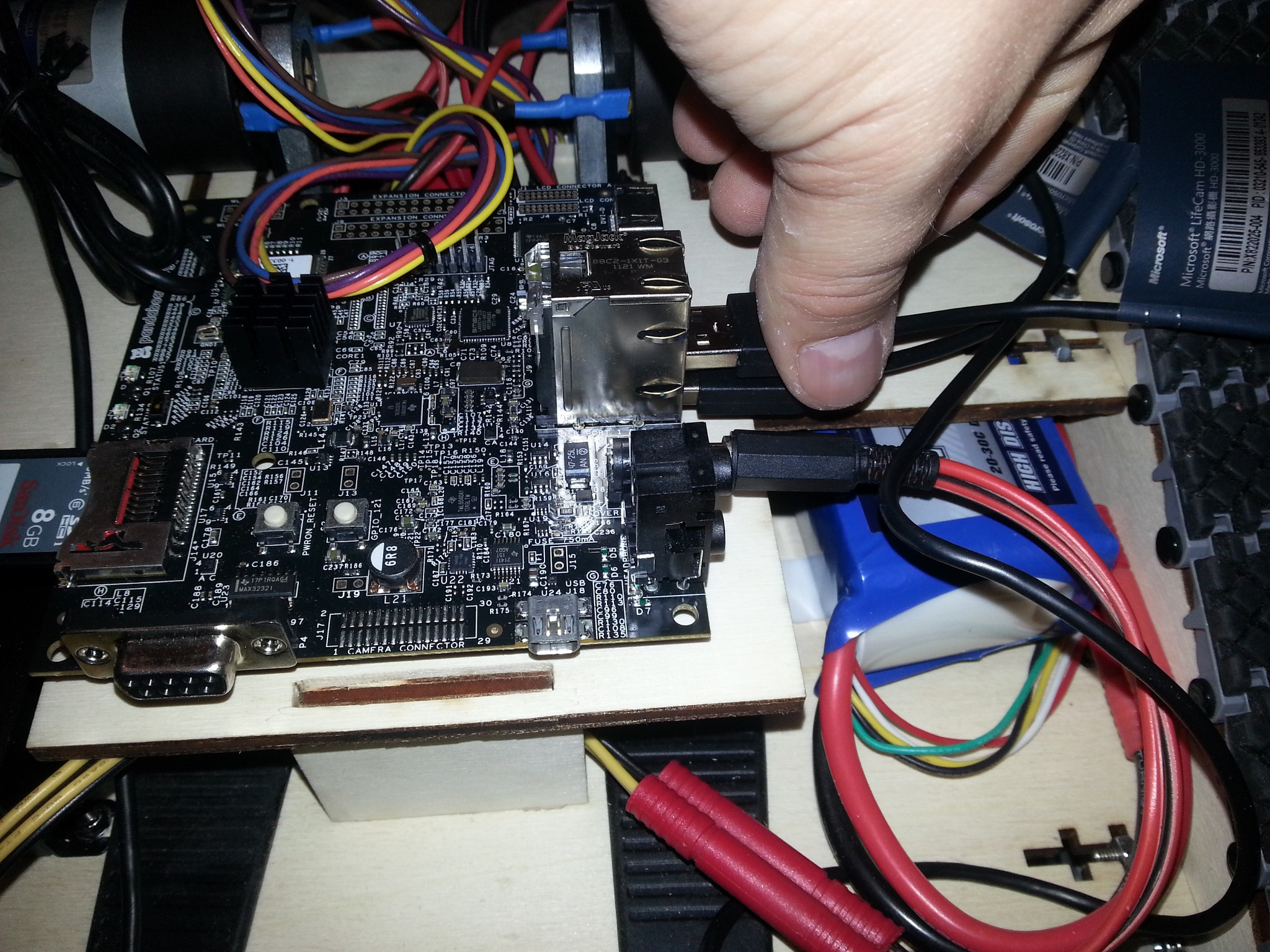

I chose the Pandaboard ES, a compact ARM-based embedded Linux computer that showed promise for robotics applications. It offered a robust set of I/O pins and competitive performance for its time.

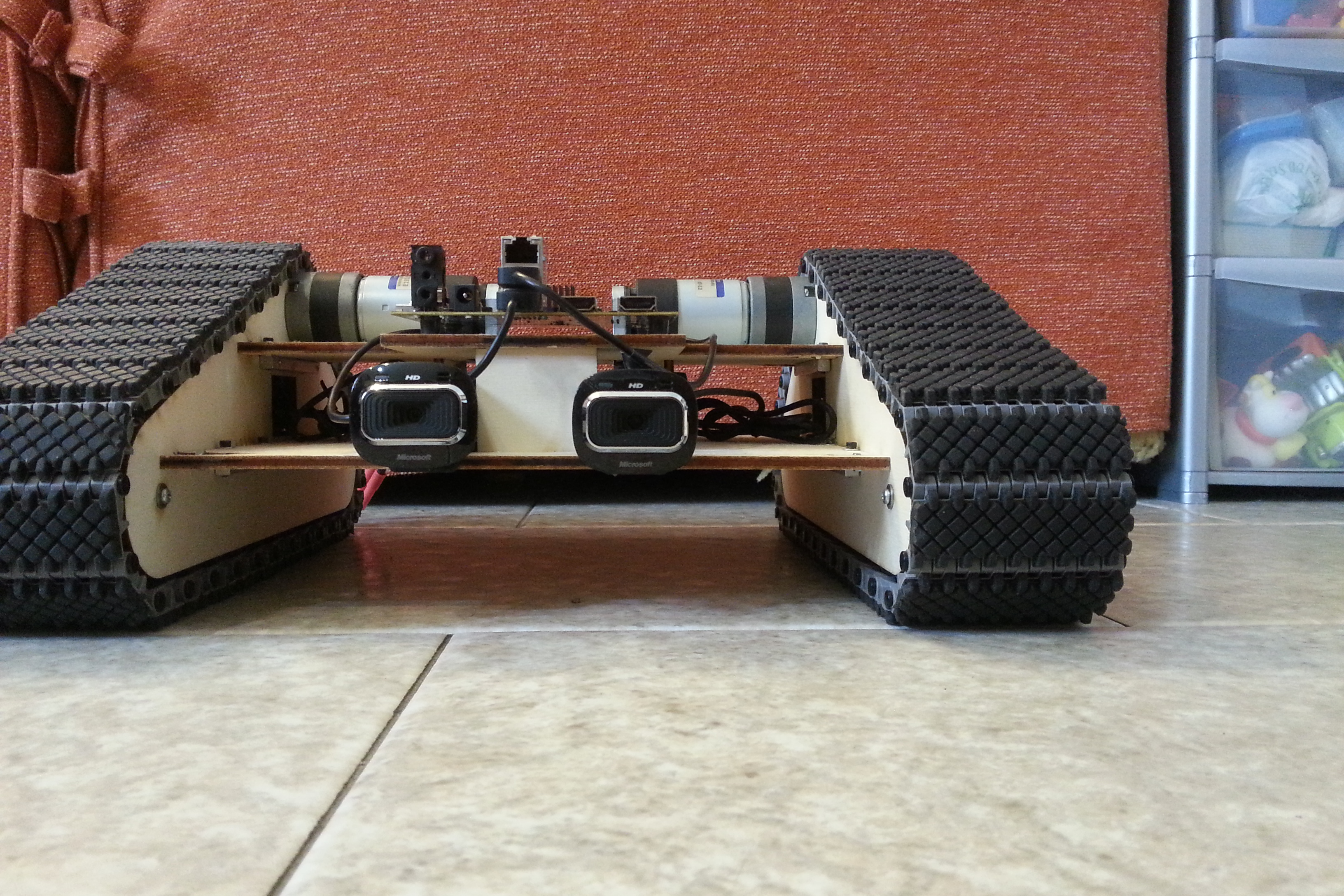

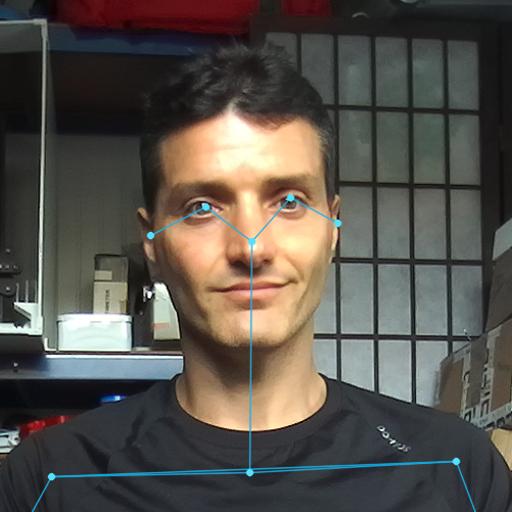

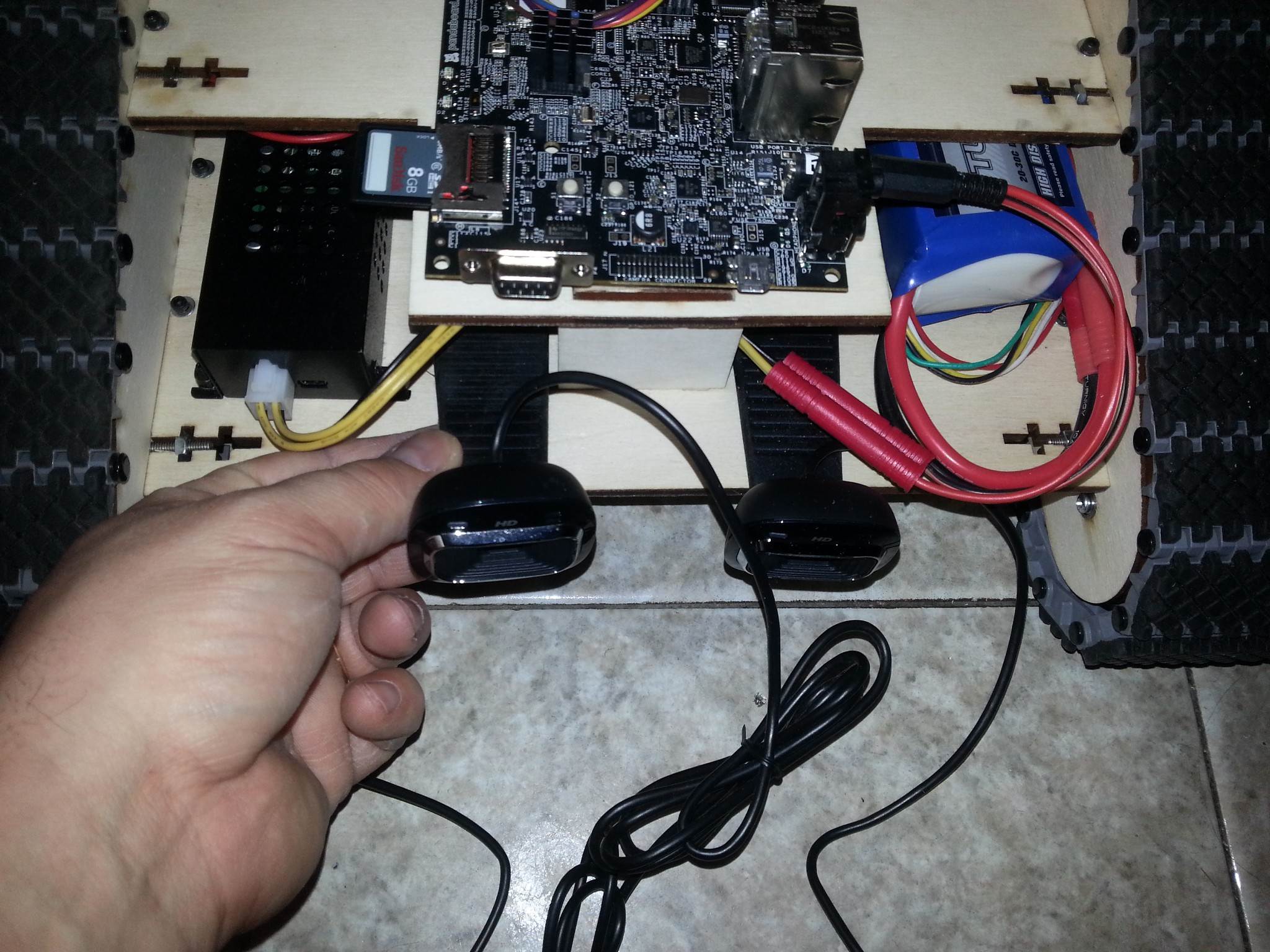

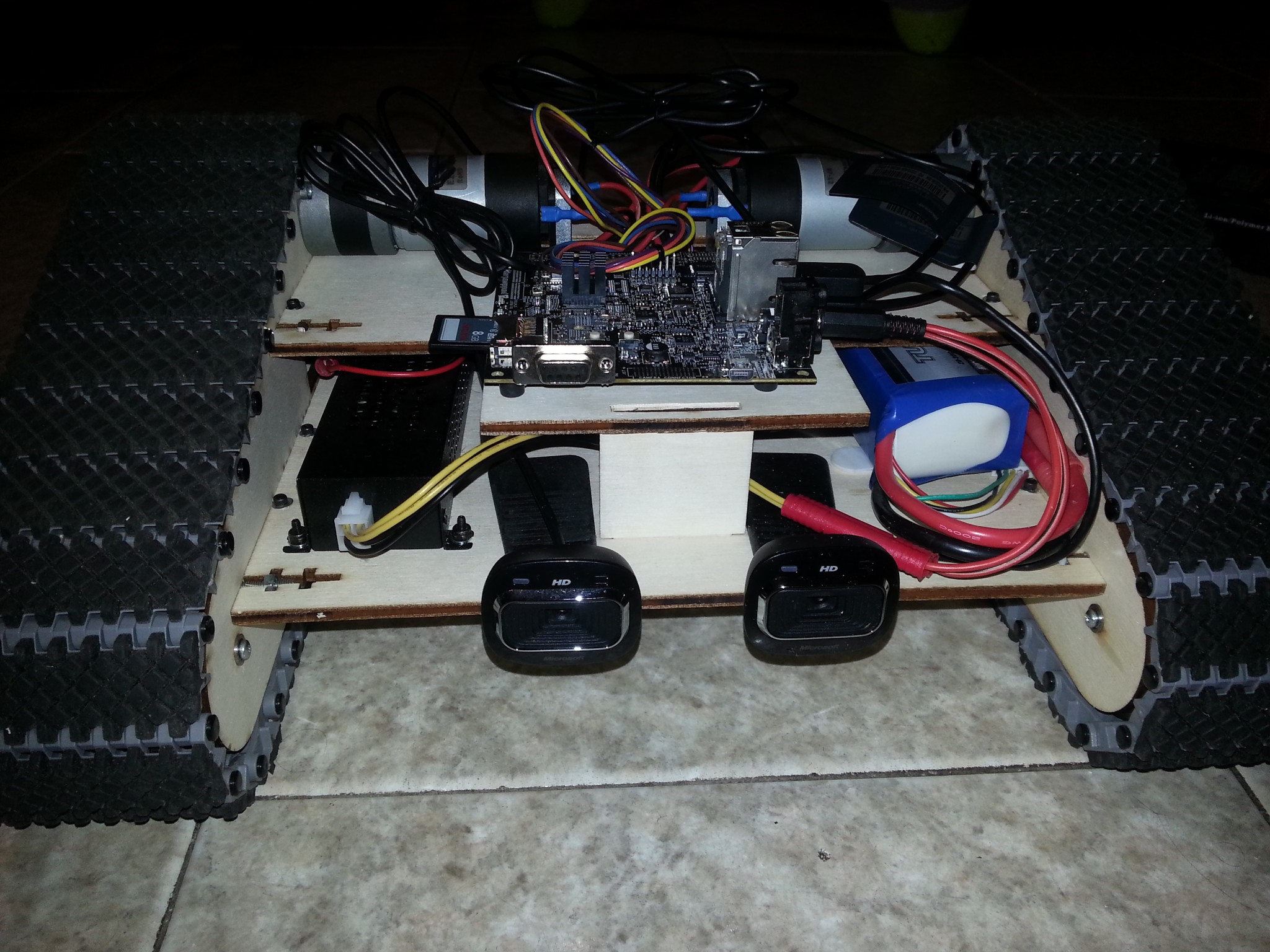

My initial plan was to implement stereo vision using two standard webcams. I was overly optimistic about getting reliable depth perception this way, naively assuming that everything could be solved with enough coding effort.
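To give a sense of why webcam stereo is harder than it looks, here is a minimal sketch of the textbook pinhole relation between disparity and depth, `Z = f * B / d`. It is not code from MyzharBot; the function names and the focal length and baseline values are hypothetical, chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


def depth_error_per_pixel(depth_m, focal_px, baseline_m):
    """First-order depth change caused by a one-pixel disparity error.

    Differentiating Z = f * B / d gives |dZ/dd| = Z**2 / (f * B).
    """
    return depth_m ** 2 / (focal_px * baseline_m)


if __name__ == "__main__":
    FOCAL_PX = 700.0    # hypothetical focal length in pixels
    BASELINE_M = 0.10   # hypothetical distance between the two webcams

    for disparity in (70.0, 35.0, 14.0):
        z = depth_from_disparity(disparity, FOCAL_PX, BASELINE_M)
        err = depth_error_per_pixel(z, FOCAL_PX, BASELINE_M)
        print(f"disparity {disparity:5.1f} px: depth {z:.2f} m, "
              f"about {err * 100:.1f} cm of error per pixel of mismatch")
```

The error term grows with the square of the distance, so small matching errors matter more and more as objects get farther away. With unsynchronized, uncalibrated webcams those matching errors are easy to come by.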

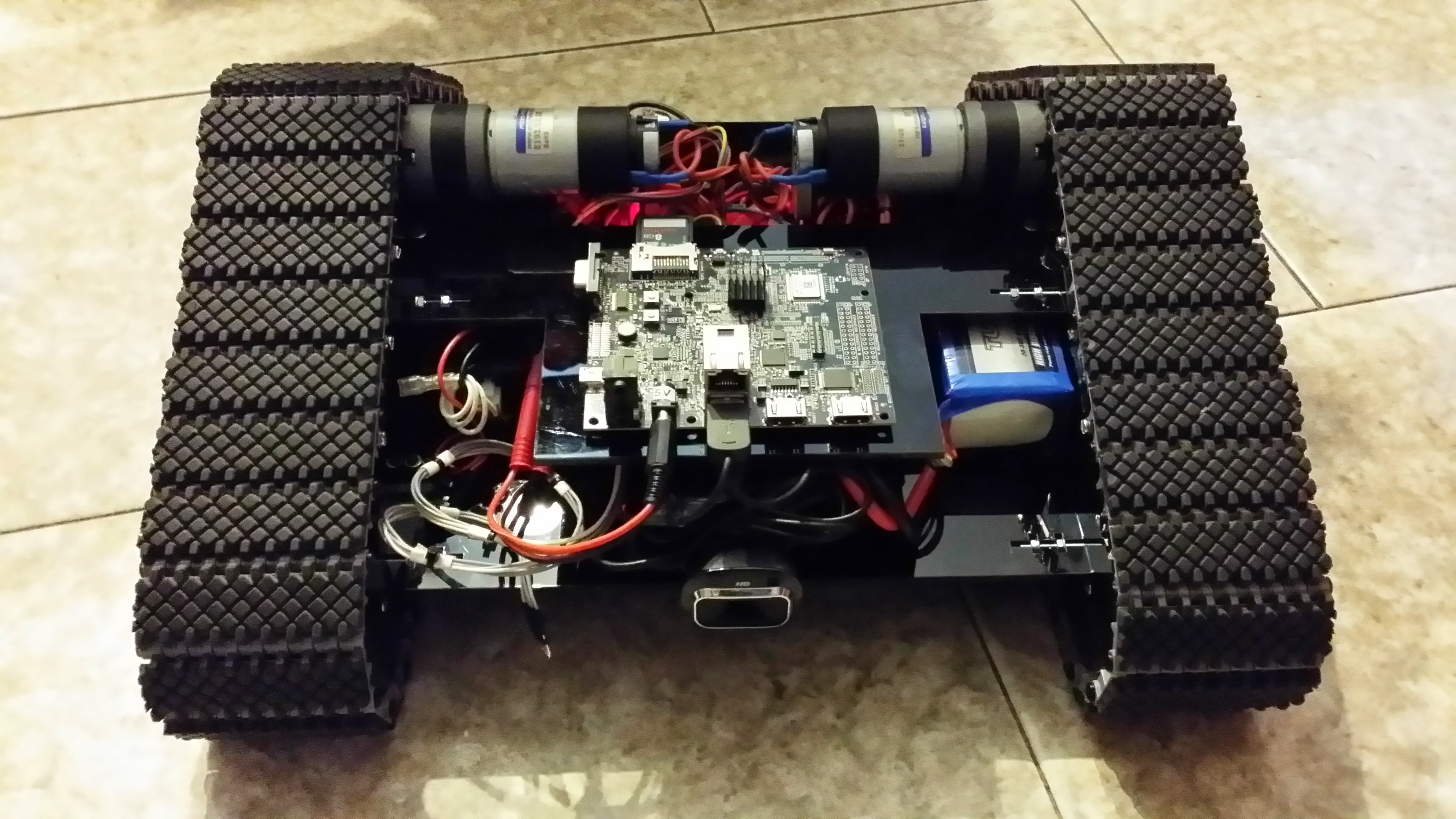

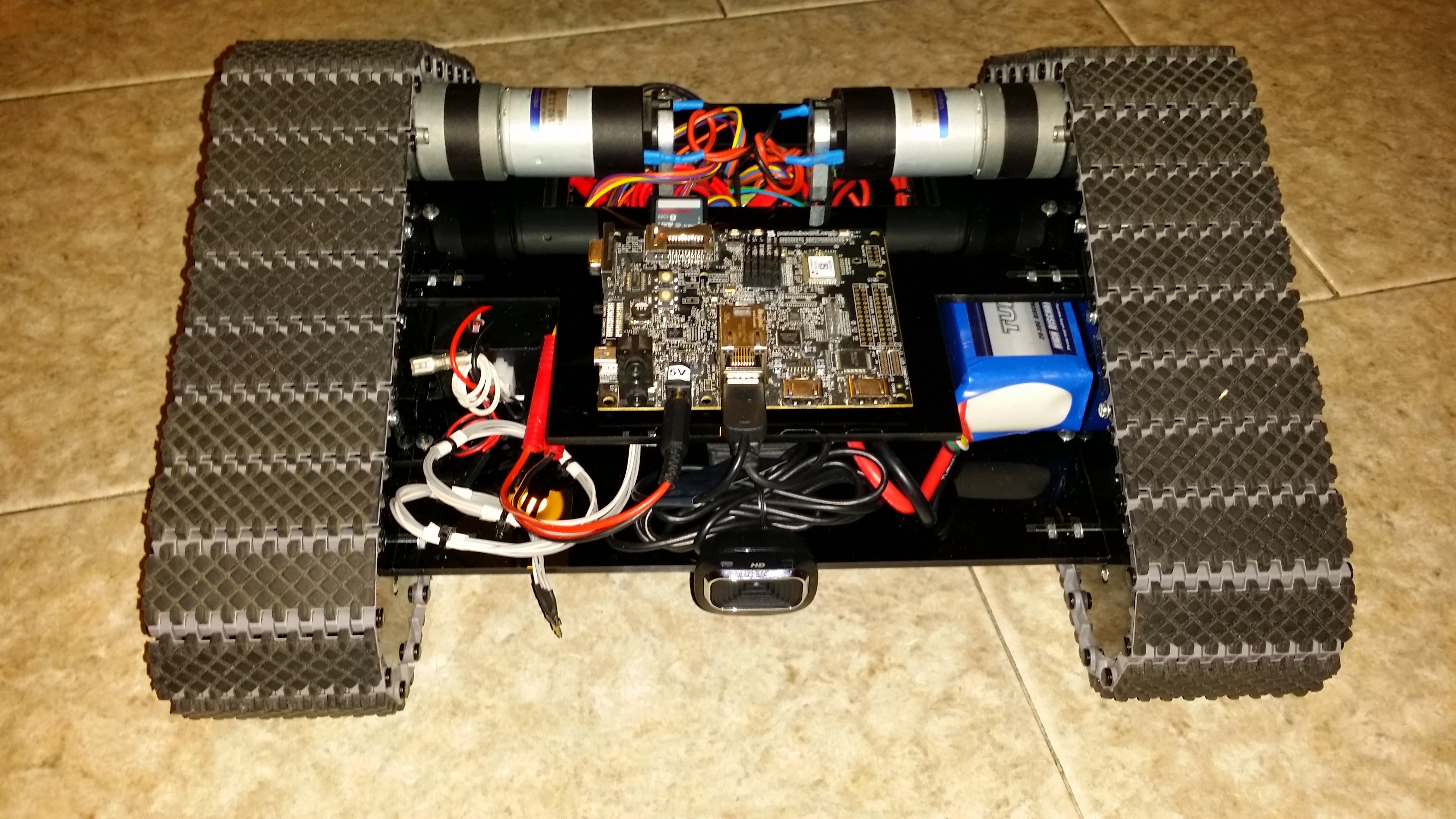

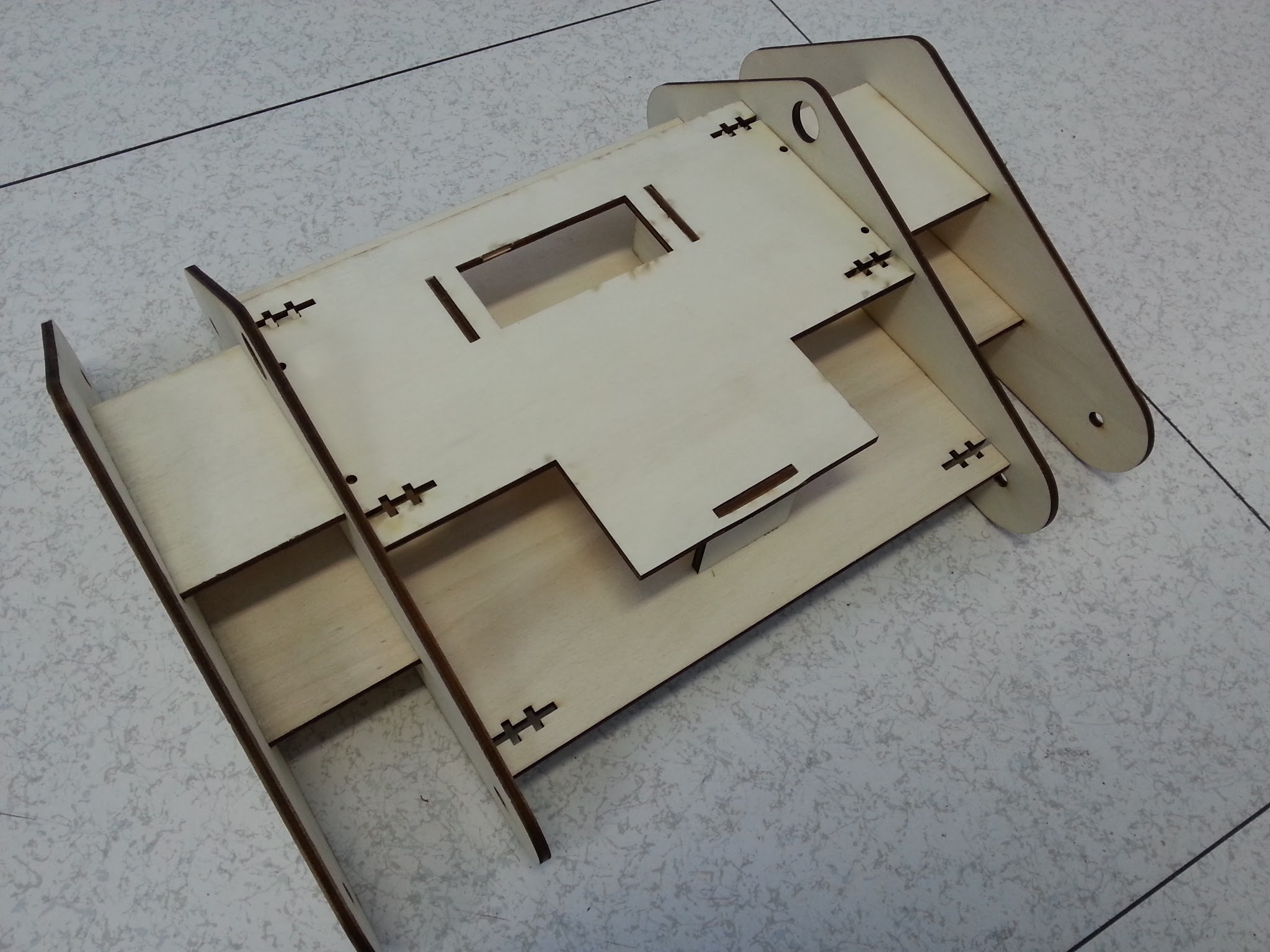

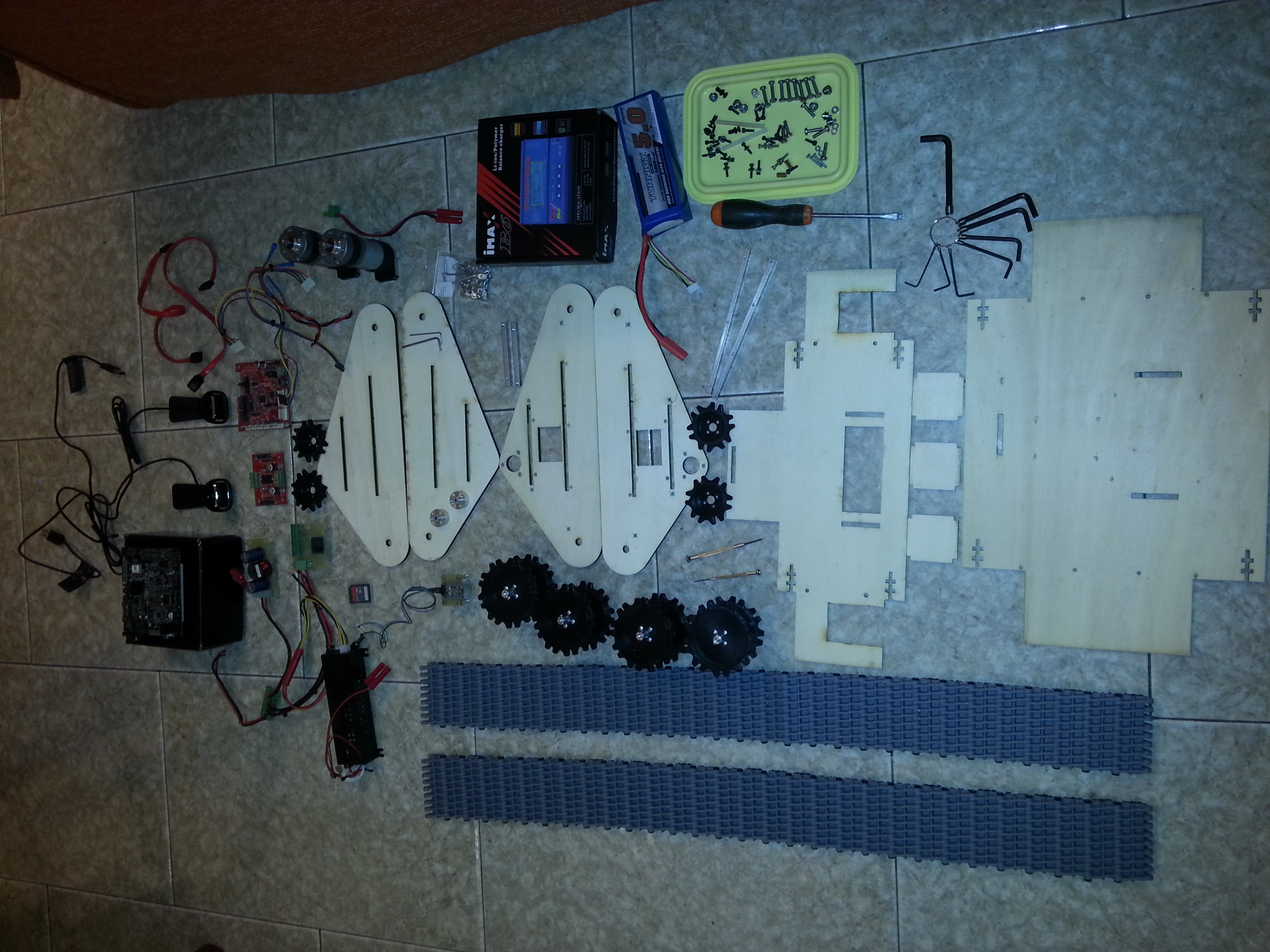

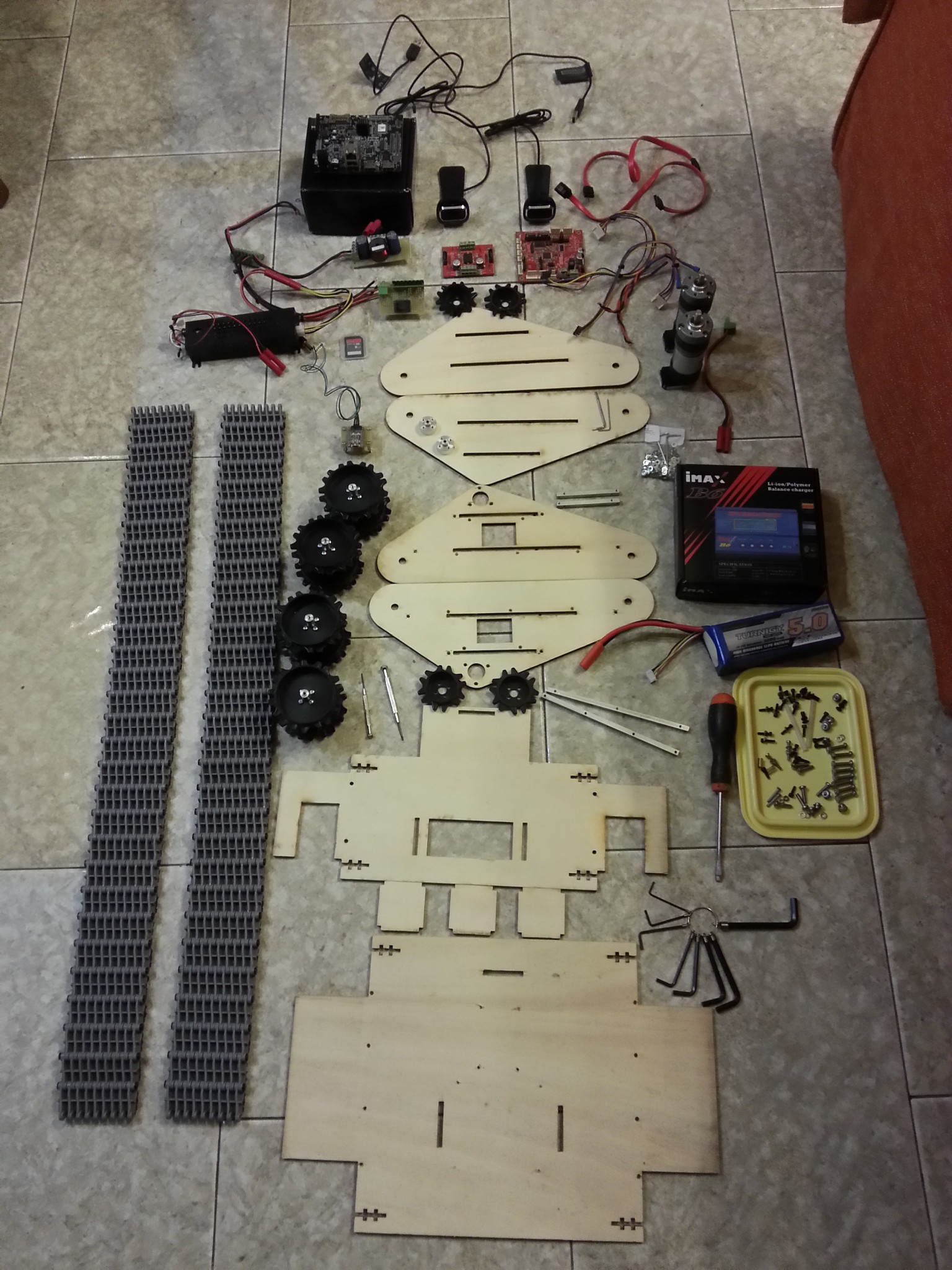

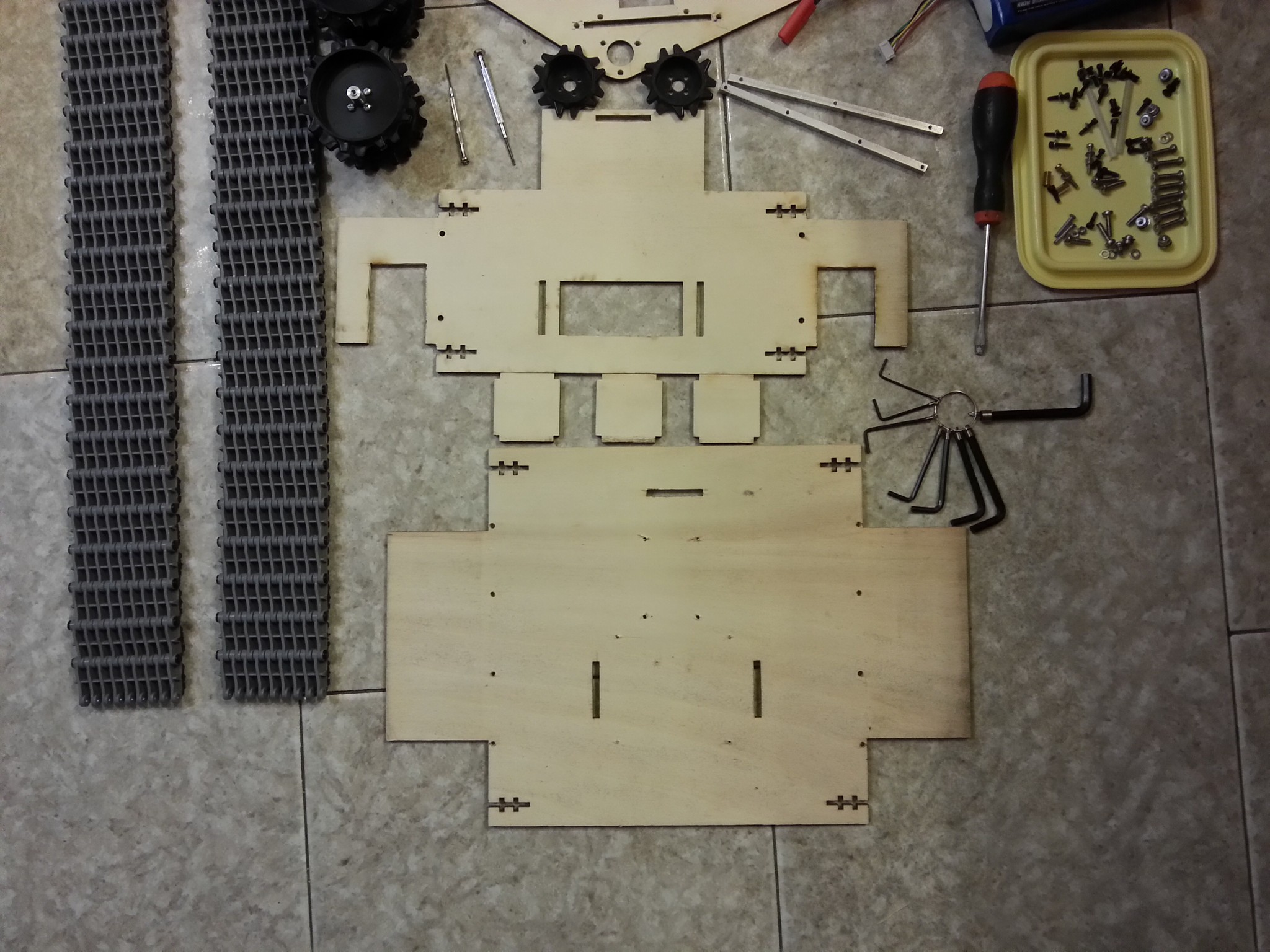

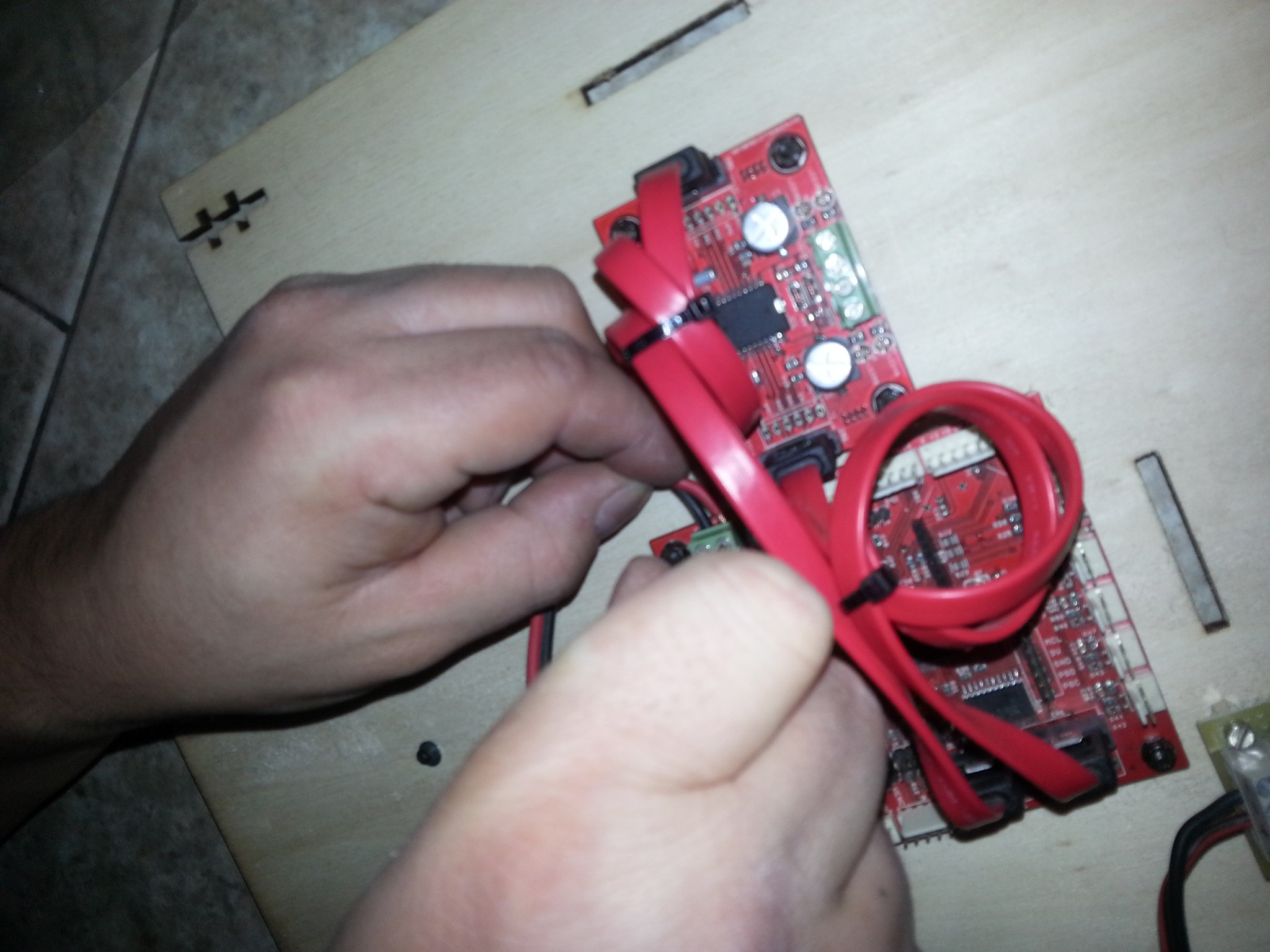

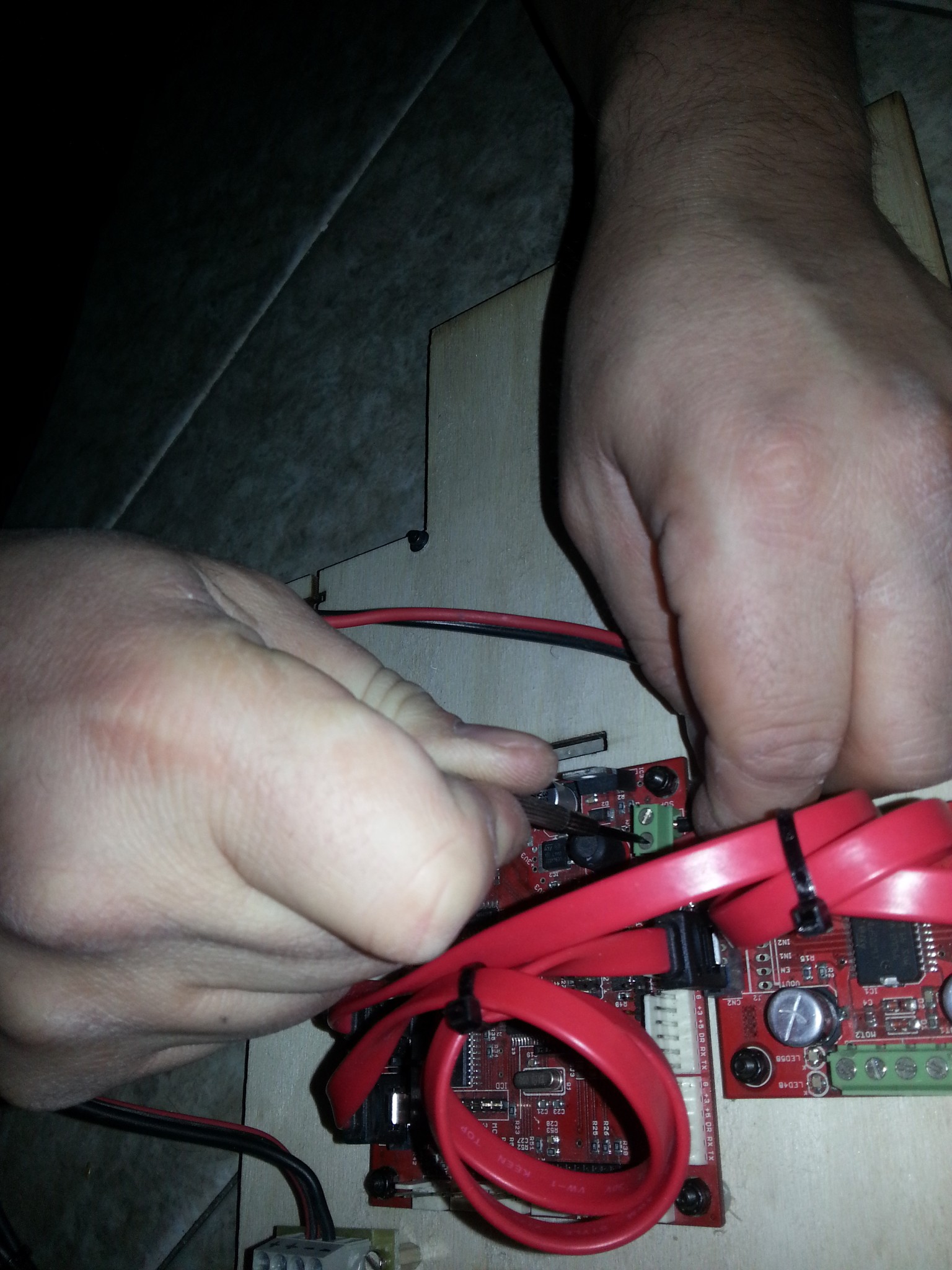

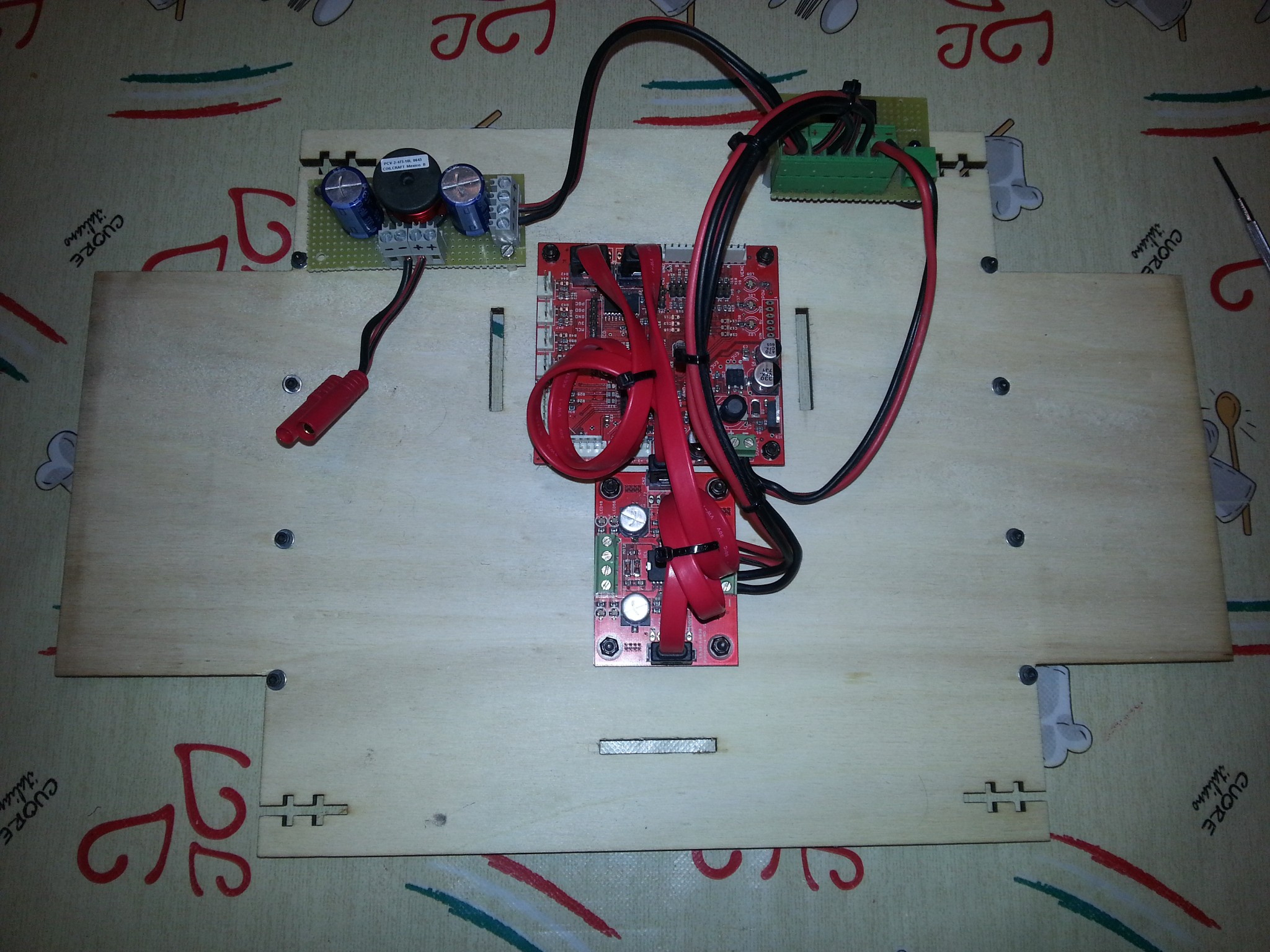

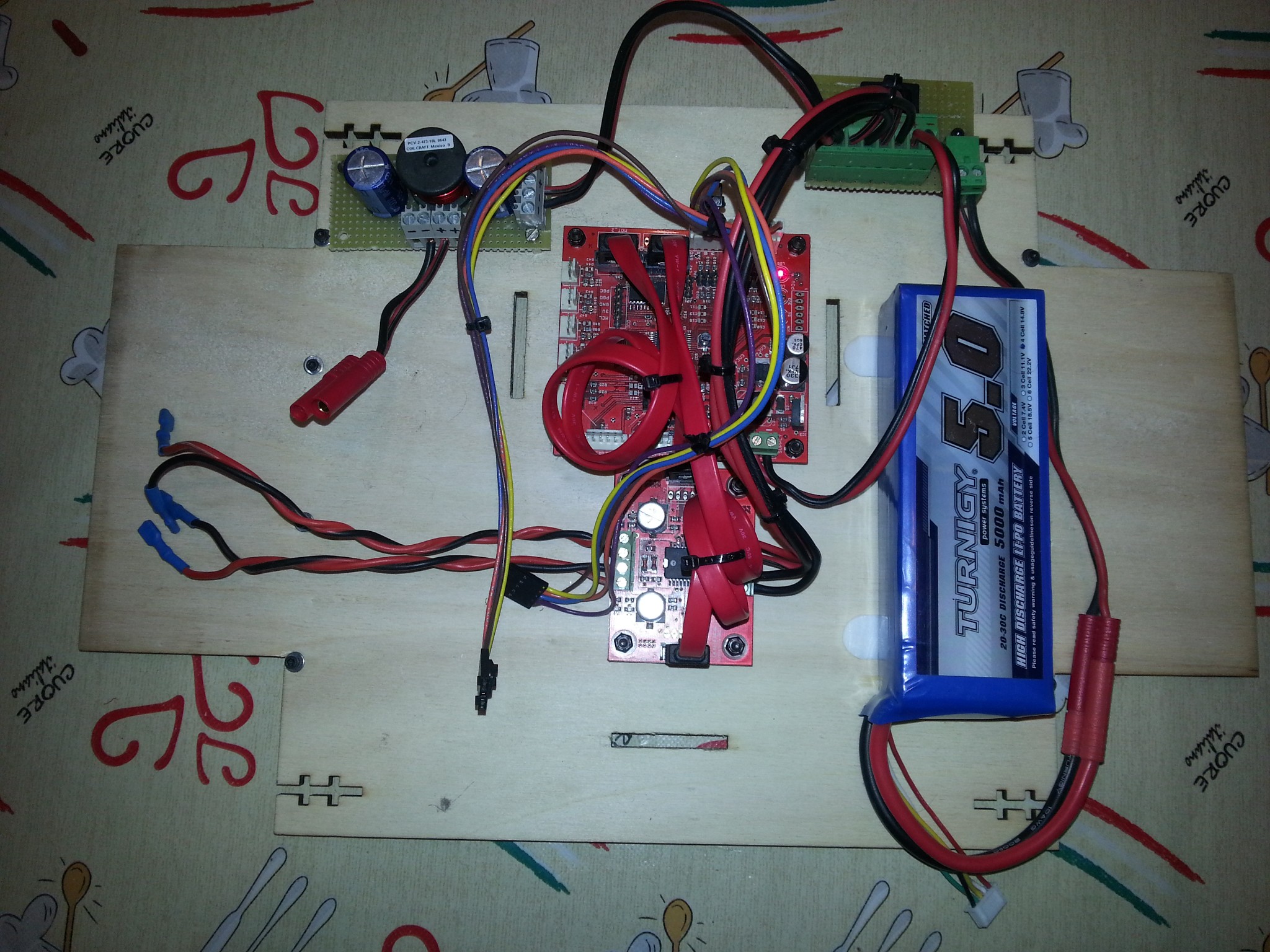

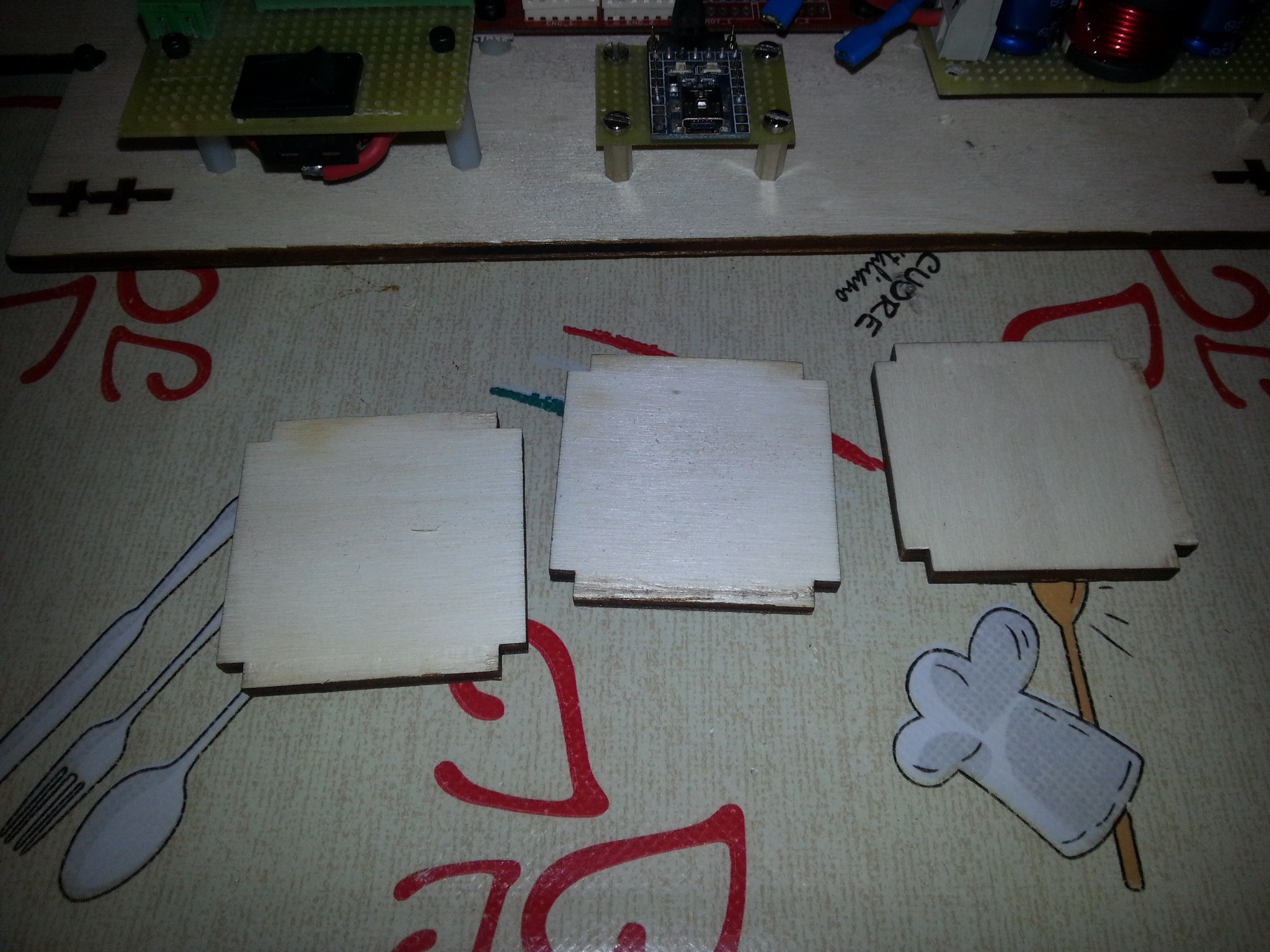

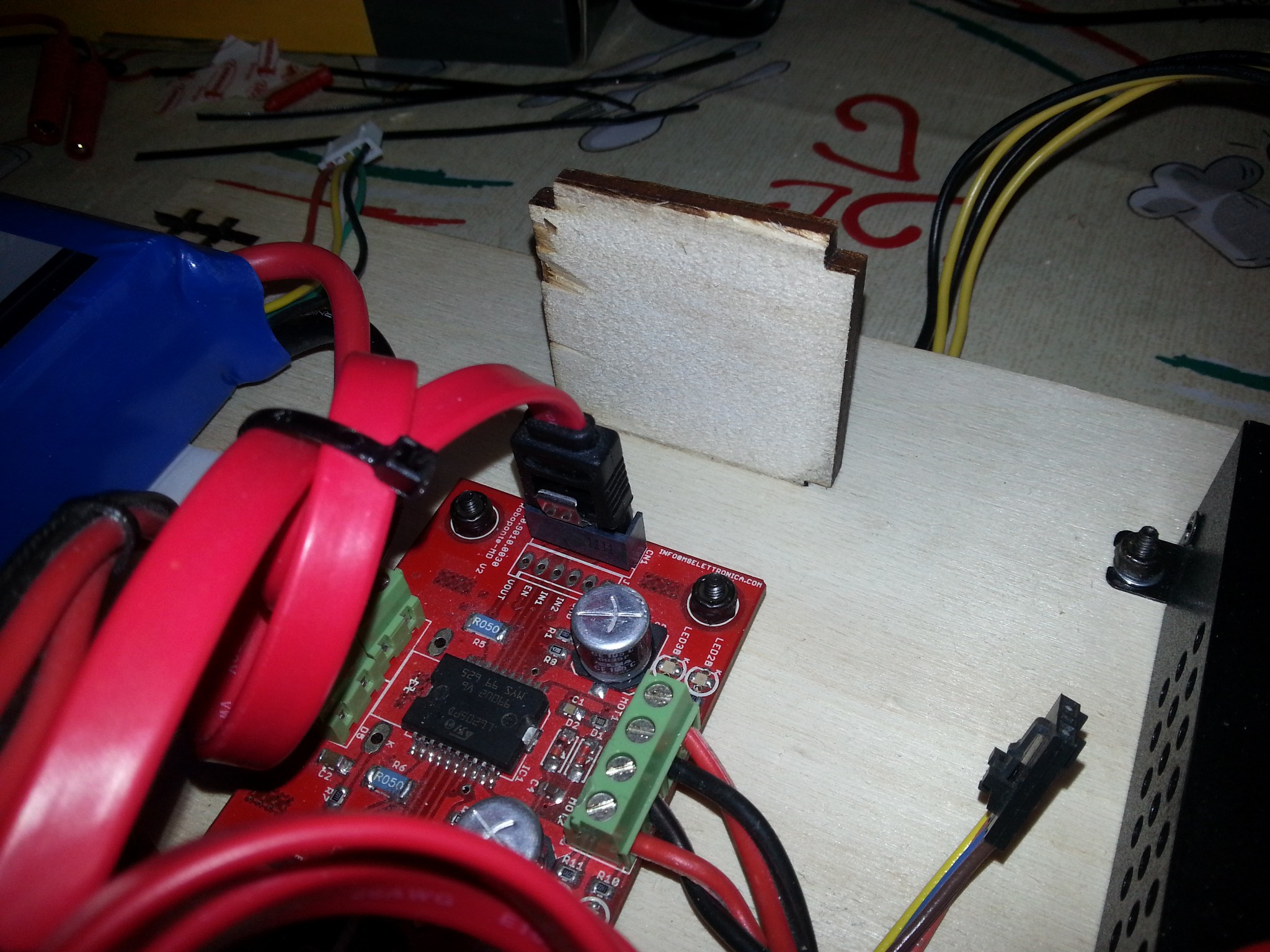

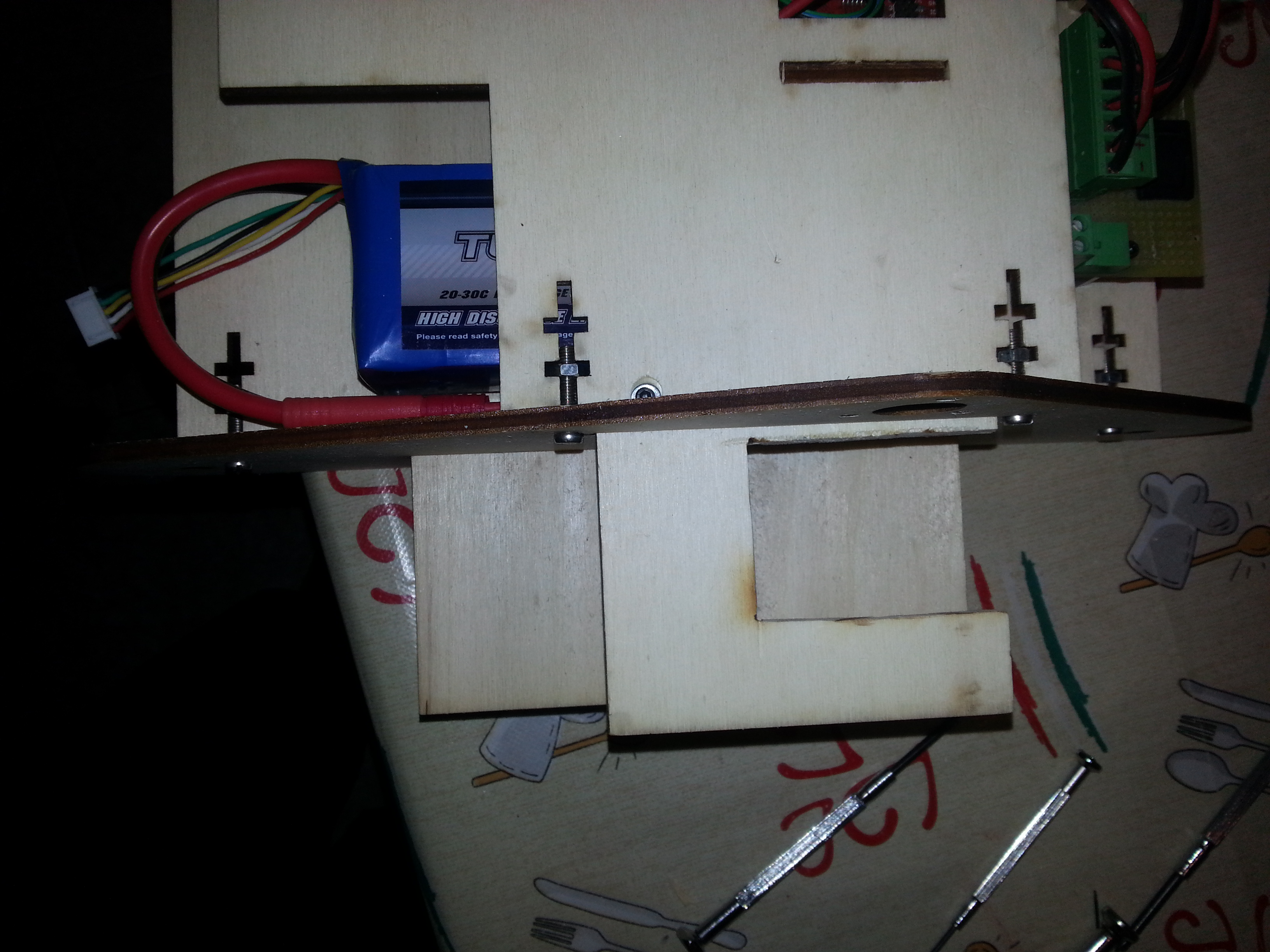

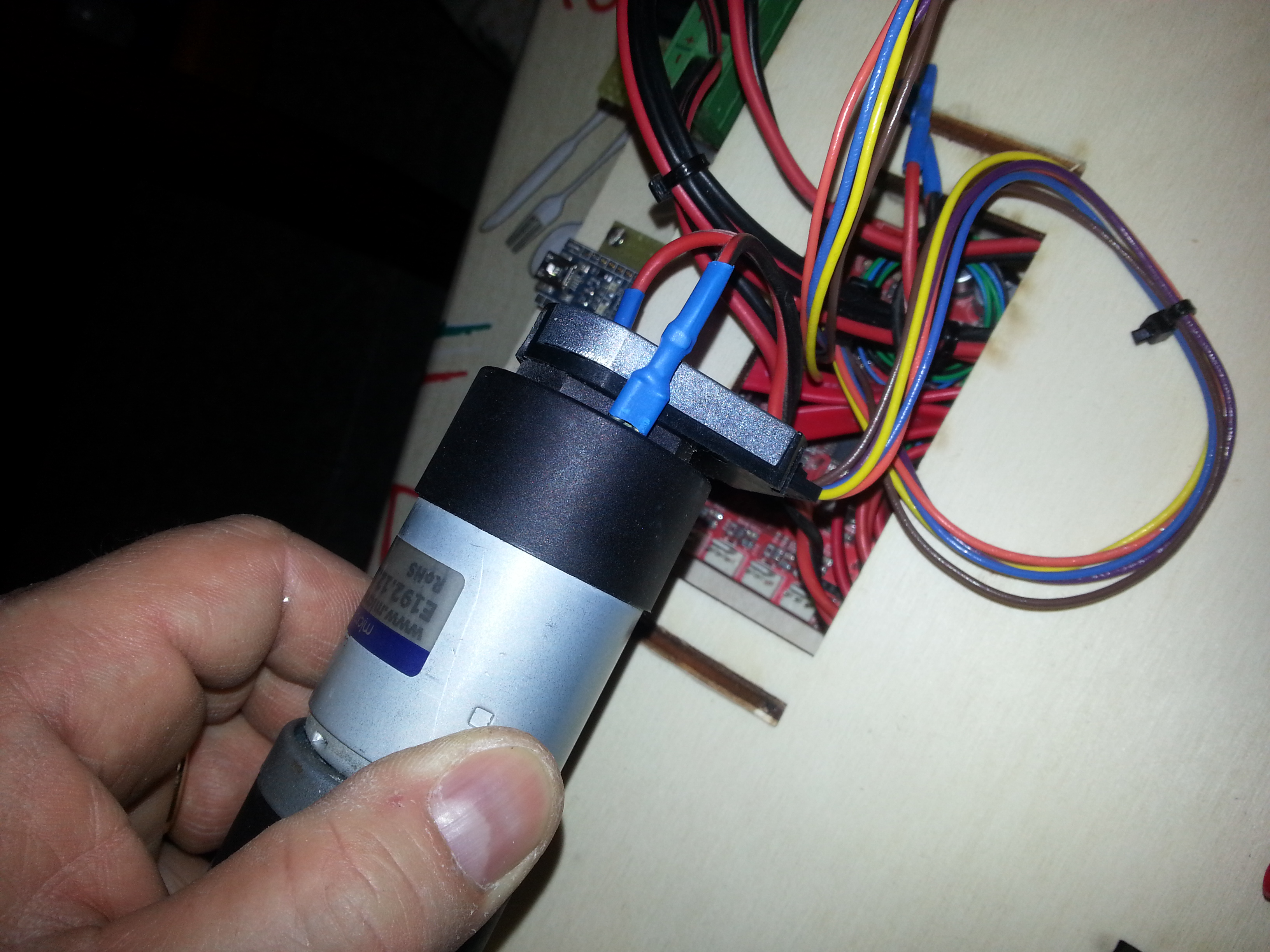

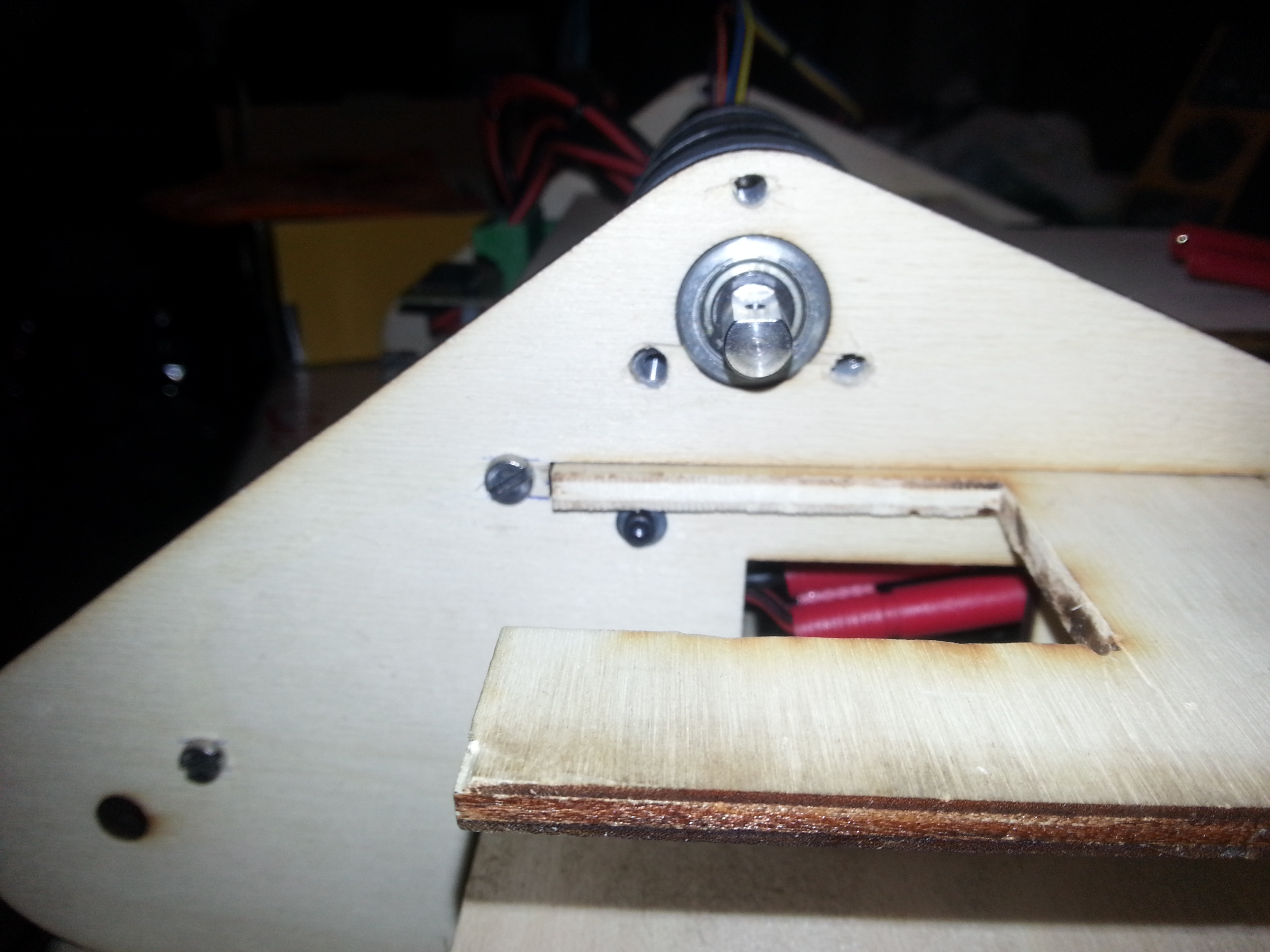

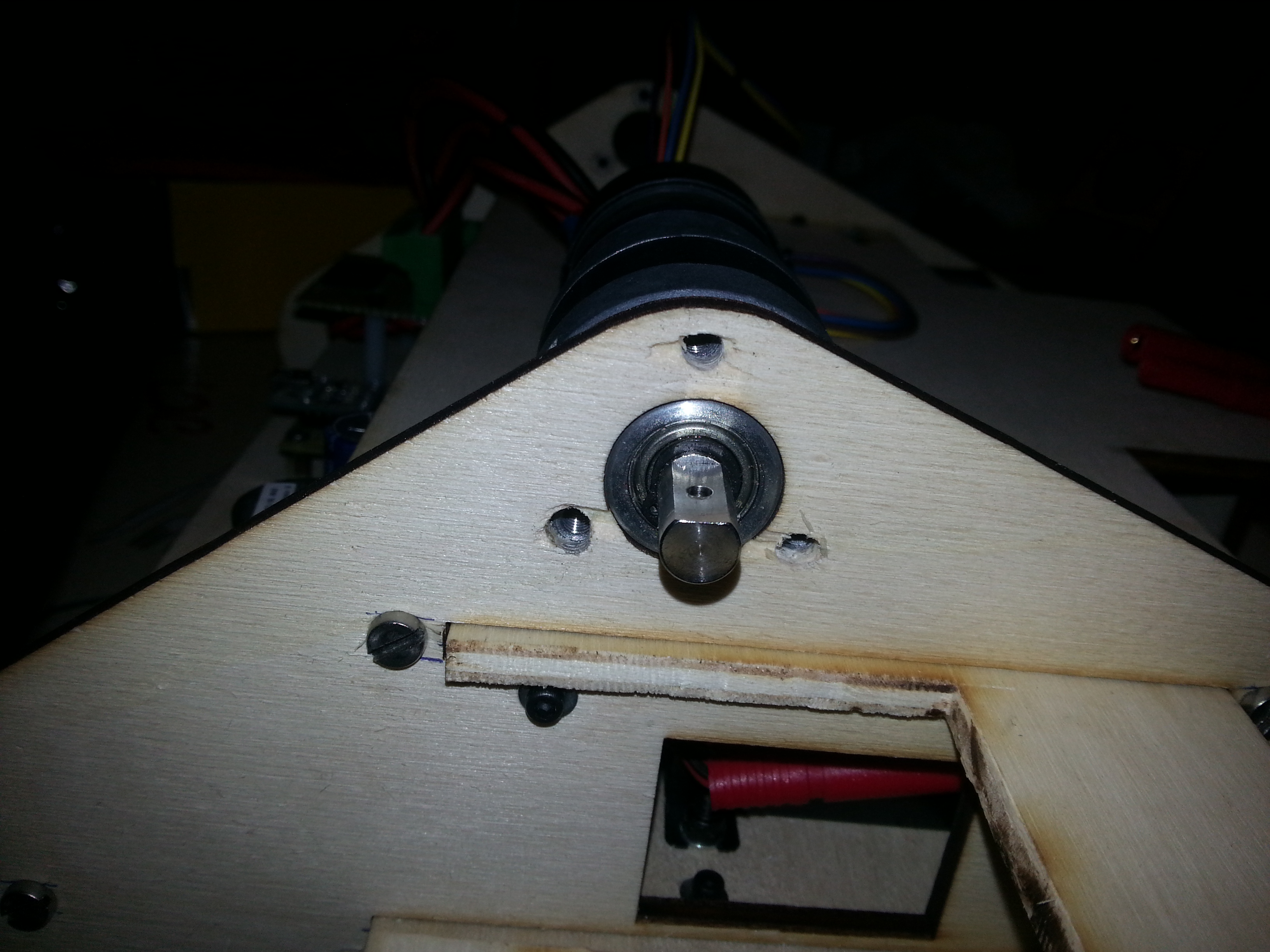

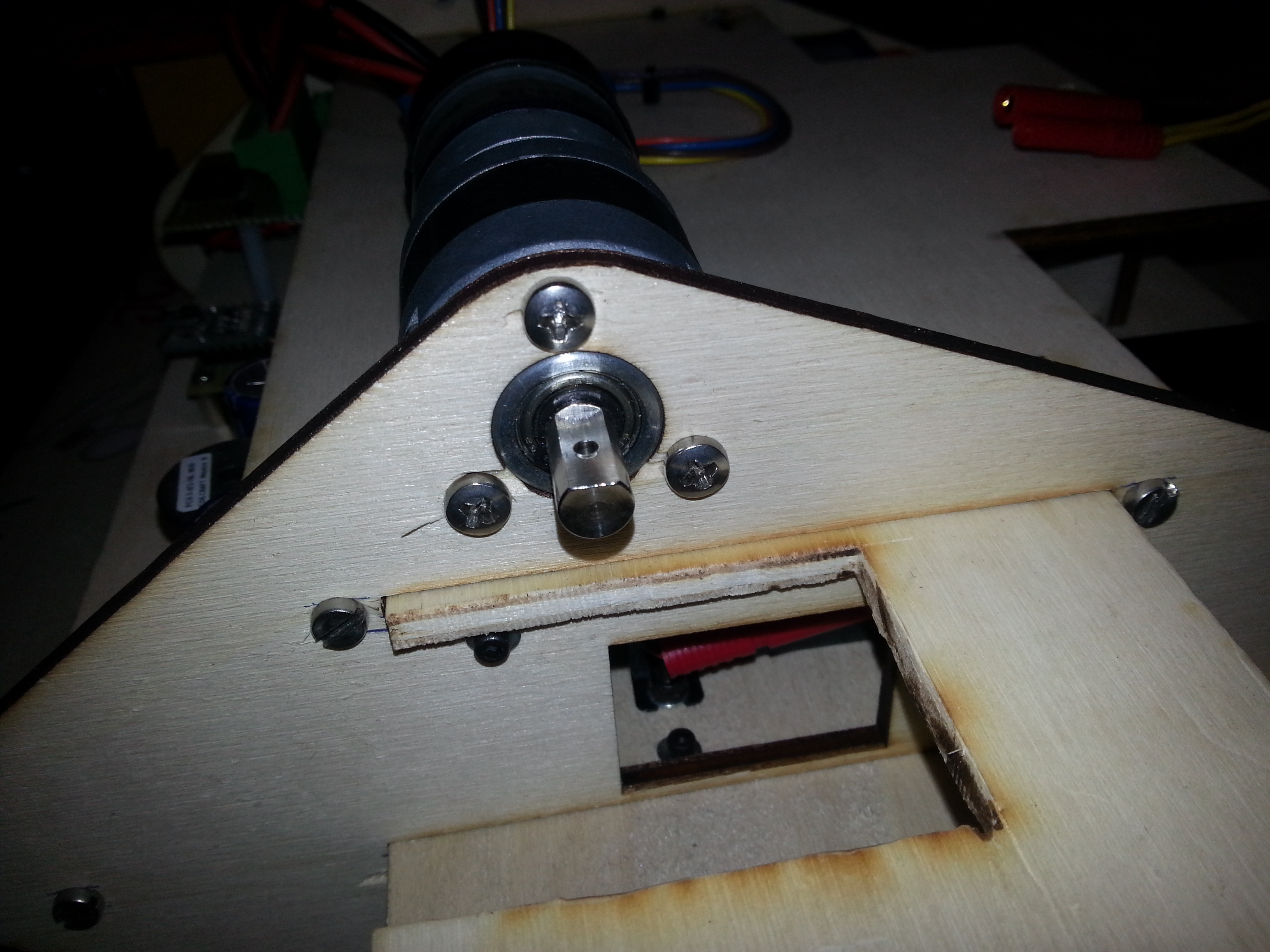

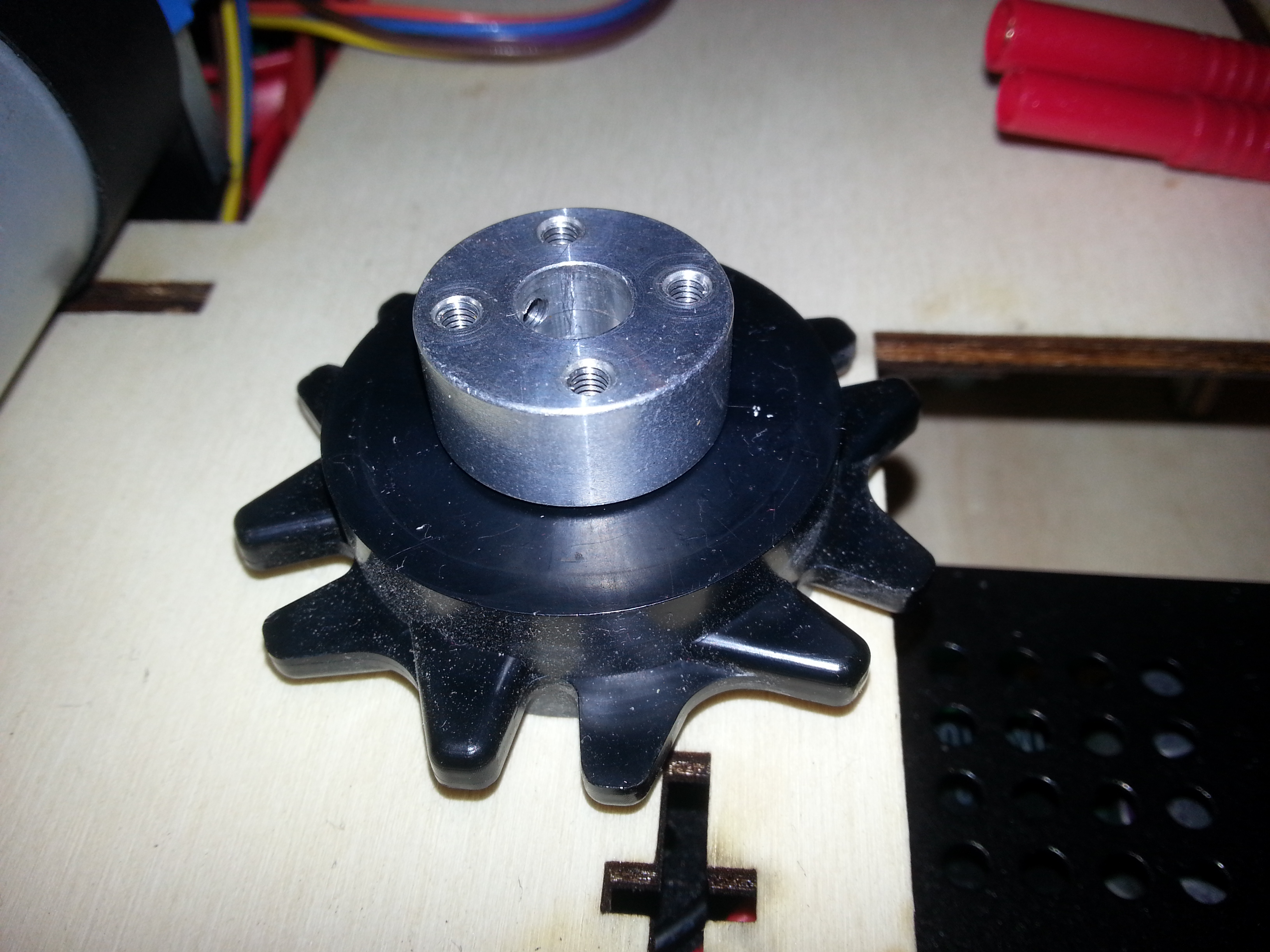

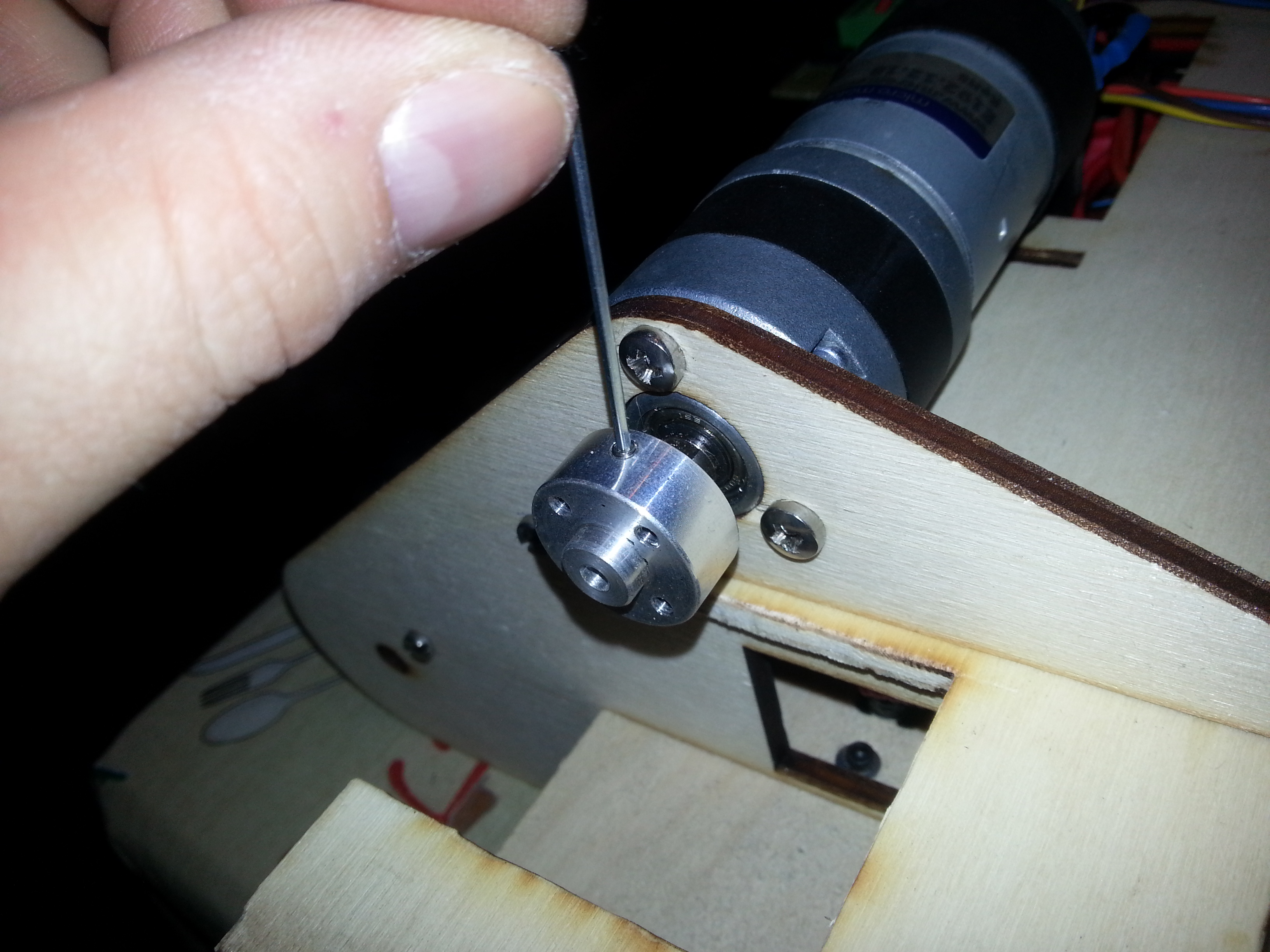

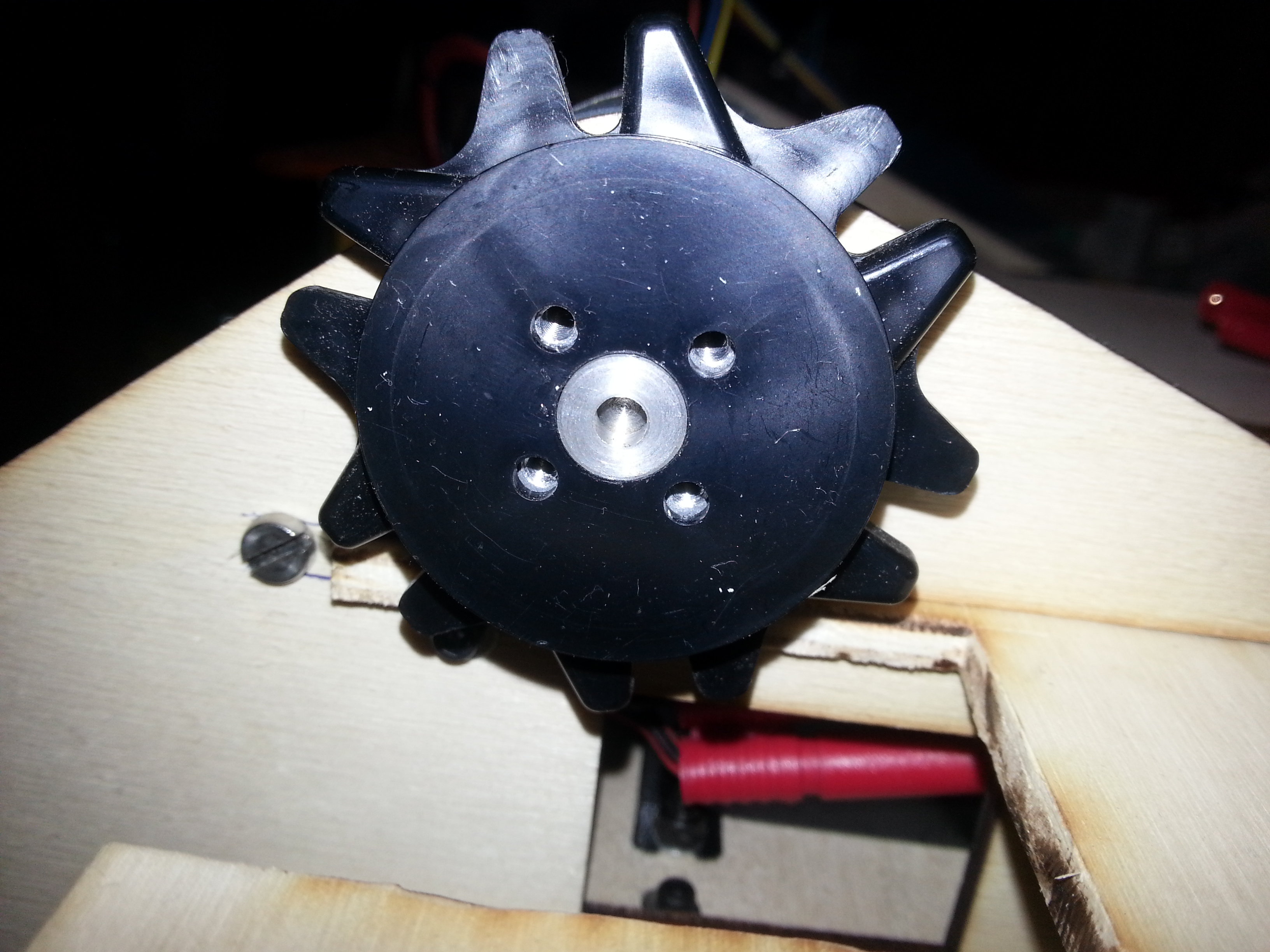

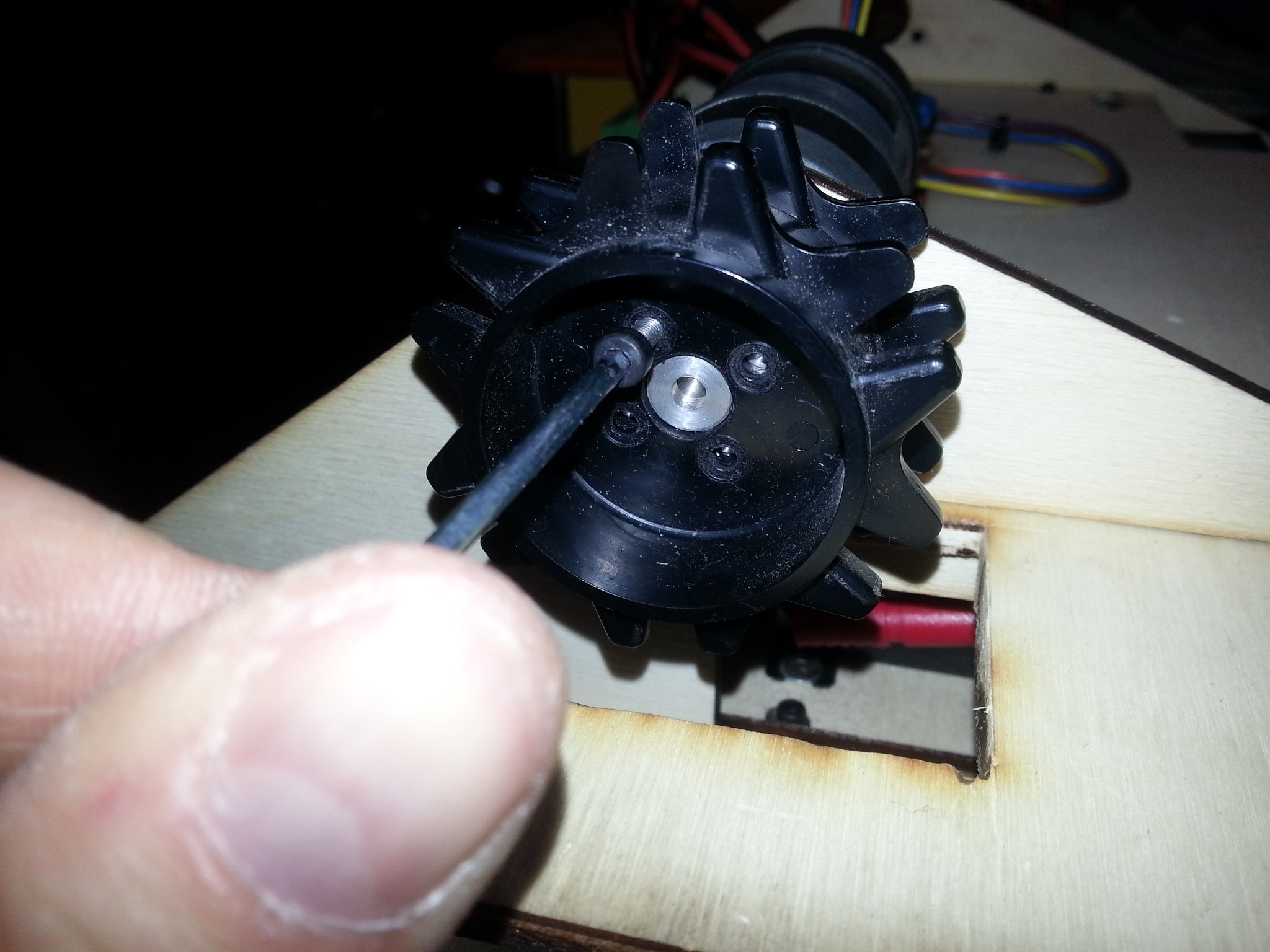

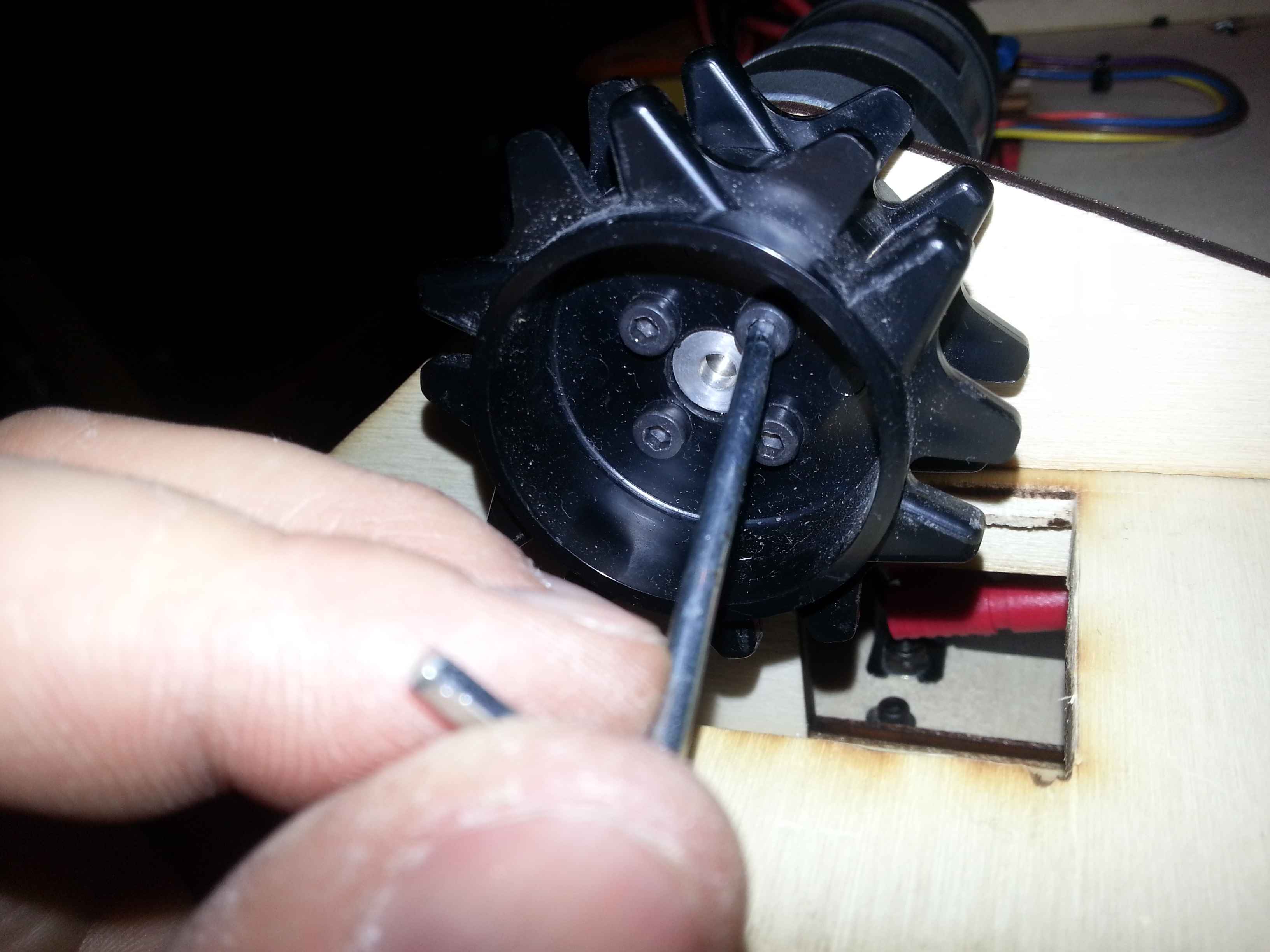

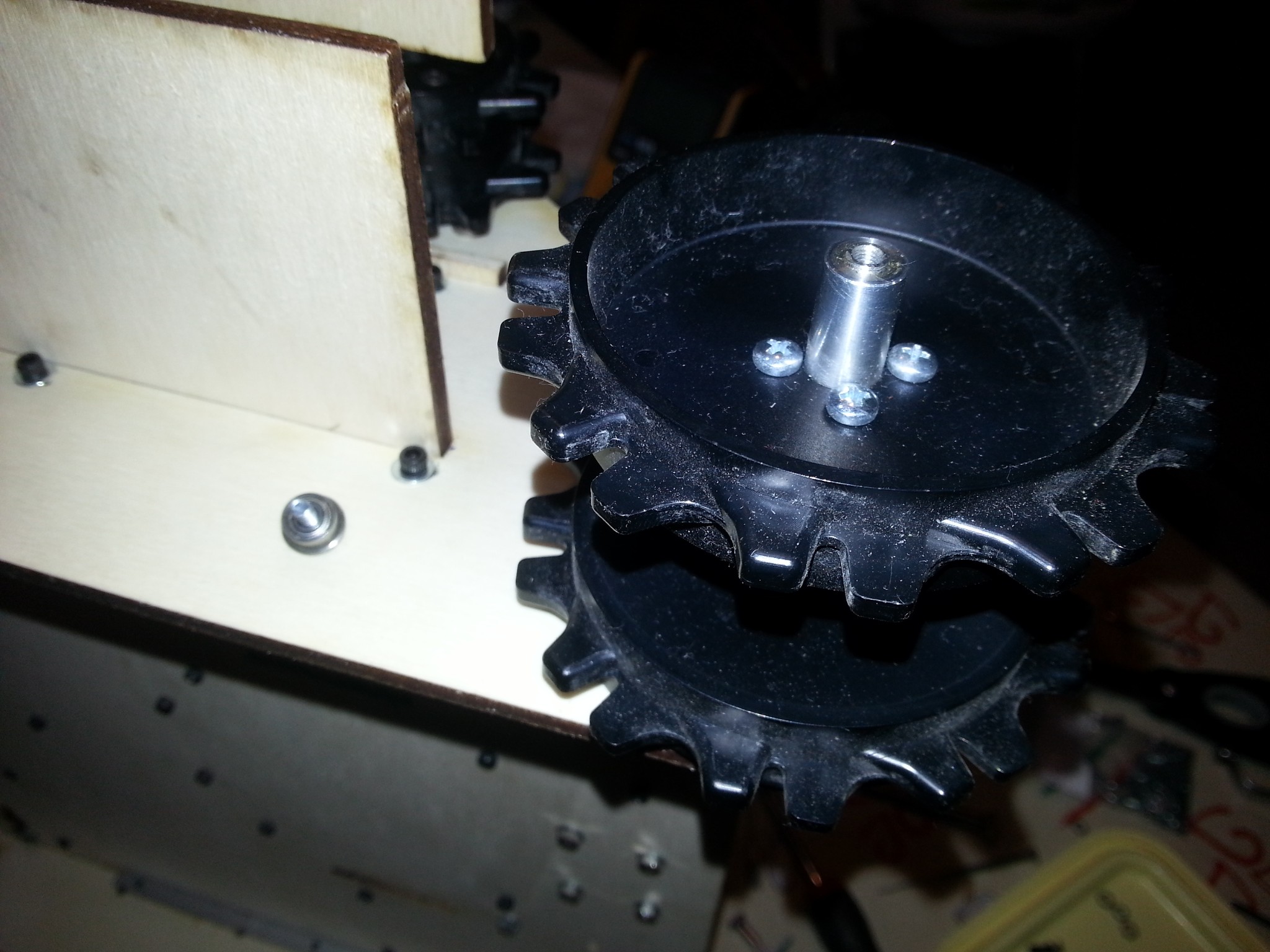

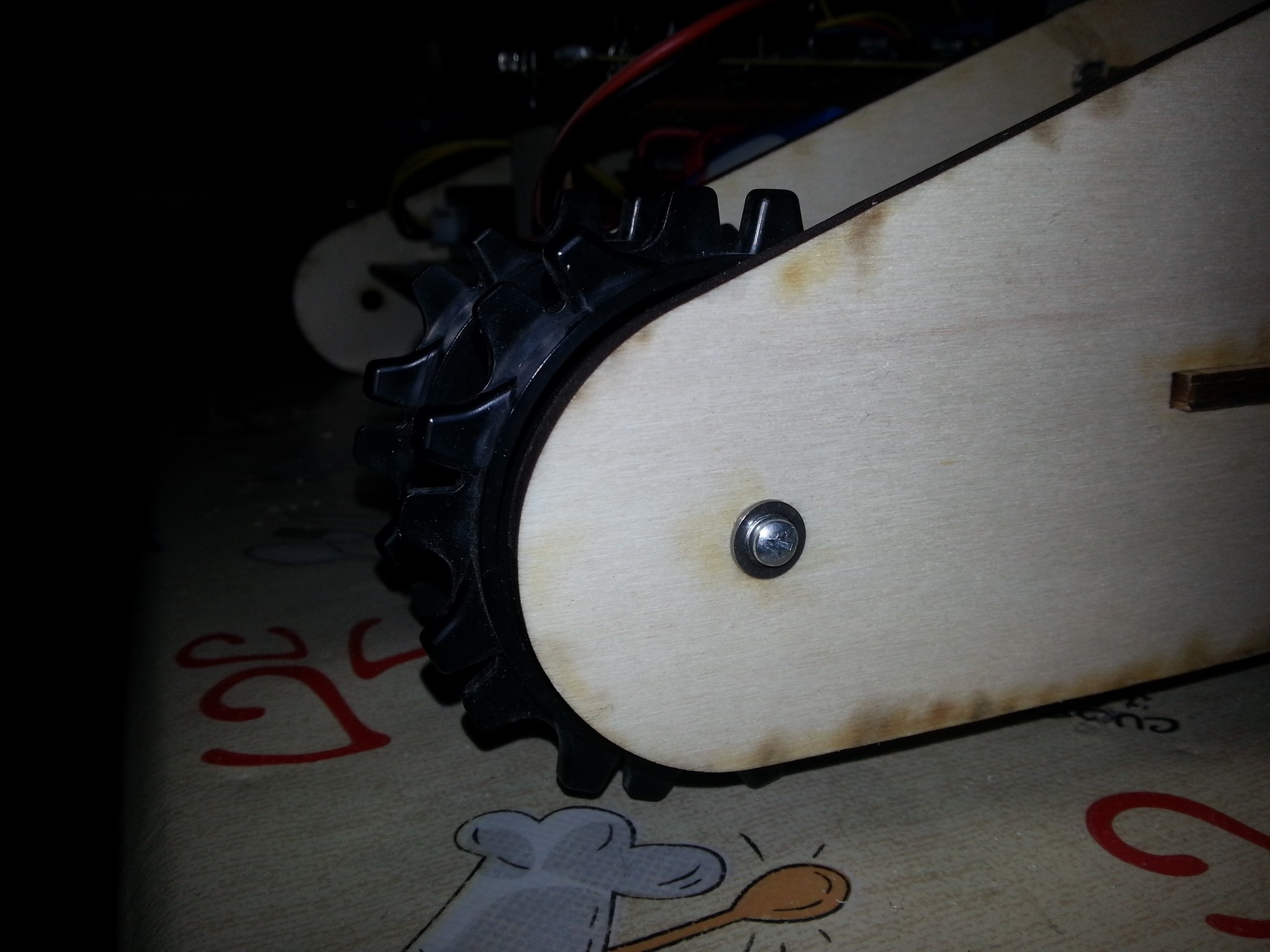

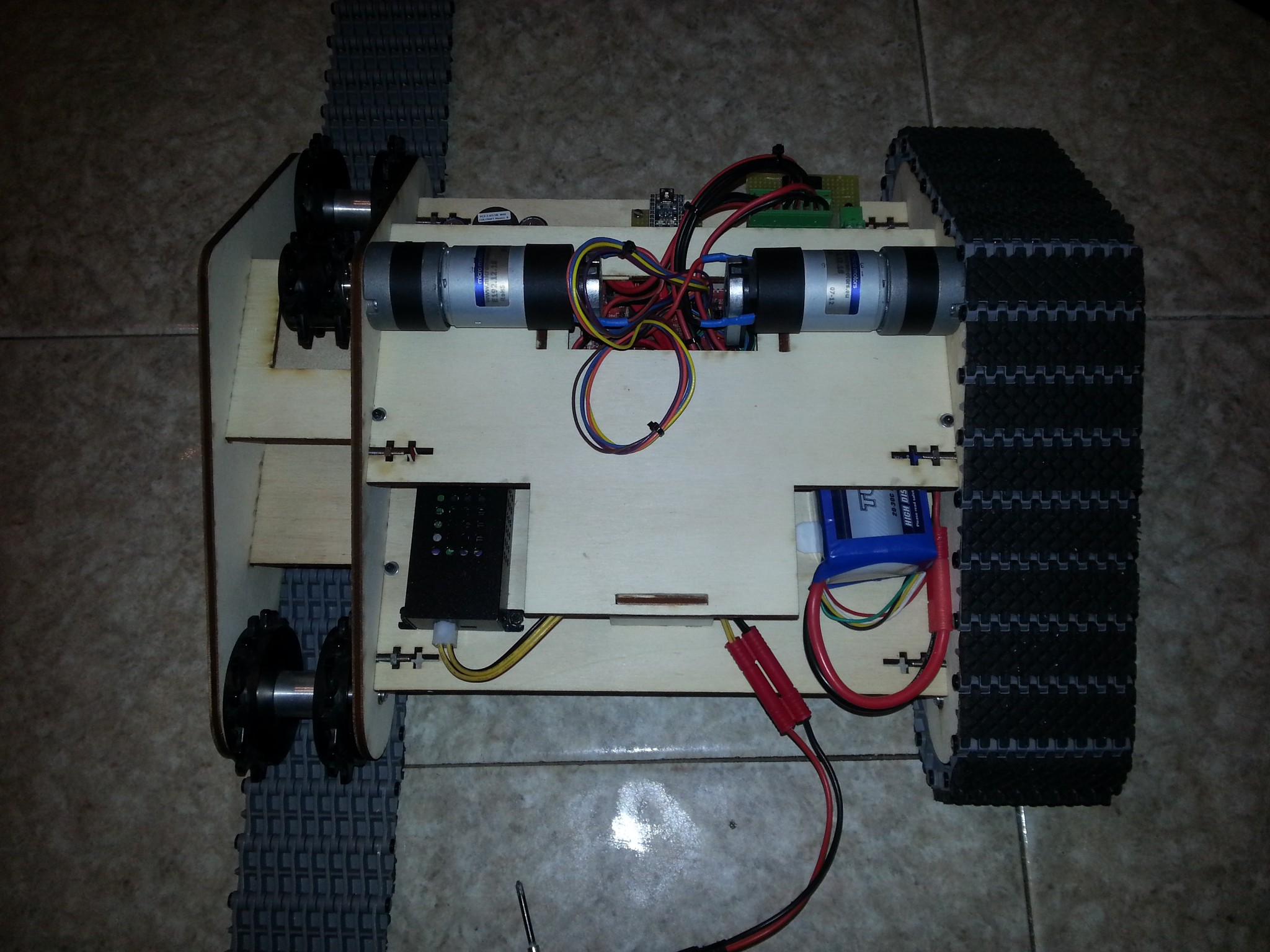

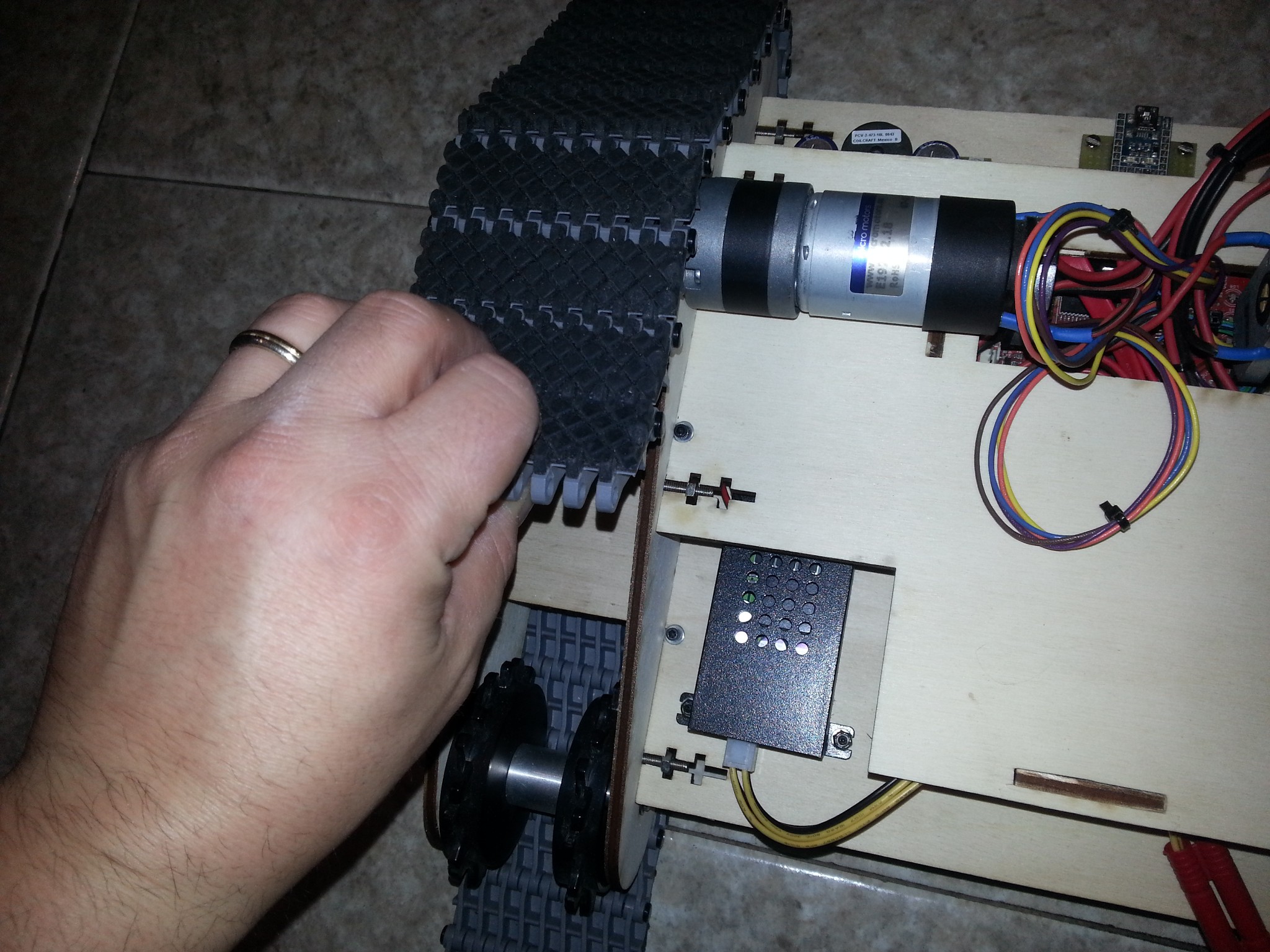

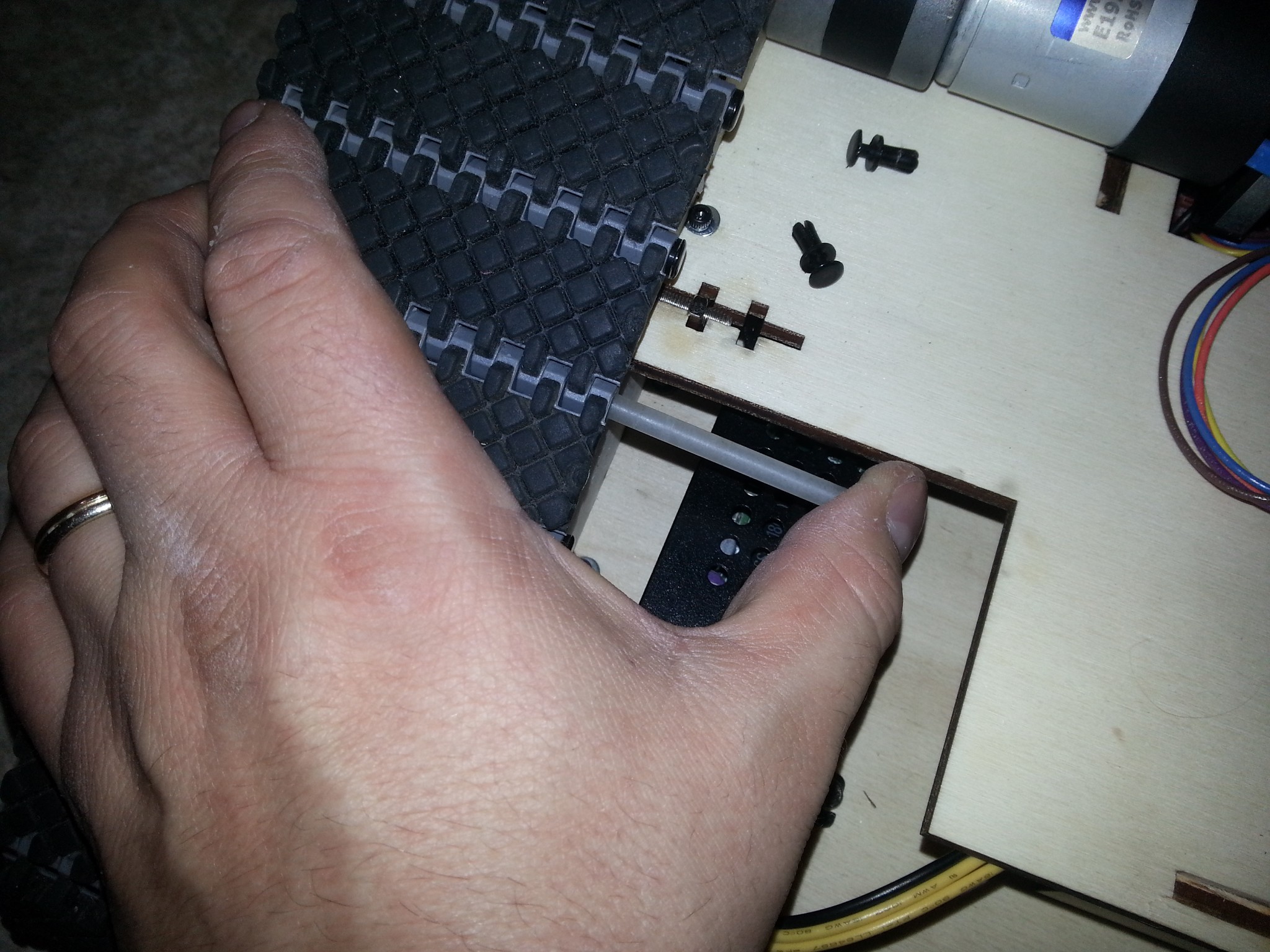

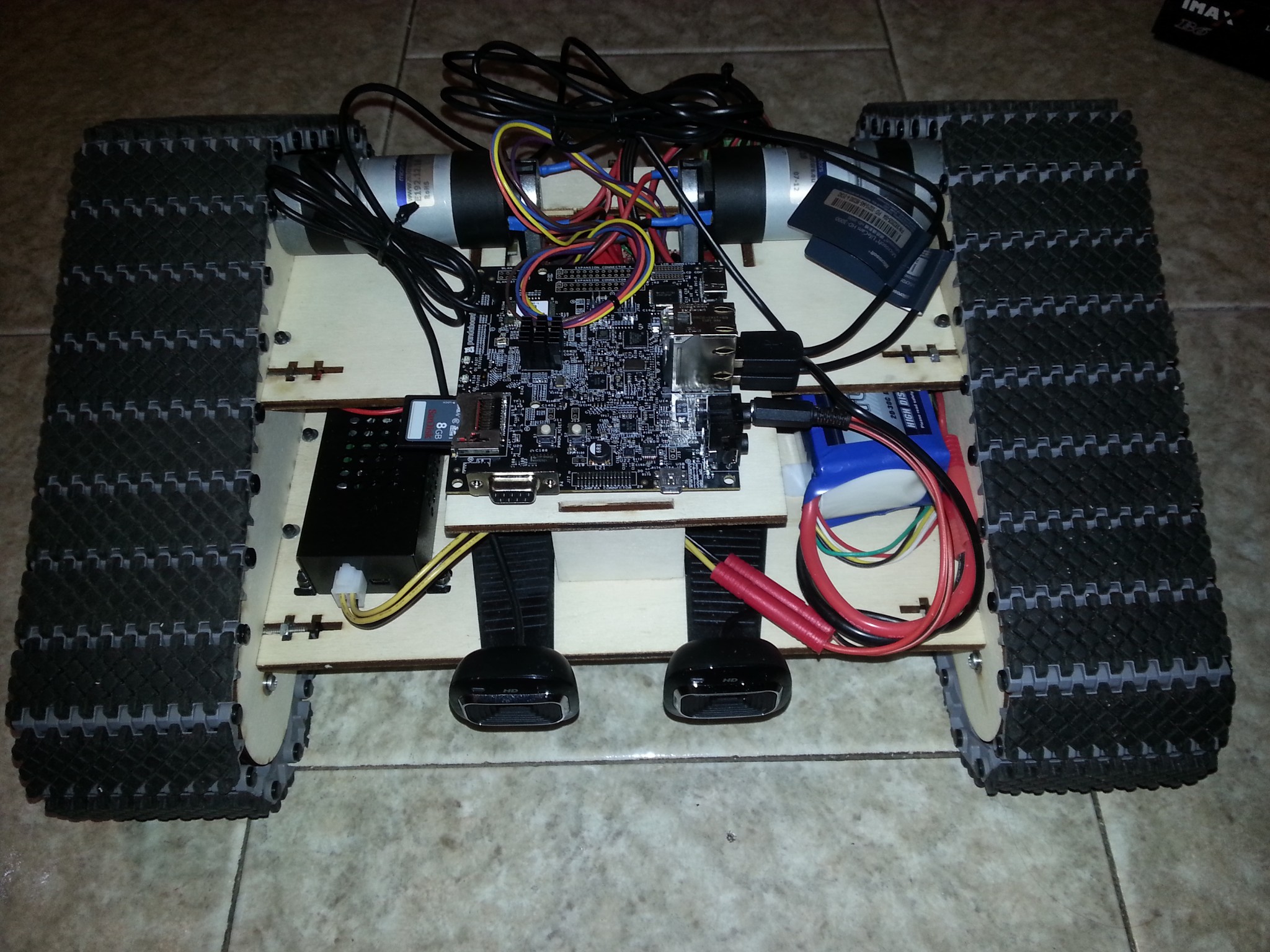

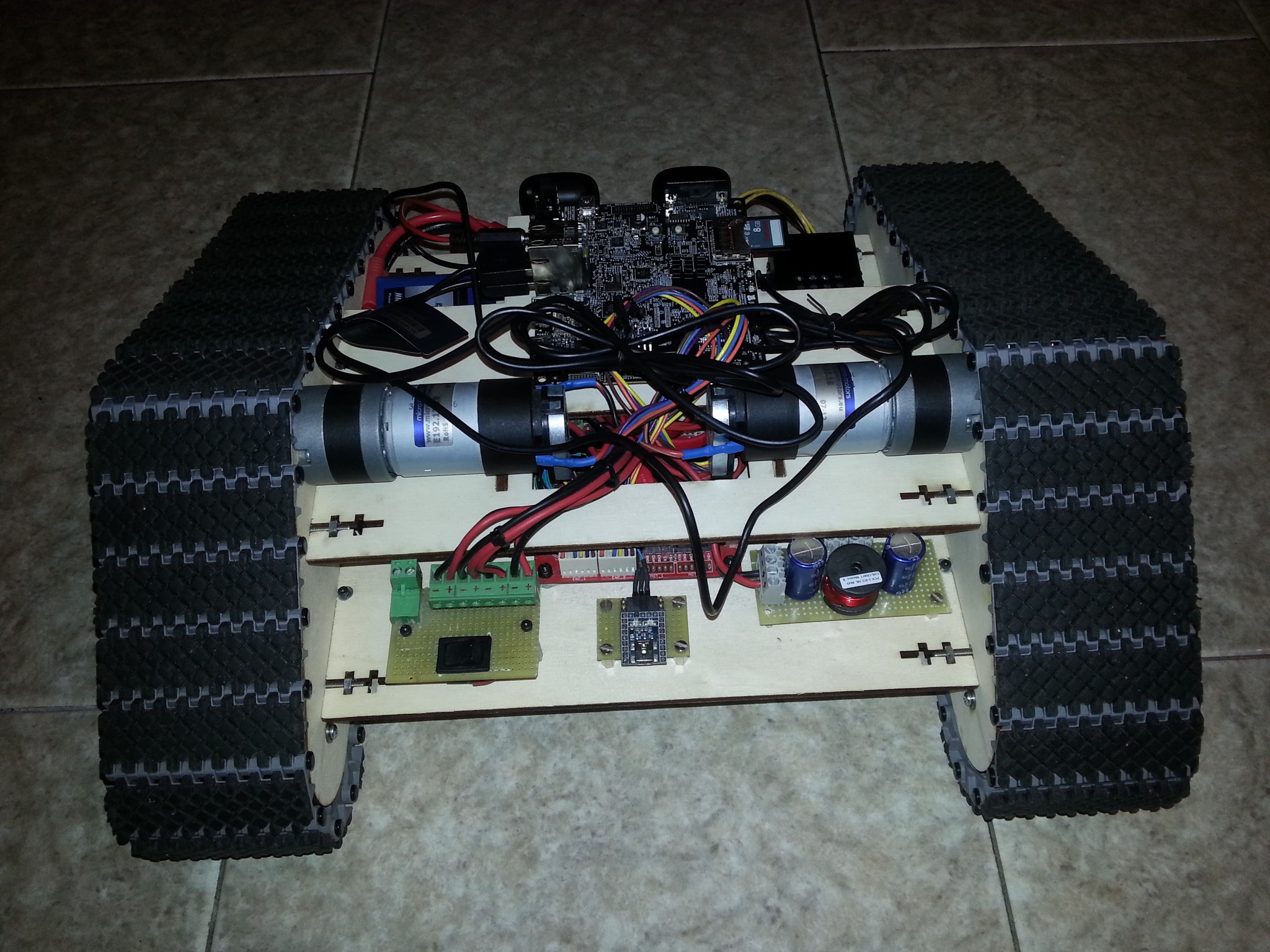

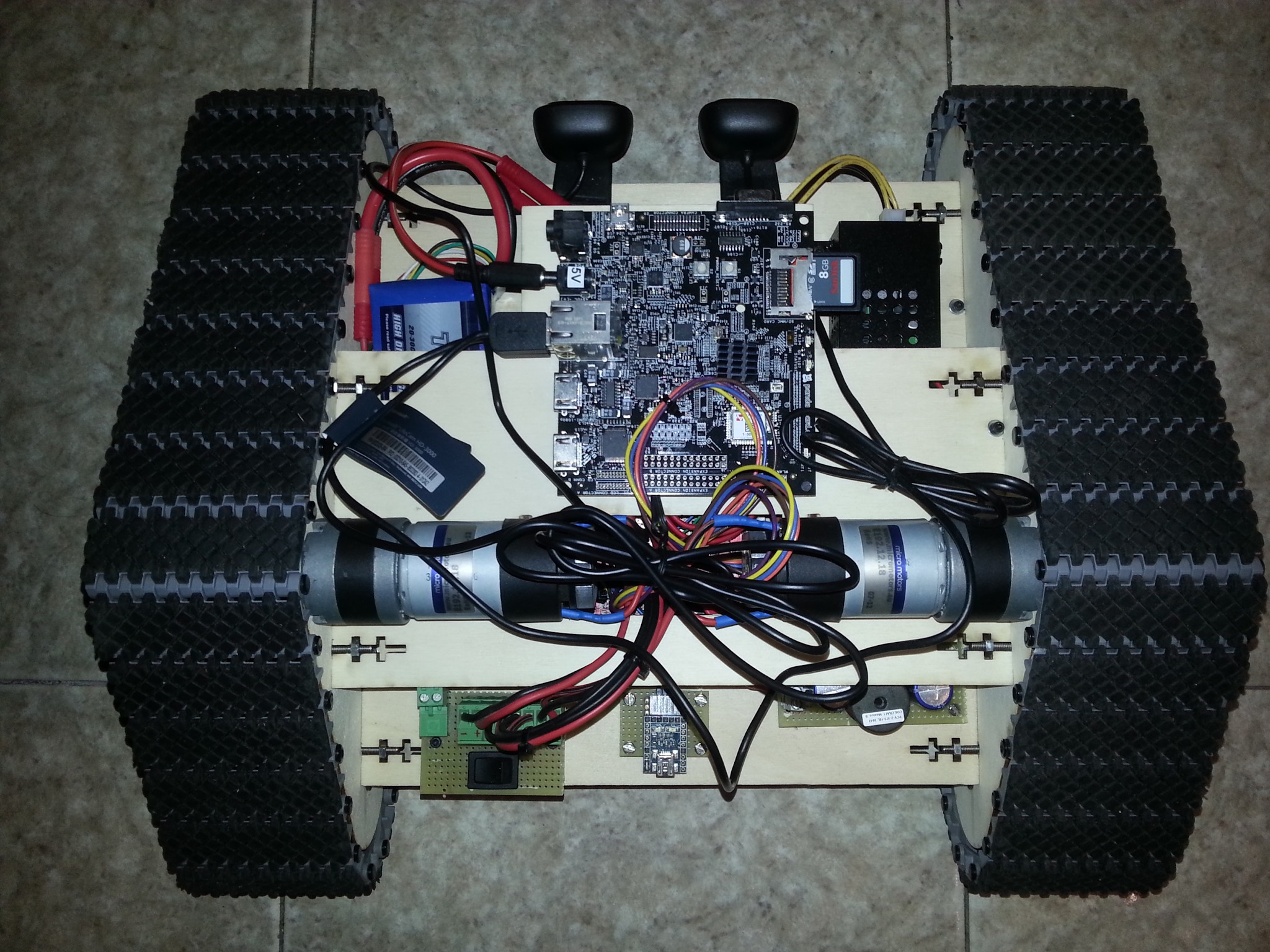

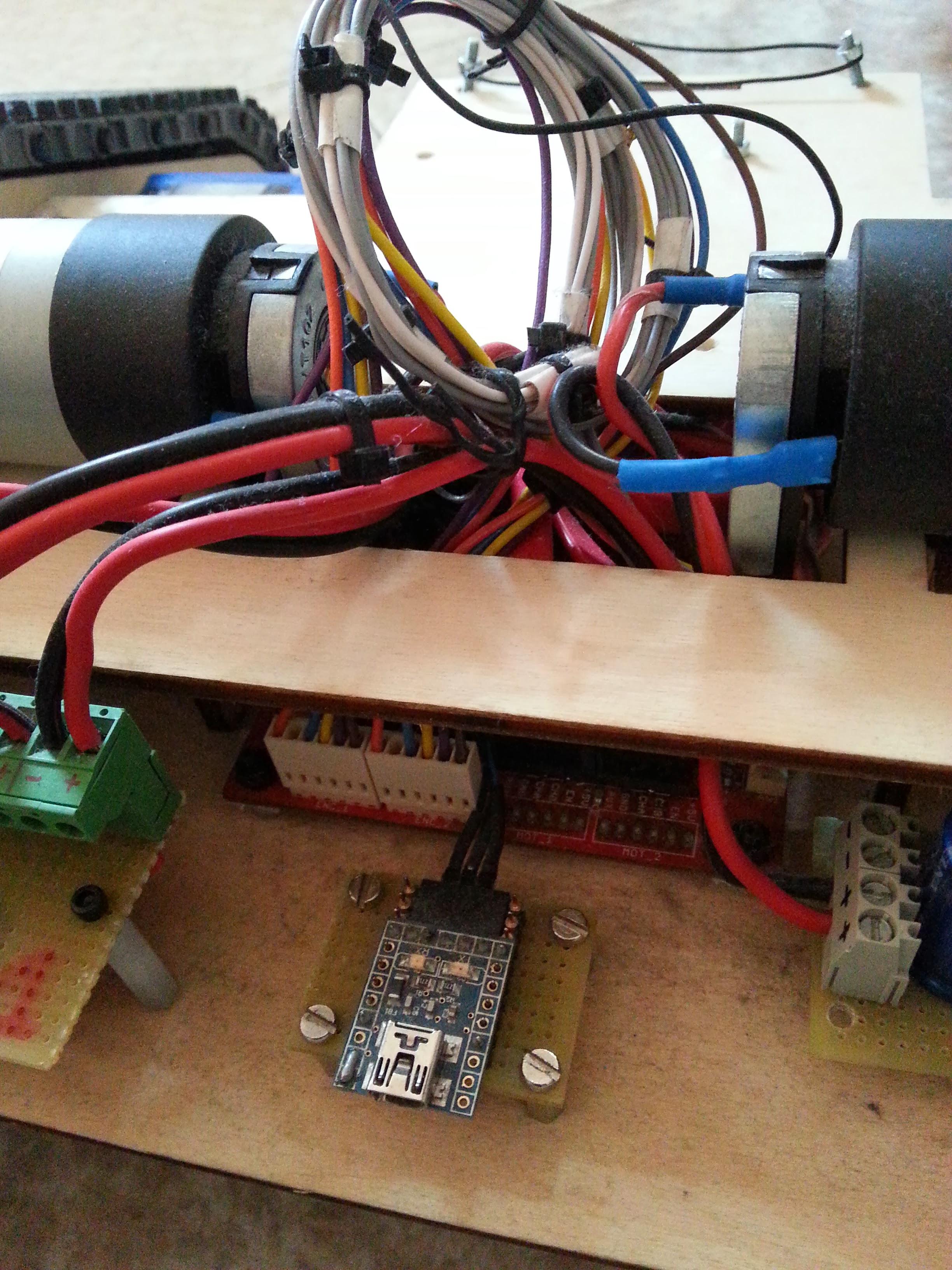

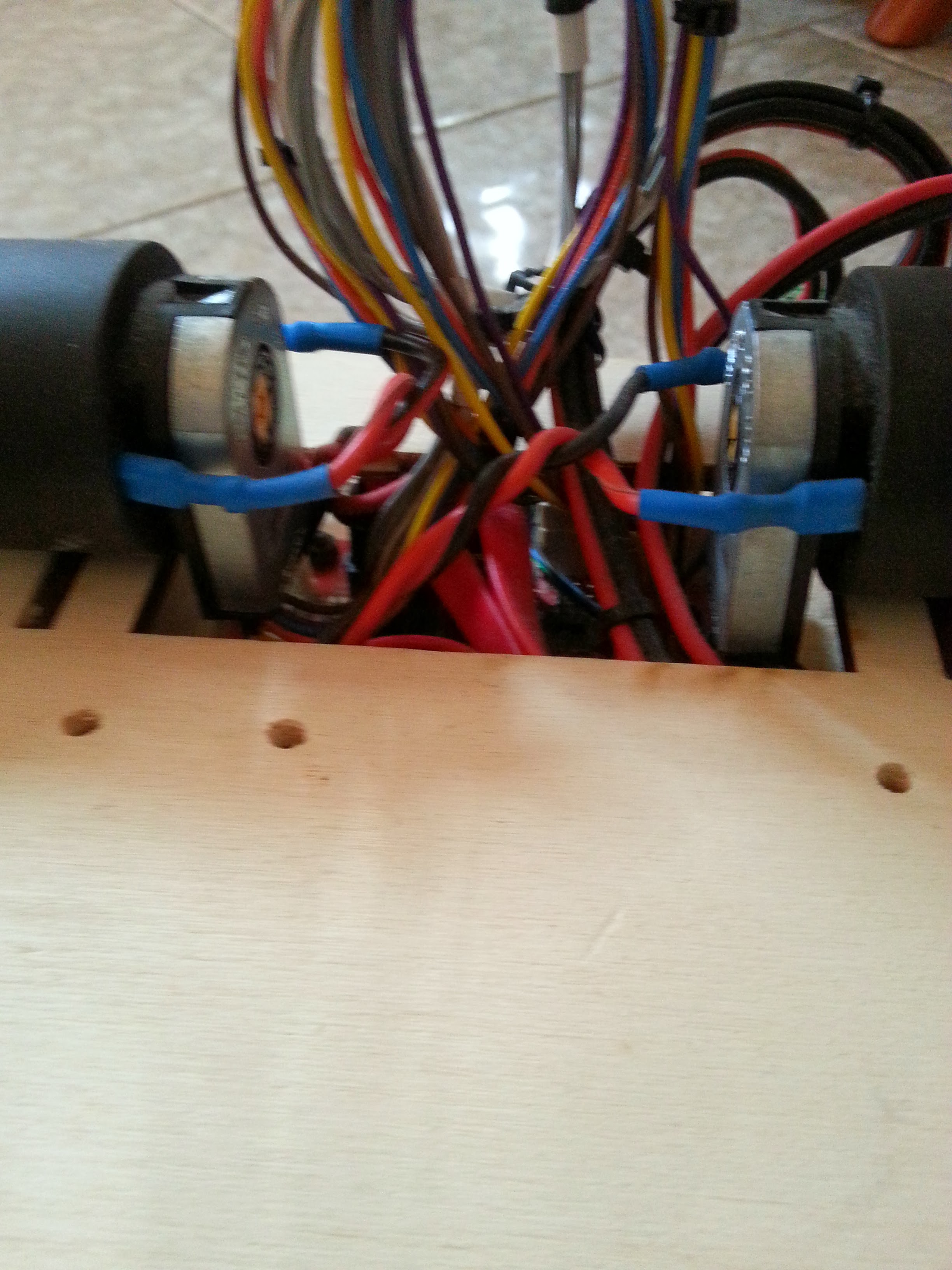

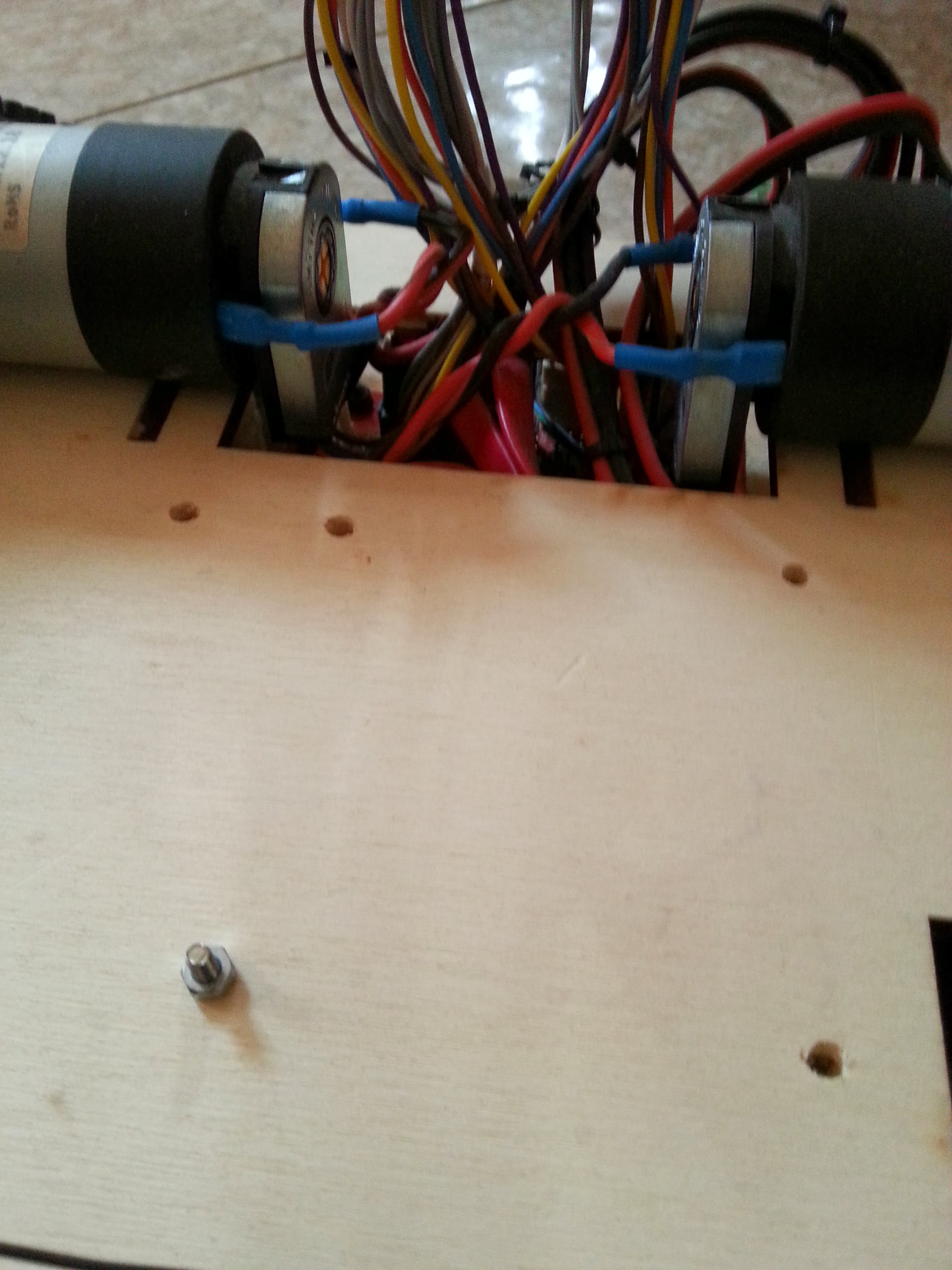

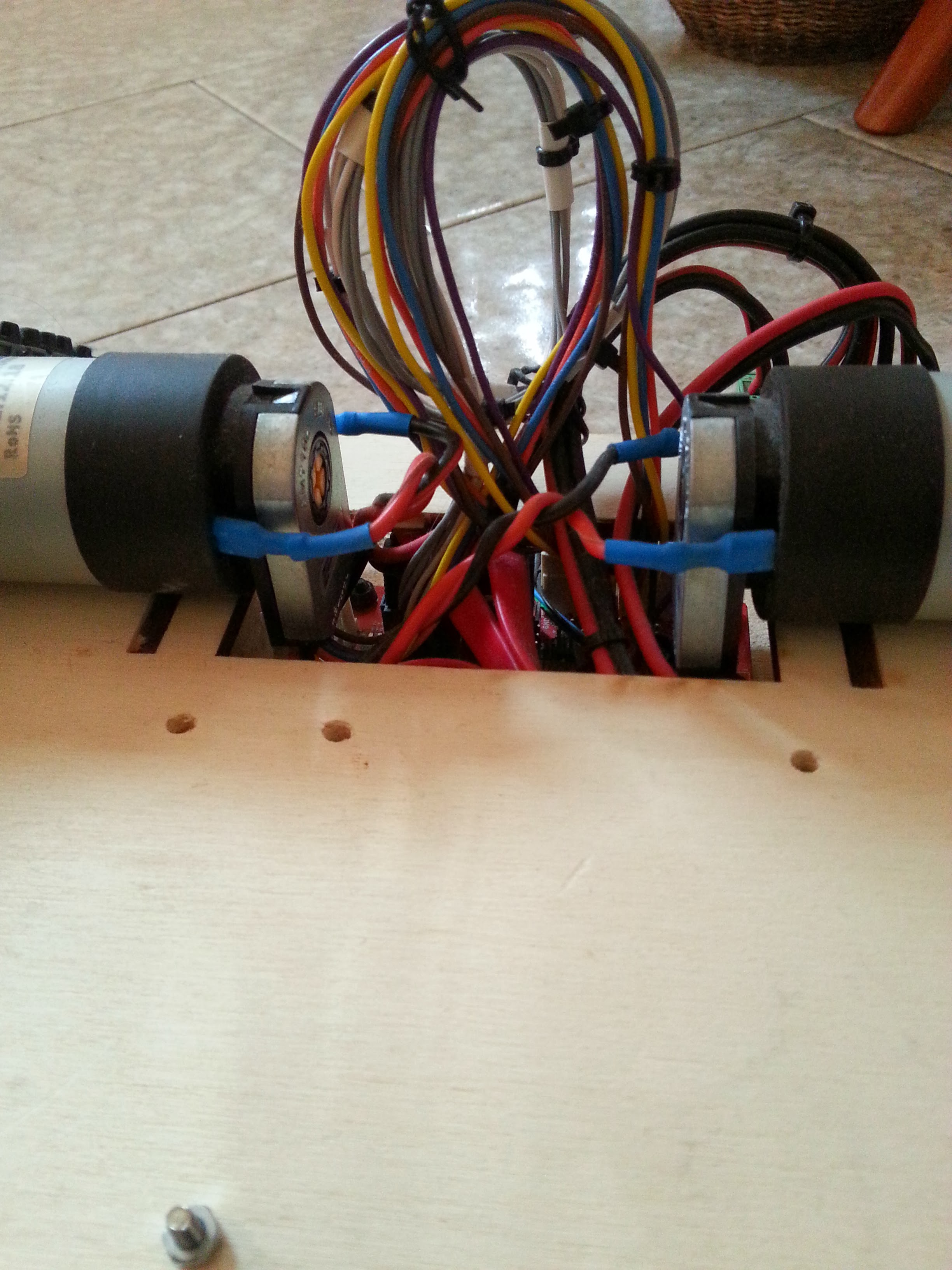

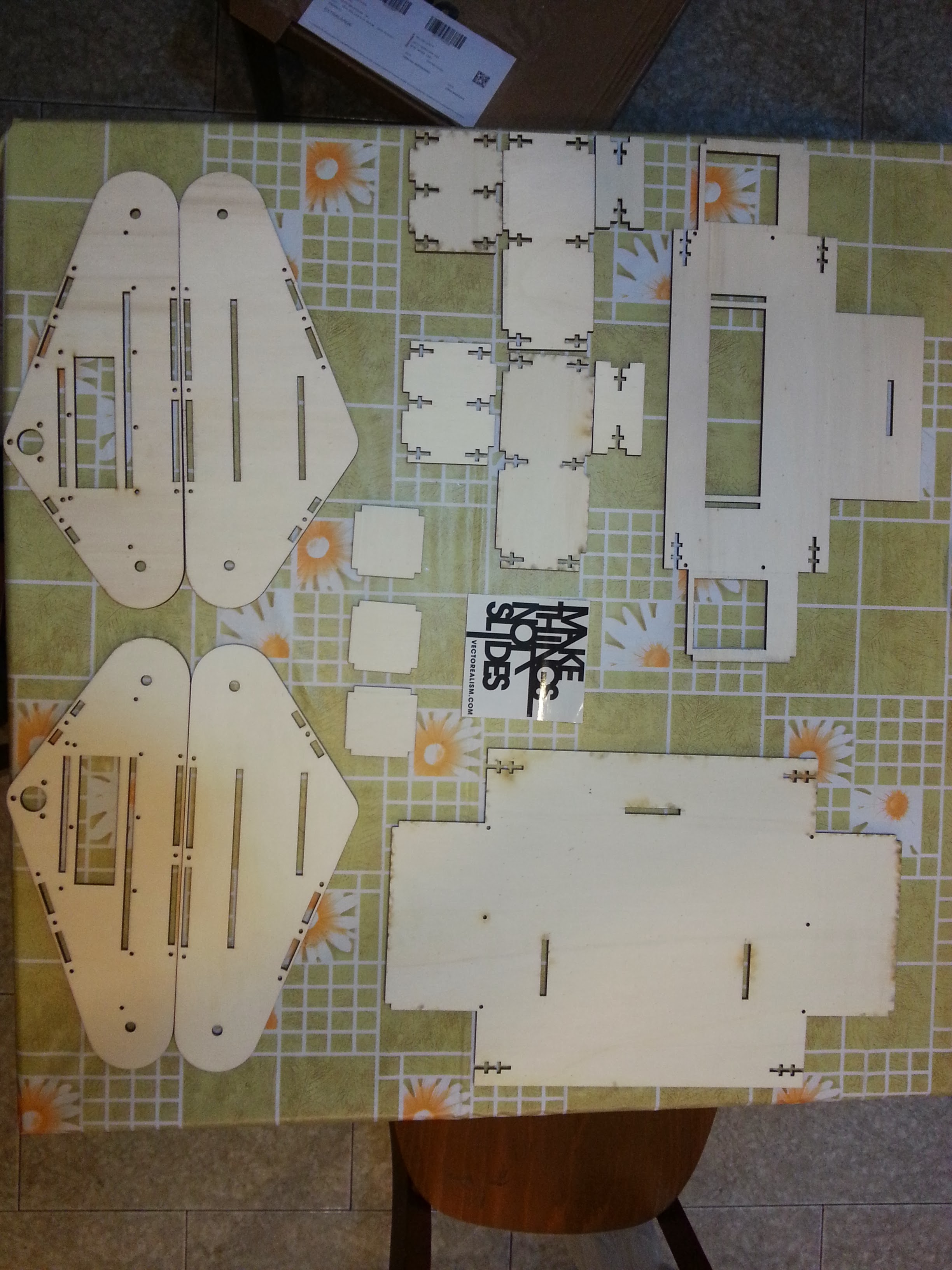

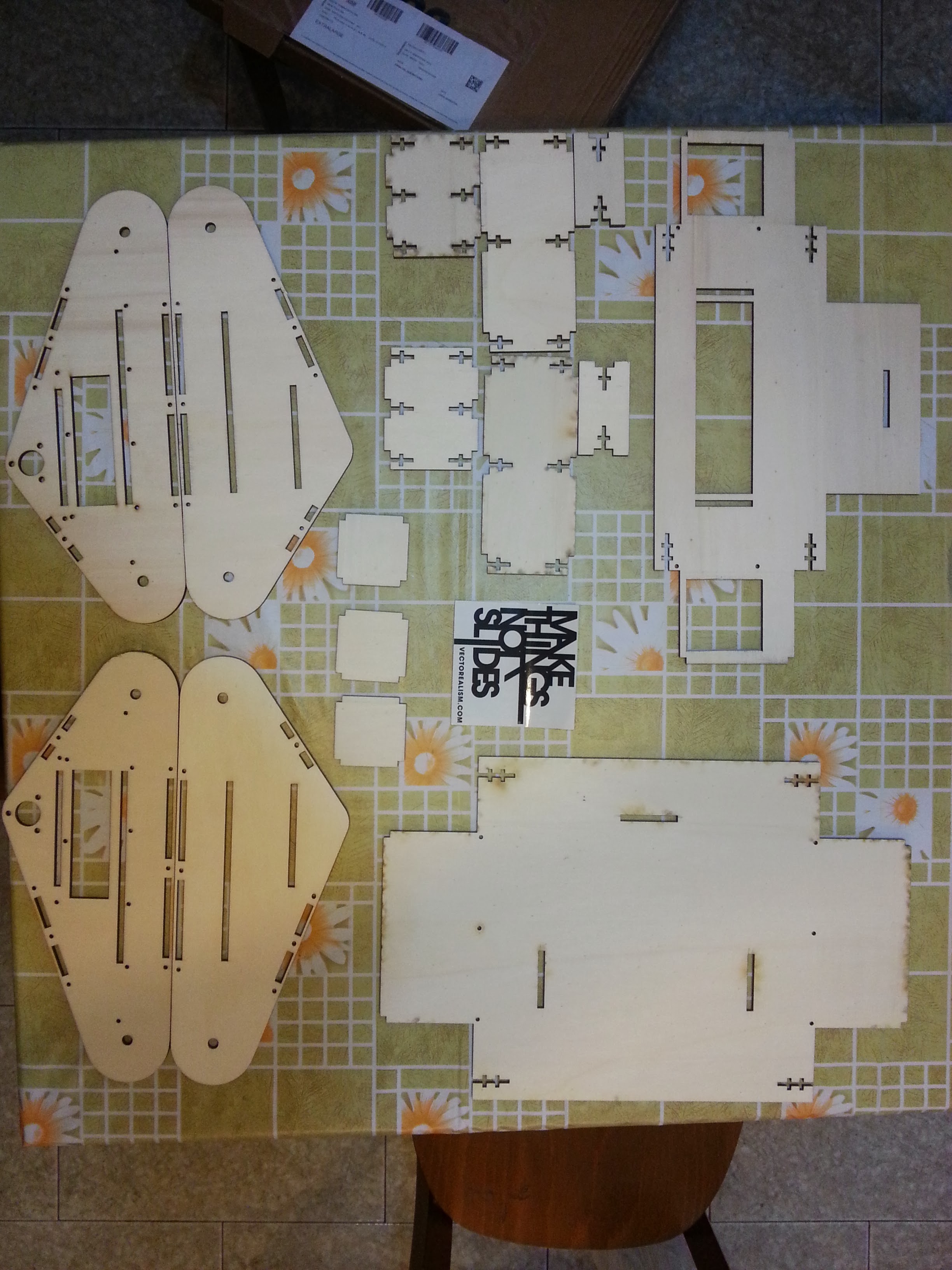

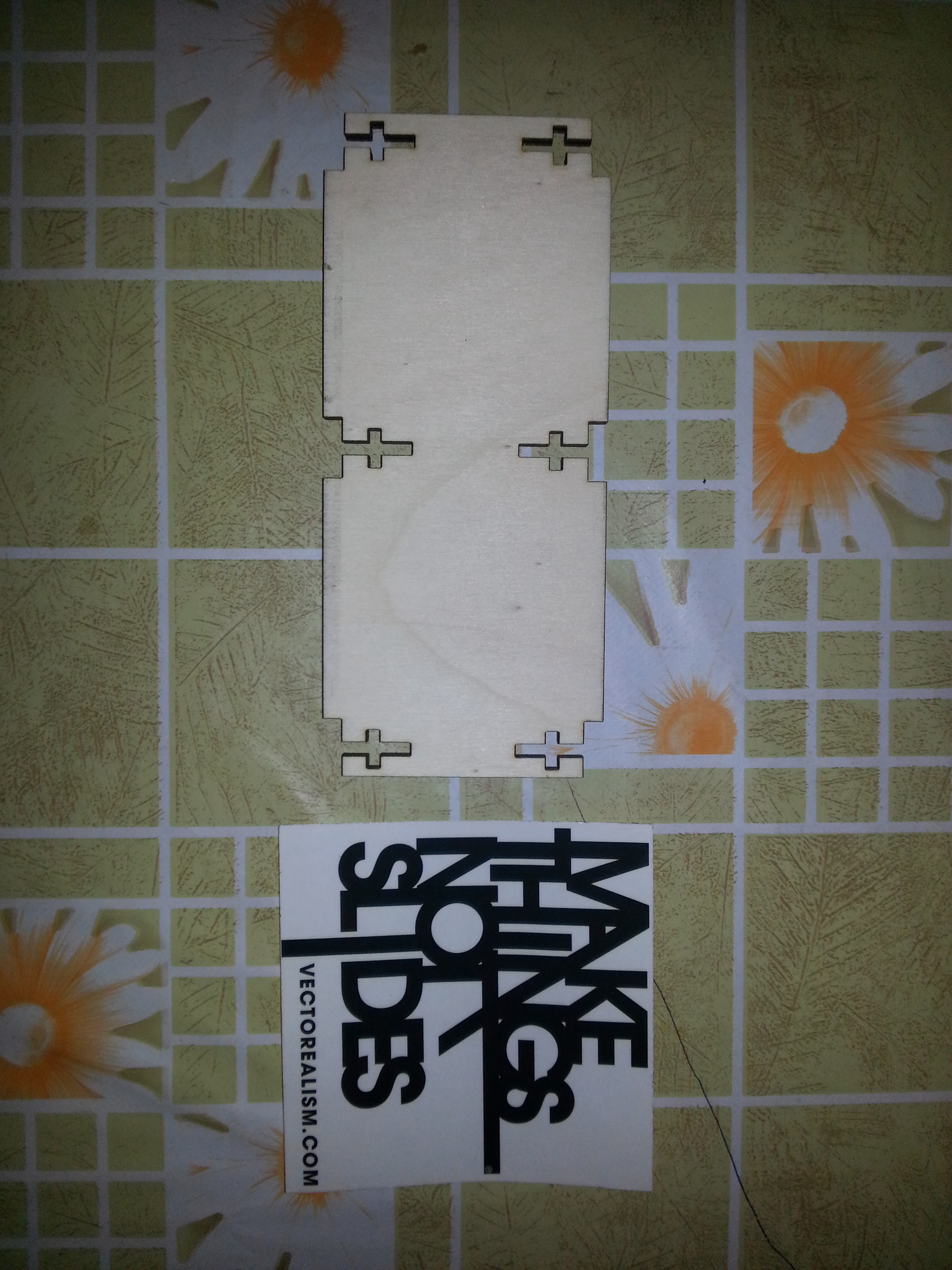

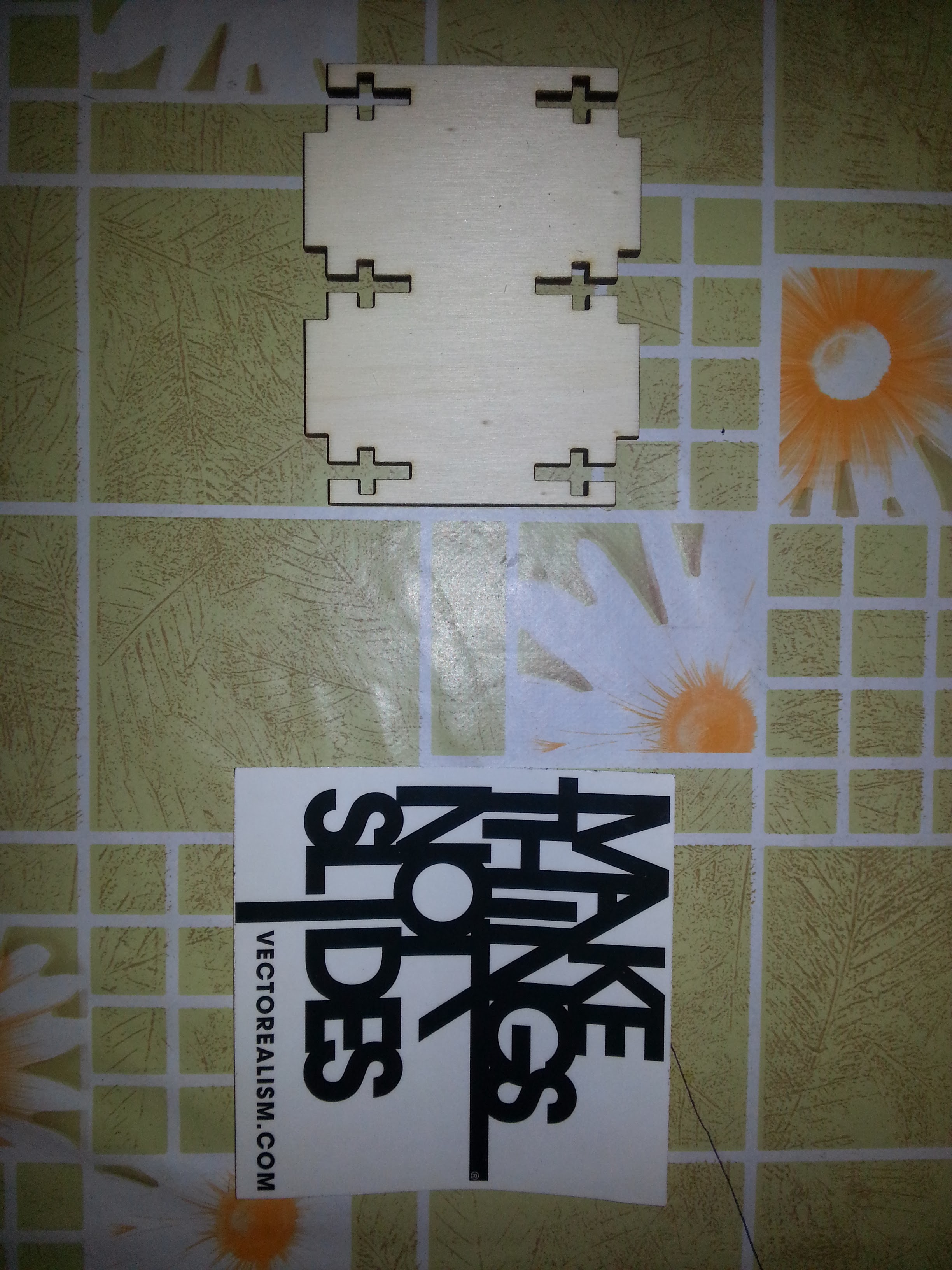

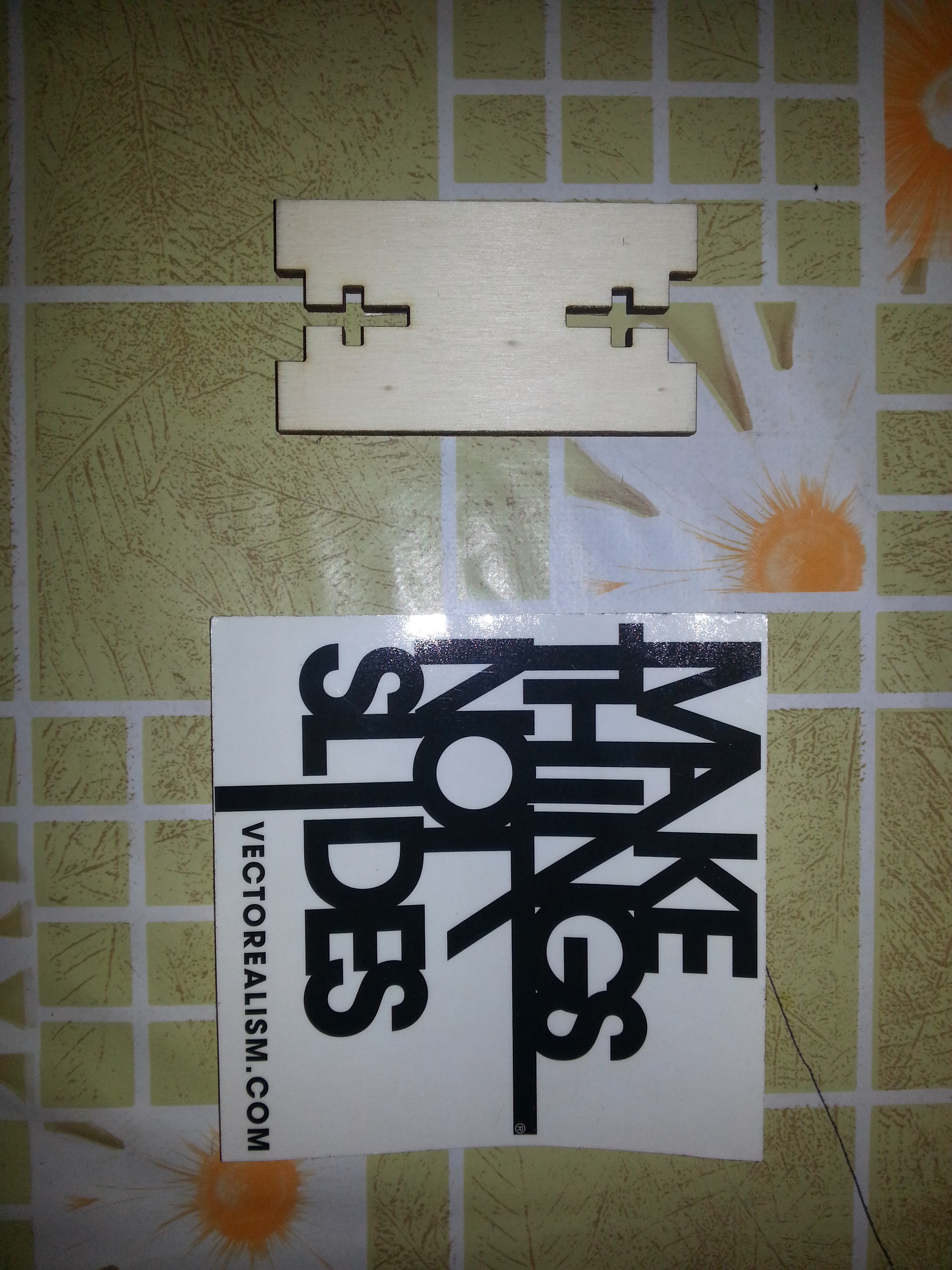

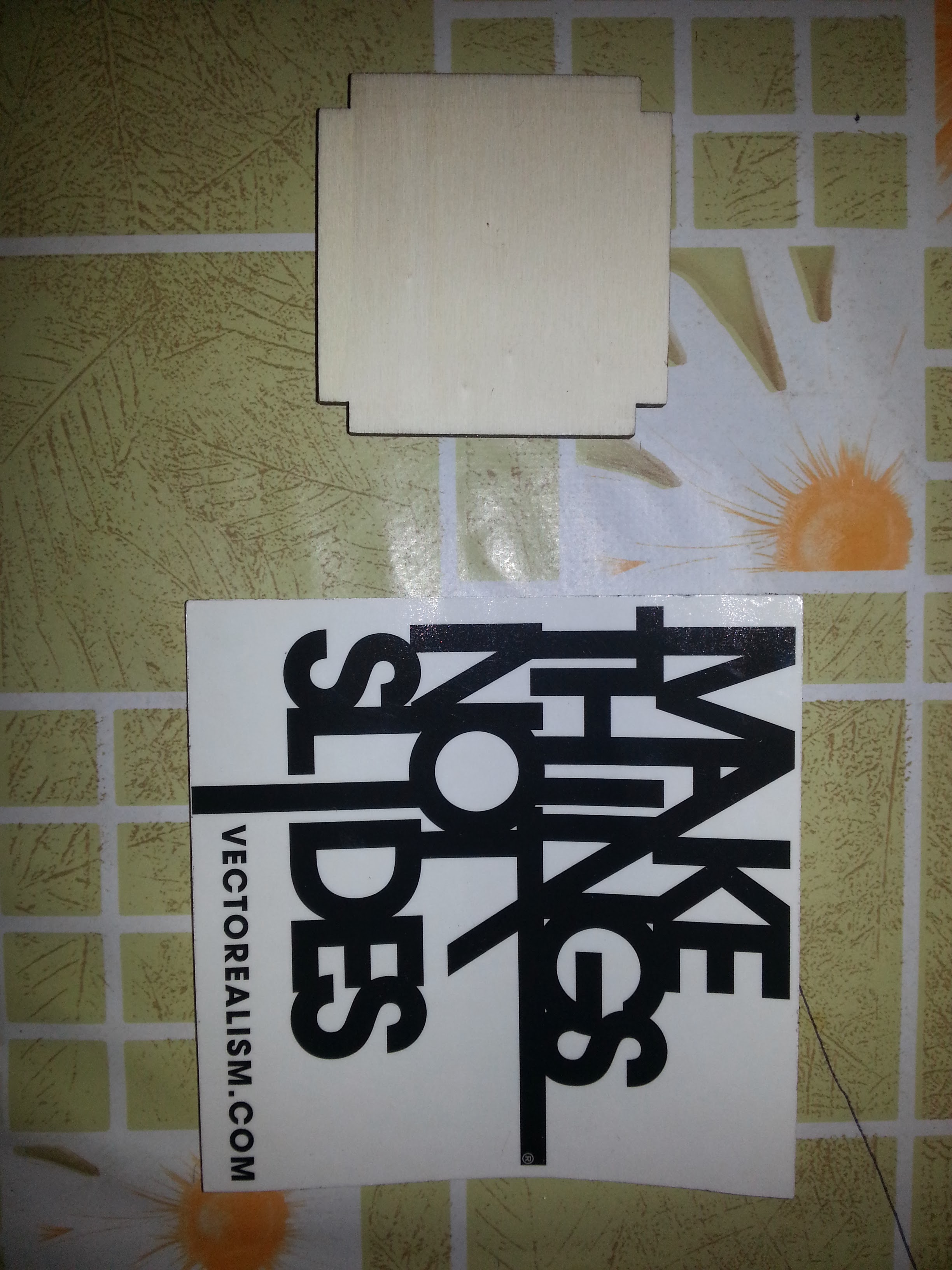

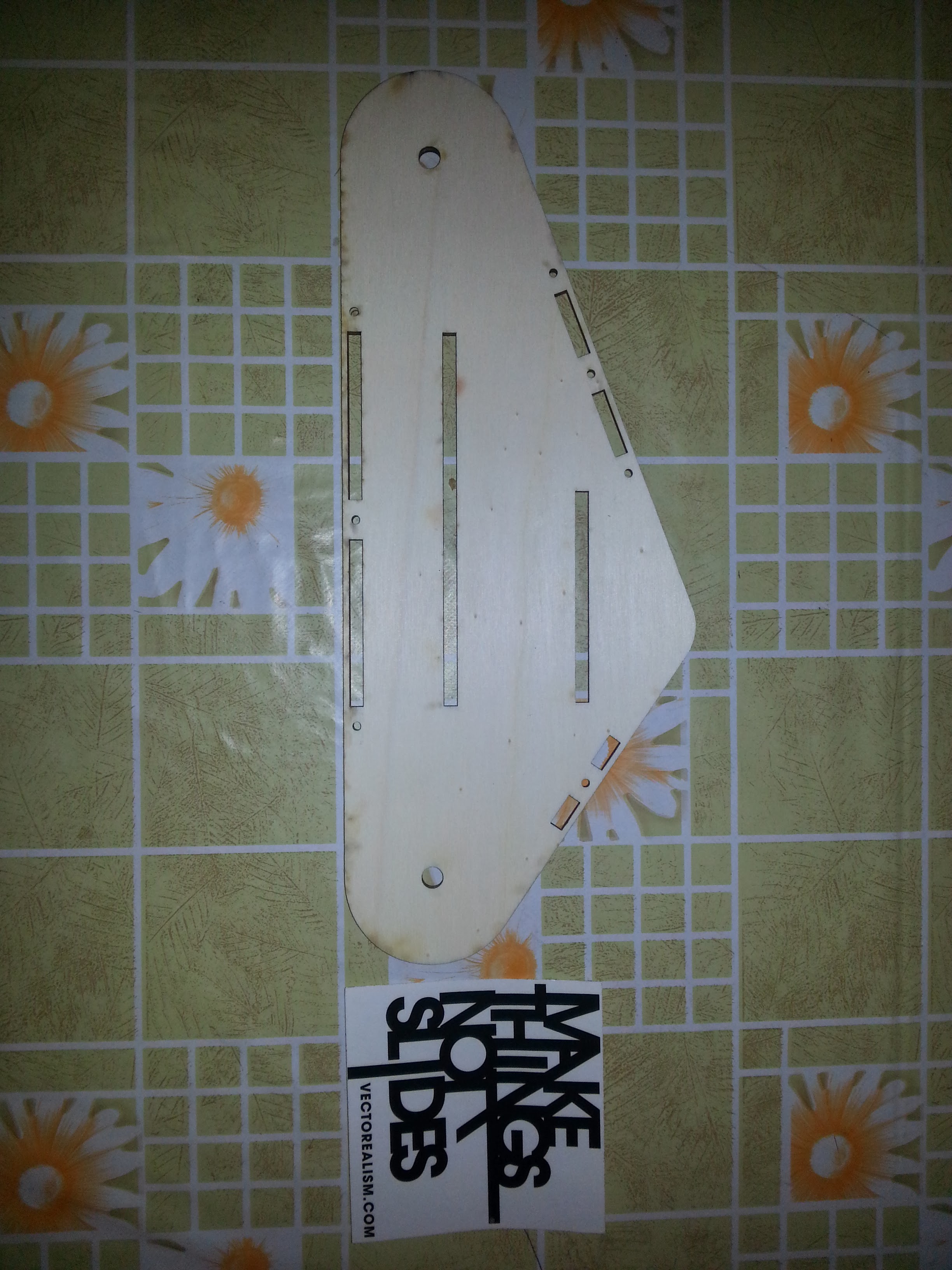

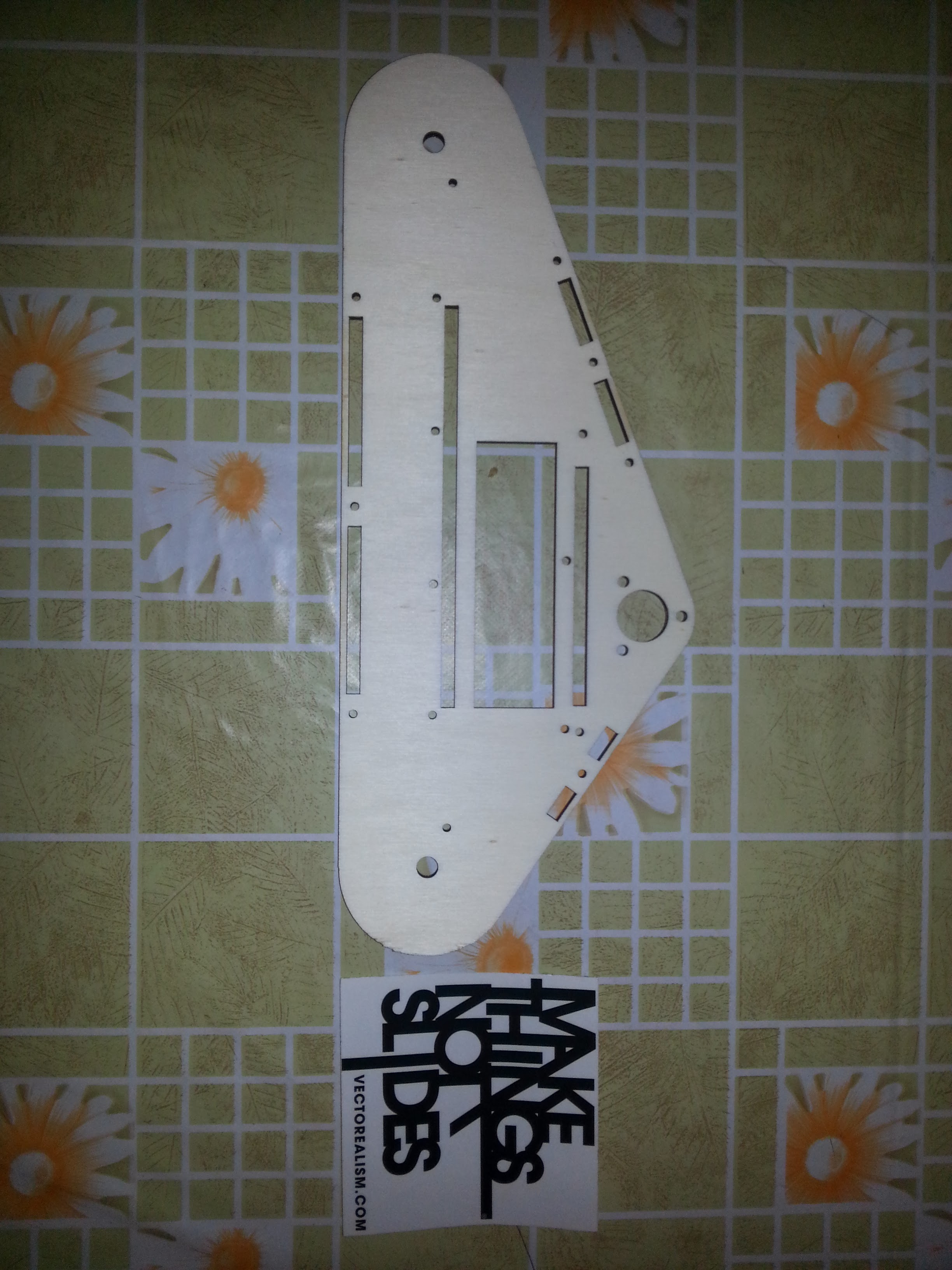

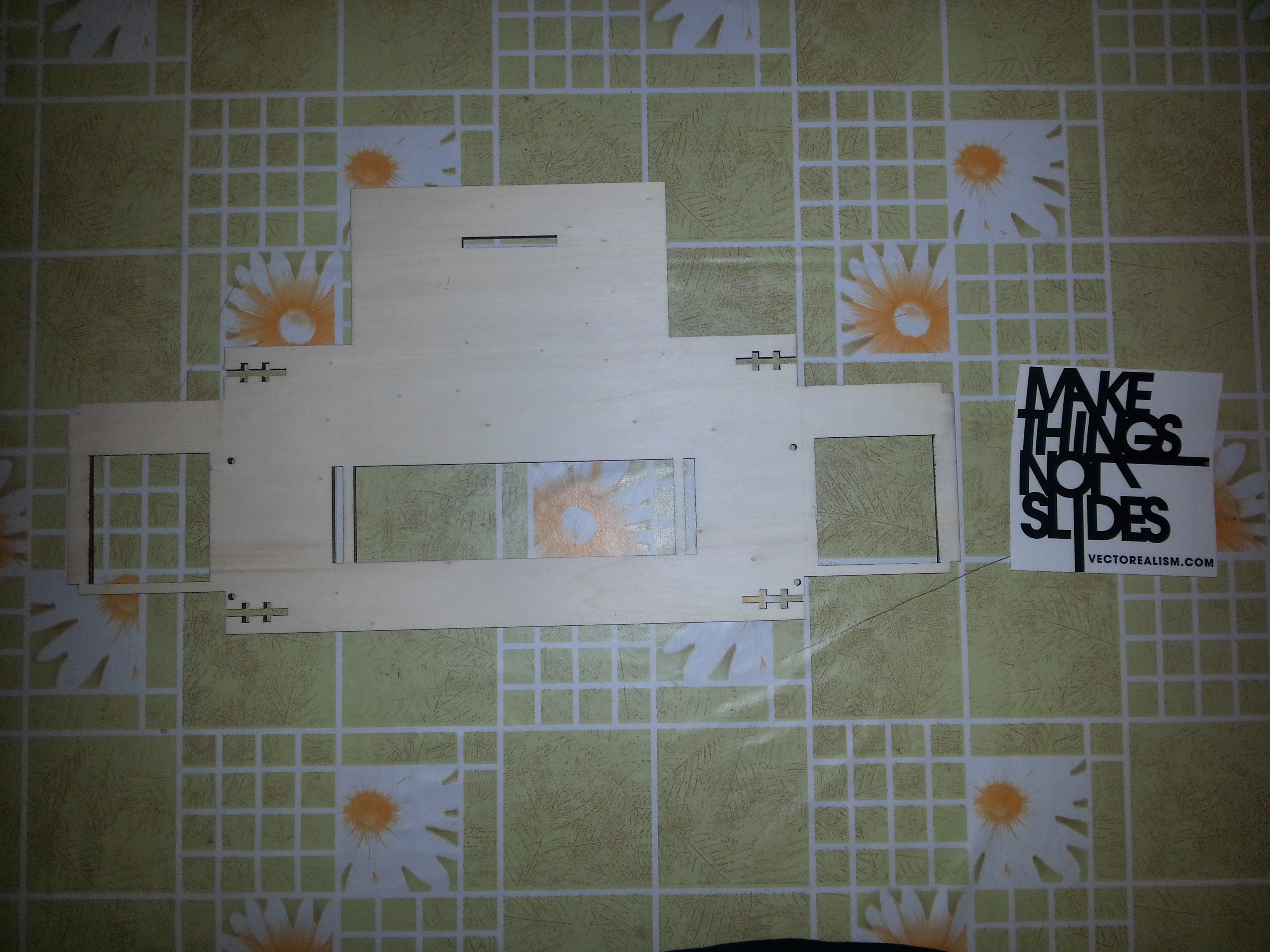

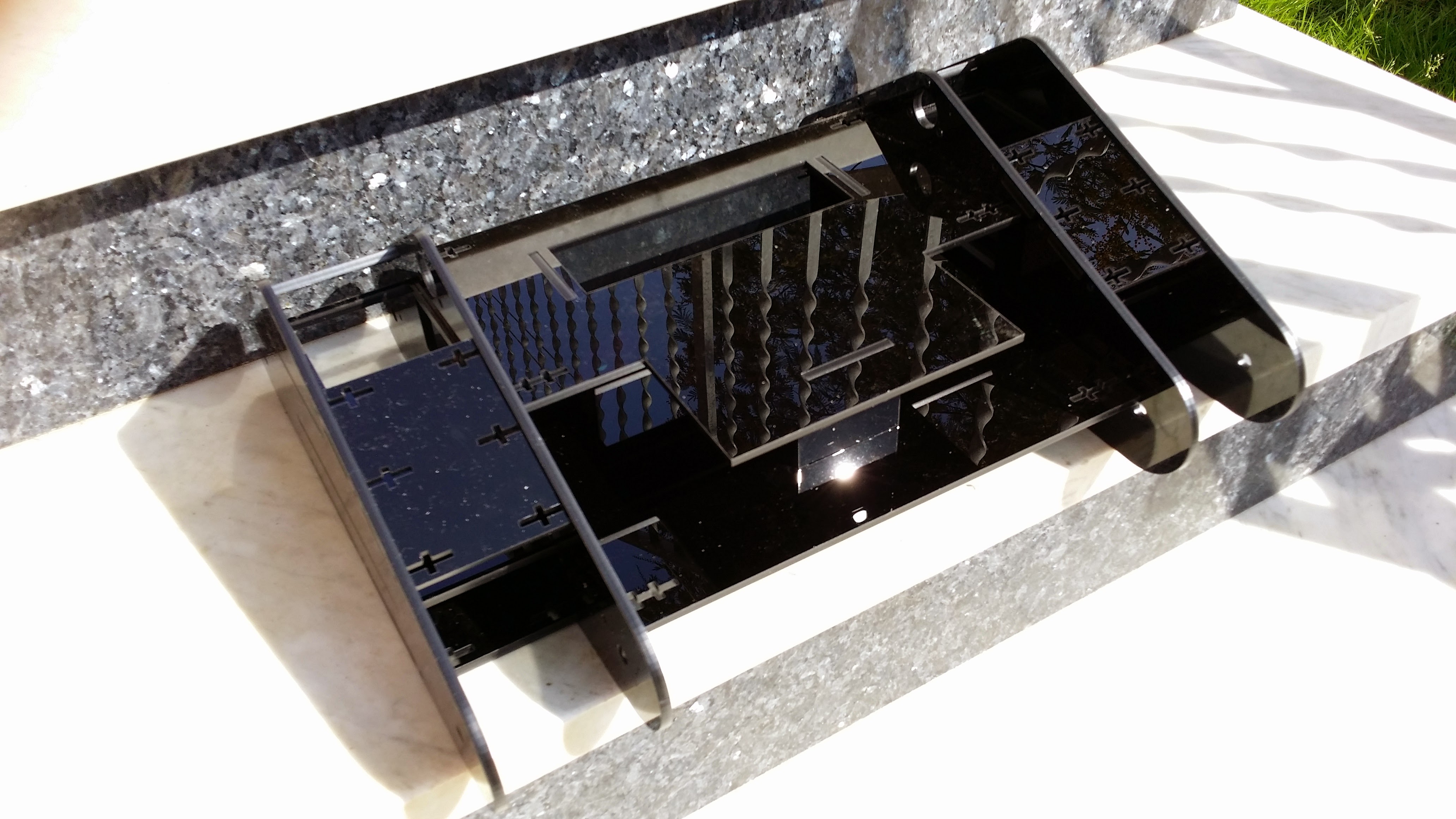

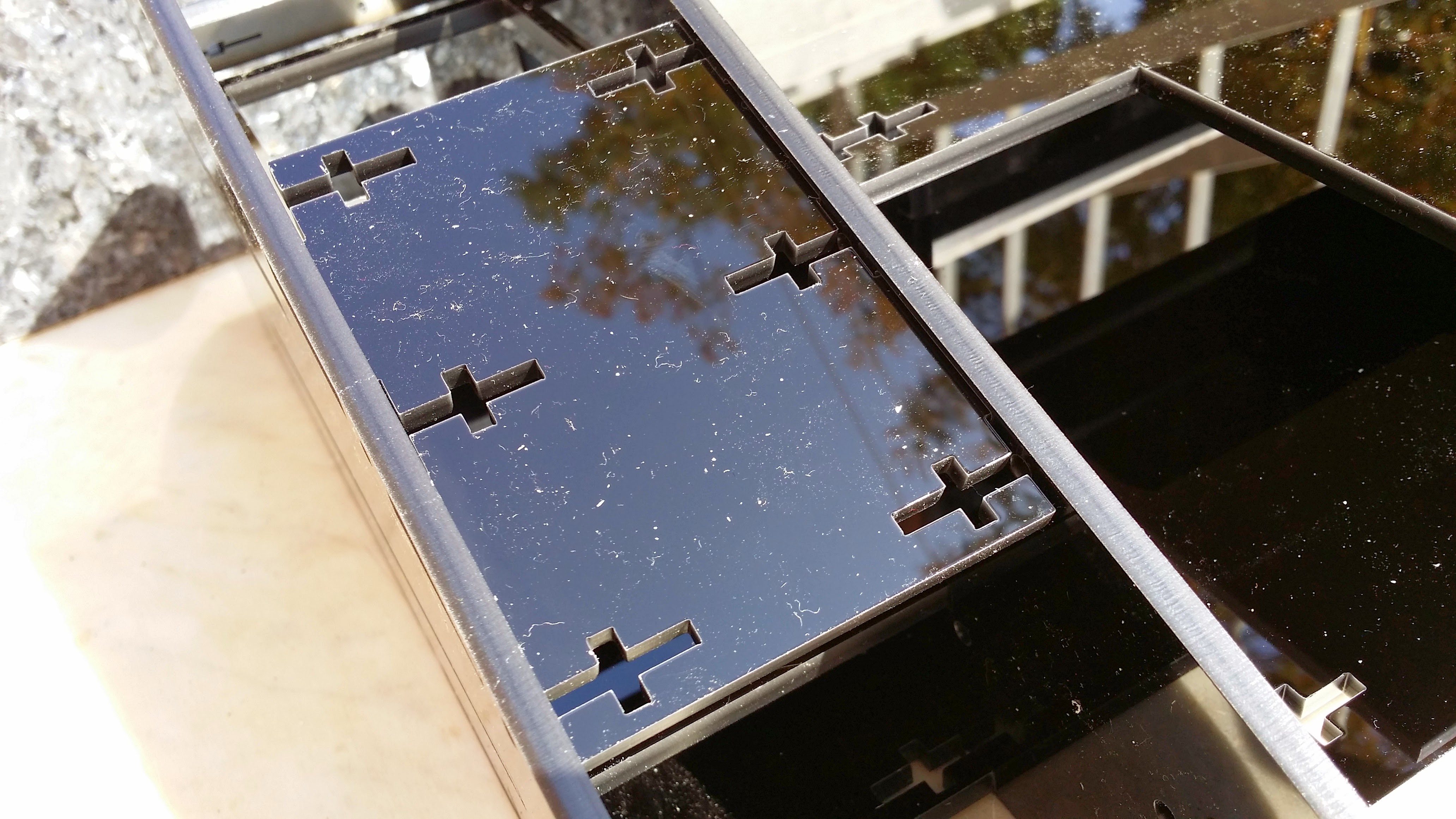

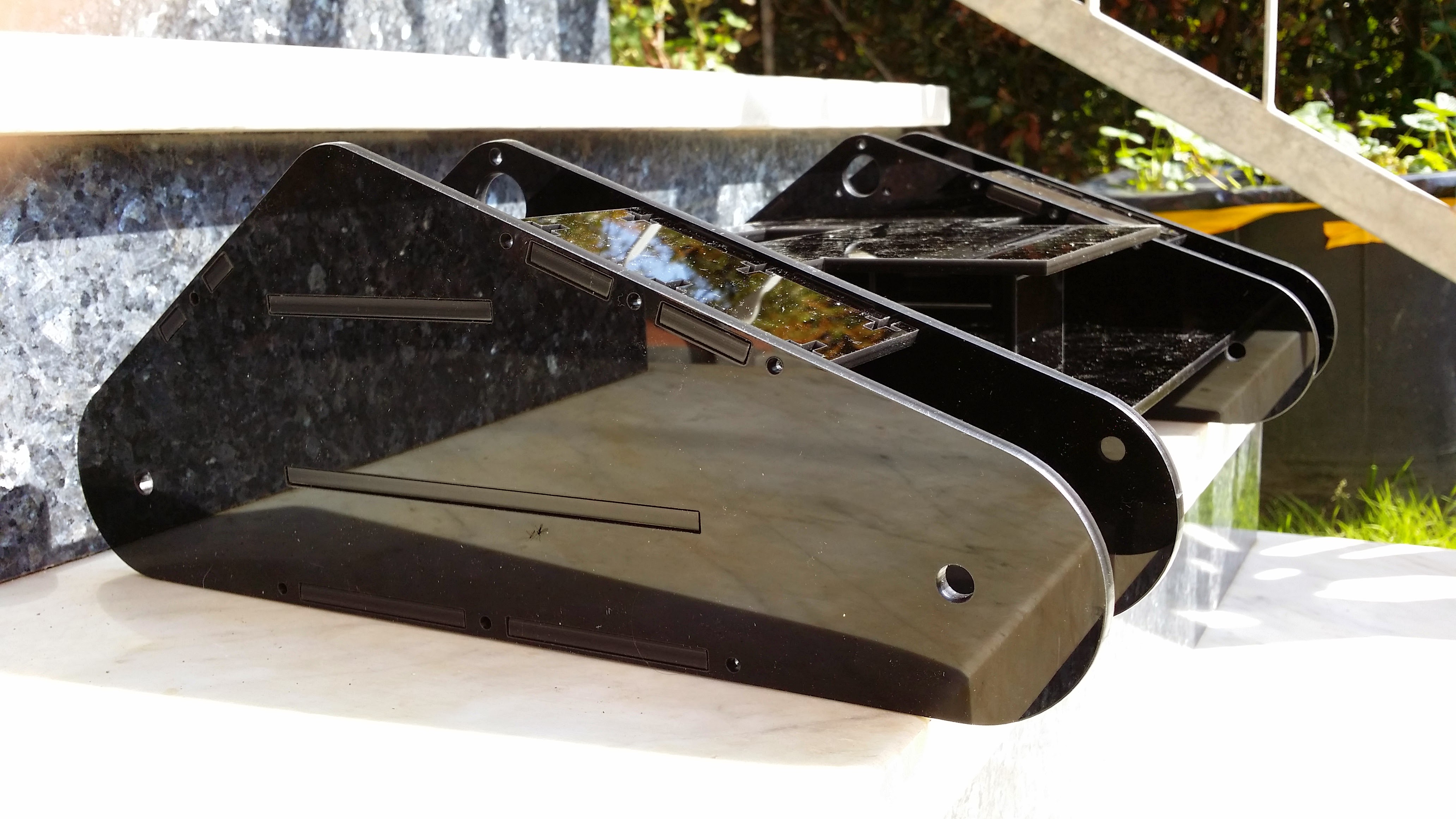

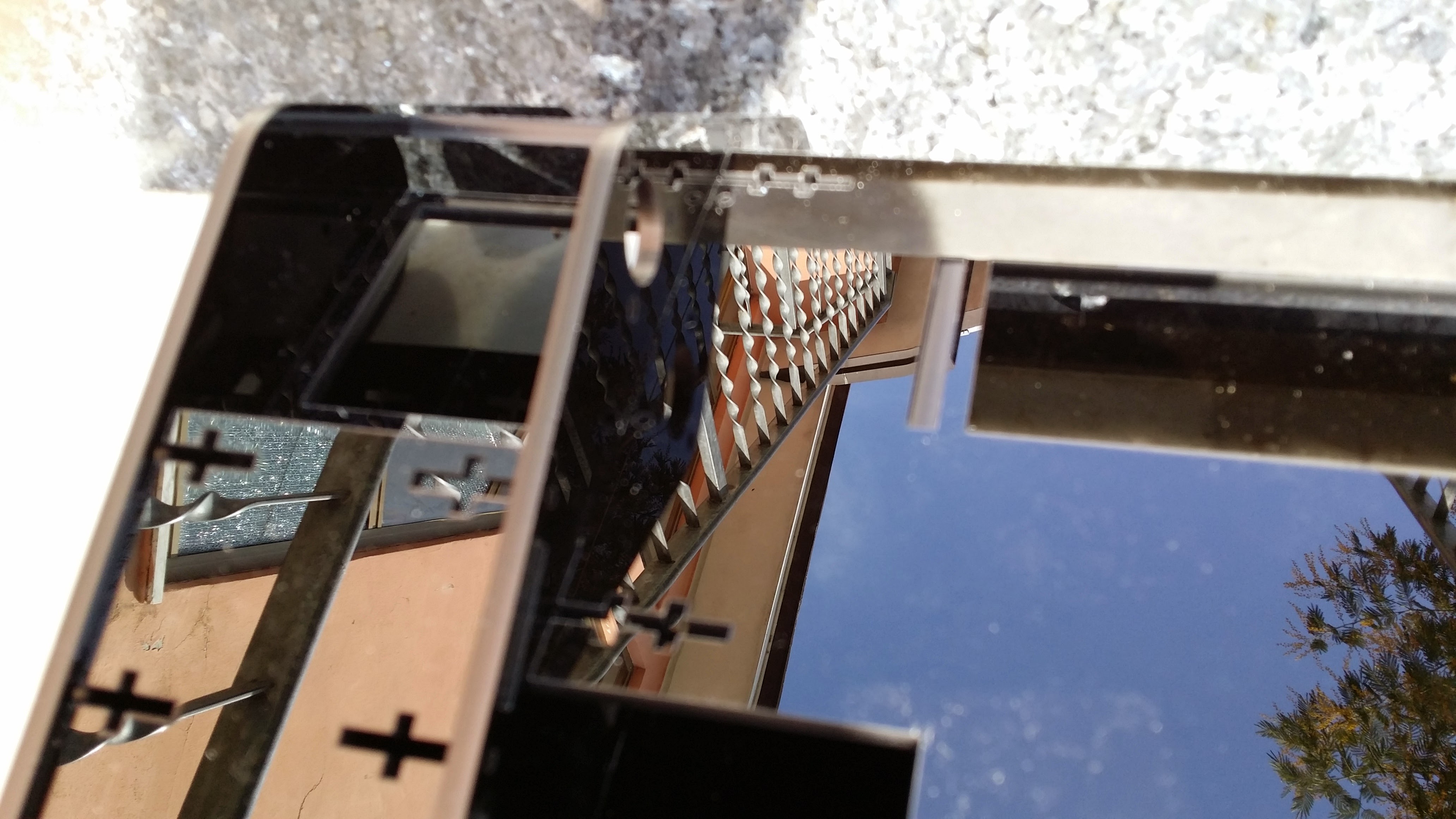

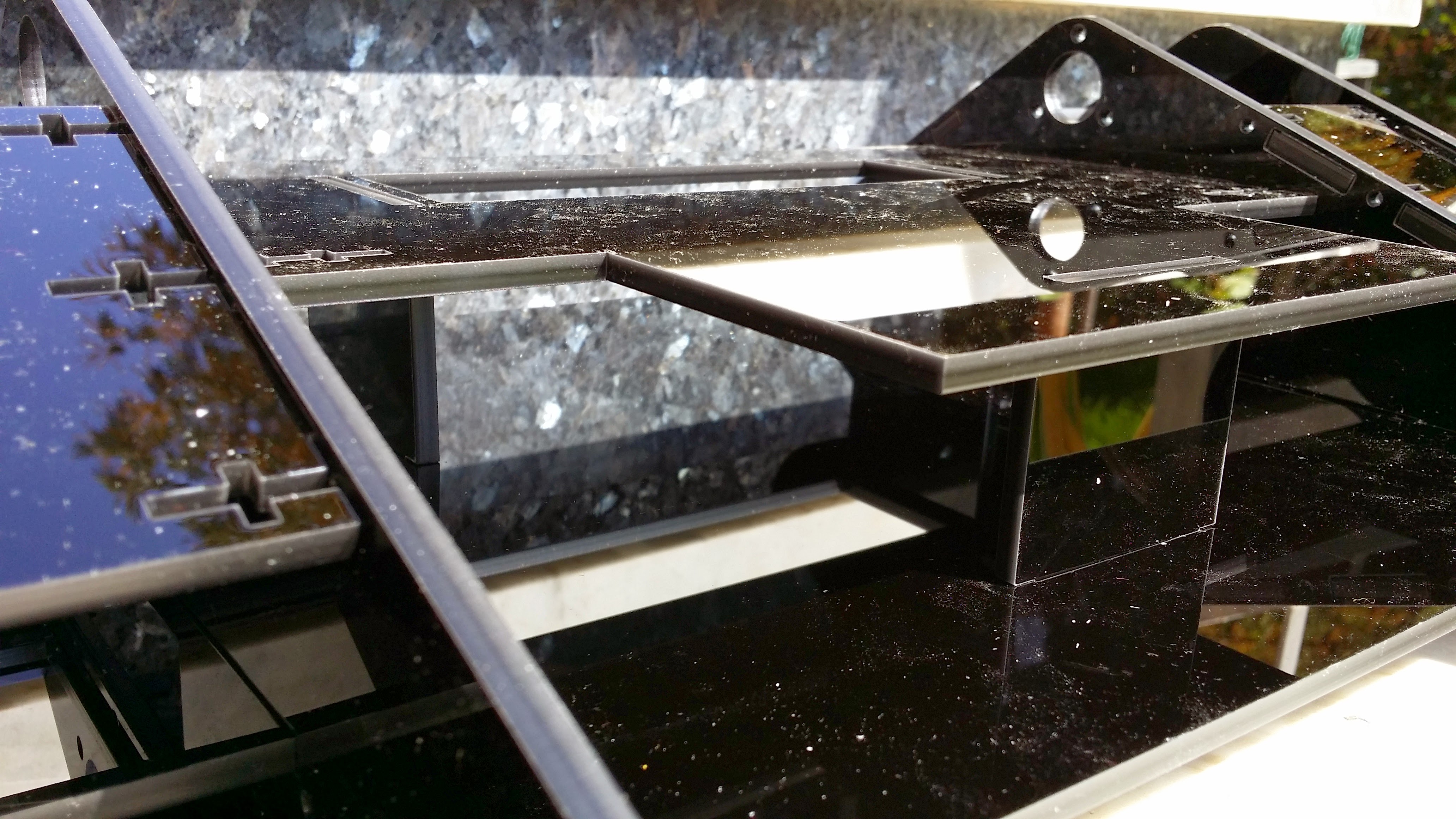

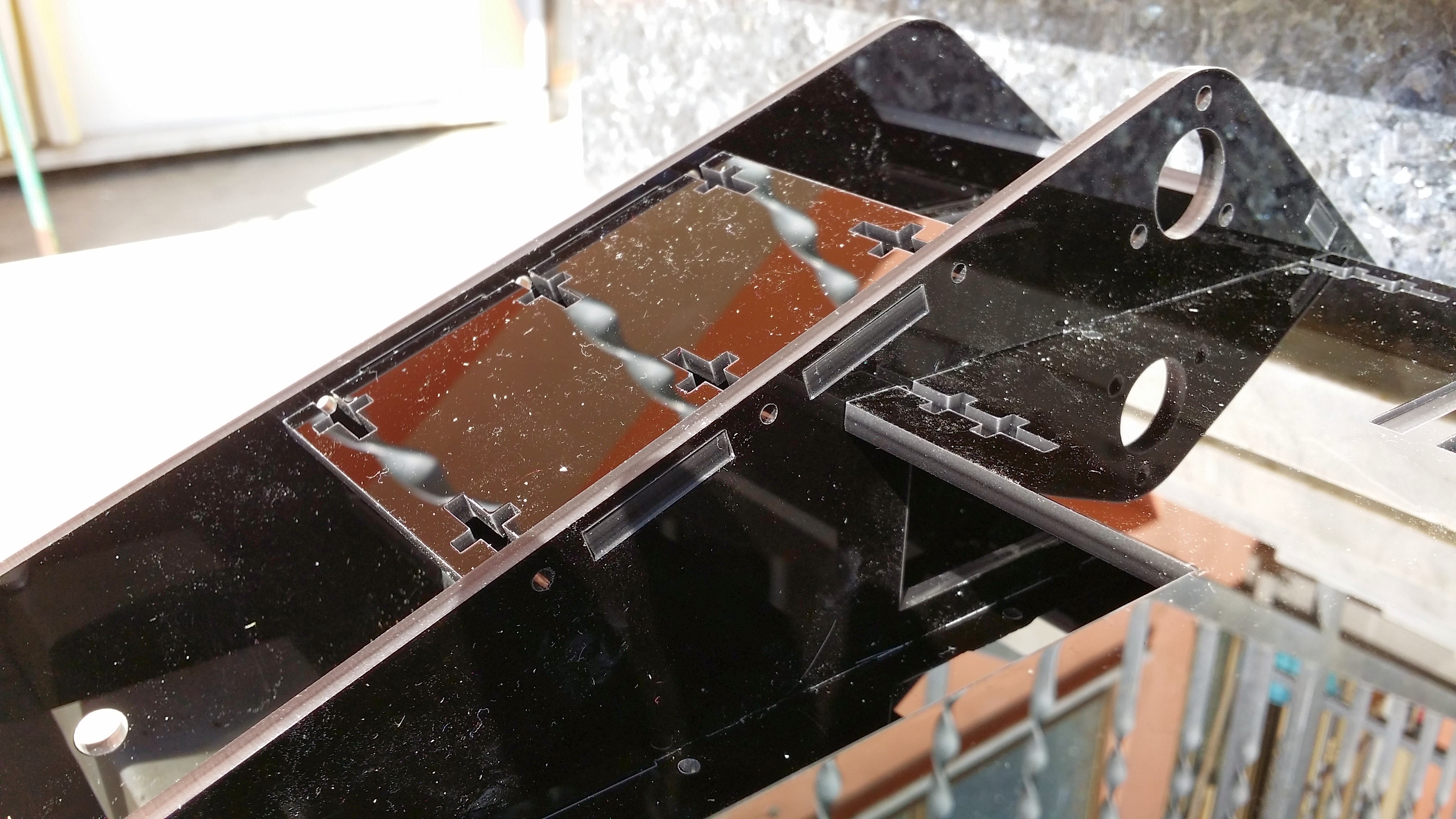

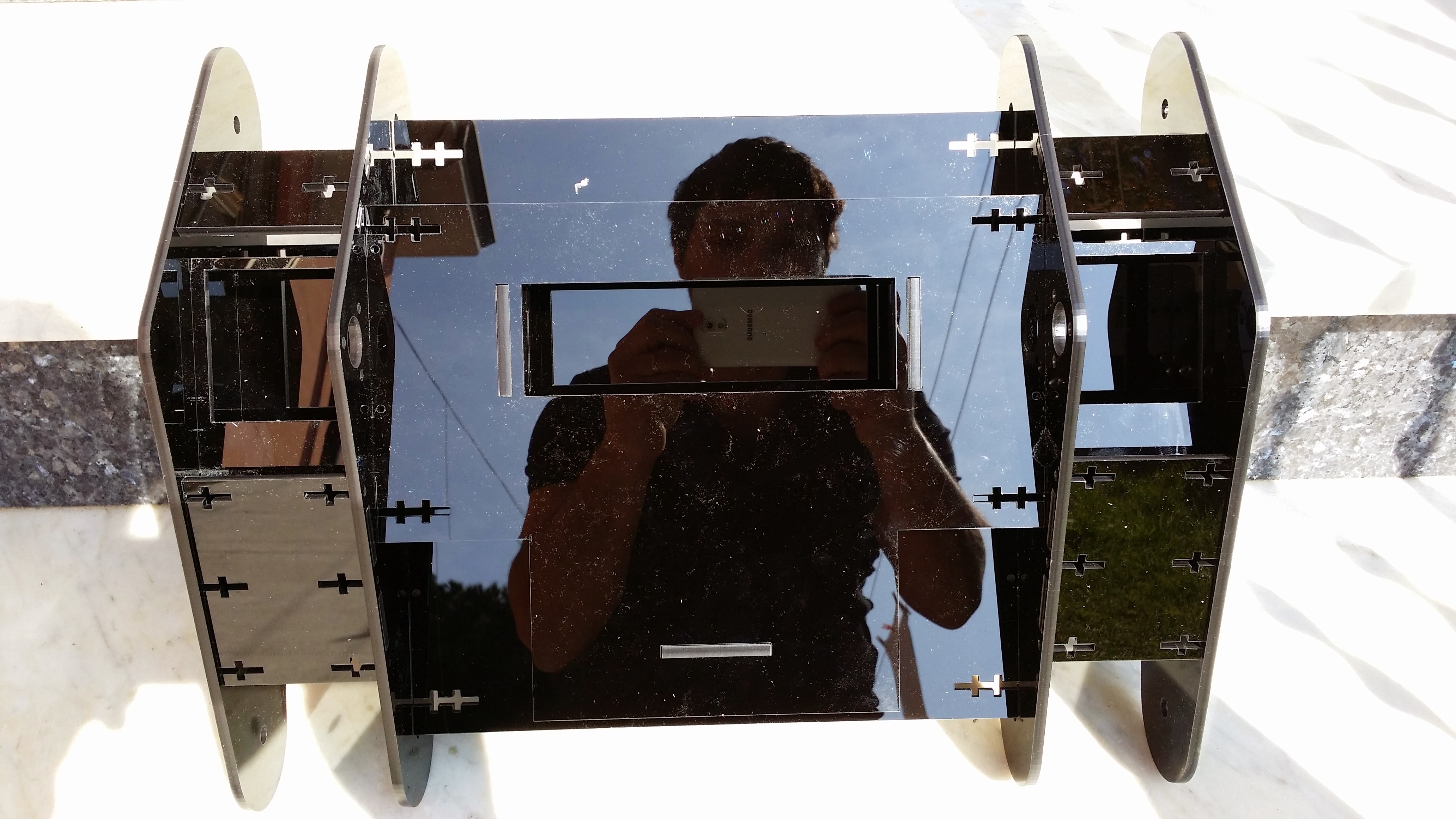

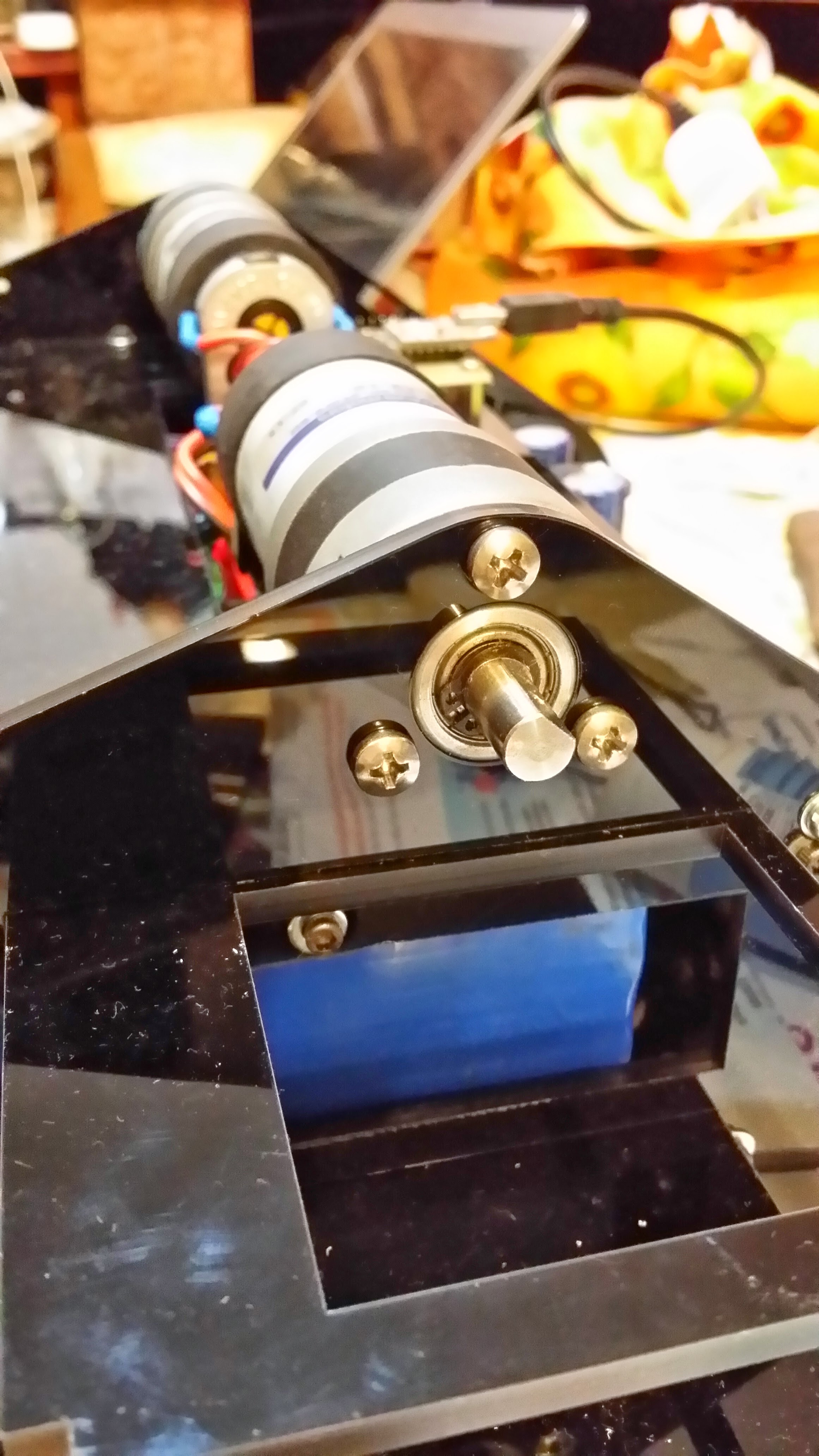

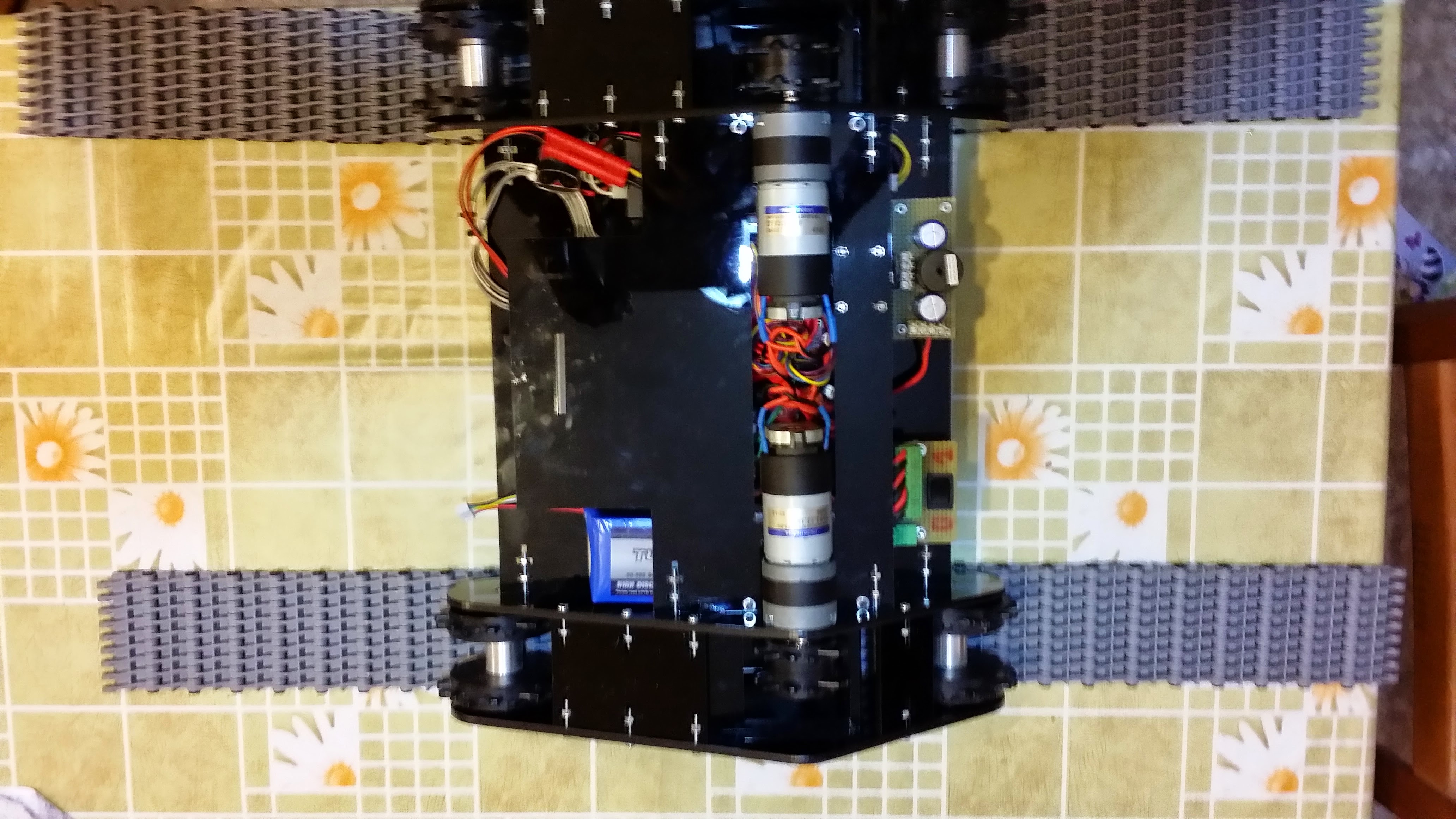

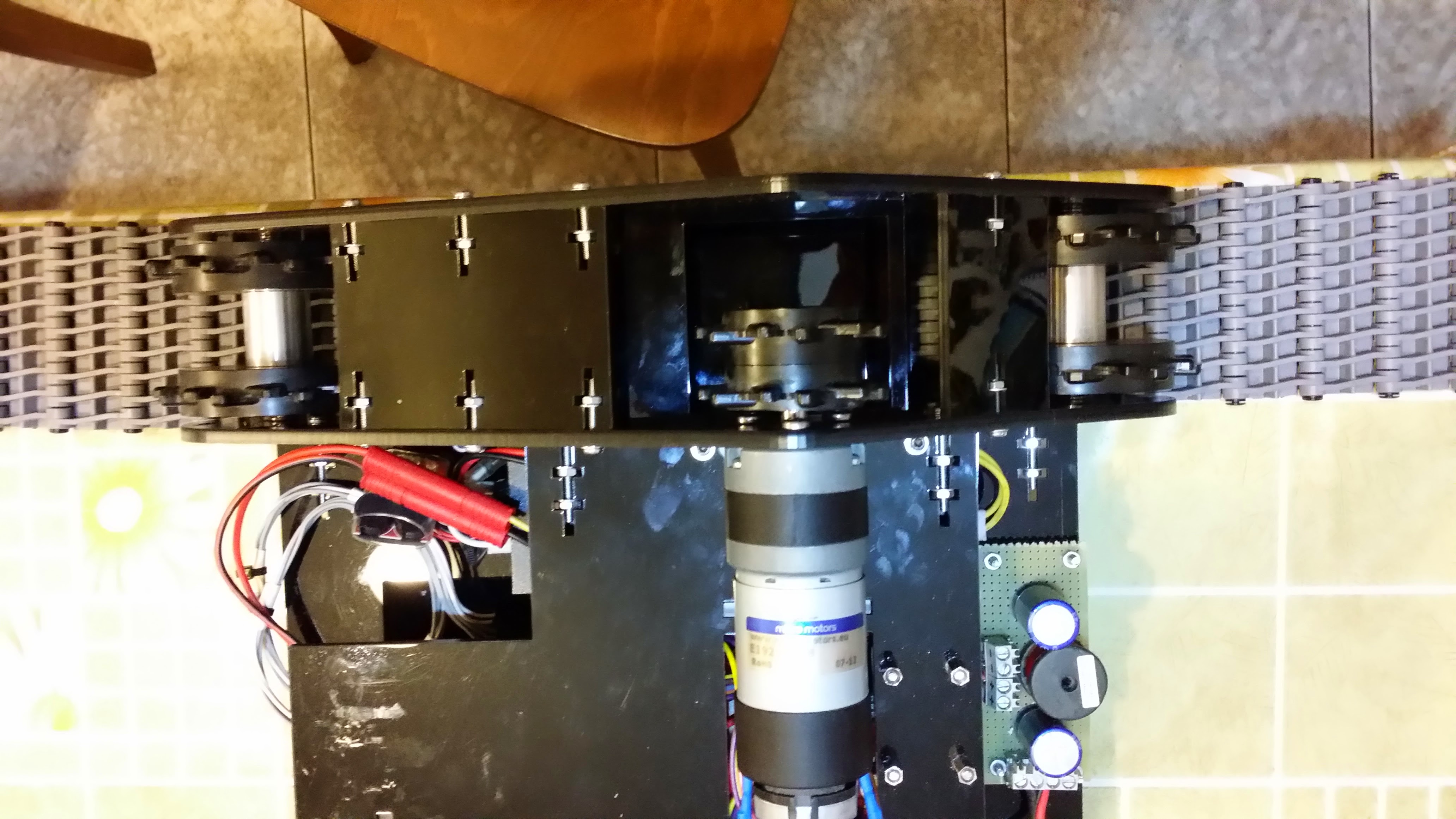

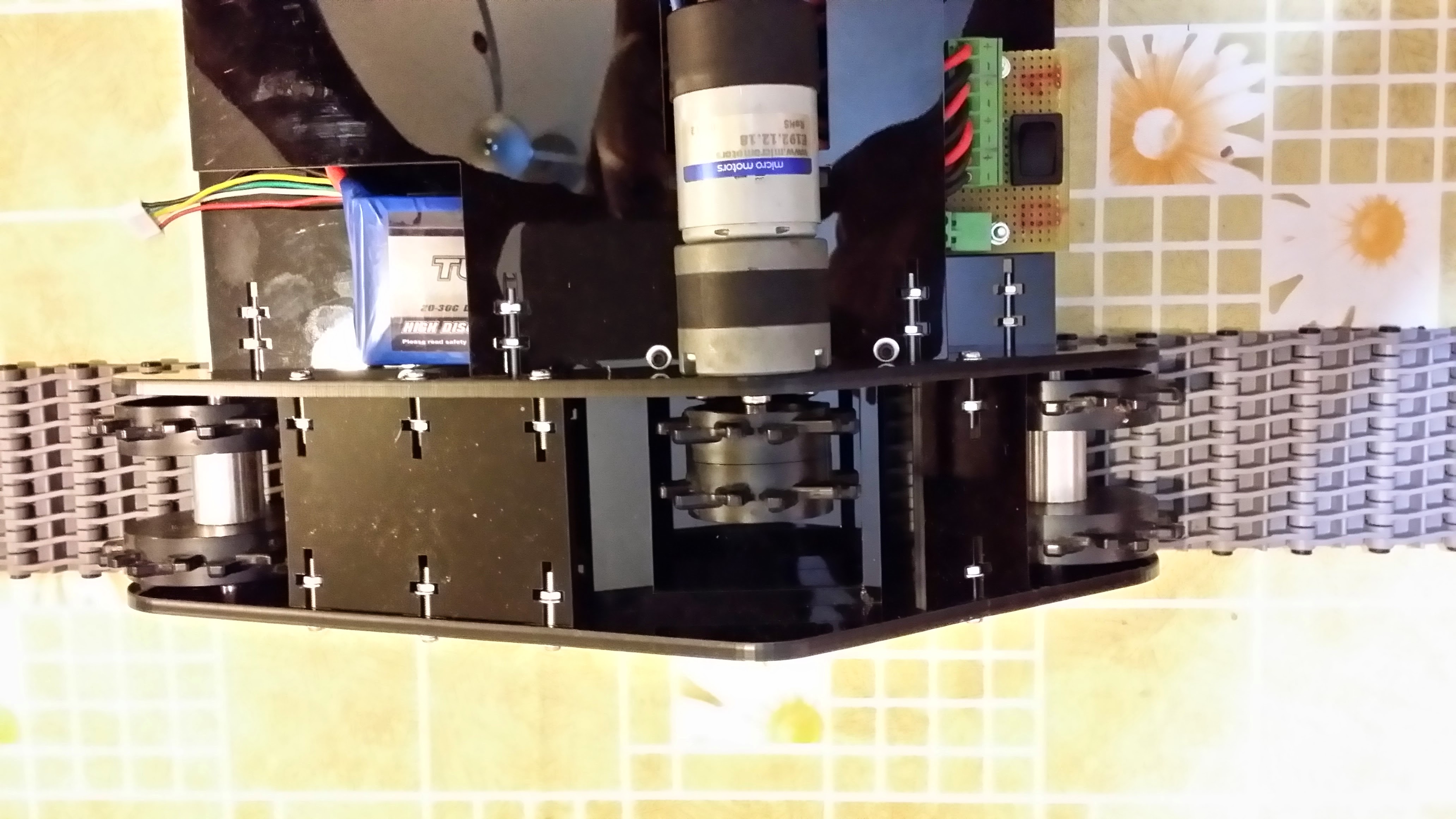

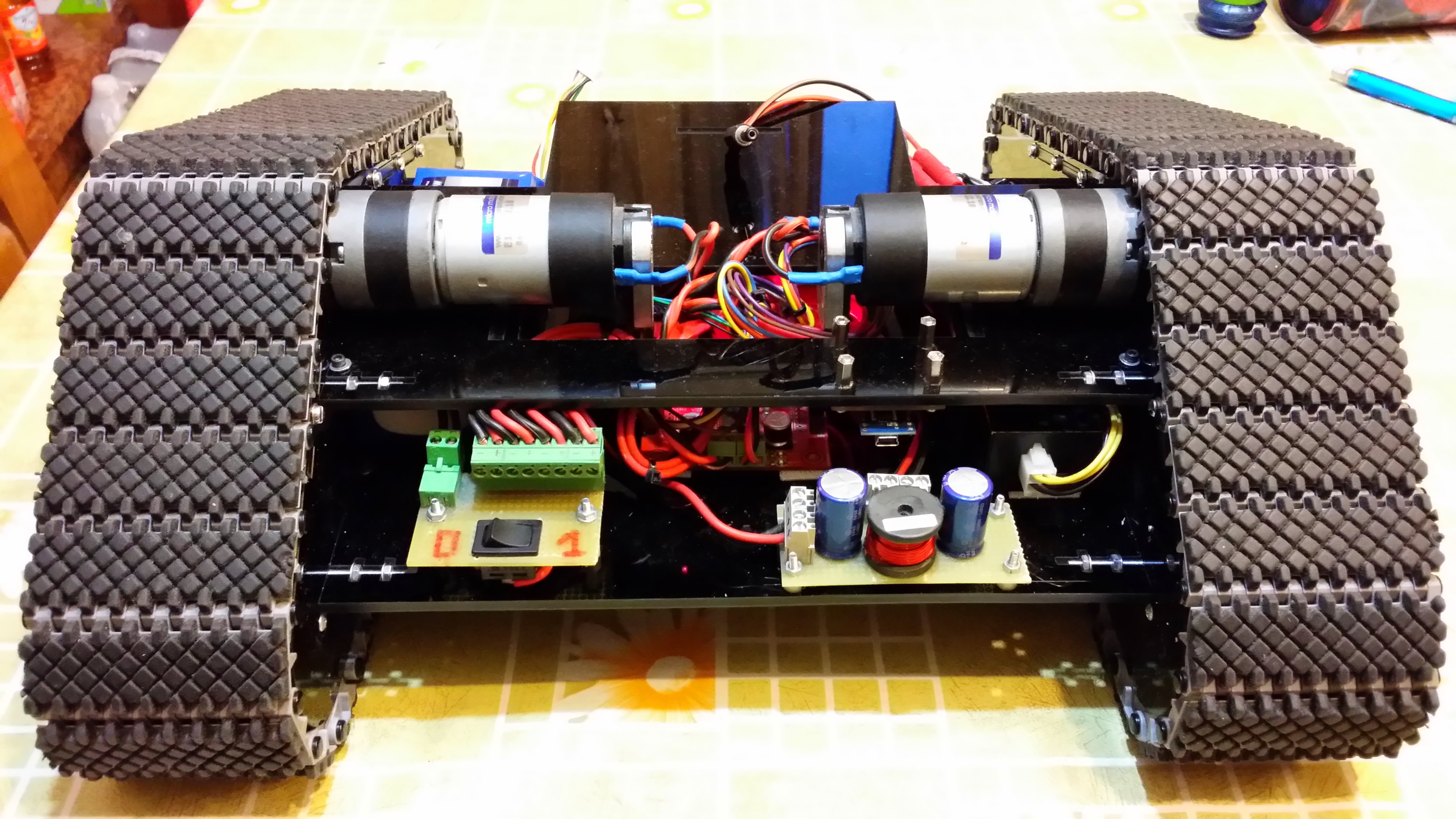

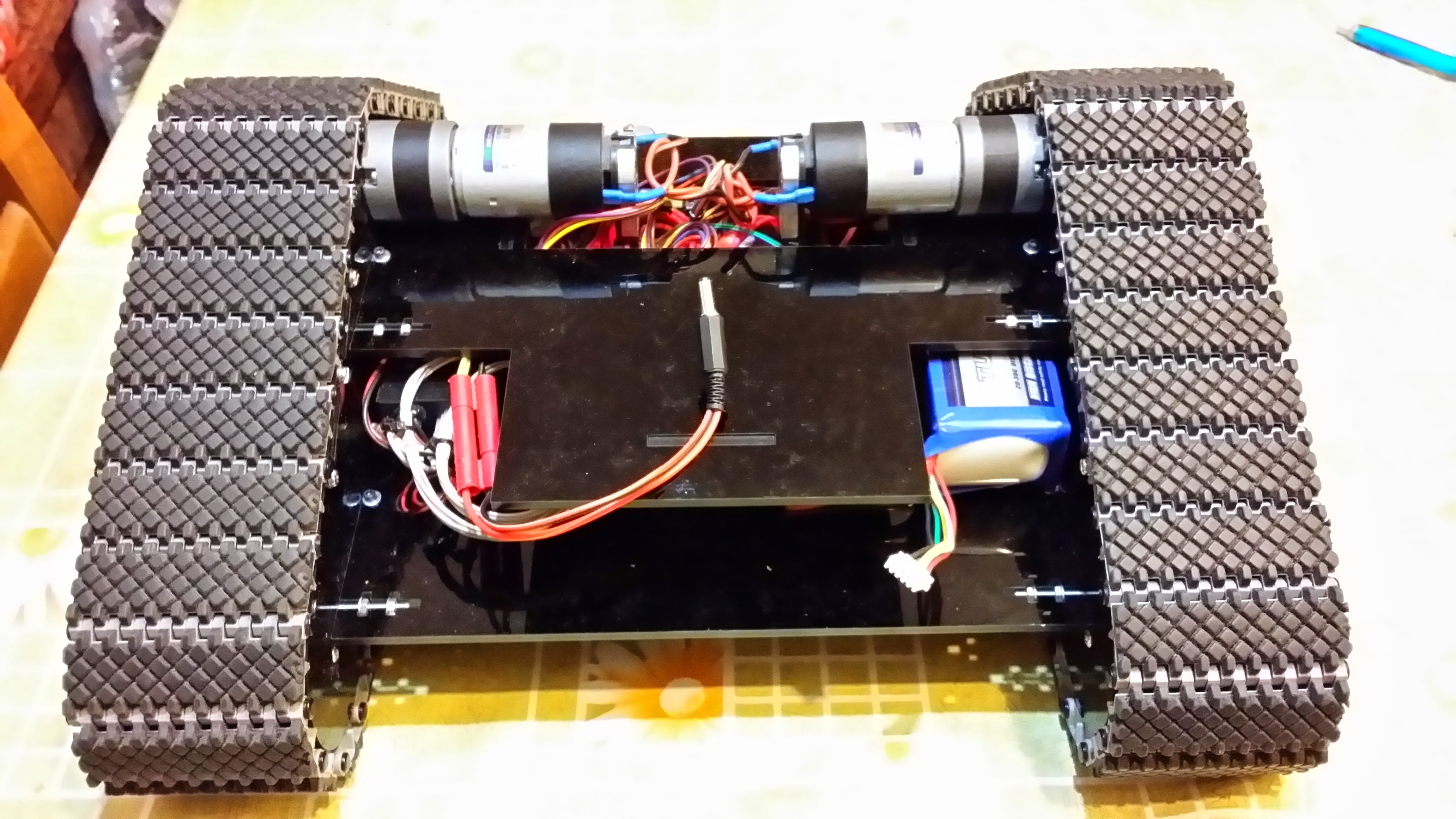

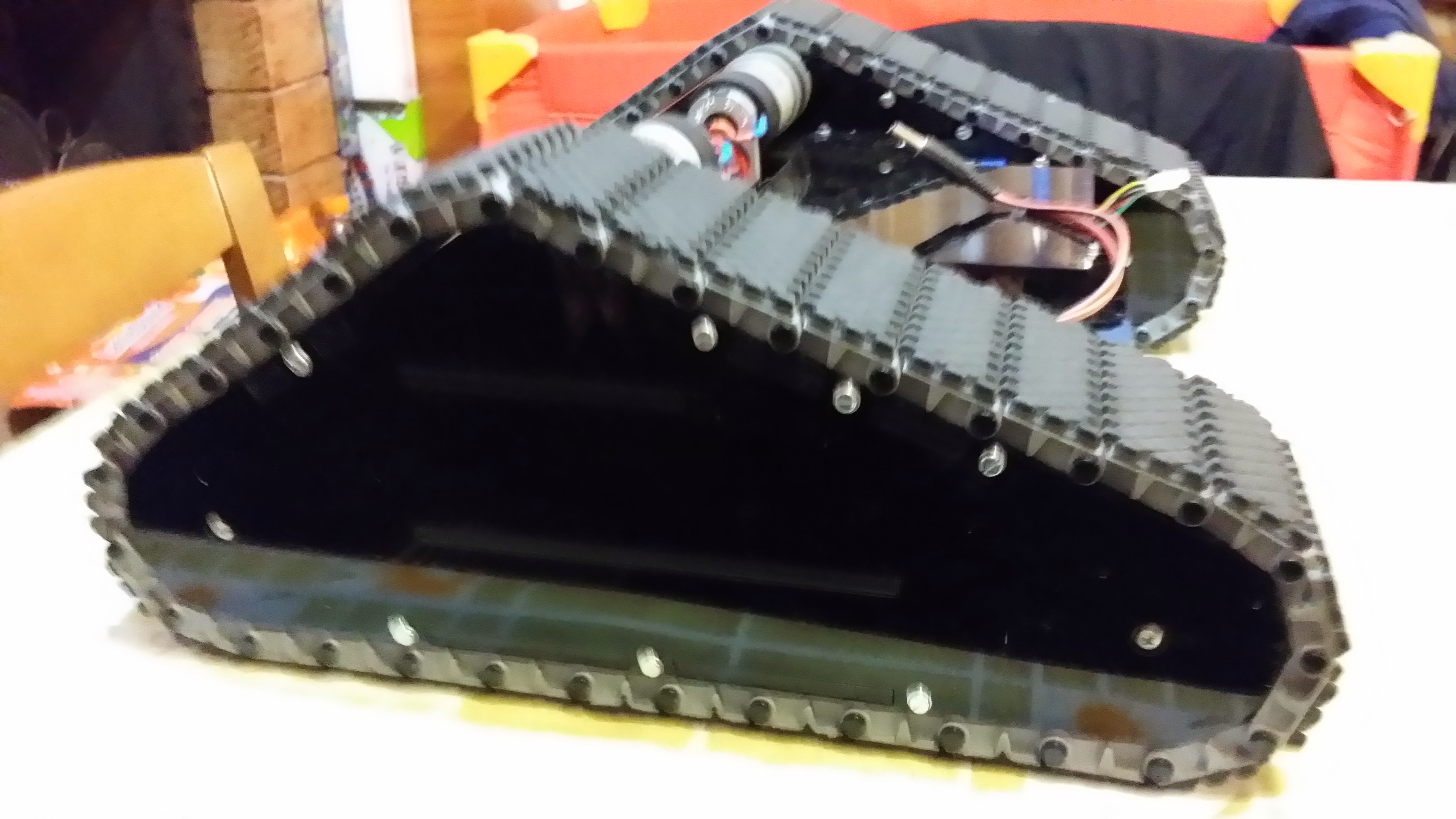

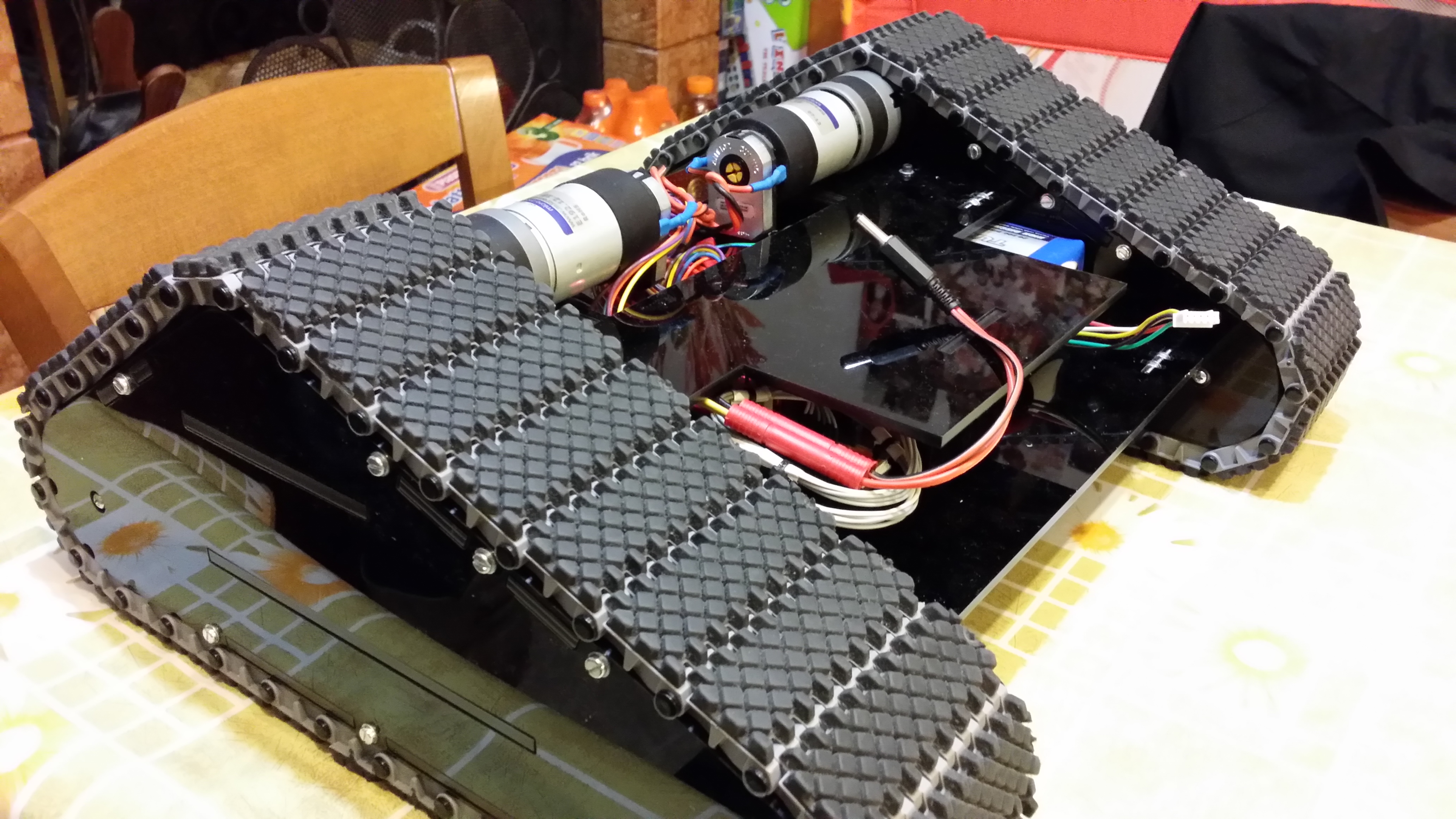

New Layout

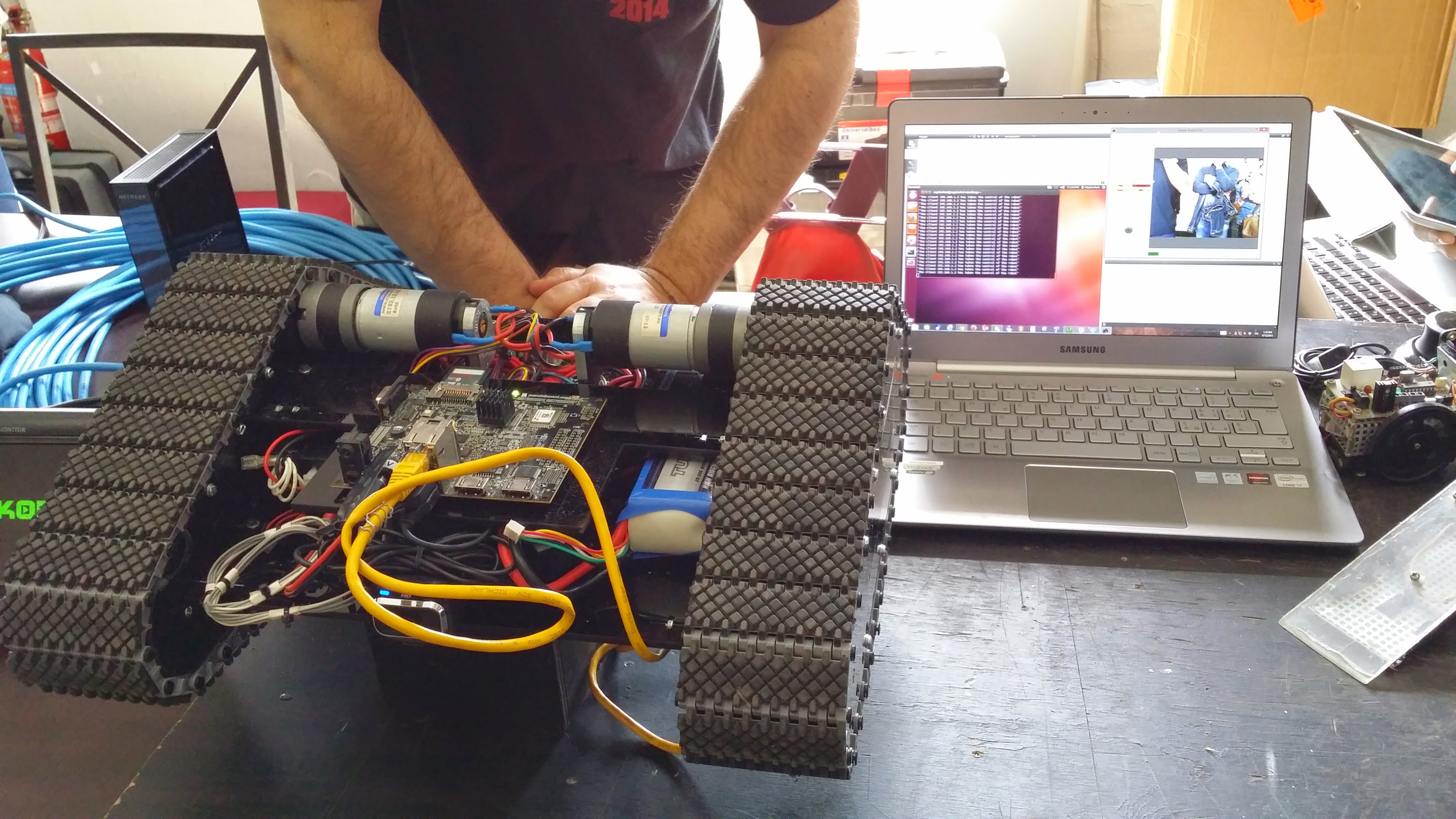

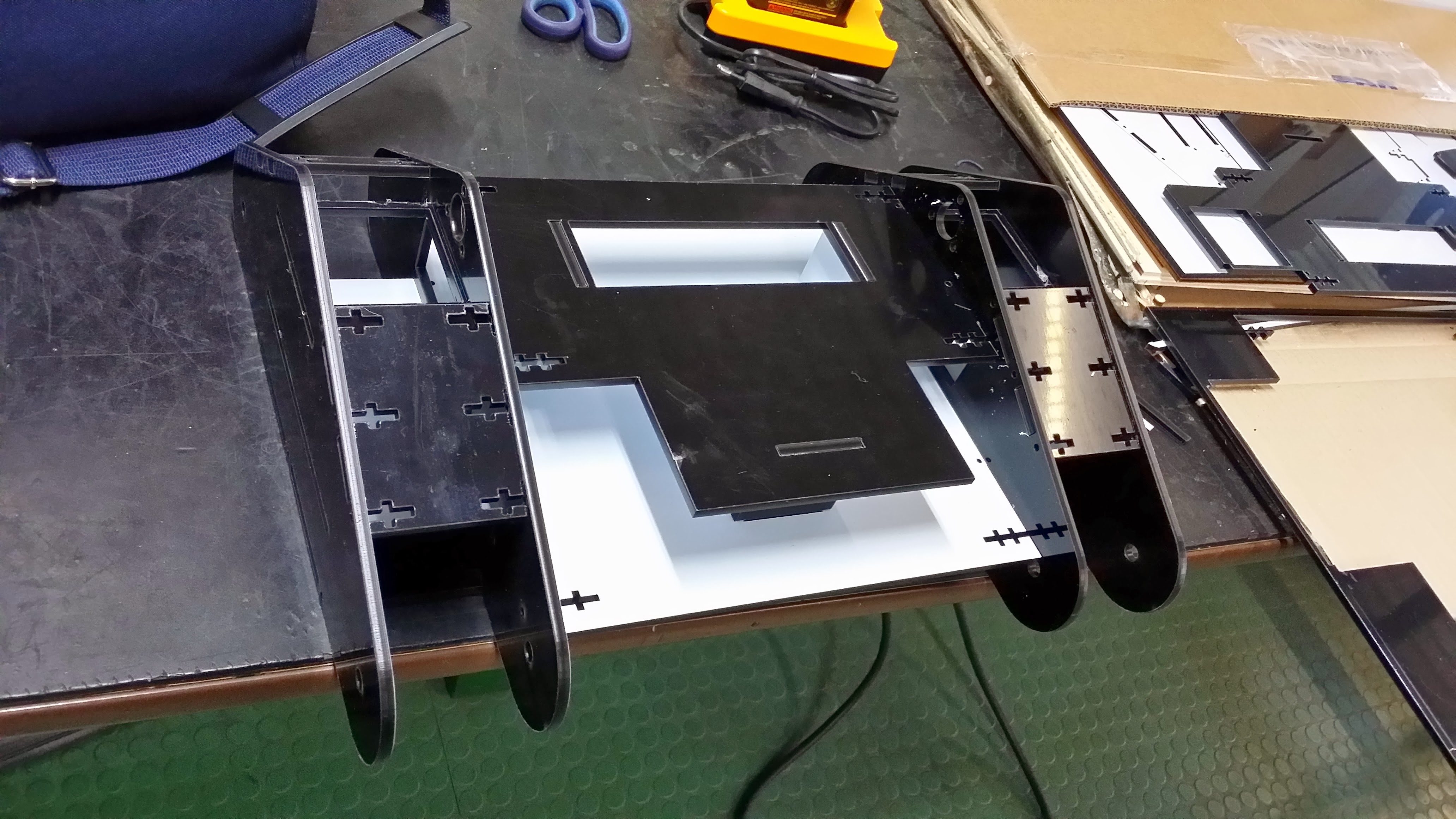

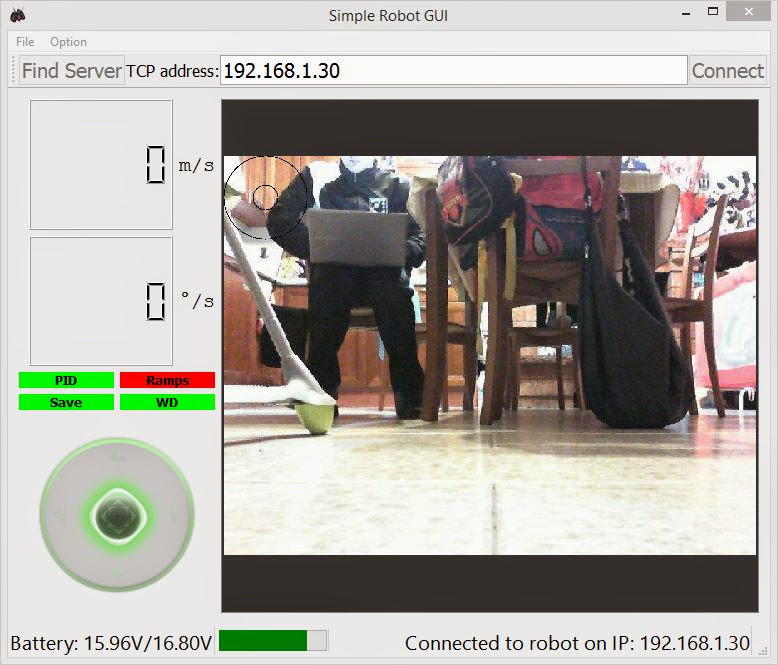

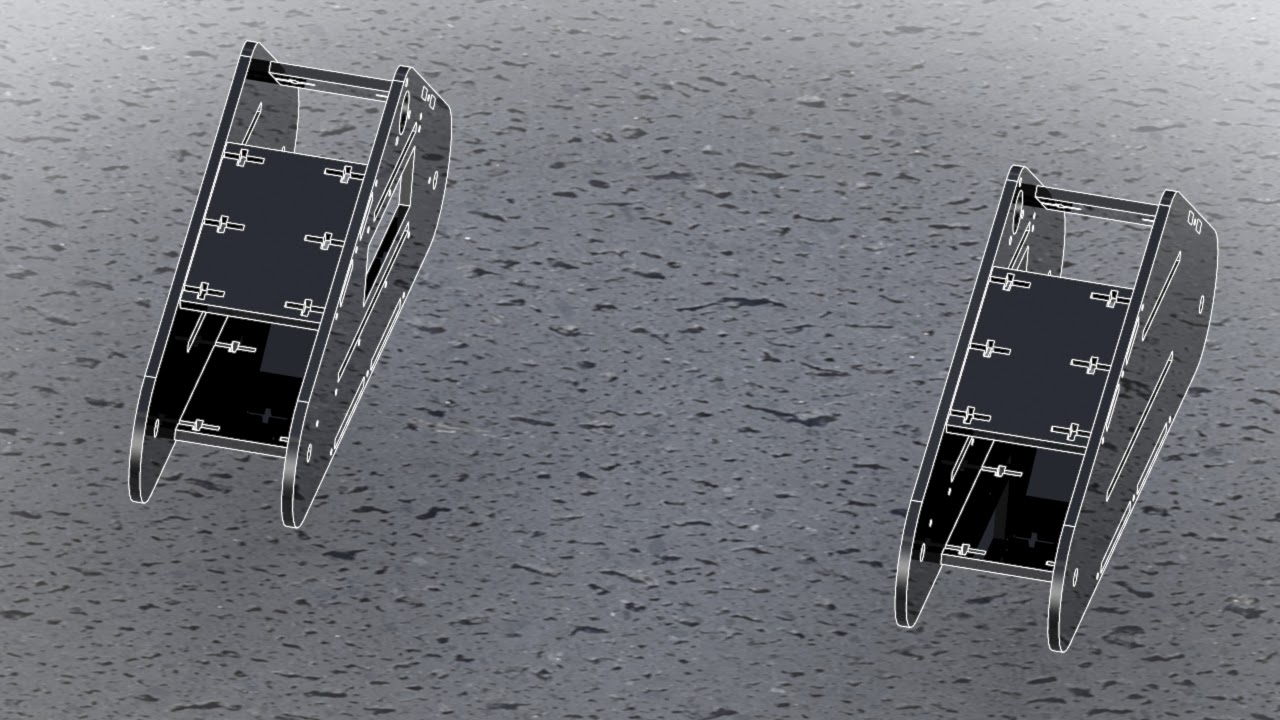

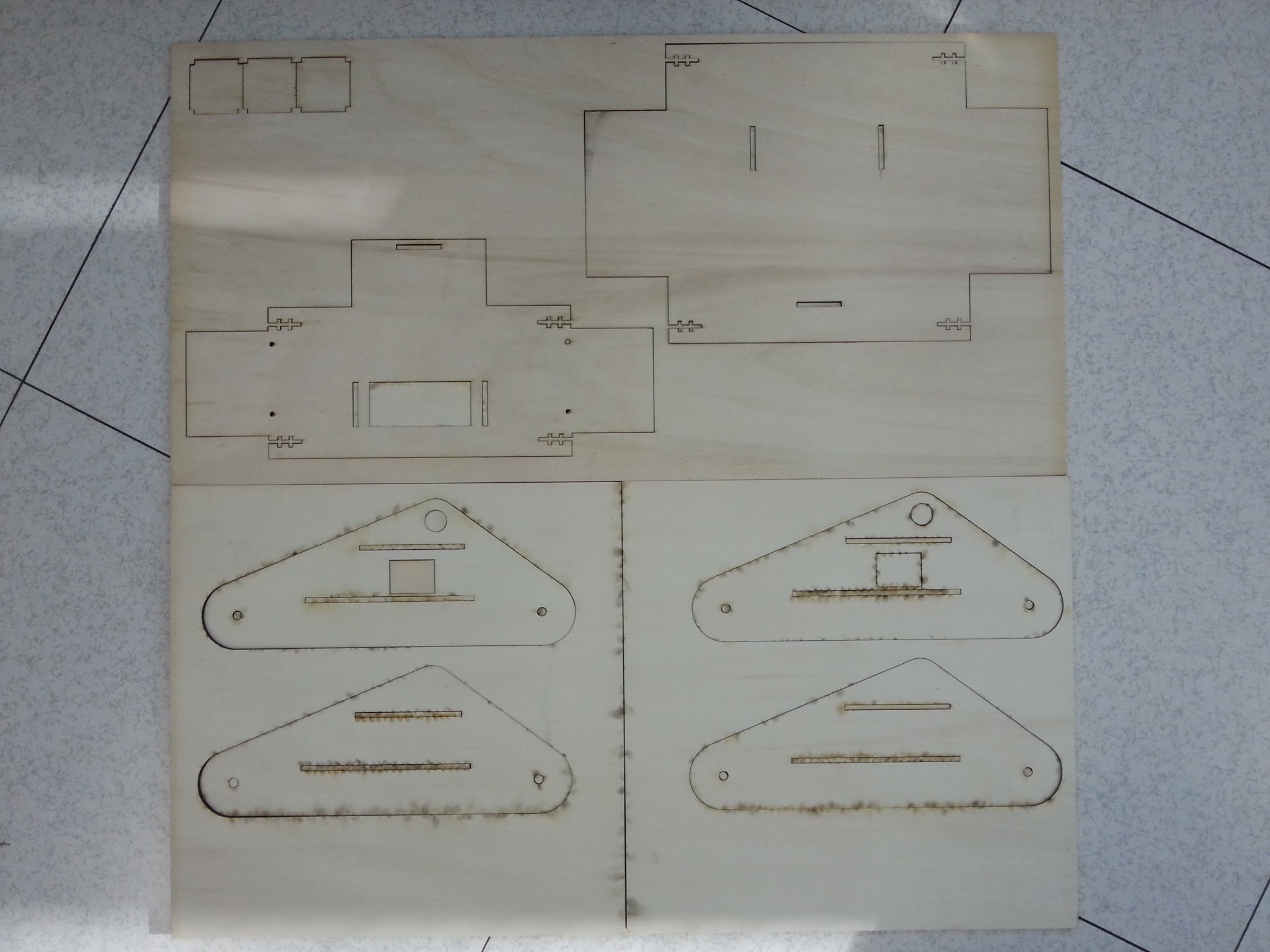

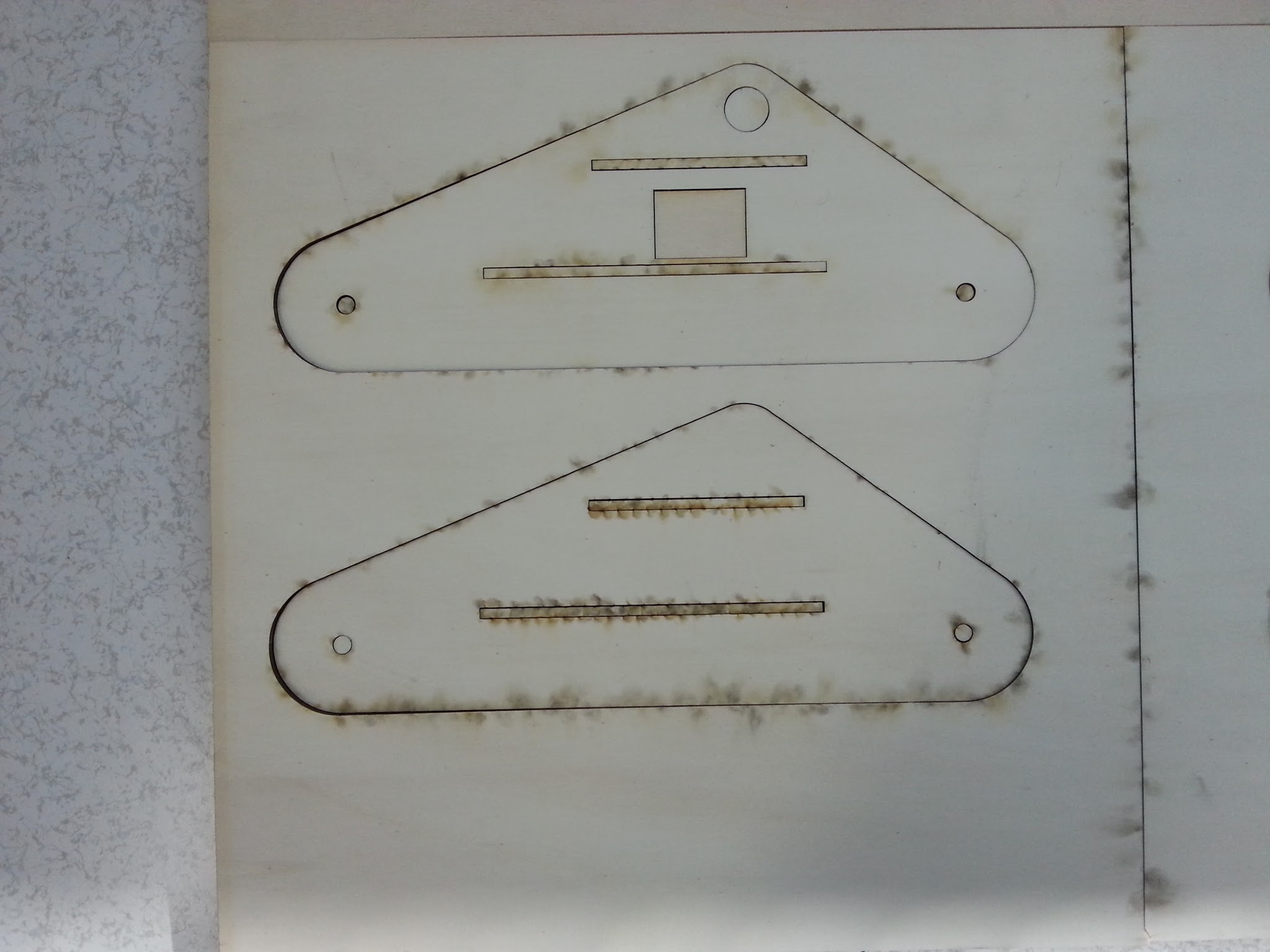

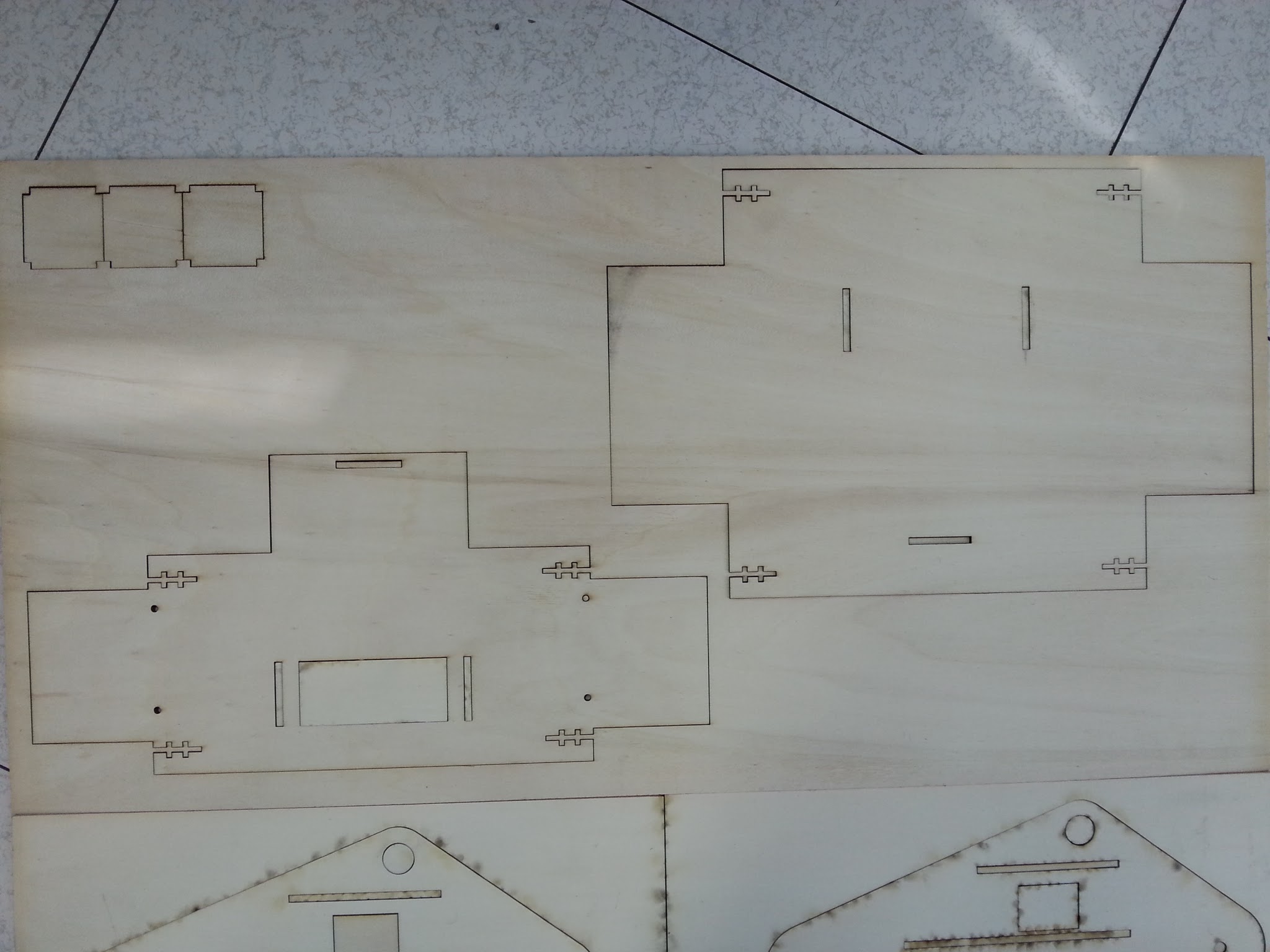

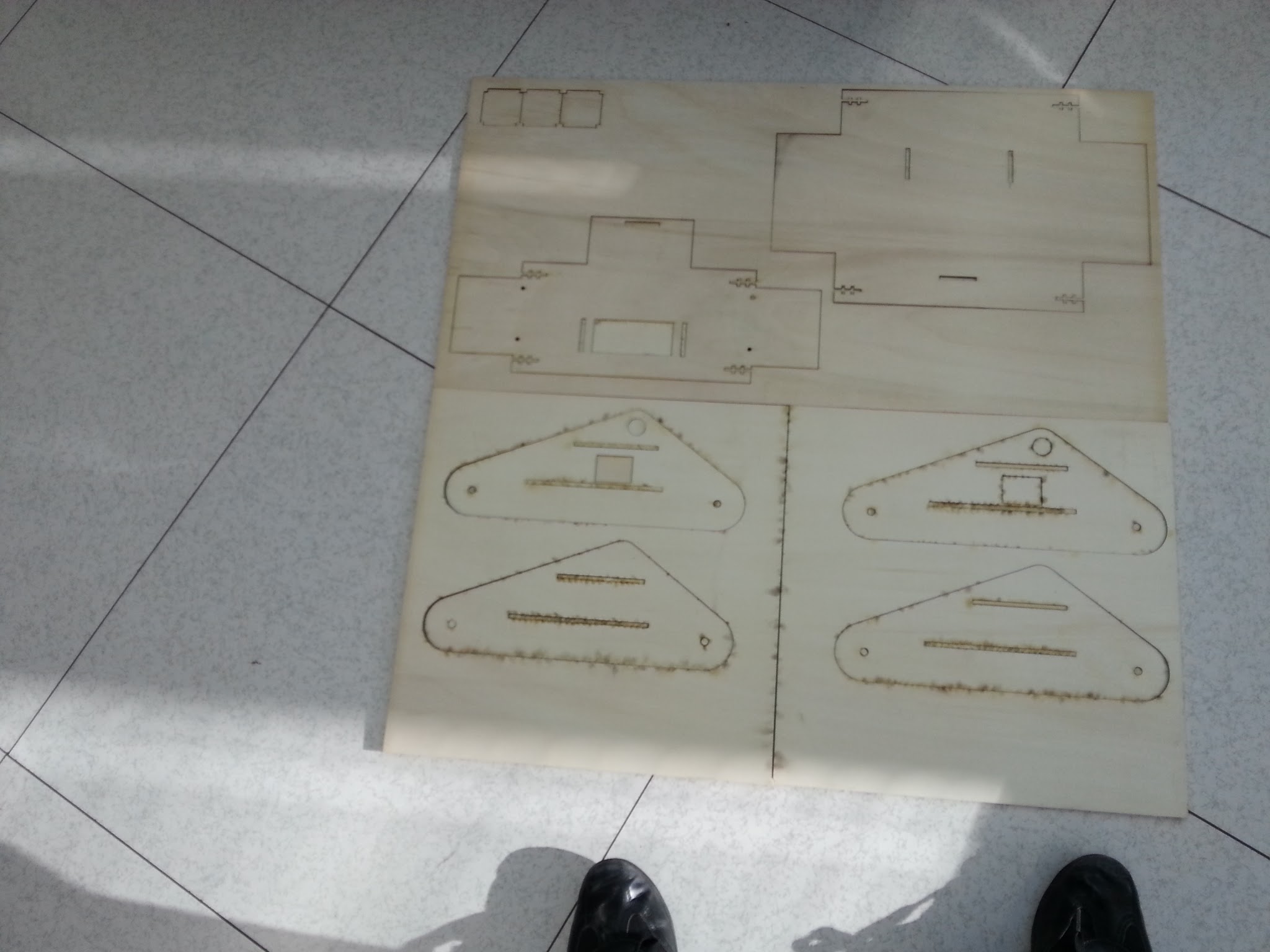

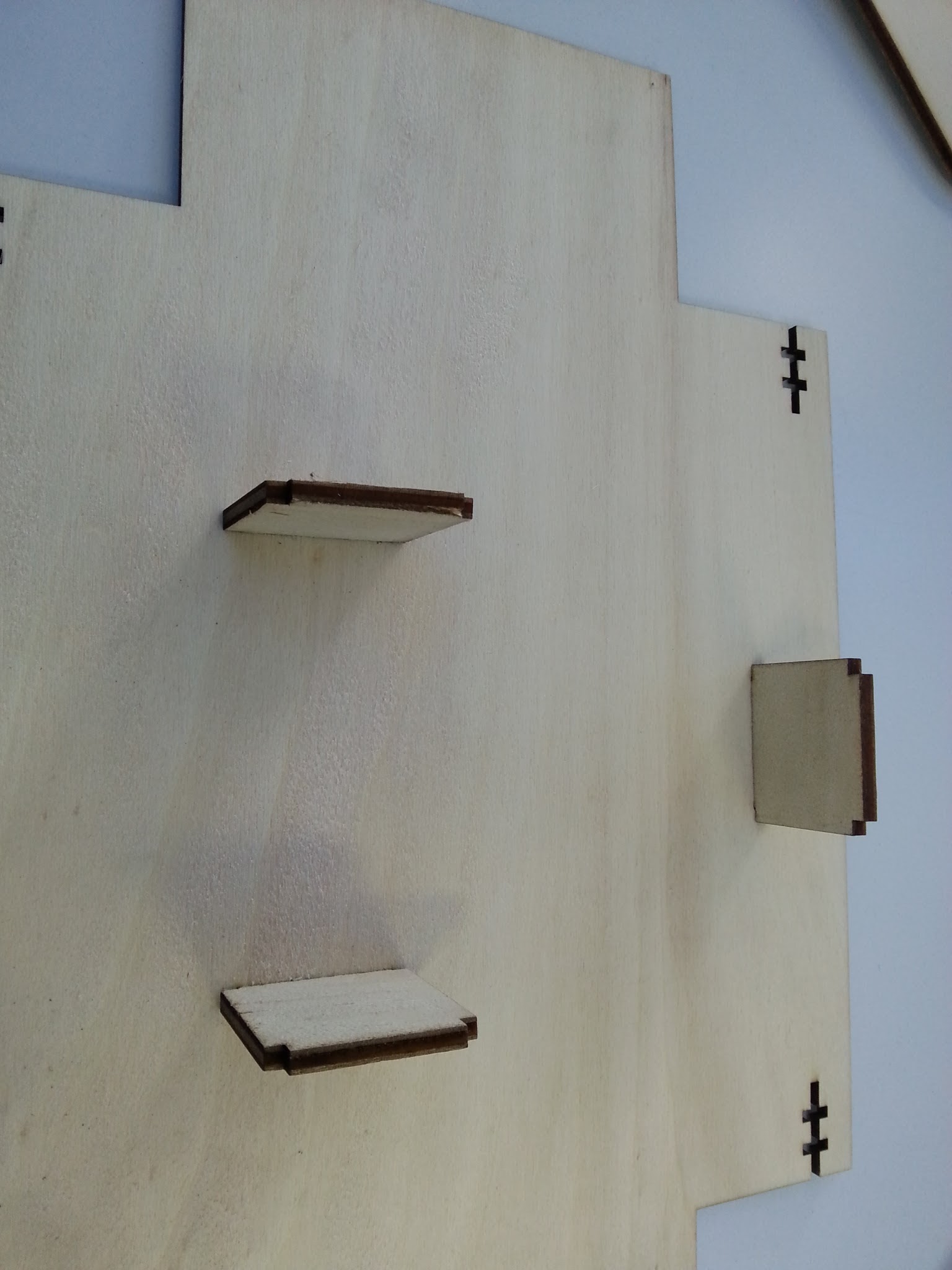

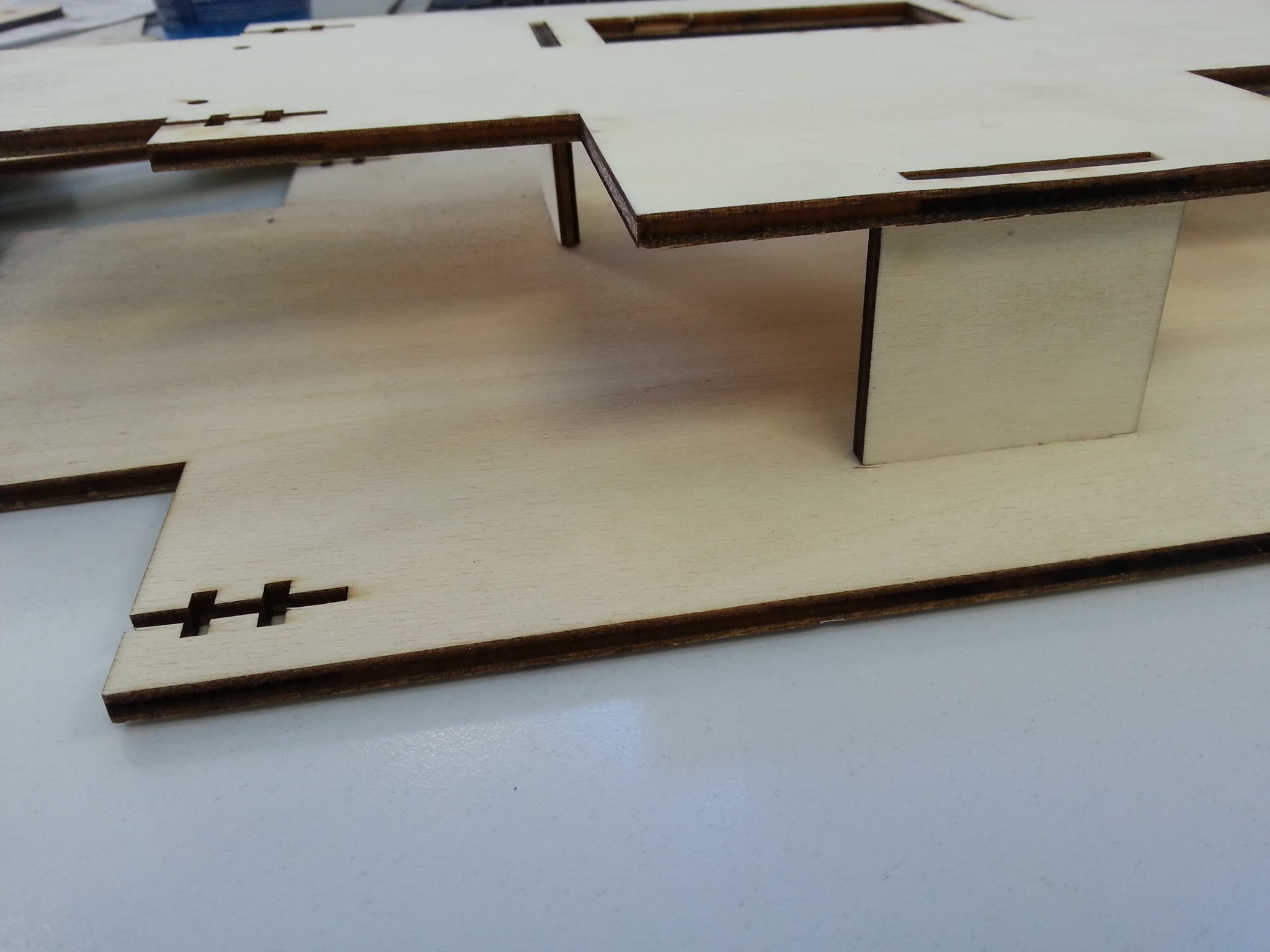

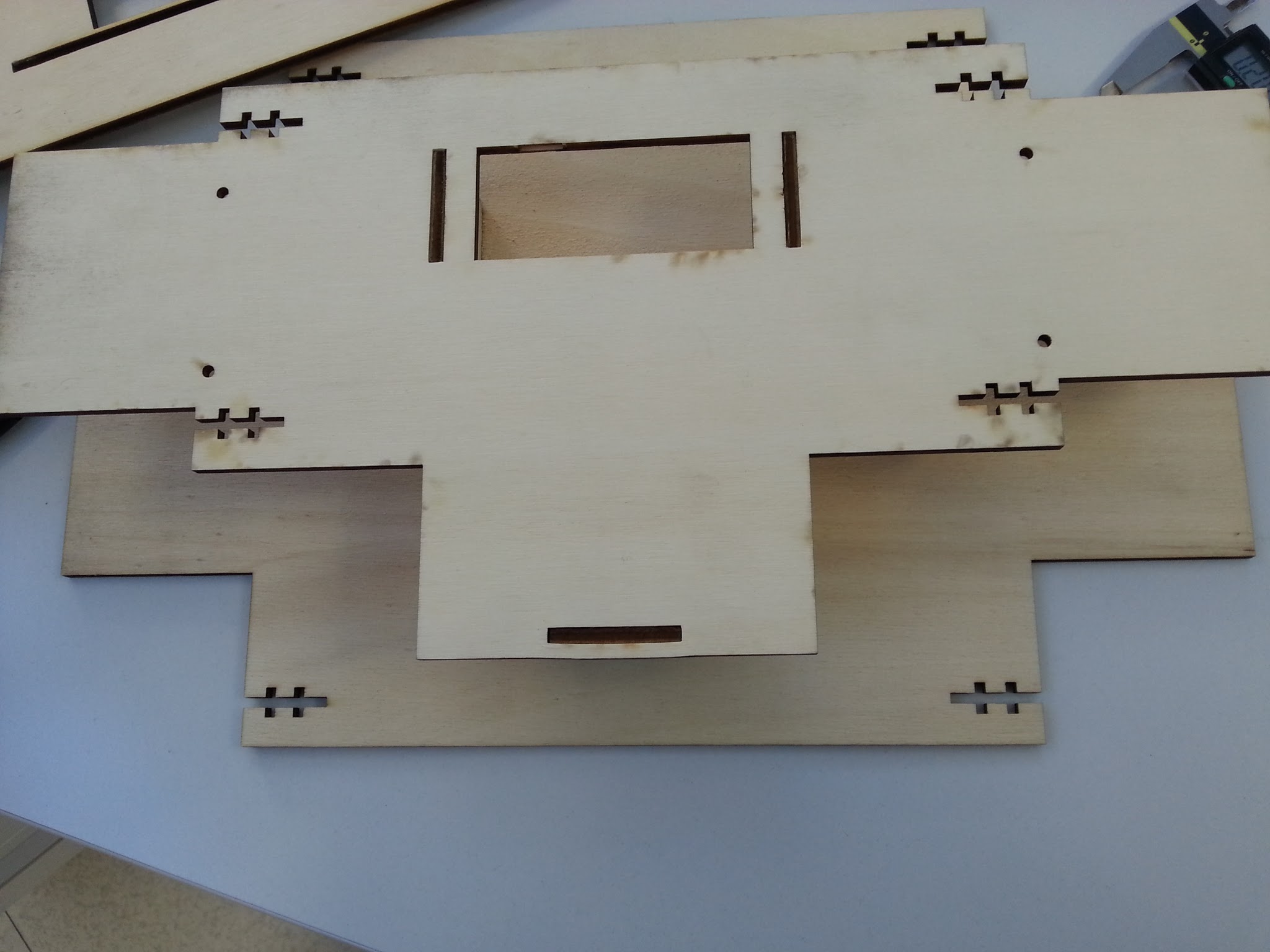

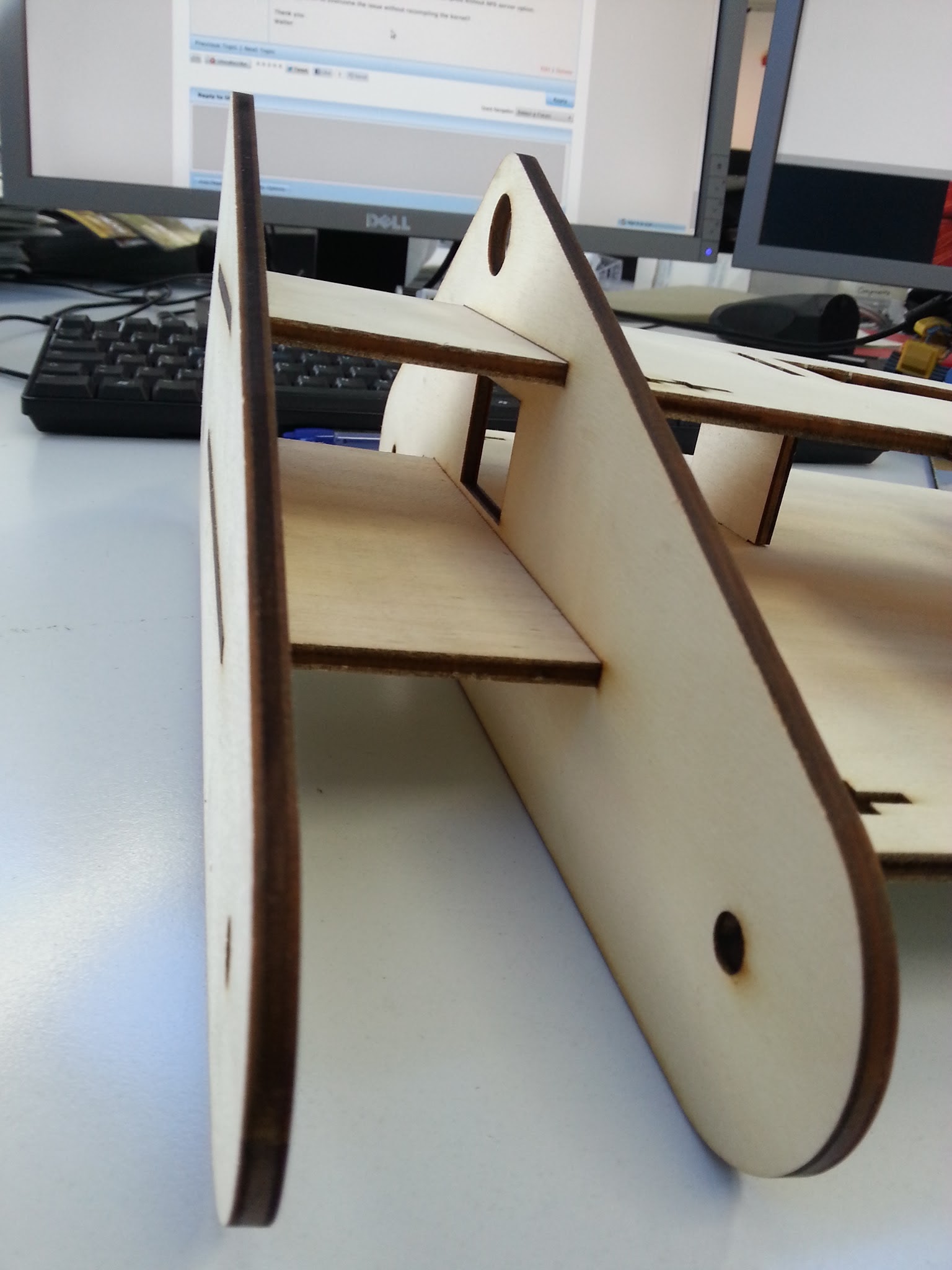

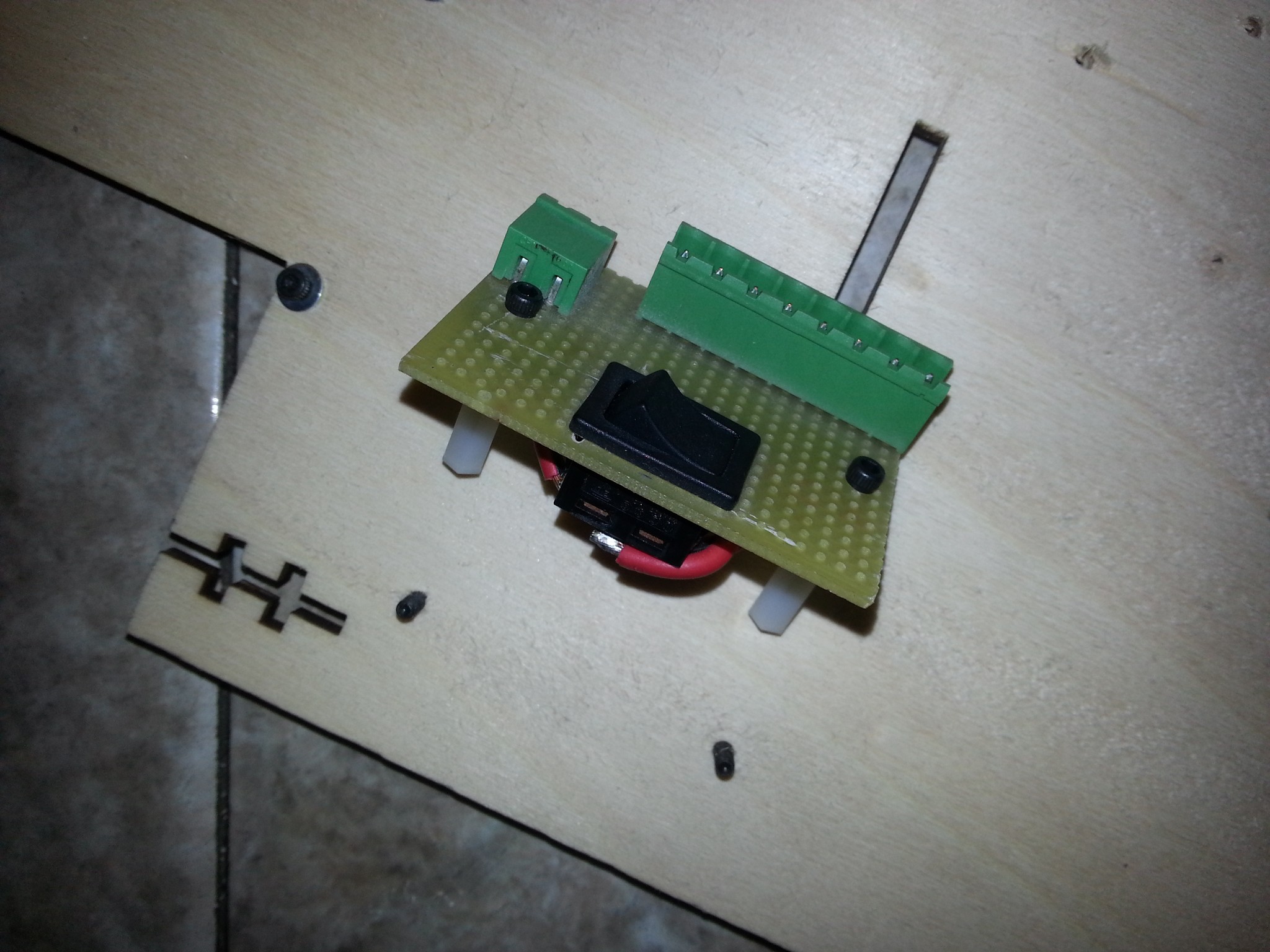

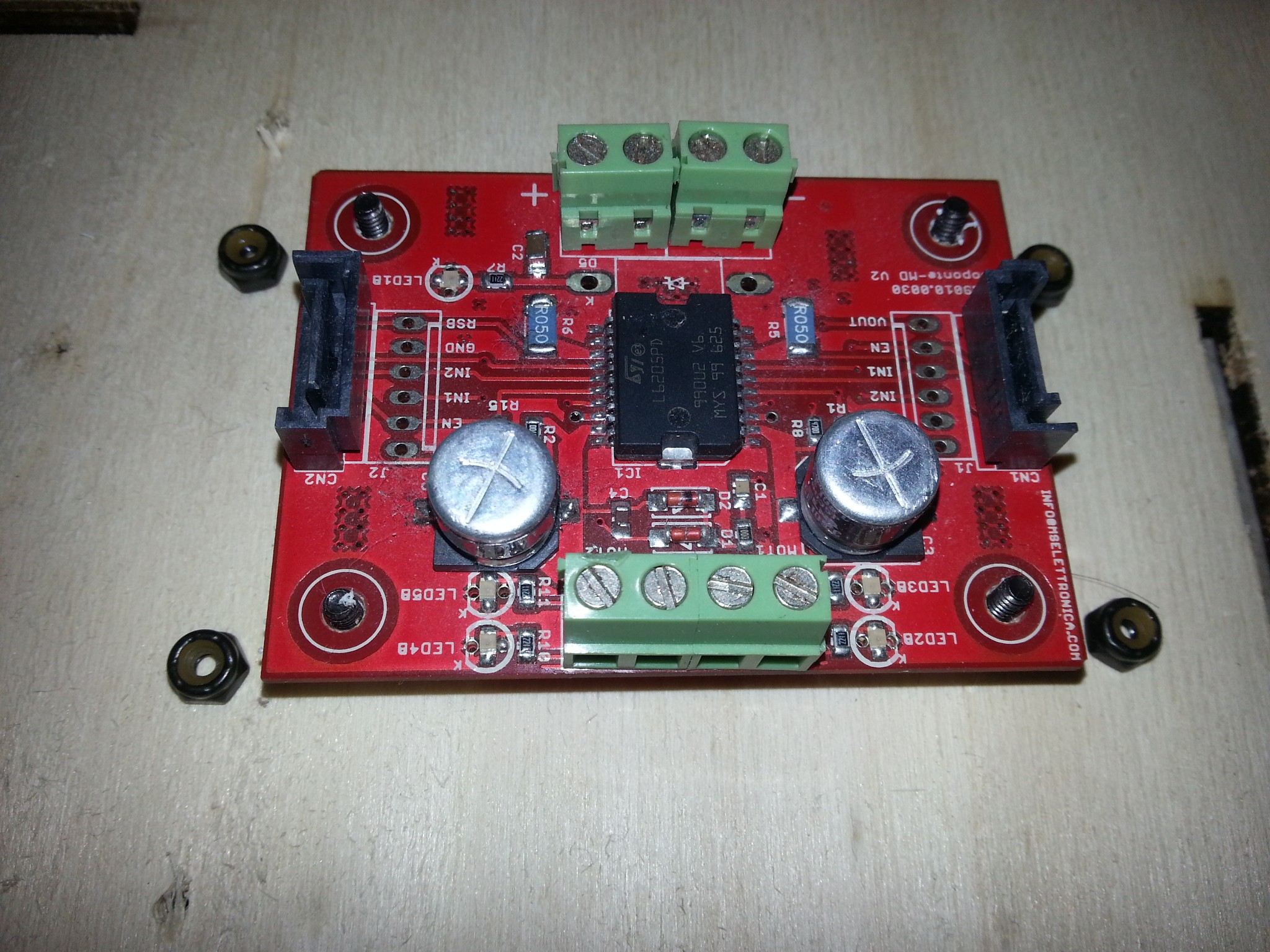

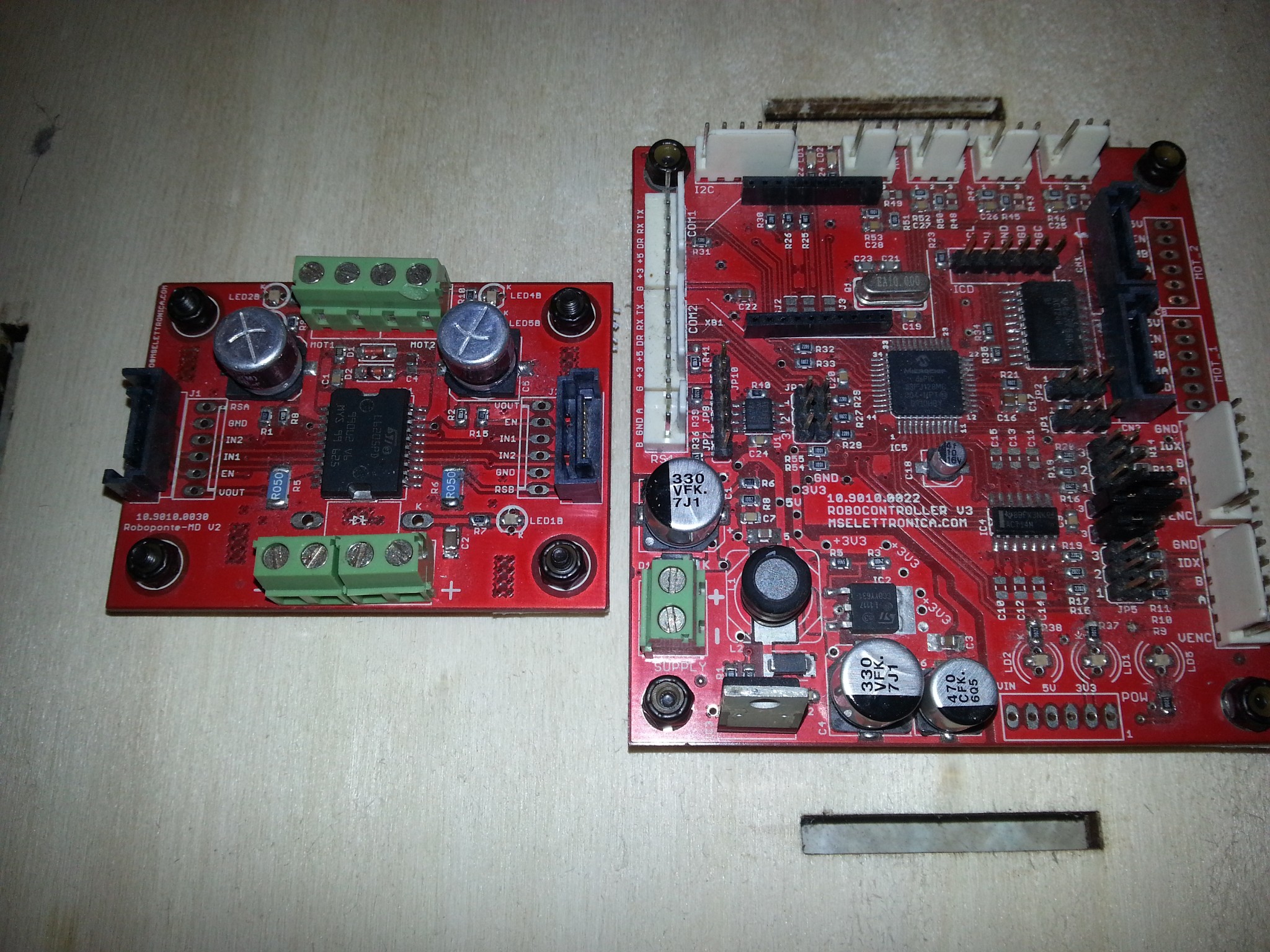

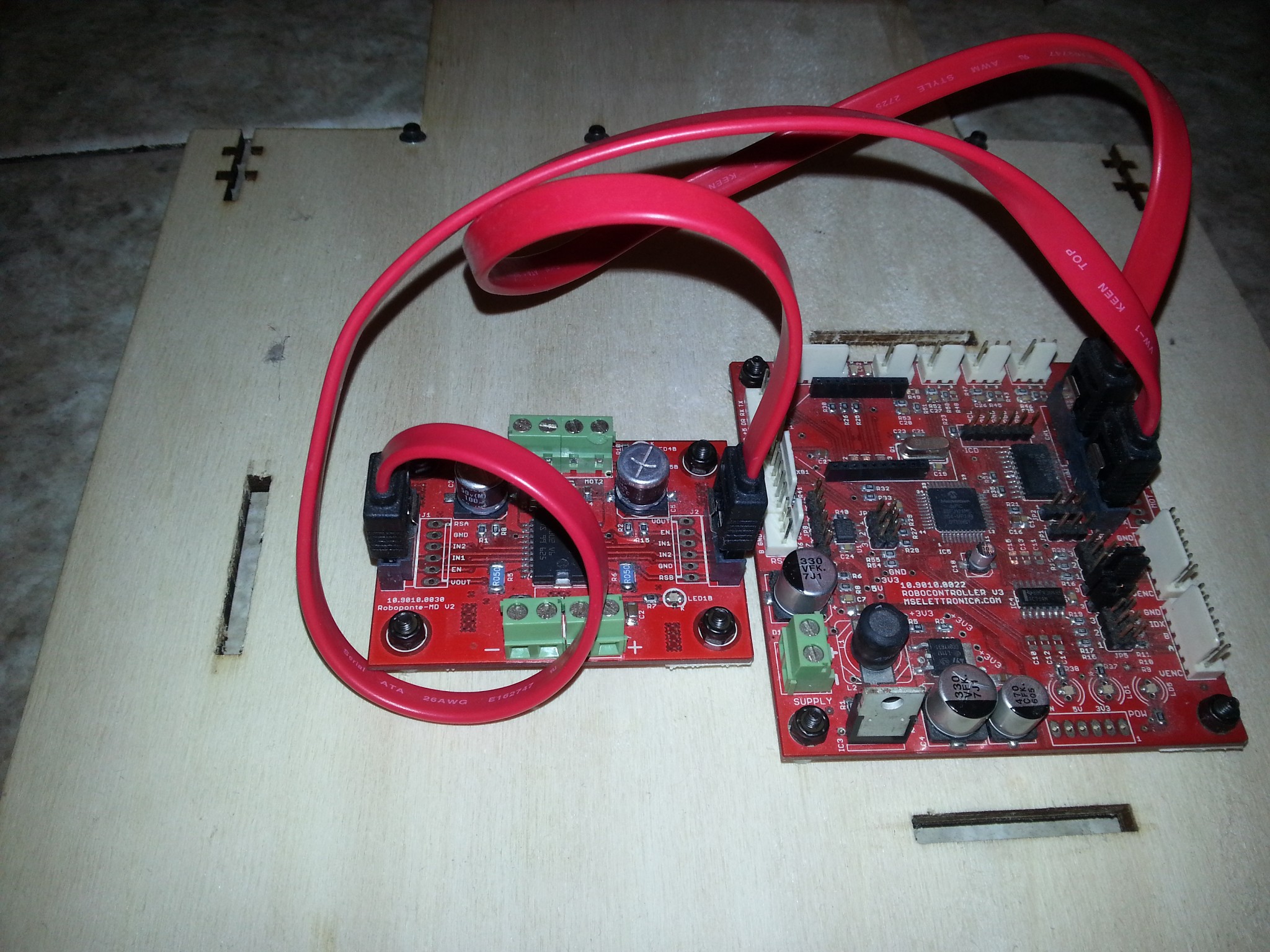

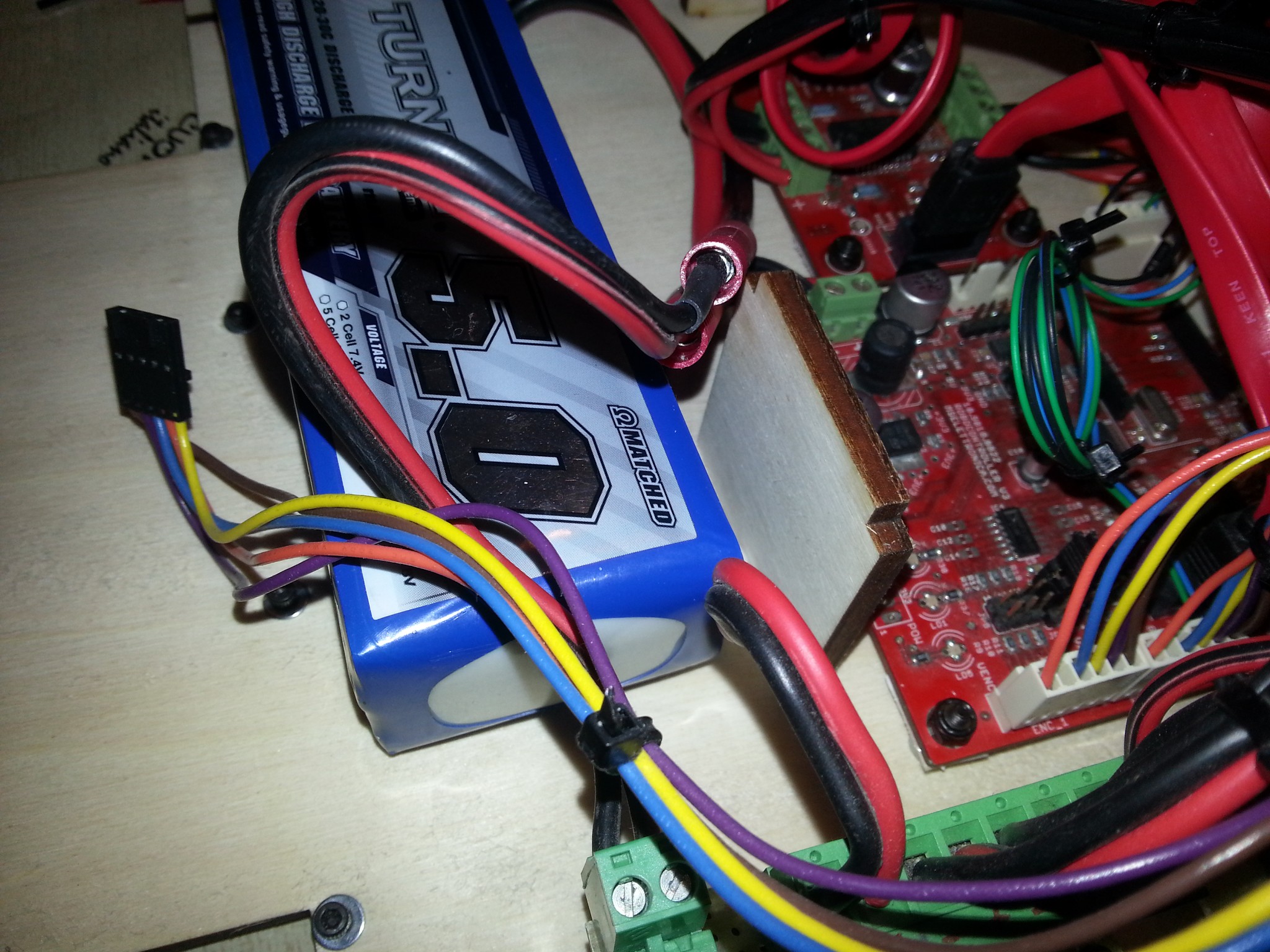

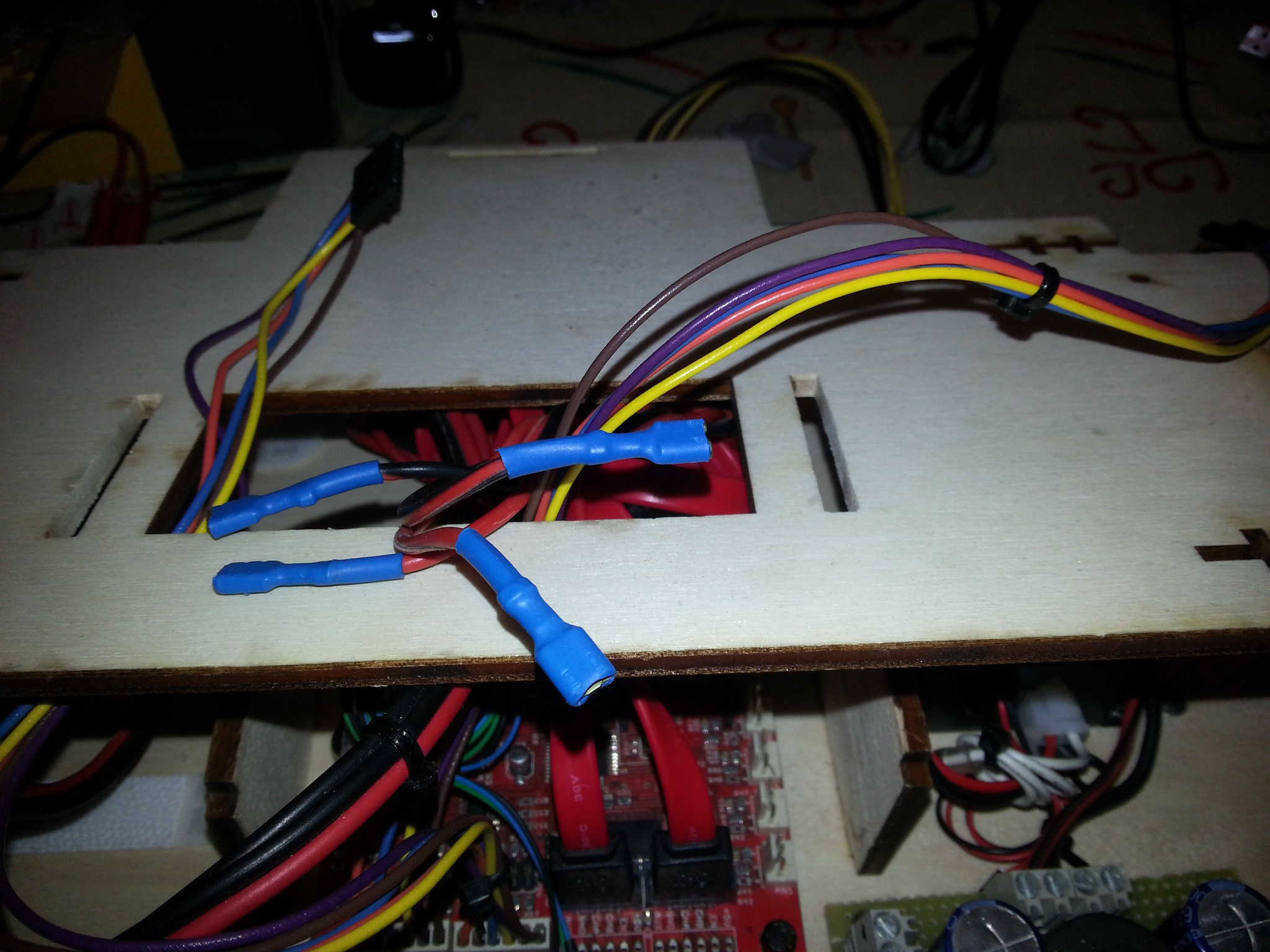

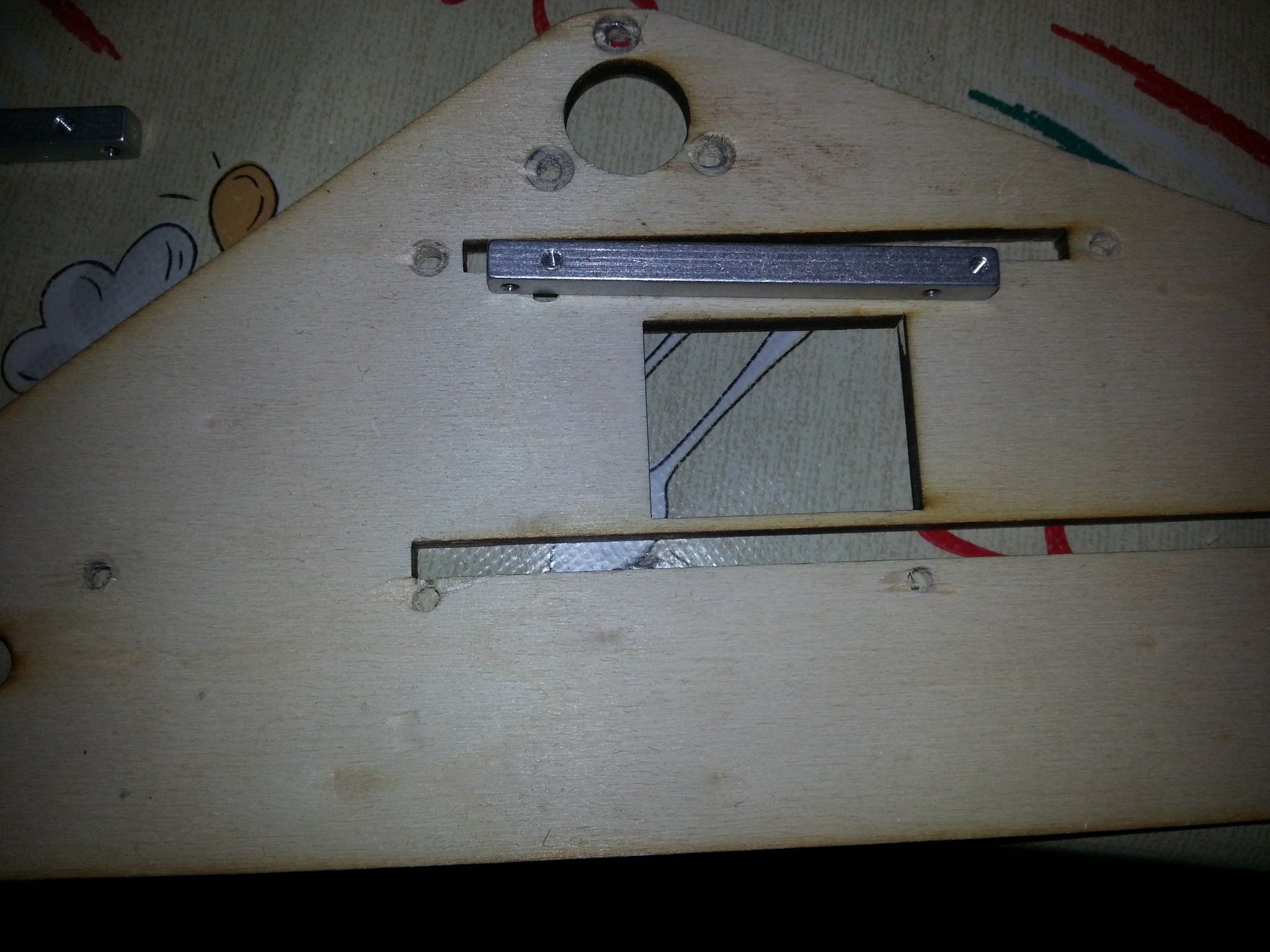

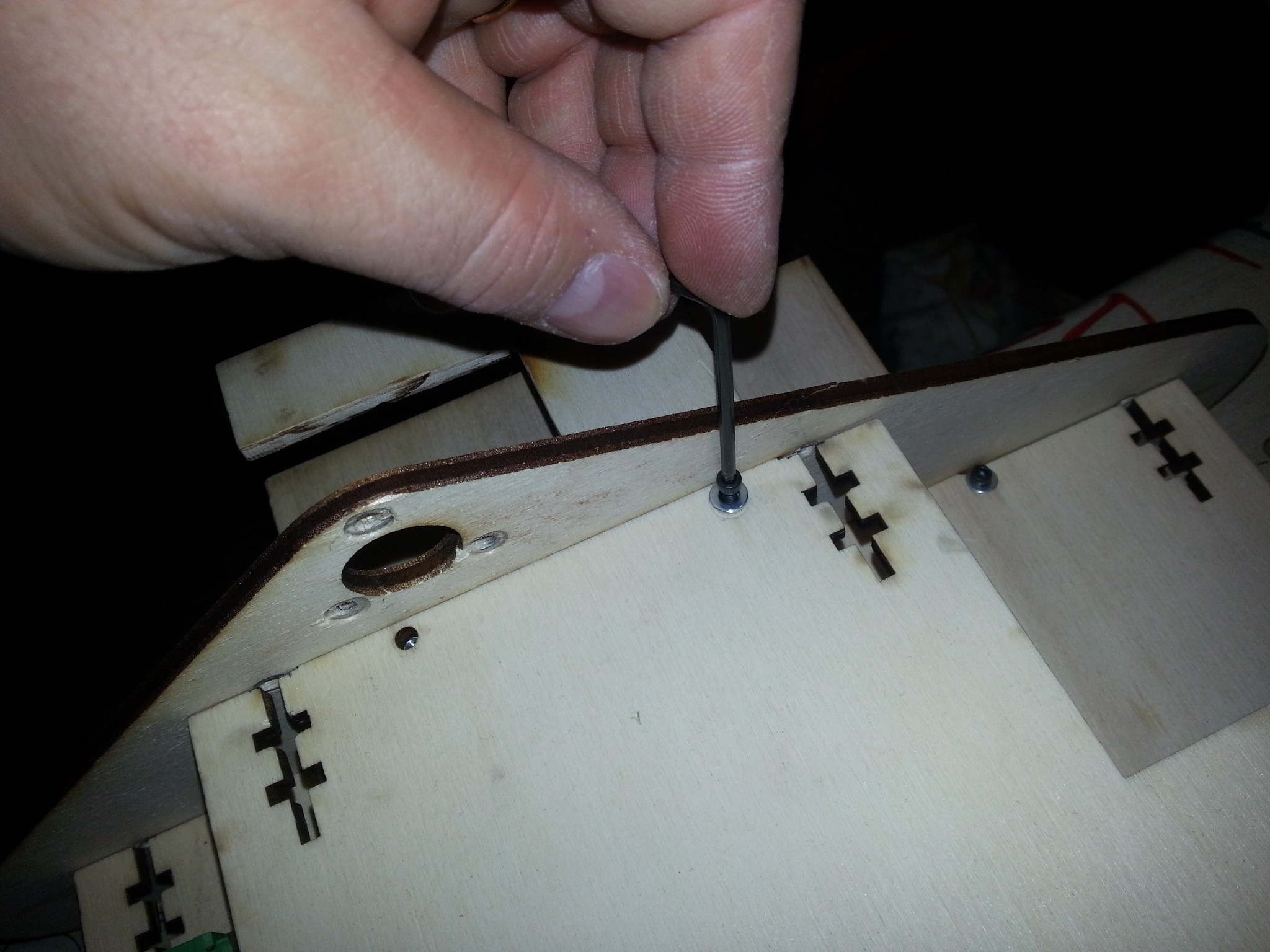

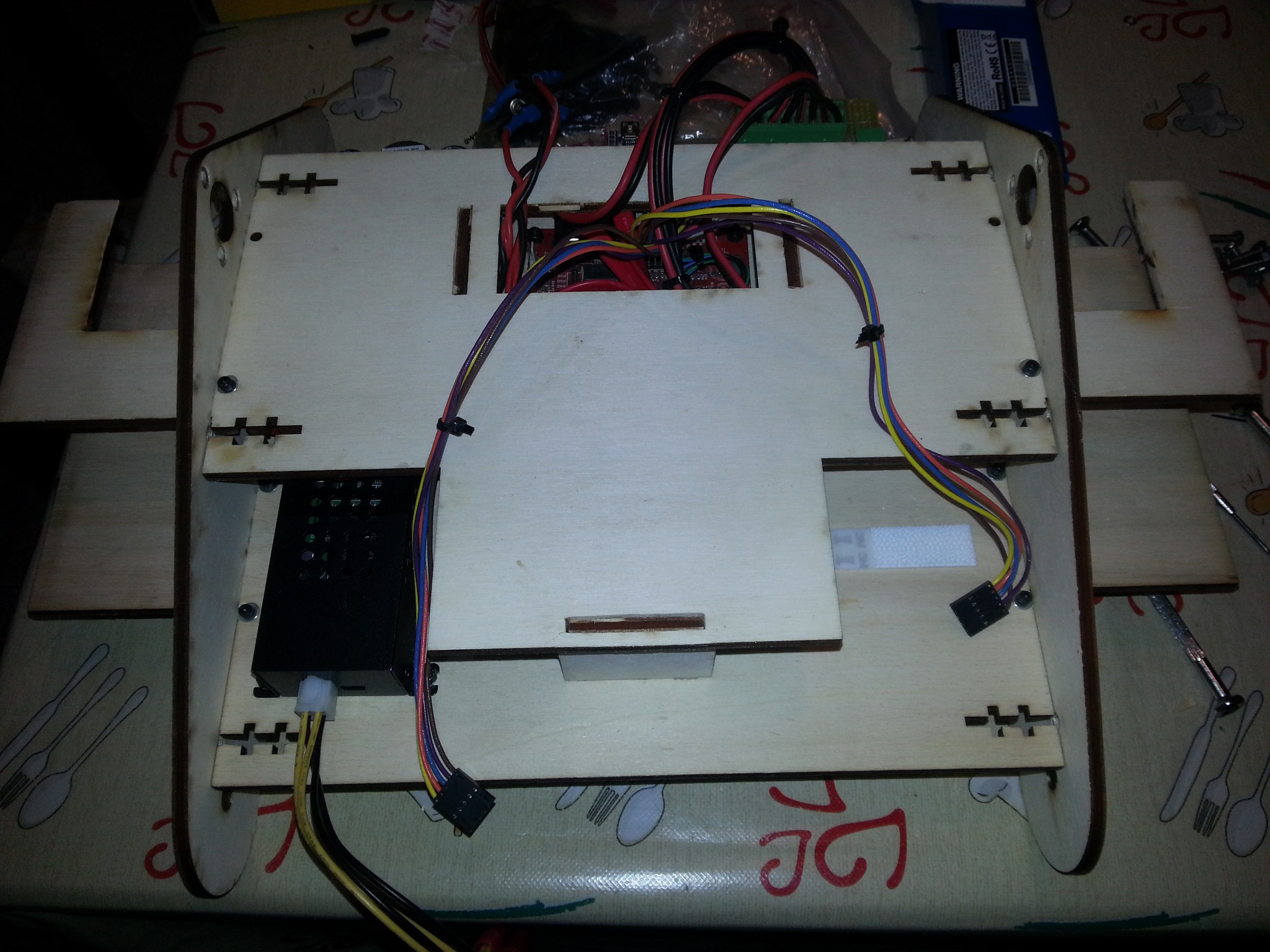

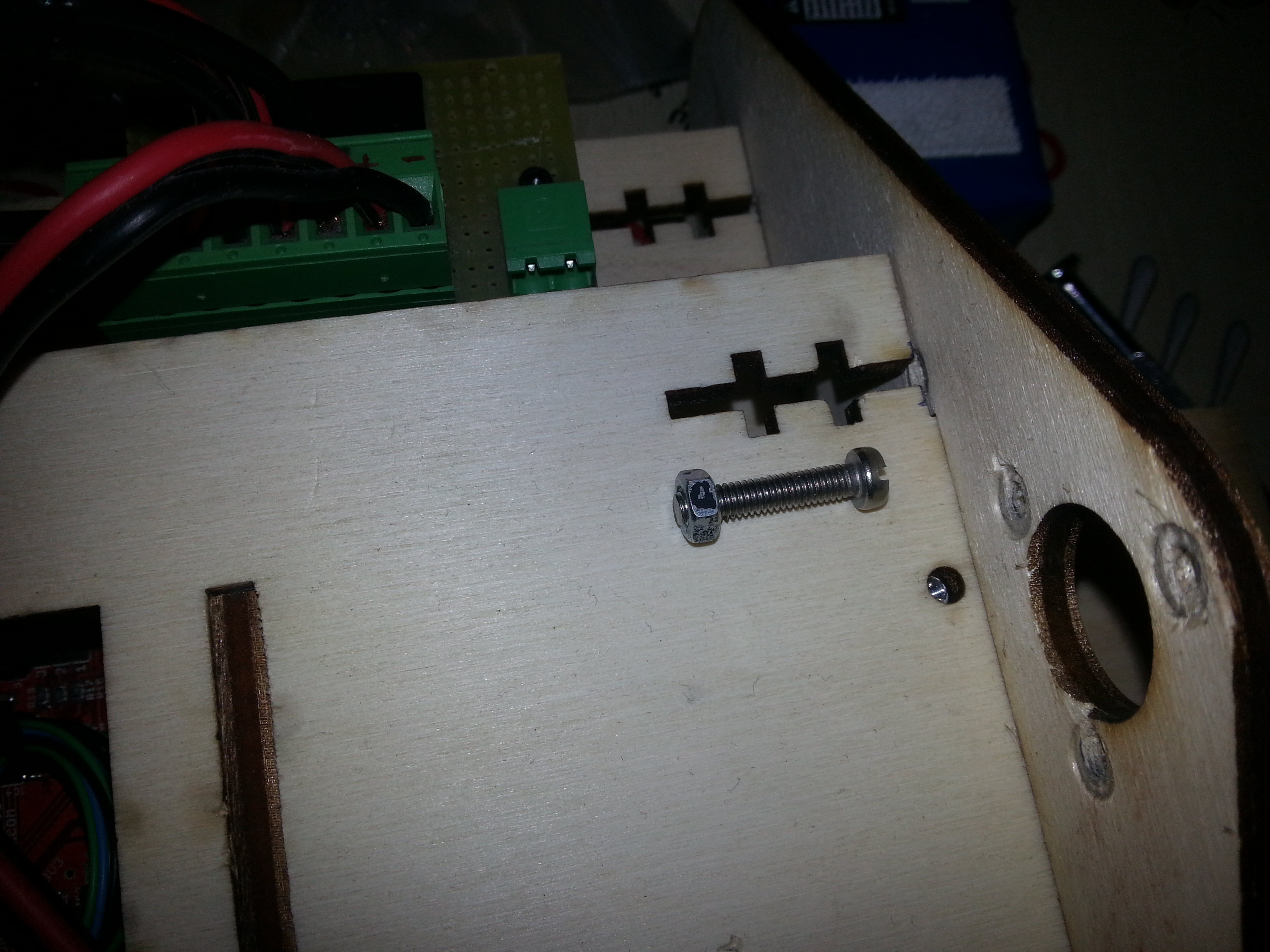

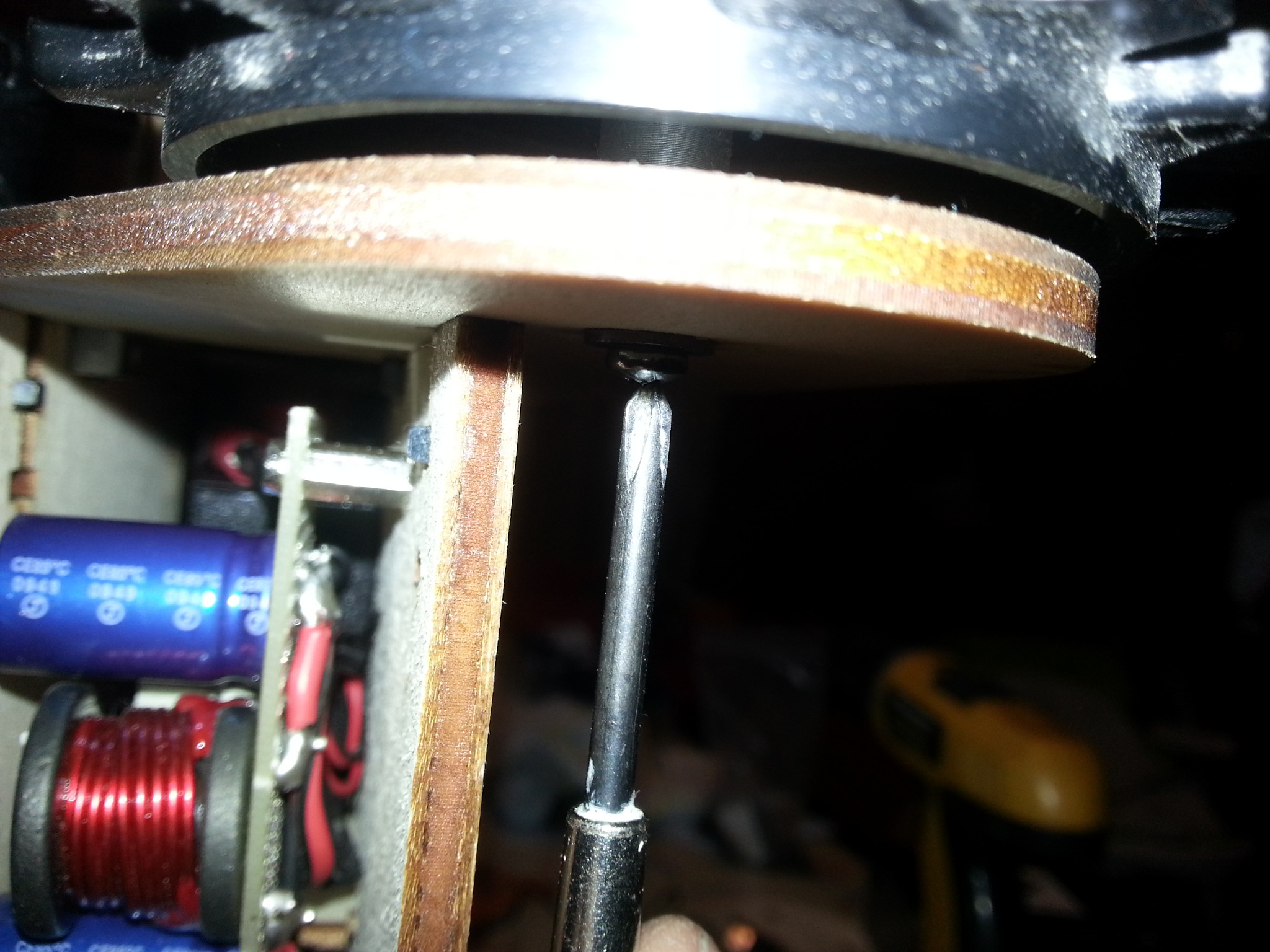

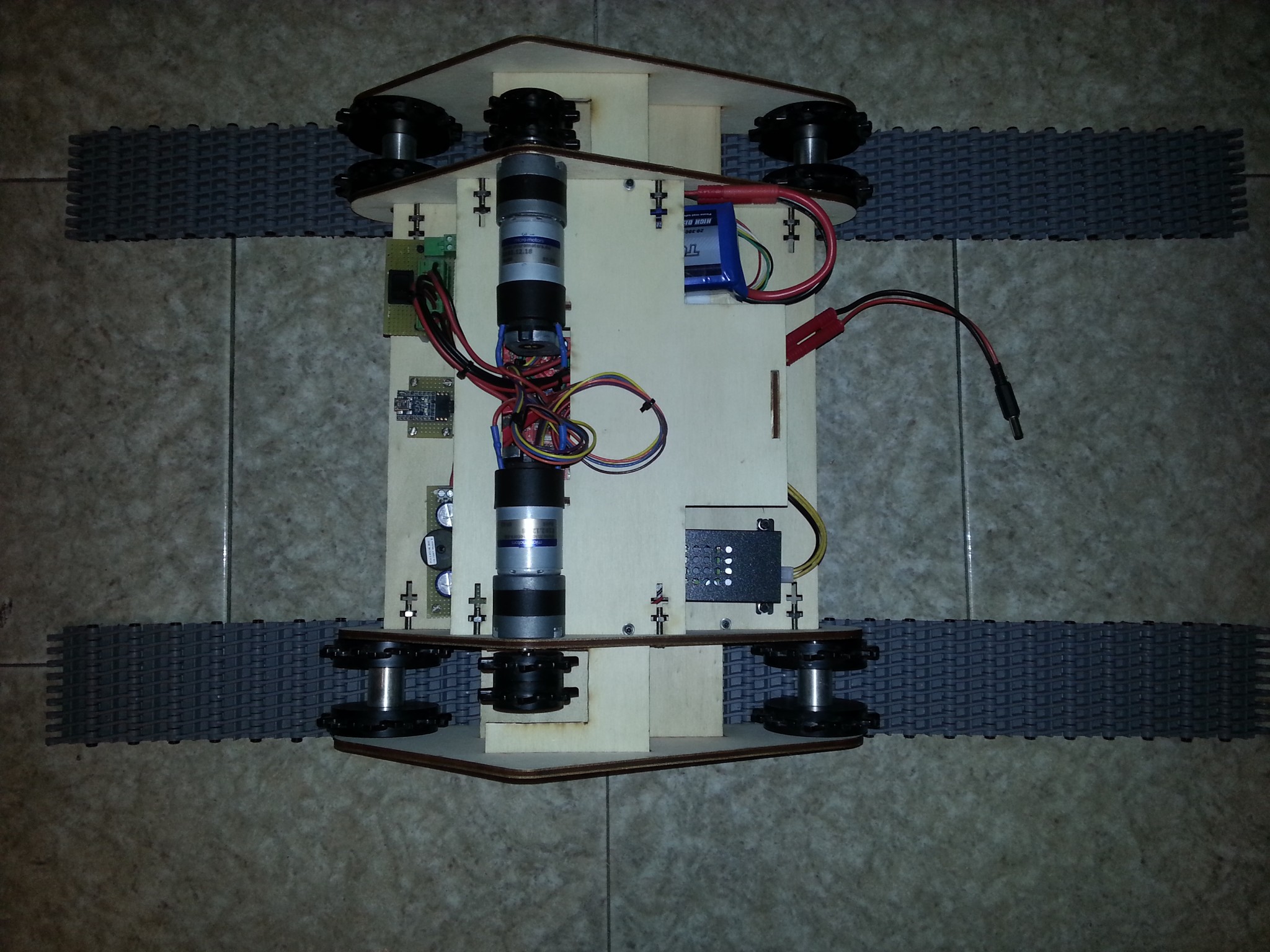

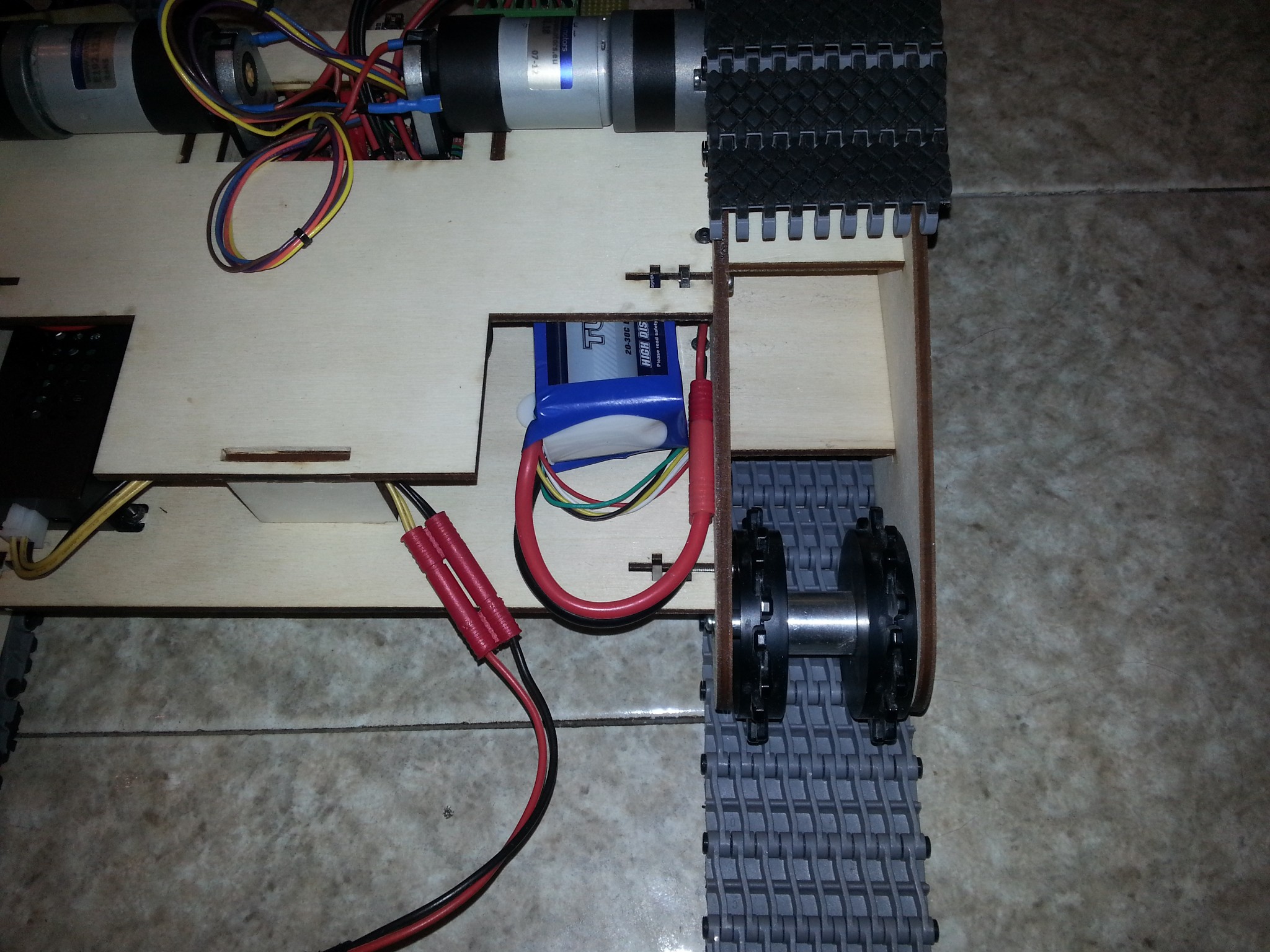

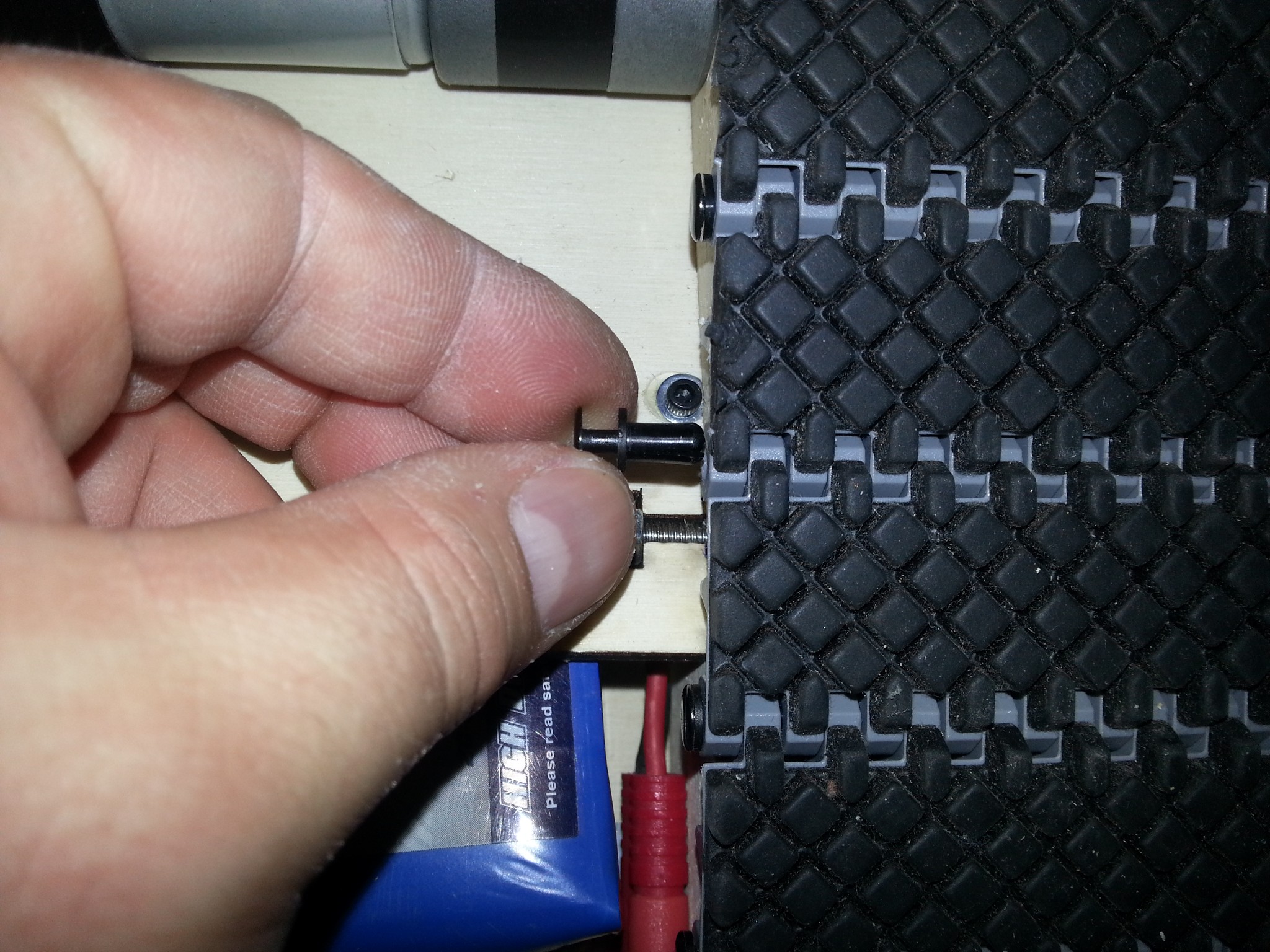

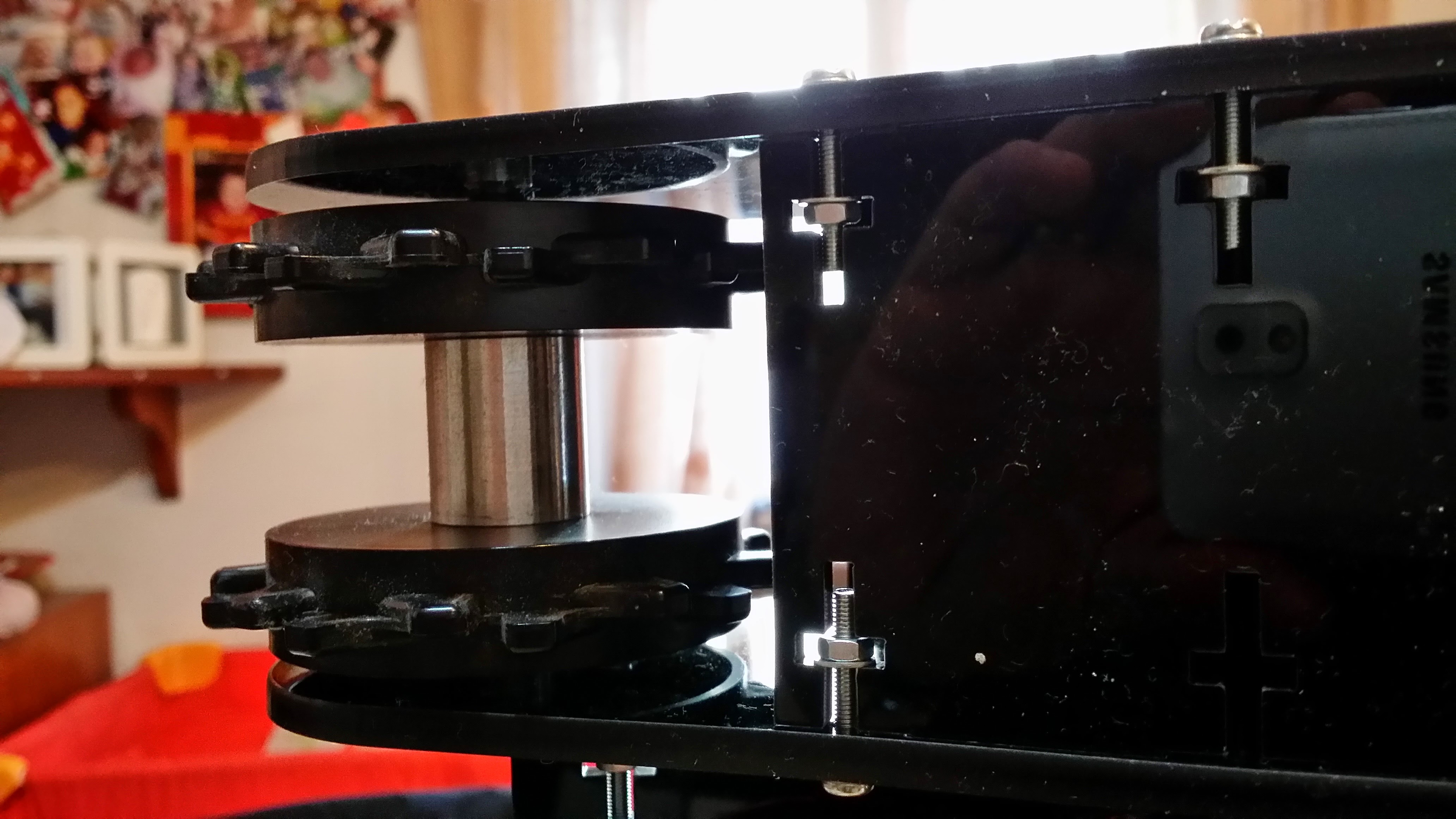

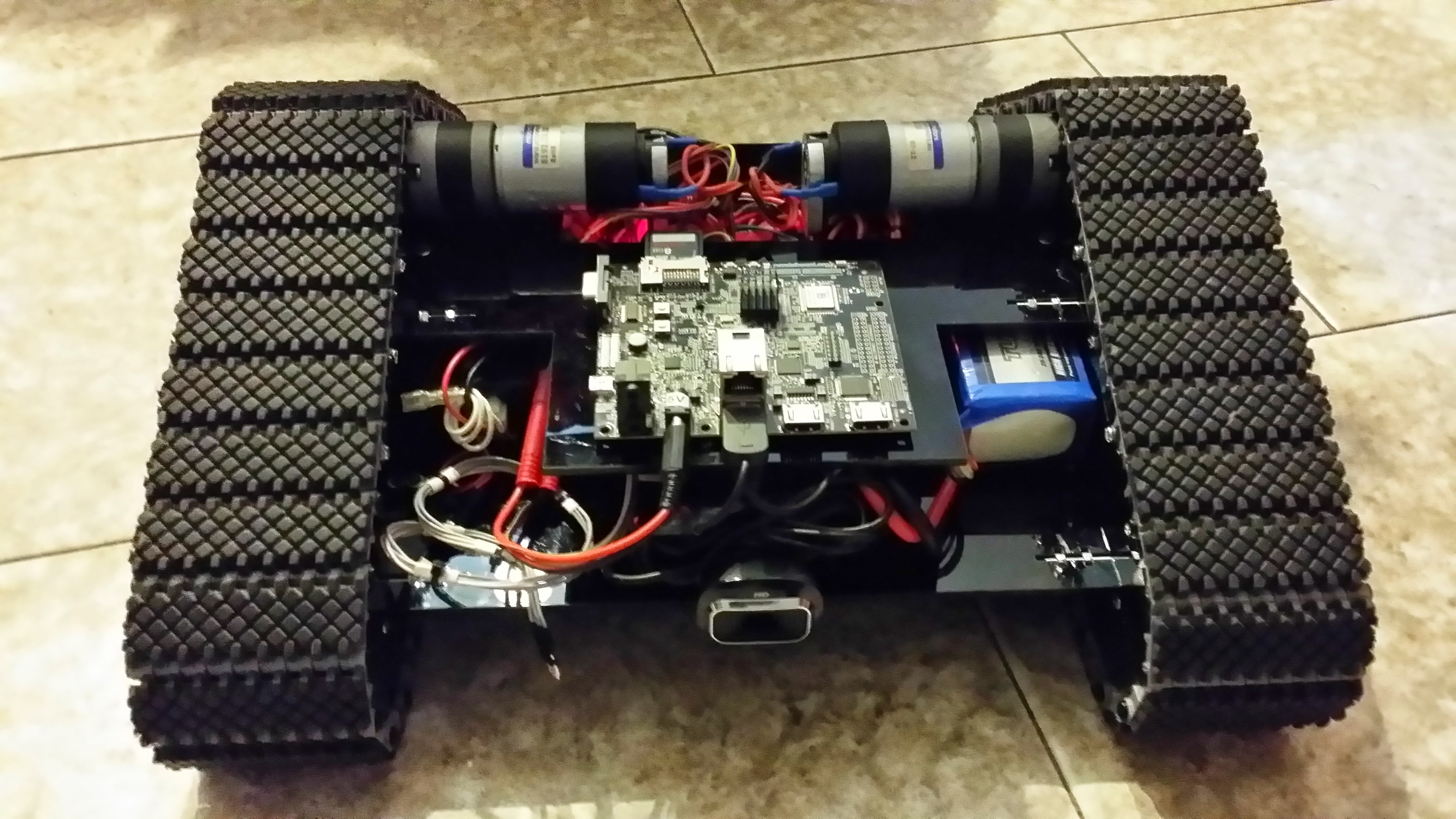

I redesigned the chassis to accommodate the Pandaboard ES and two USB webcams. The new design featured an additional top plate for mounting the board and cameras, with reorganized components to optimize space utilization and weight distribution.

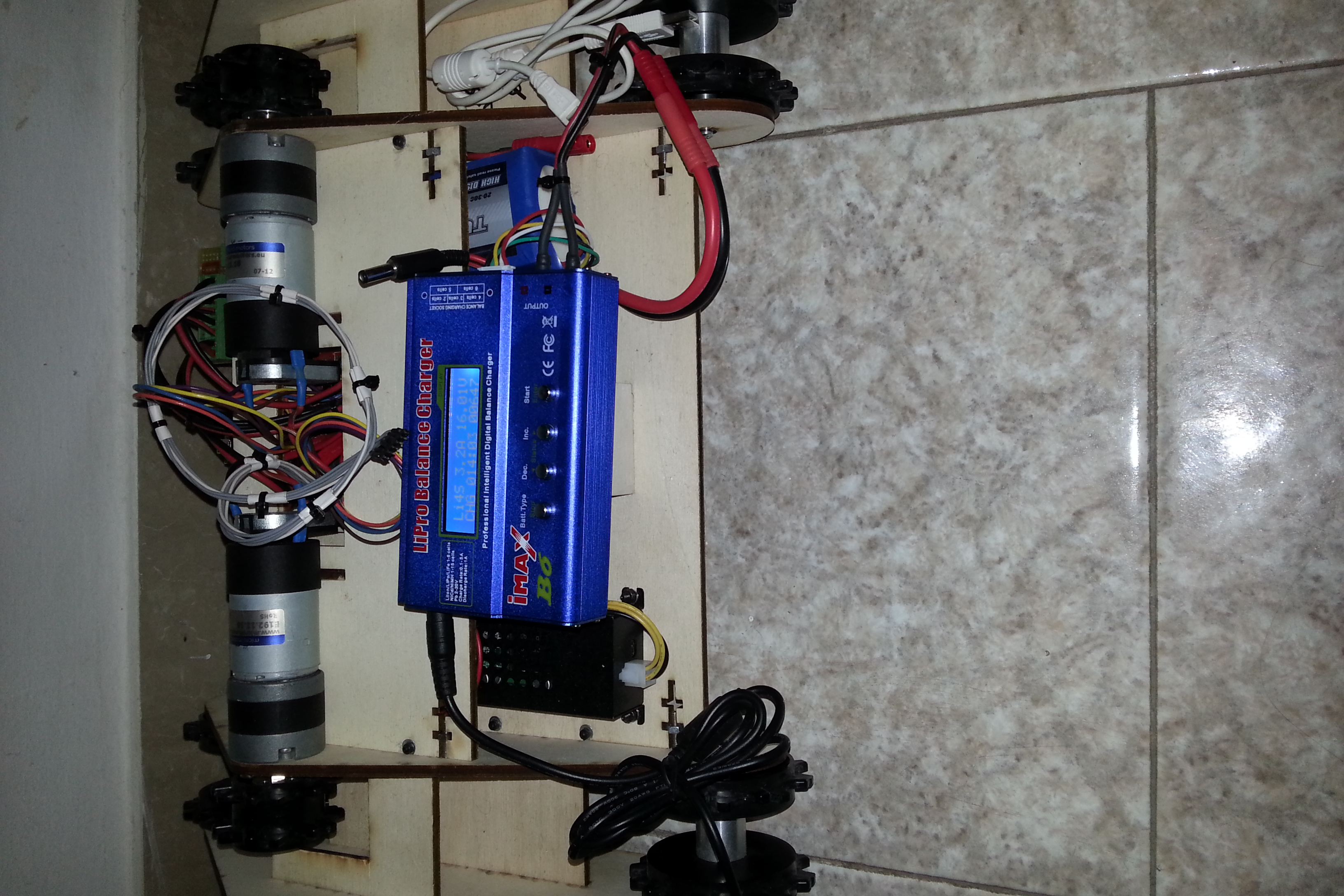

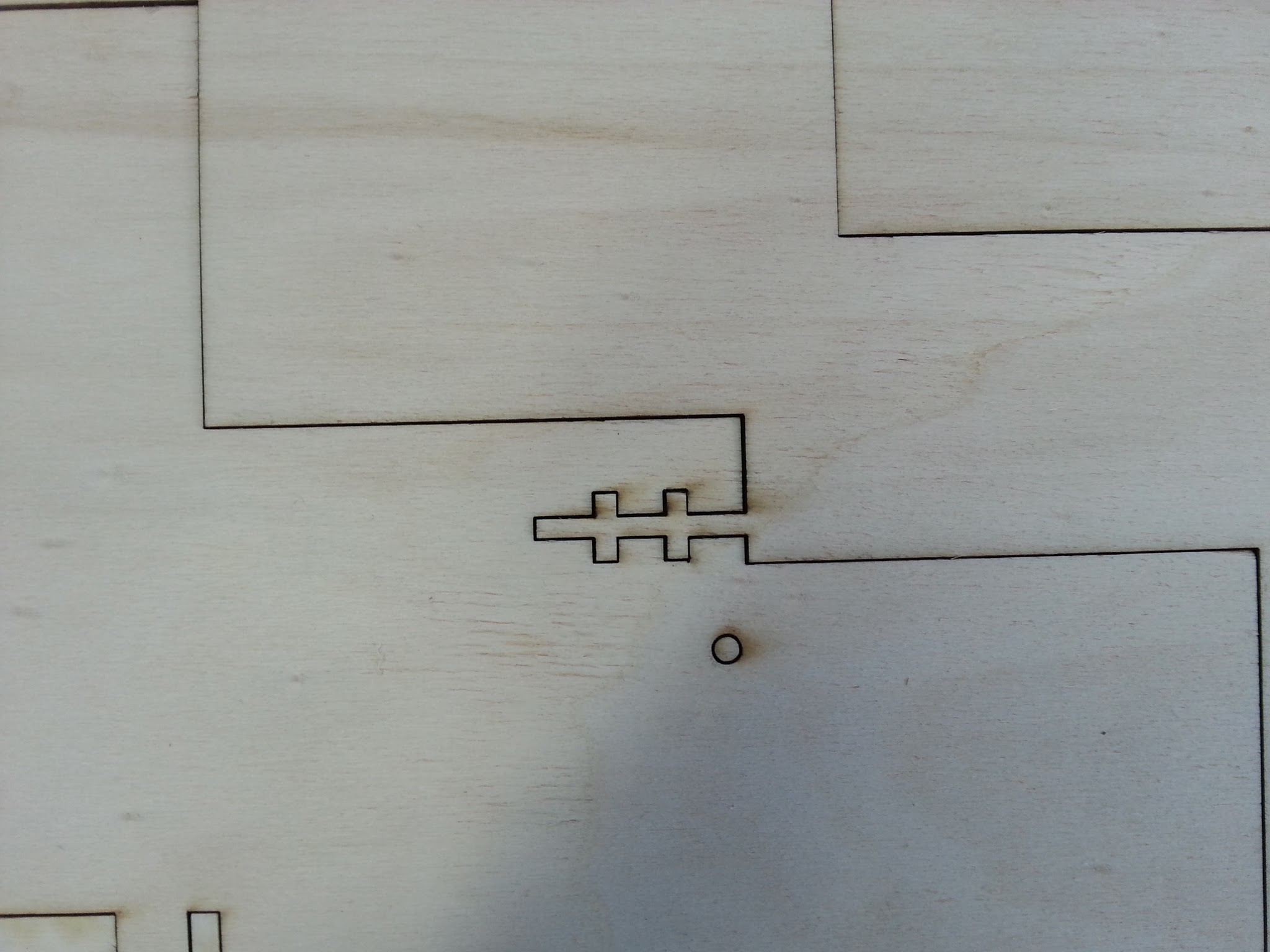

The first prototype was made of laser-cut wood, which I later replaced with a refined 3D-printed plastic version.

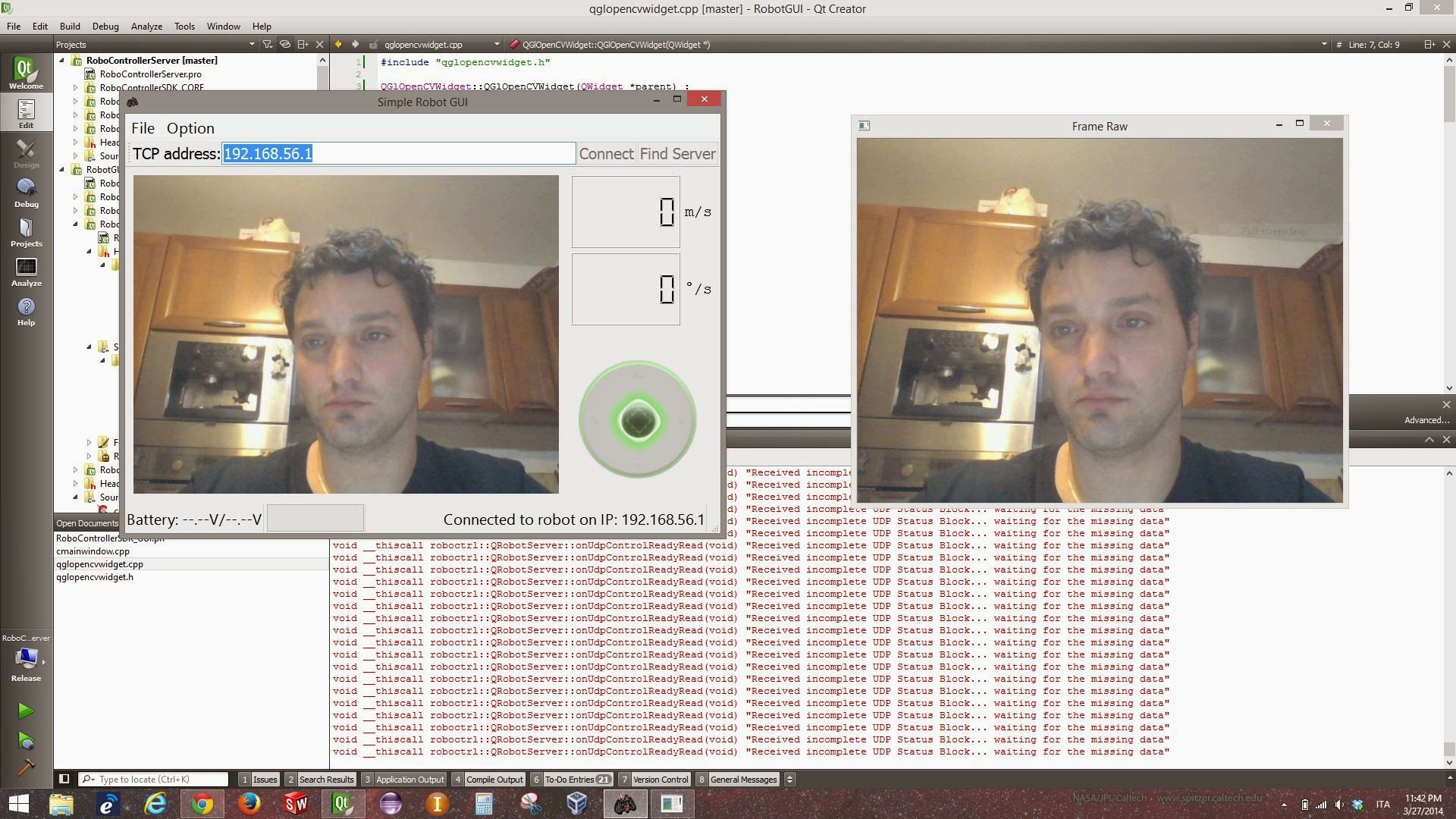

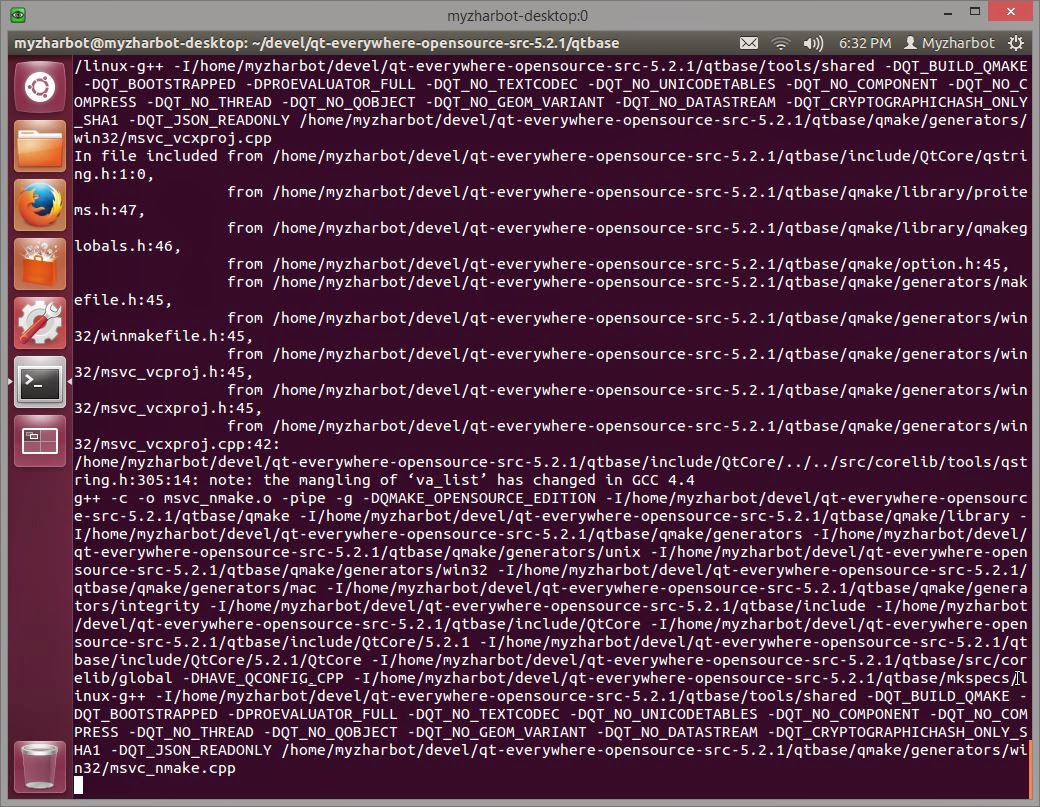

Software Development

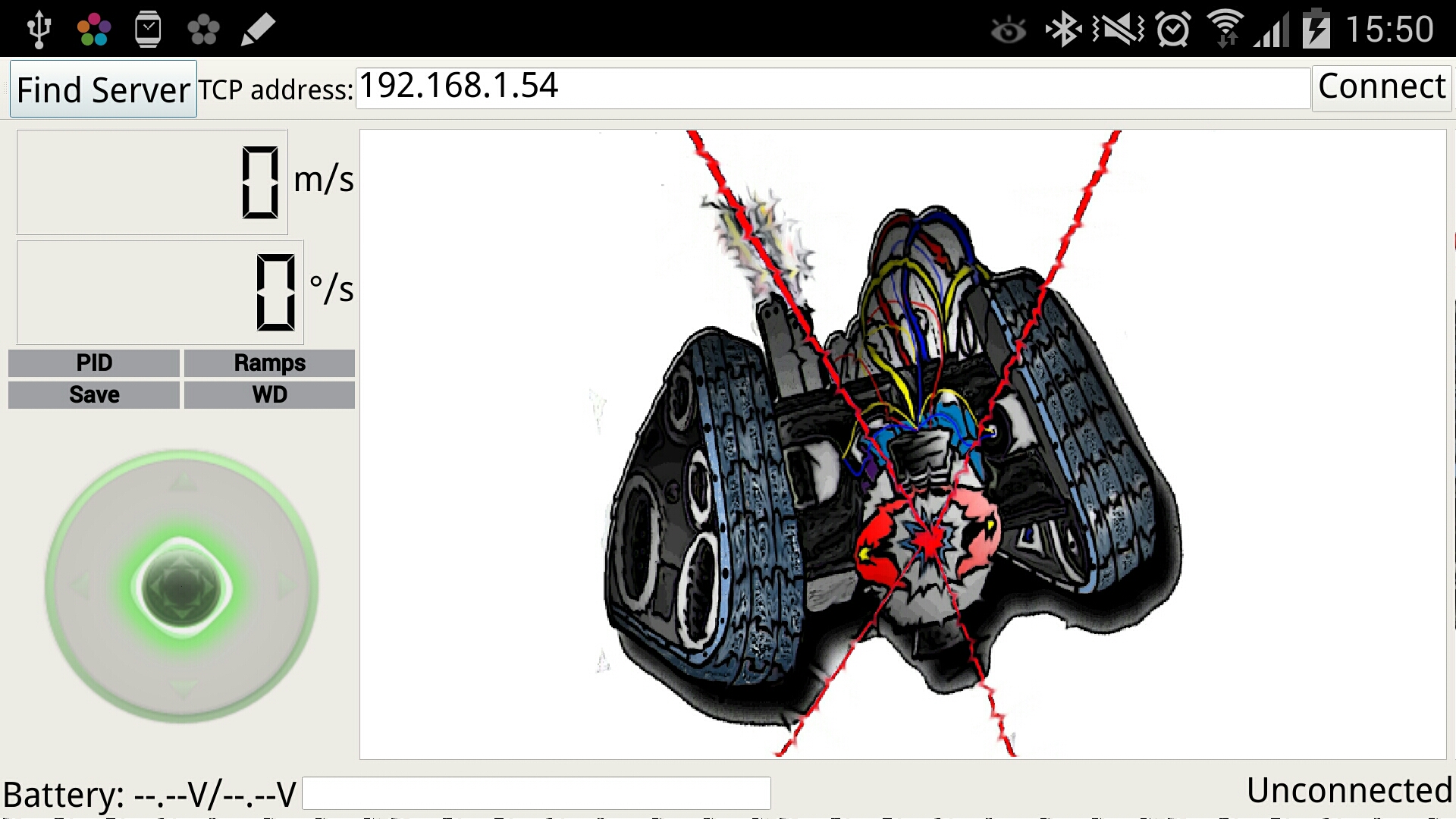

I set out to write a custom robotics framework from scratch, implementing the core functions myself: motor control, webcam image acquisition, and publishing of image data over the network. Because I initially avoided existing libraries and frameworks, this took a considerable amount of time. The experience taught me the value of building on established tools to speed up development.

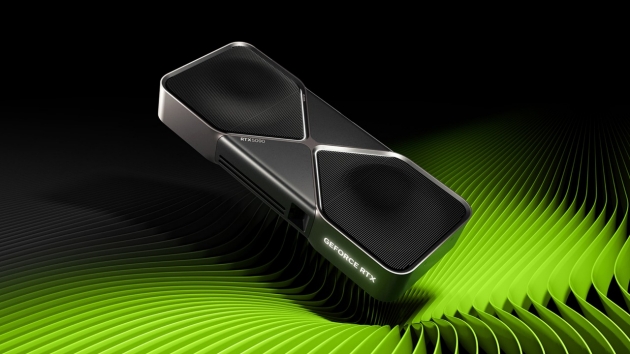

Development continued for nearly two years until mid-2014, when I transitioned to the third version of MyzharBot, upgrading to the NVIDIA® Jetson TK1 as the primary computing platform.

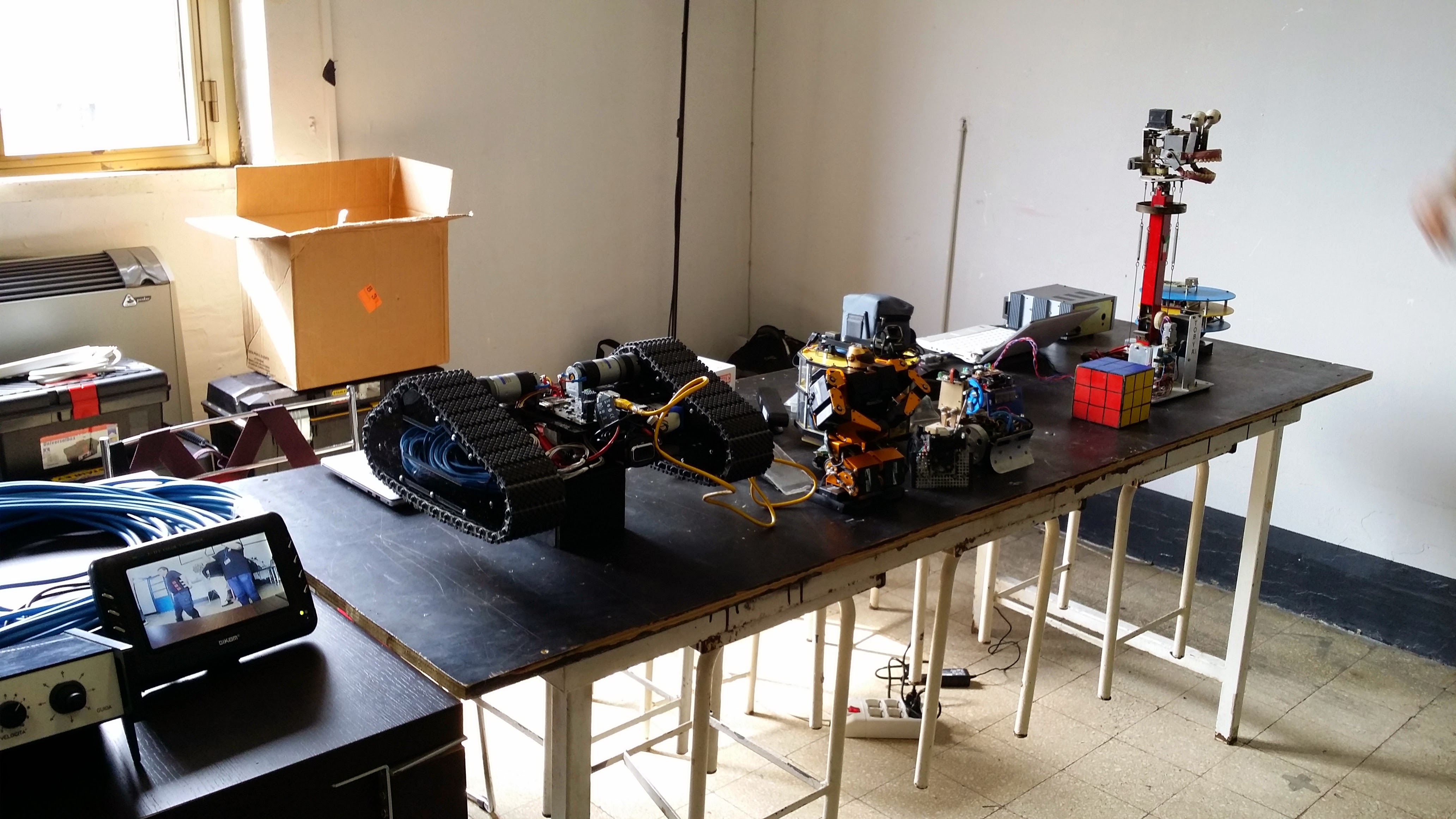

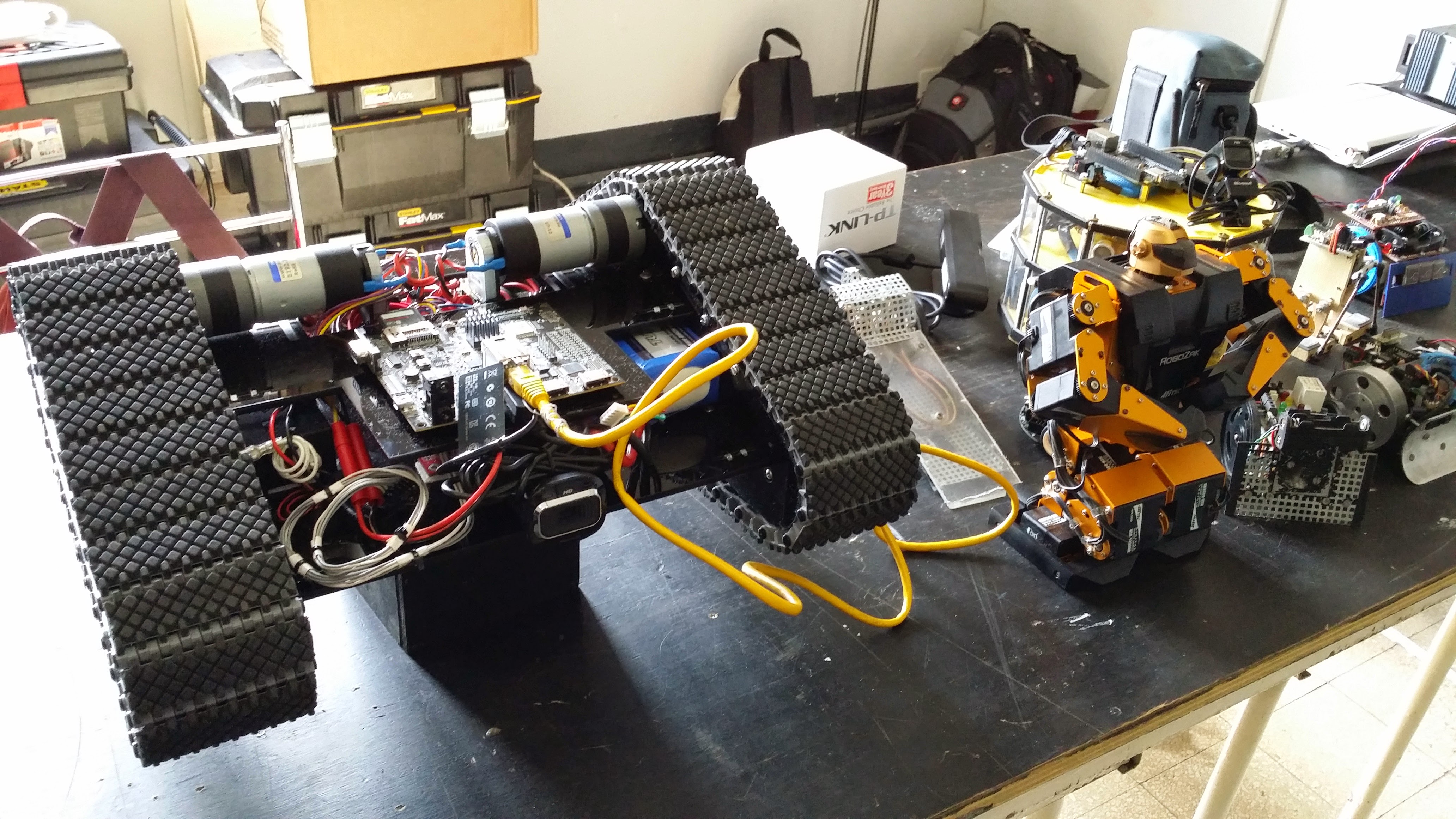

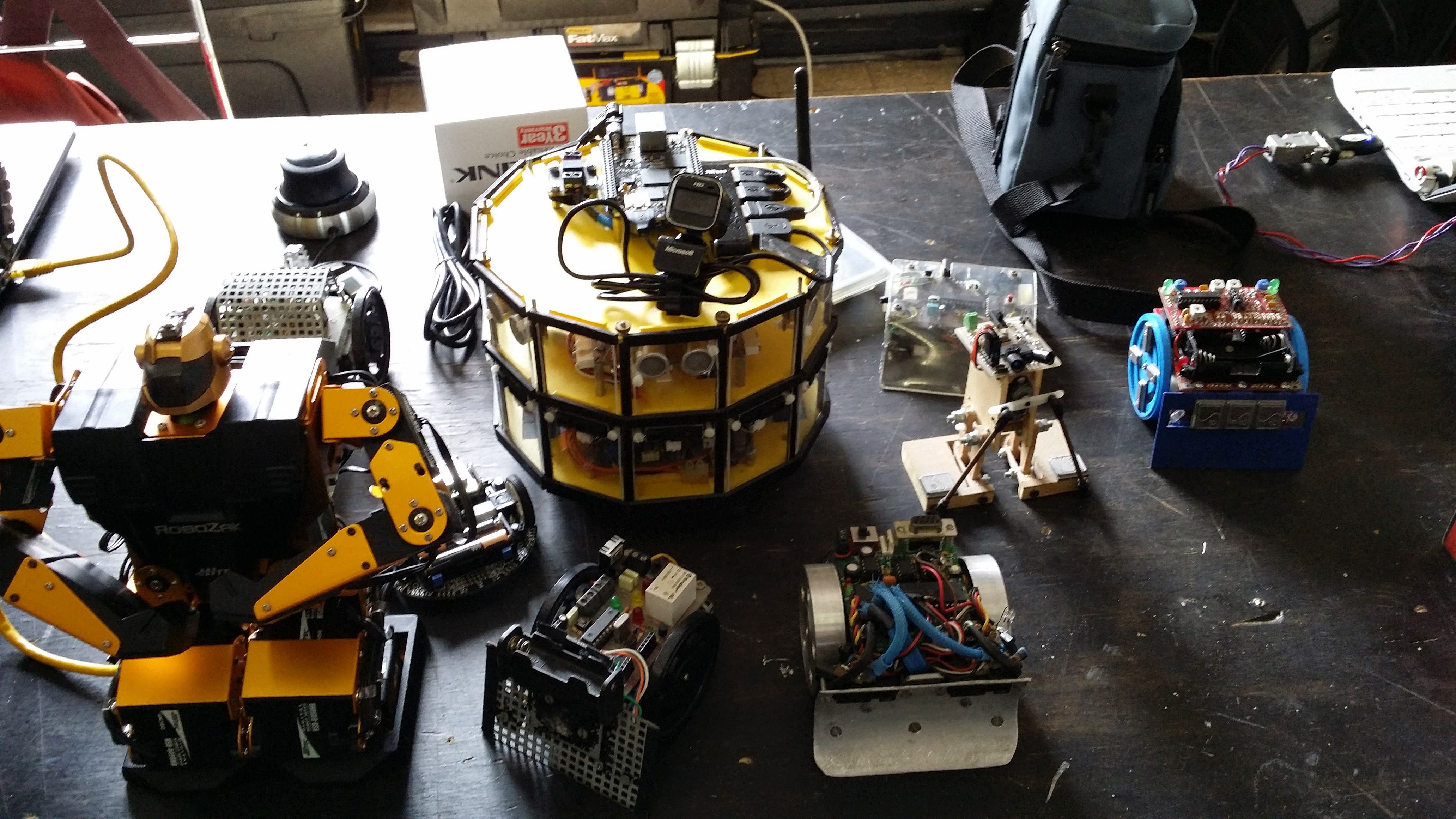

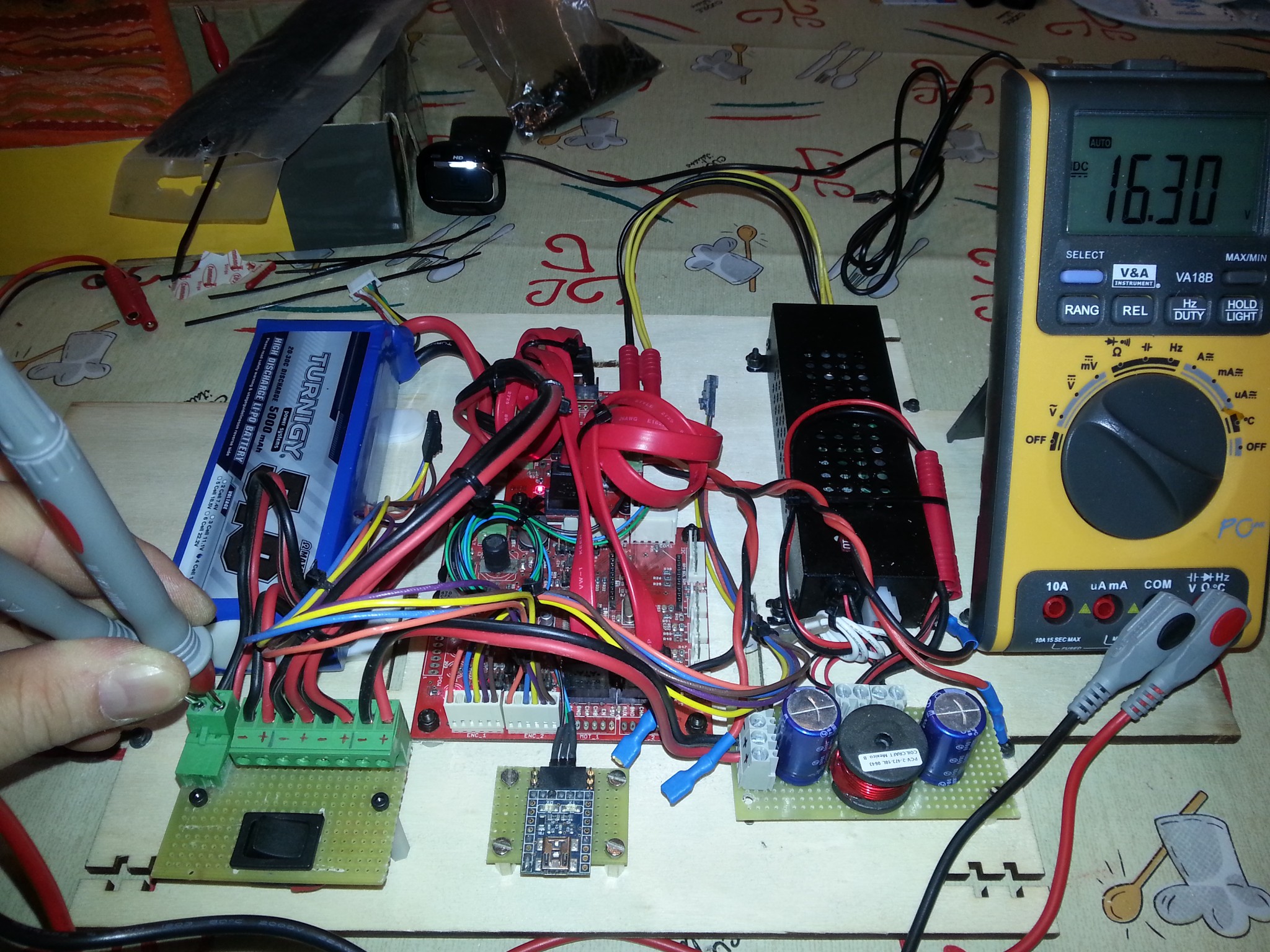

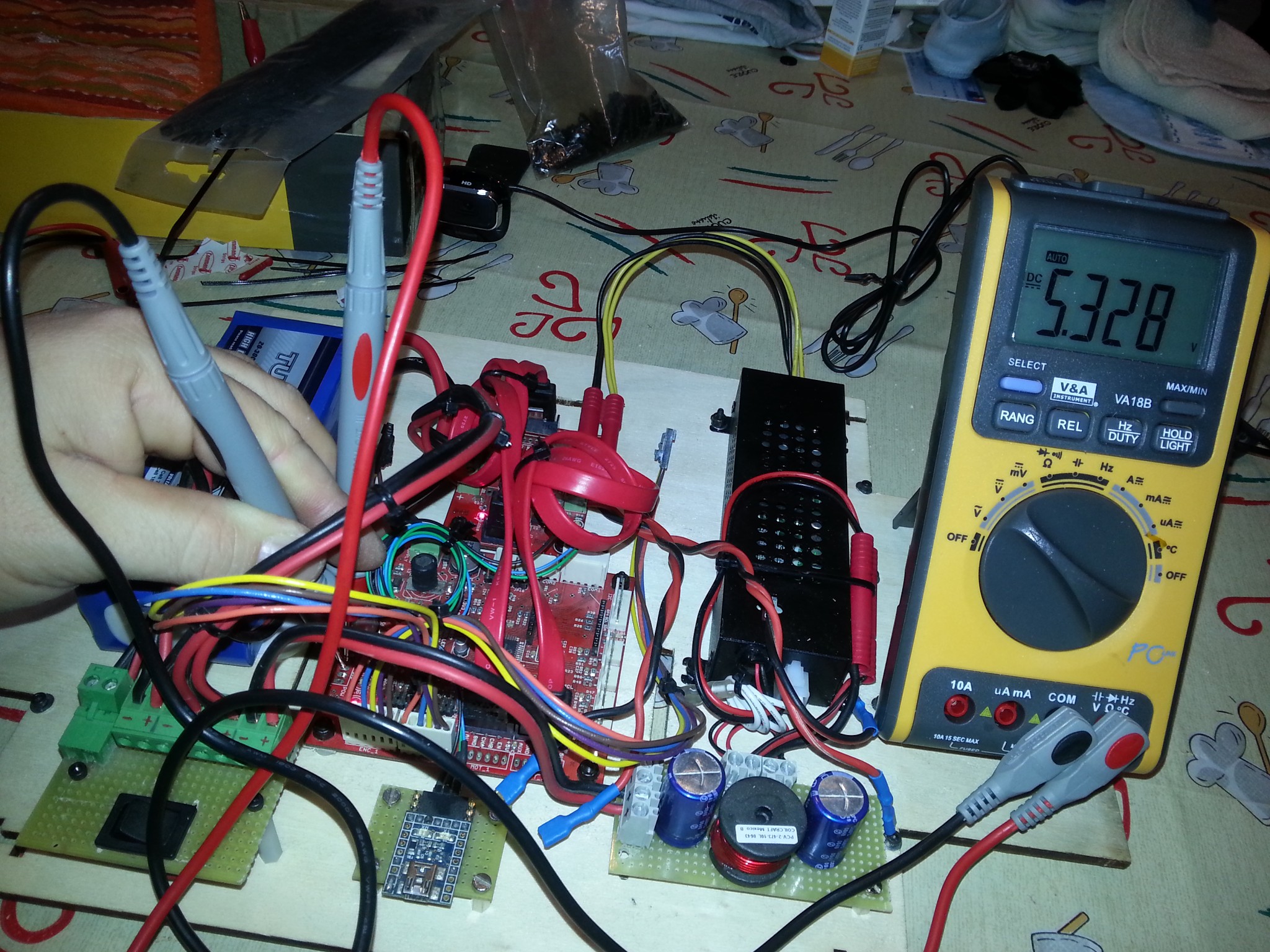

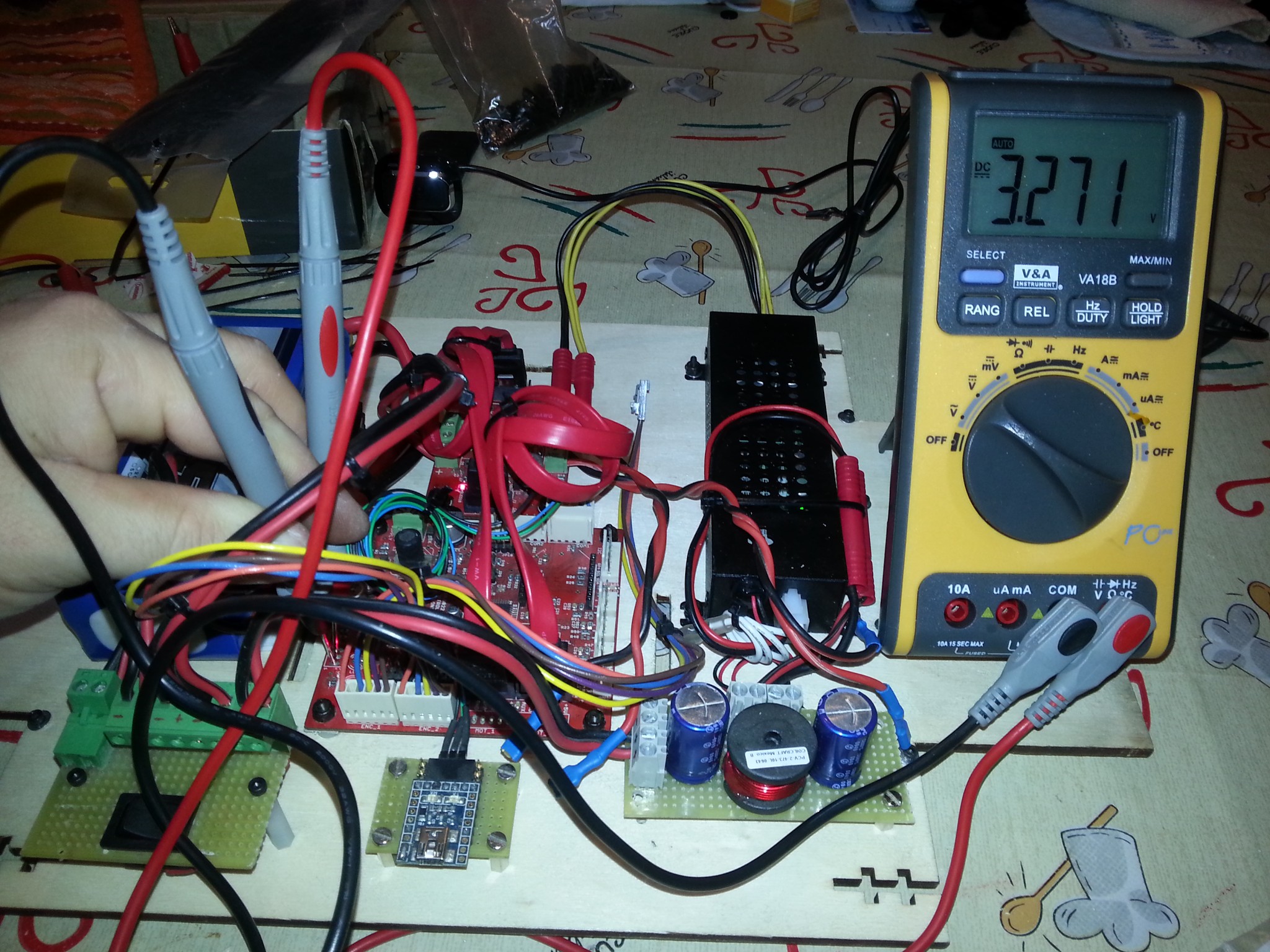

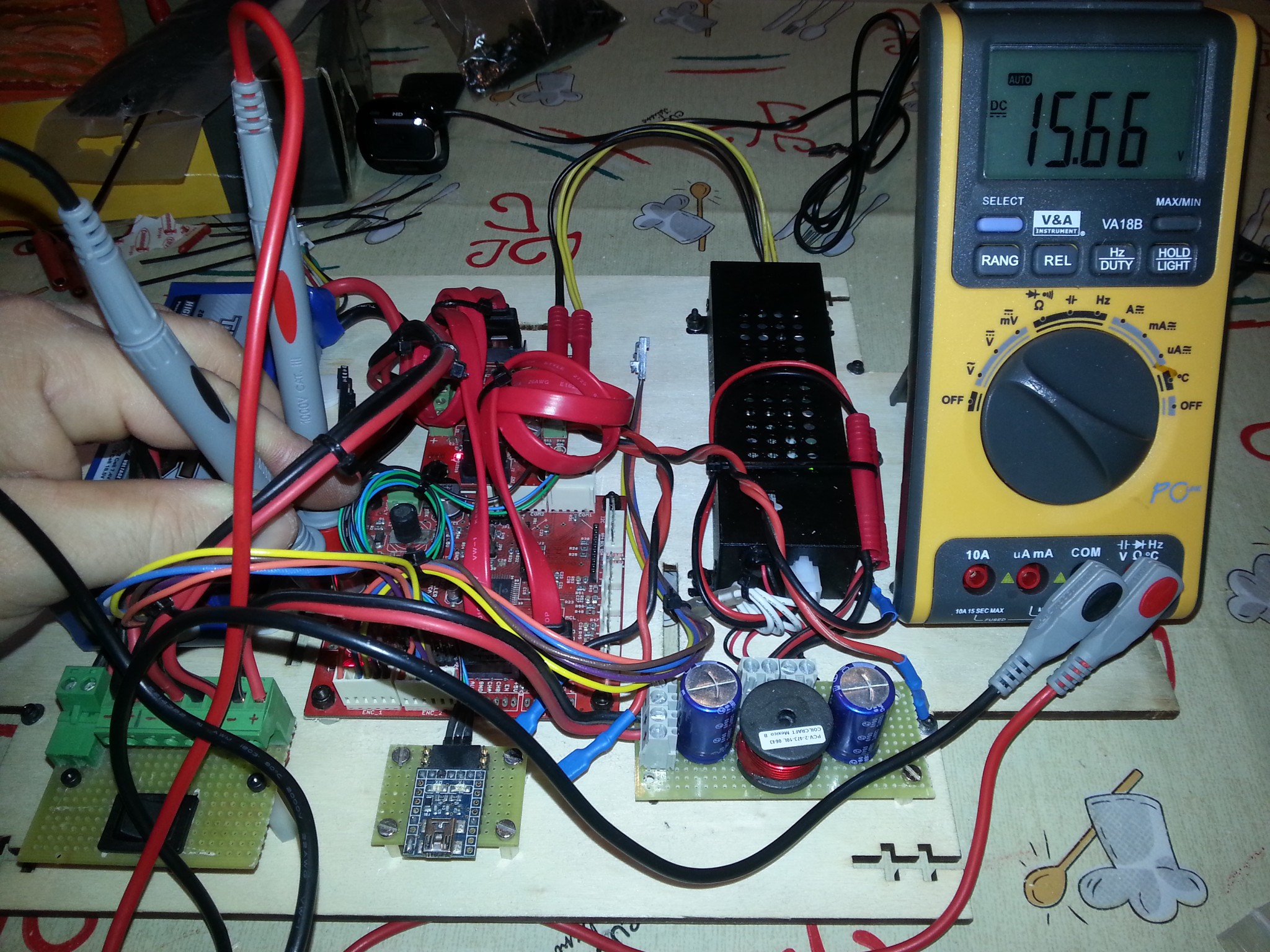

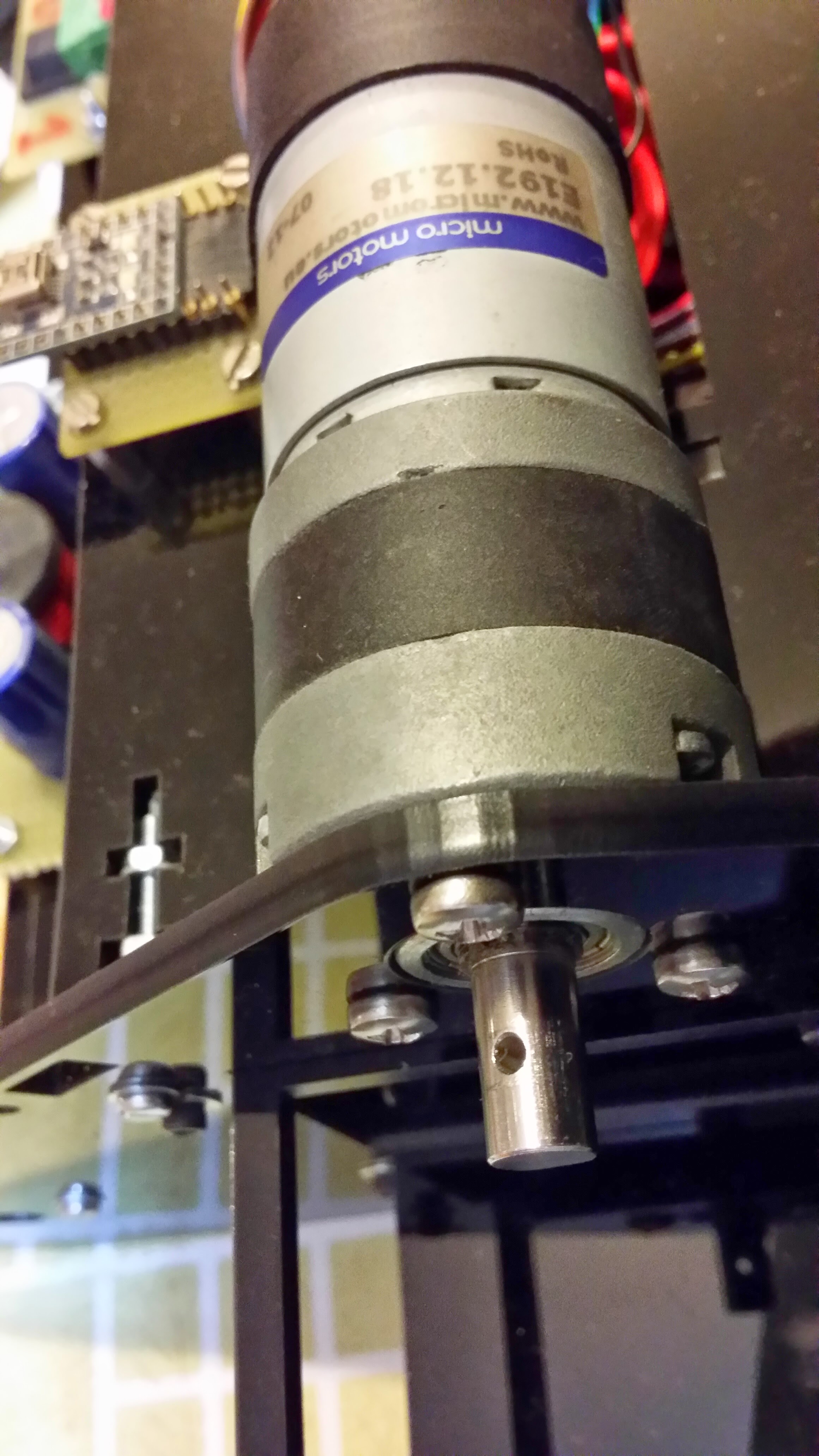

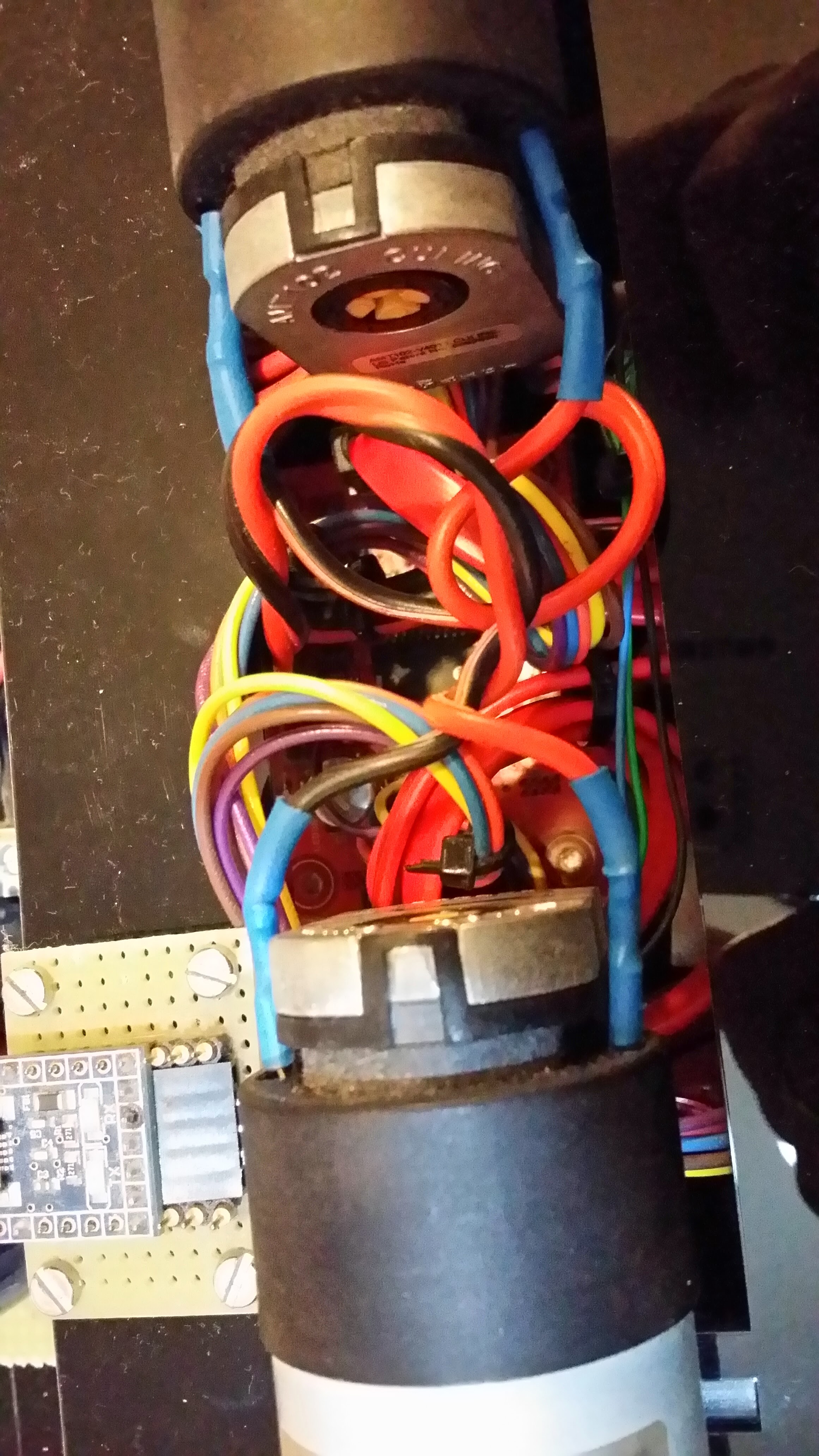

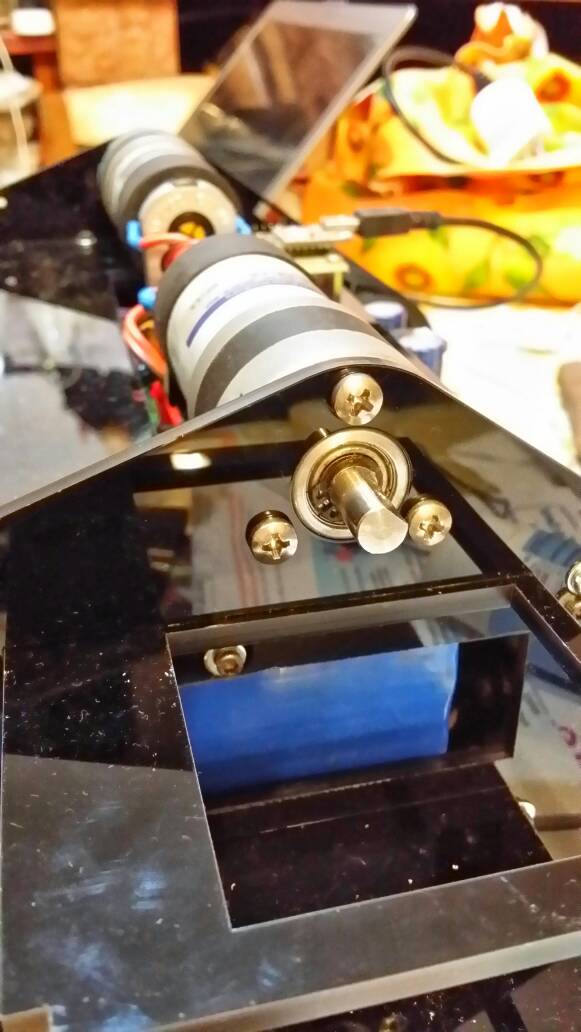

Photos
