MyzharBot v3 - The Beginning of a New Era

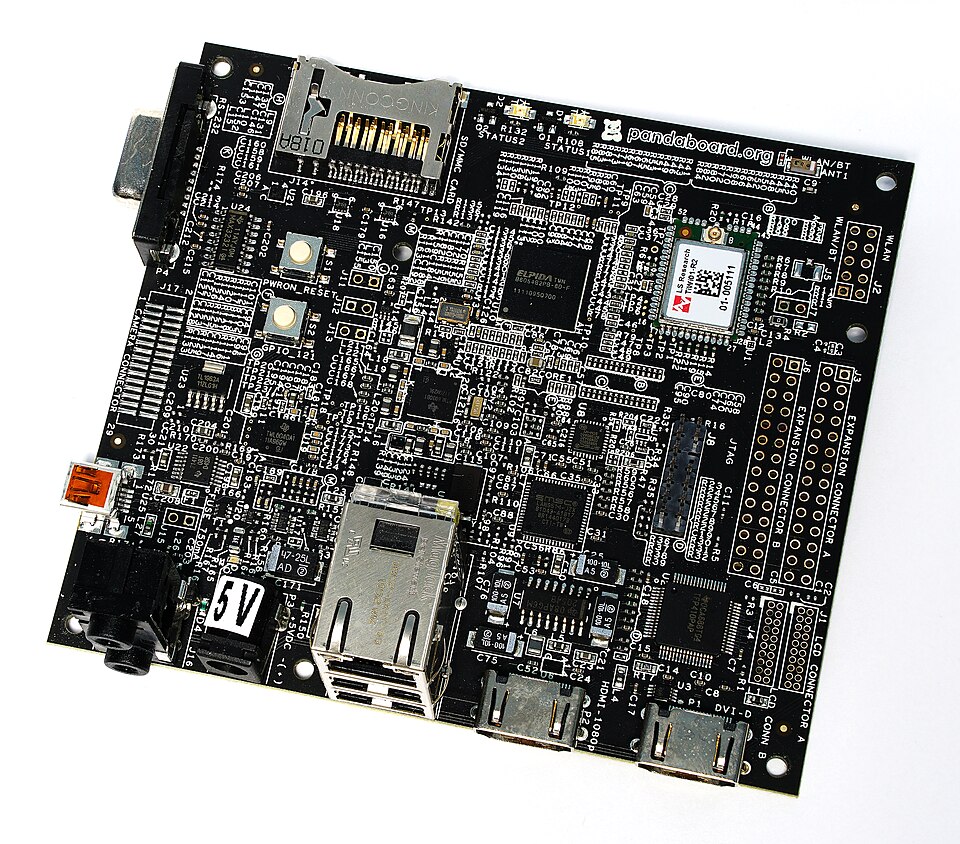

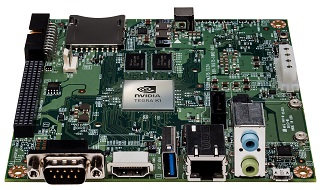

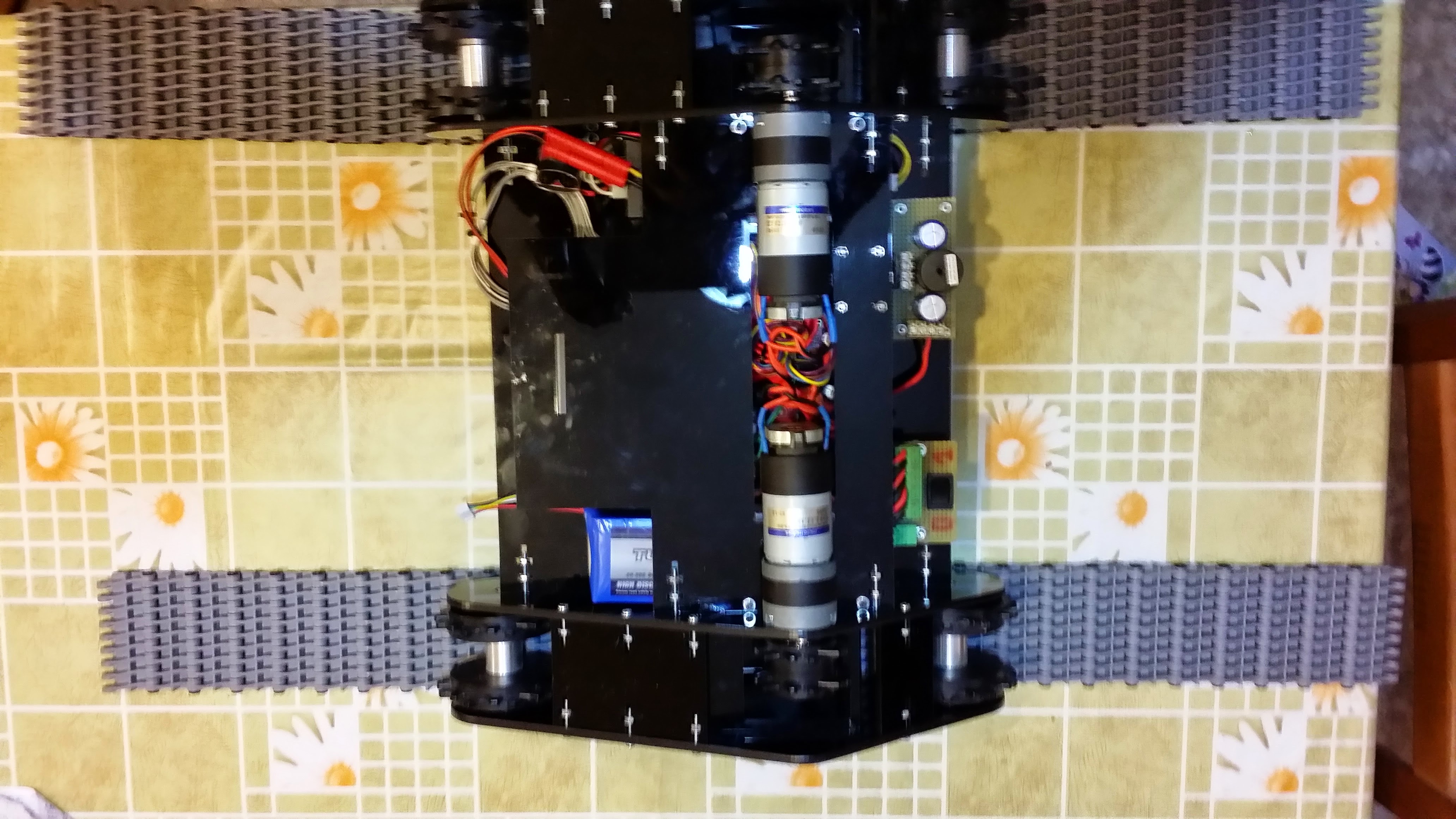

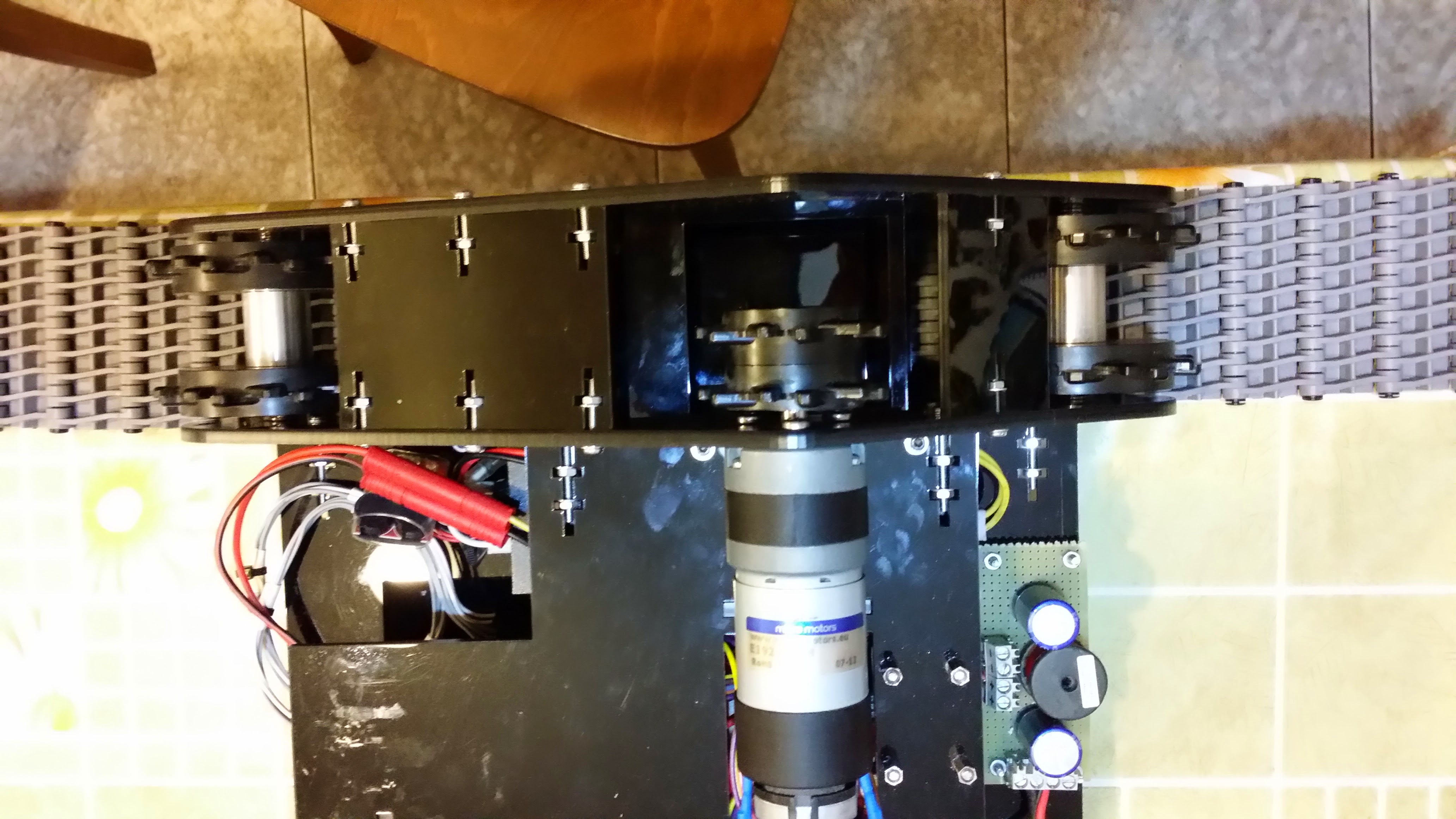

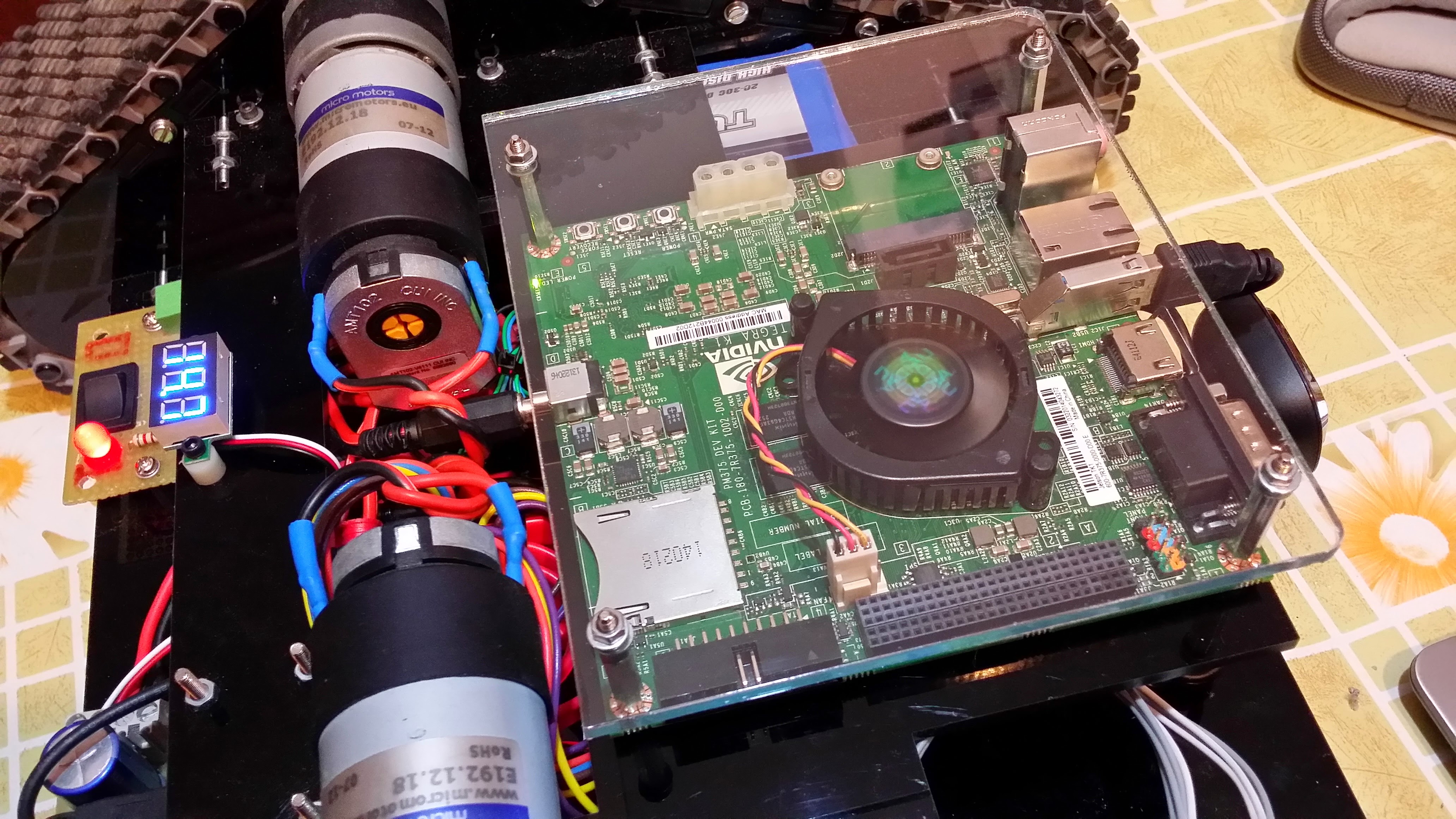

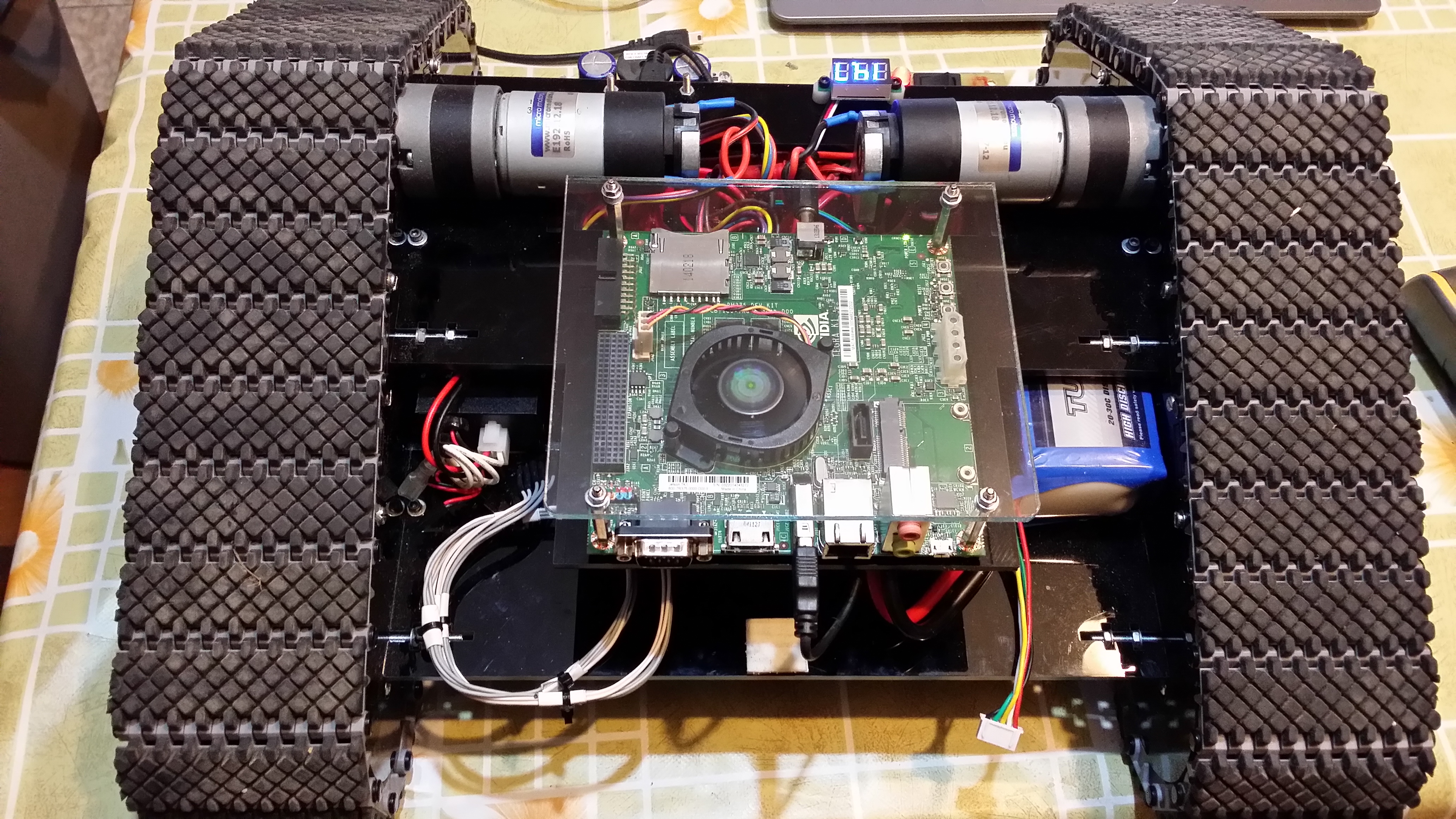

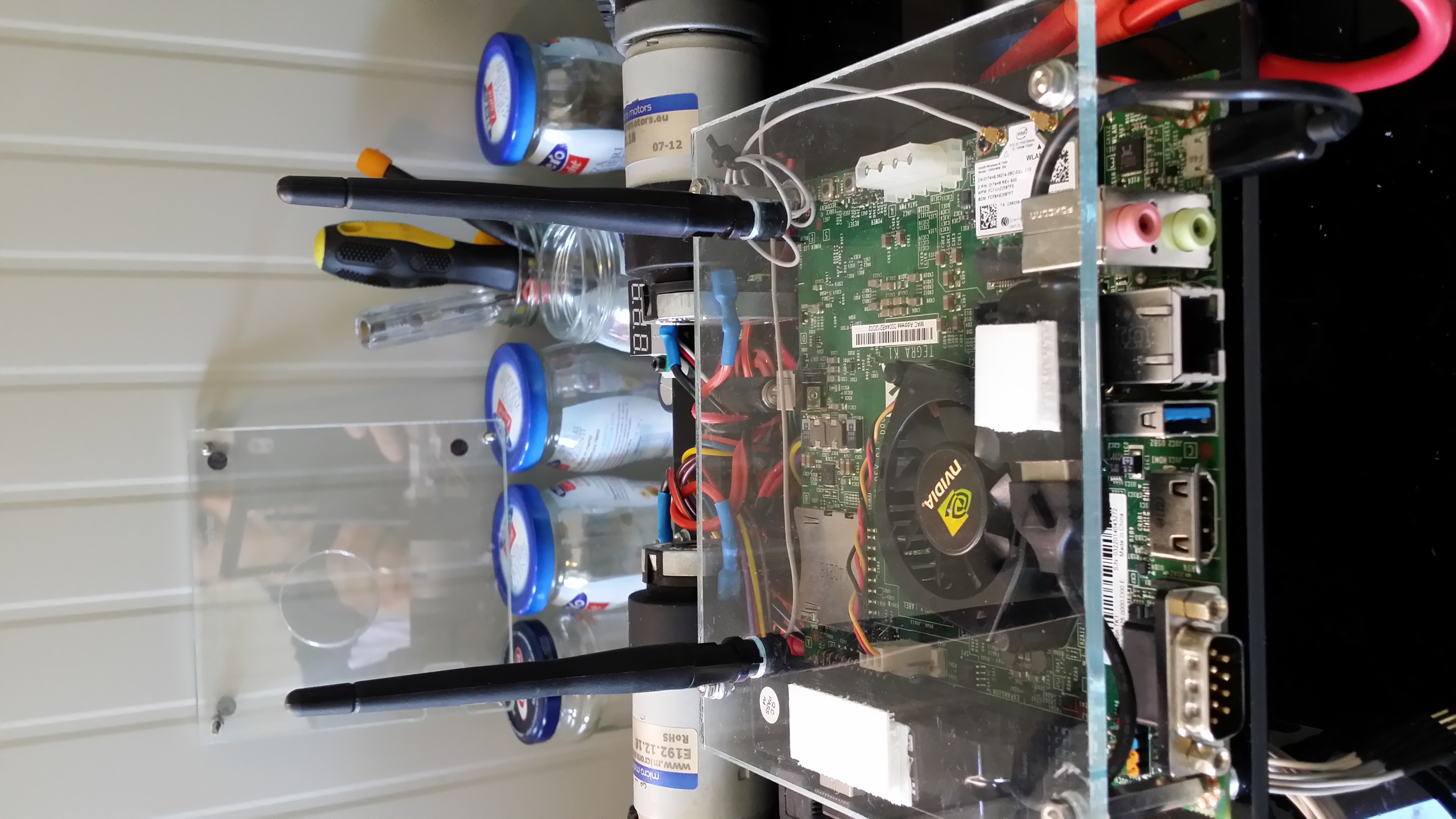

In mid-2014, I started developing MyzharBot’s third version, upgrading the main computer from the Pandaboard ES to the NVIDIA® Jetson TK1. My dream had finally materialized: NVIDIA® had released a powerful CUDA-enabled embedded platform capable of running real-time computer vision on a small robot!

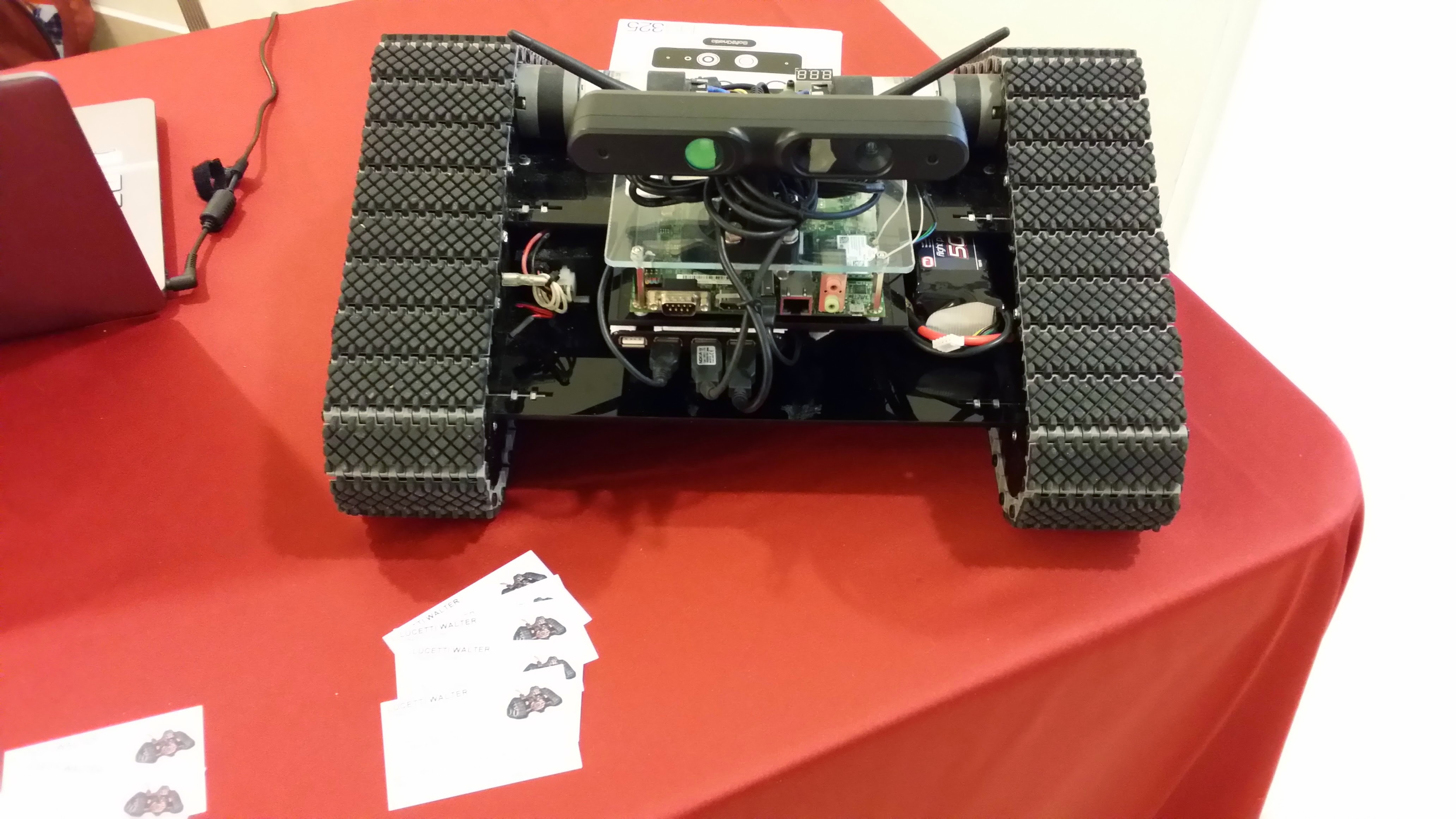

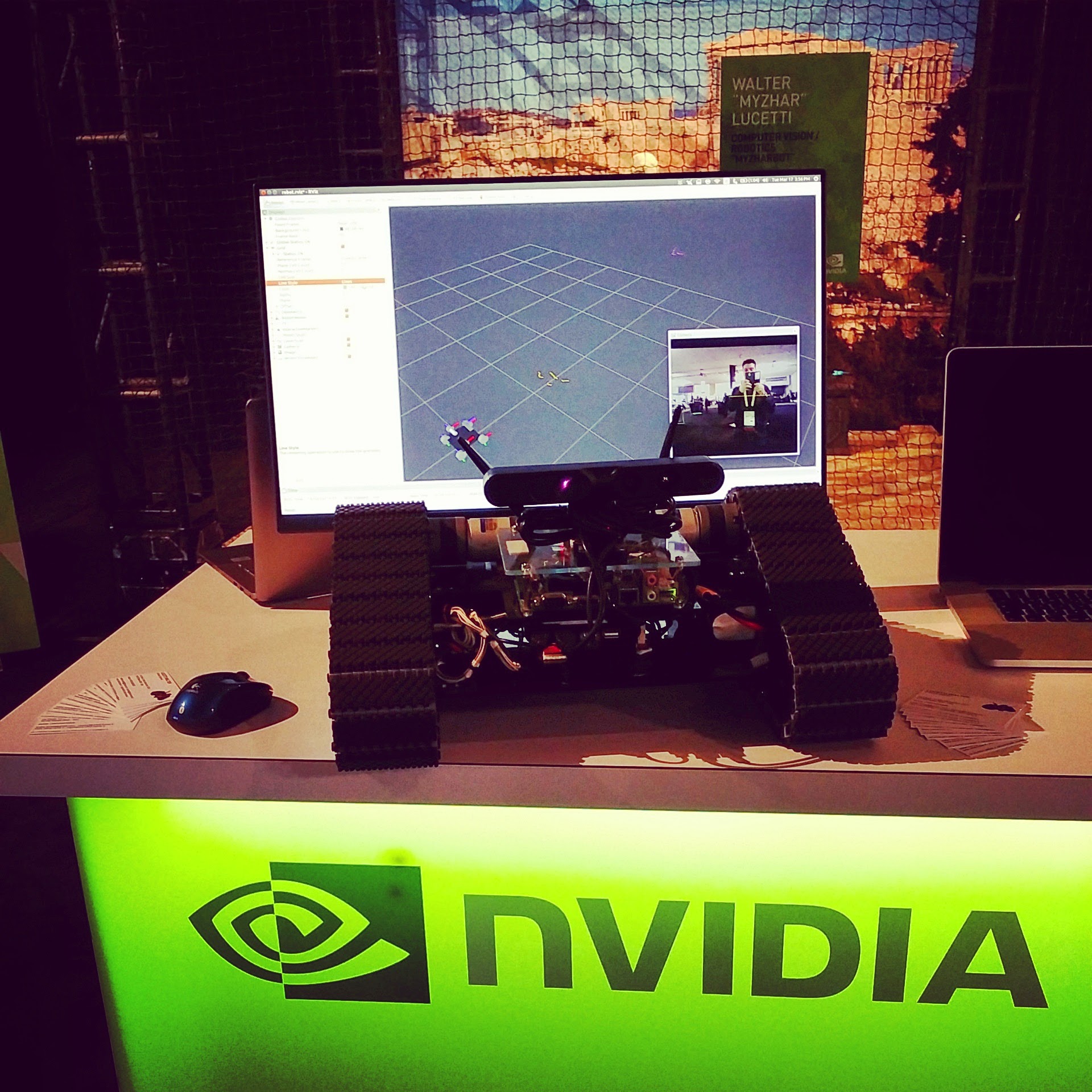

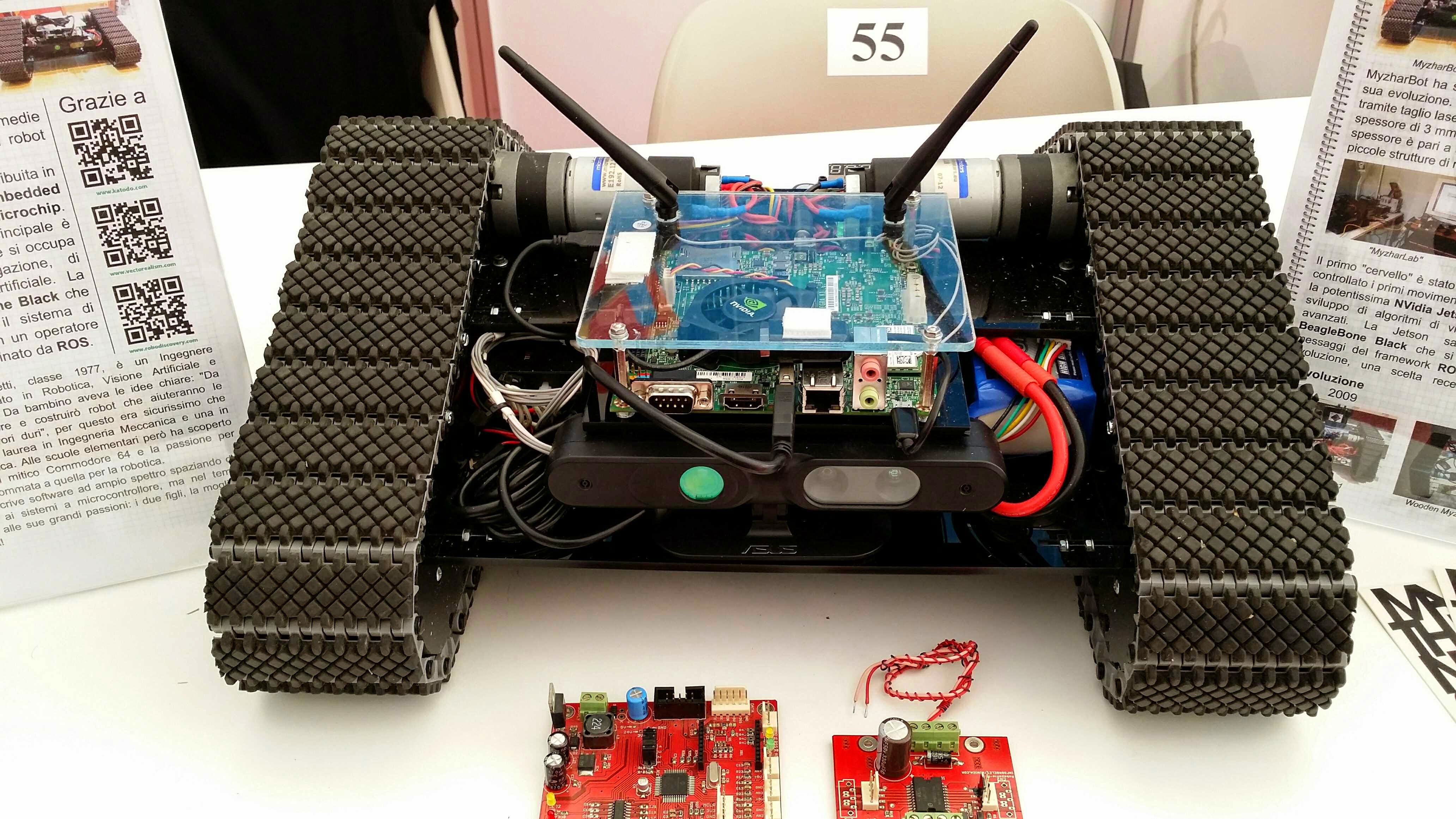

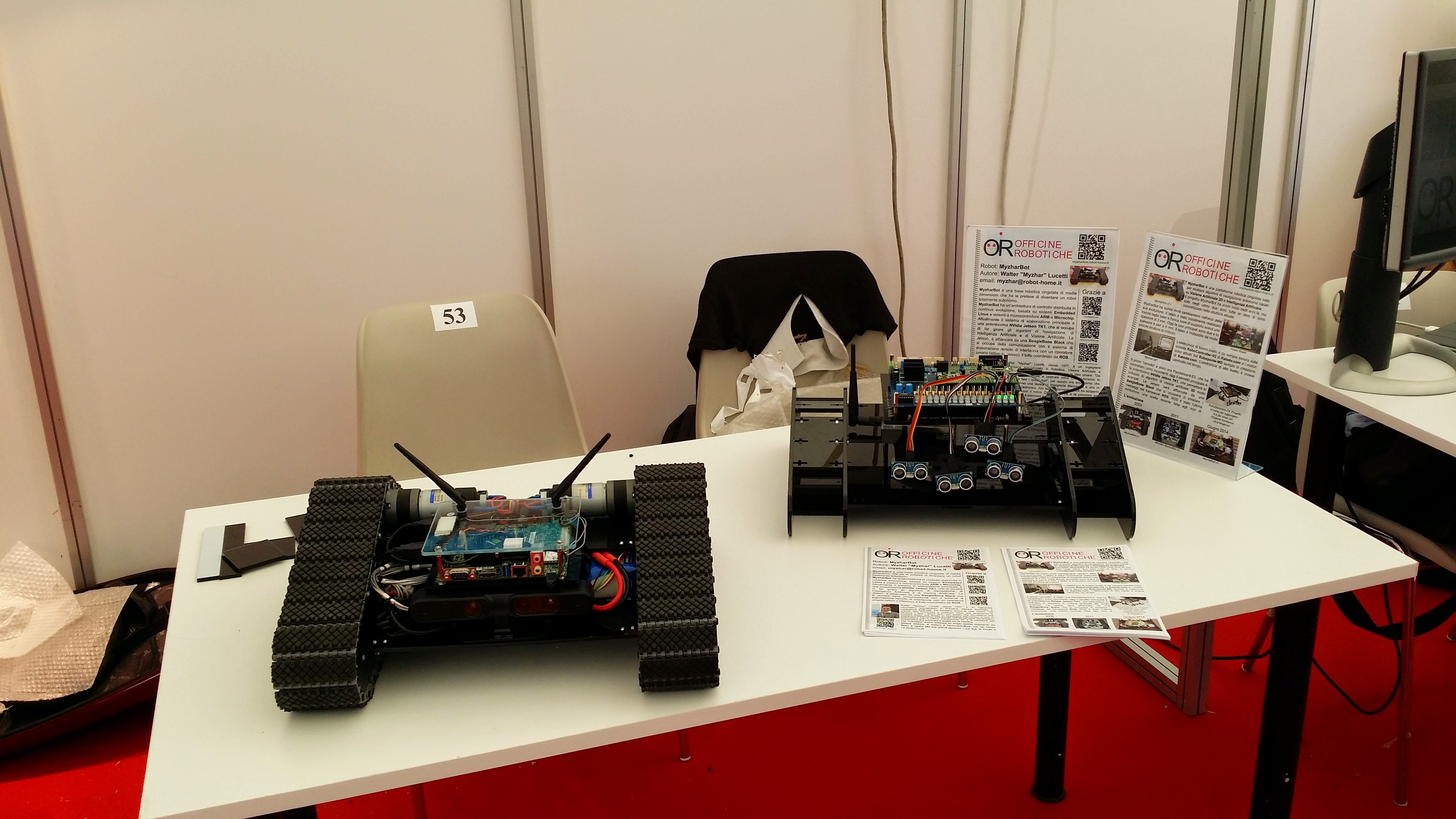

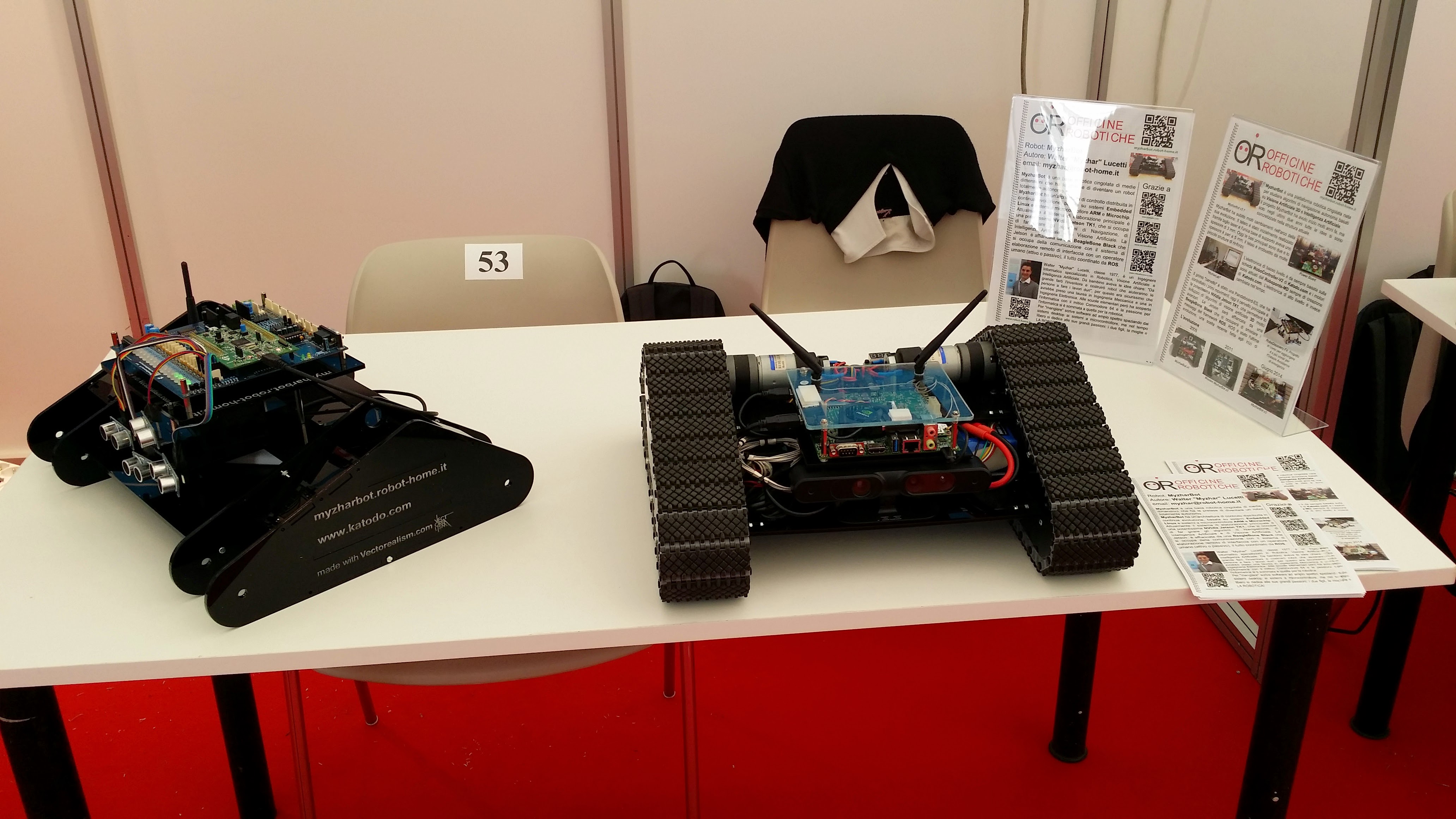

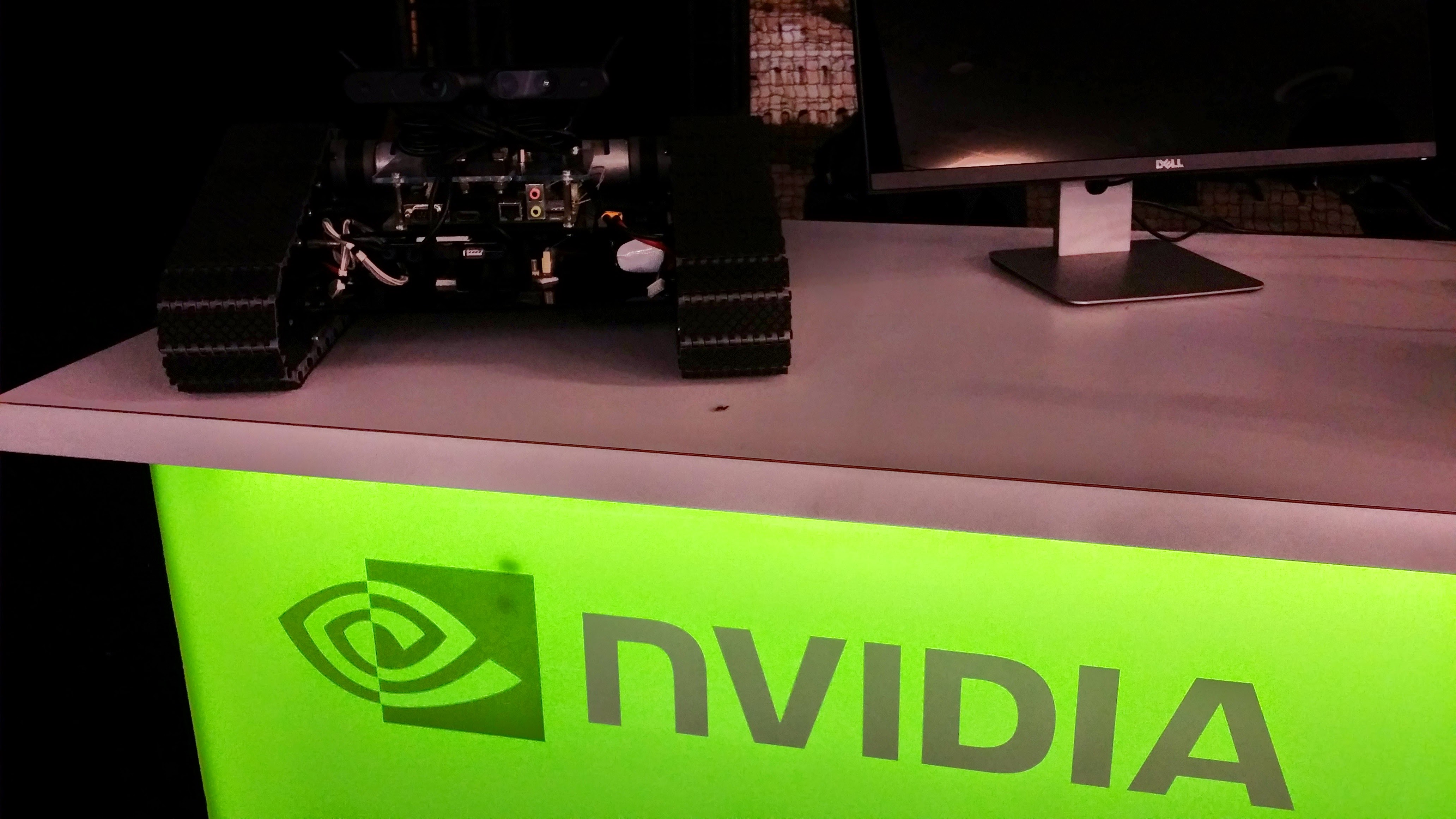

In September 2014, MyzharBot-v3.0 was operational, even if not yet fully autonomous. I exhibited it at Maker Faire Rome. It was my first public appearance with the robot, and I was thrilled to showcase its new capabilities.

In December 2014, I received an unexpected Facebook message from an NVIDIA® representative. They were recruiting Jetson TK1 enthusiasts for a new program called Jetson Champions. I was initially skeptical, since it seemed too good to be true, but I soon realized the opportunity was genuine. They had discovered my blog and wanted me to continue sharing my knowledge and experience with the community while developing projects using NVIDIA® Jetson platforms. Nothing easier than that!

MyzharBot (and Me) in the Spotlight

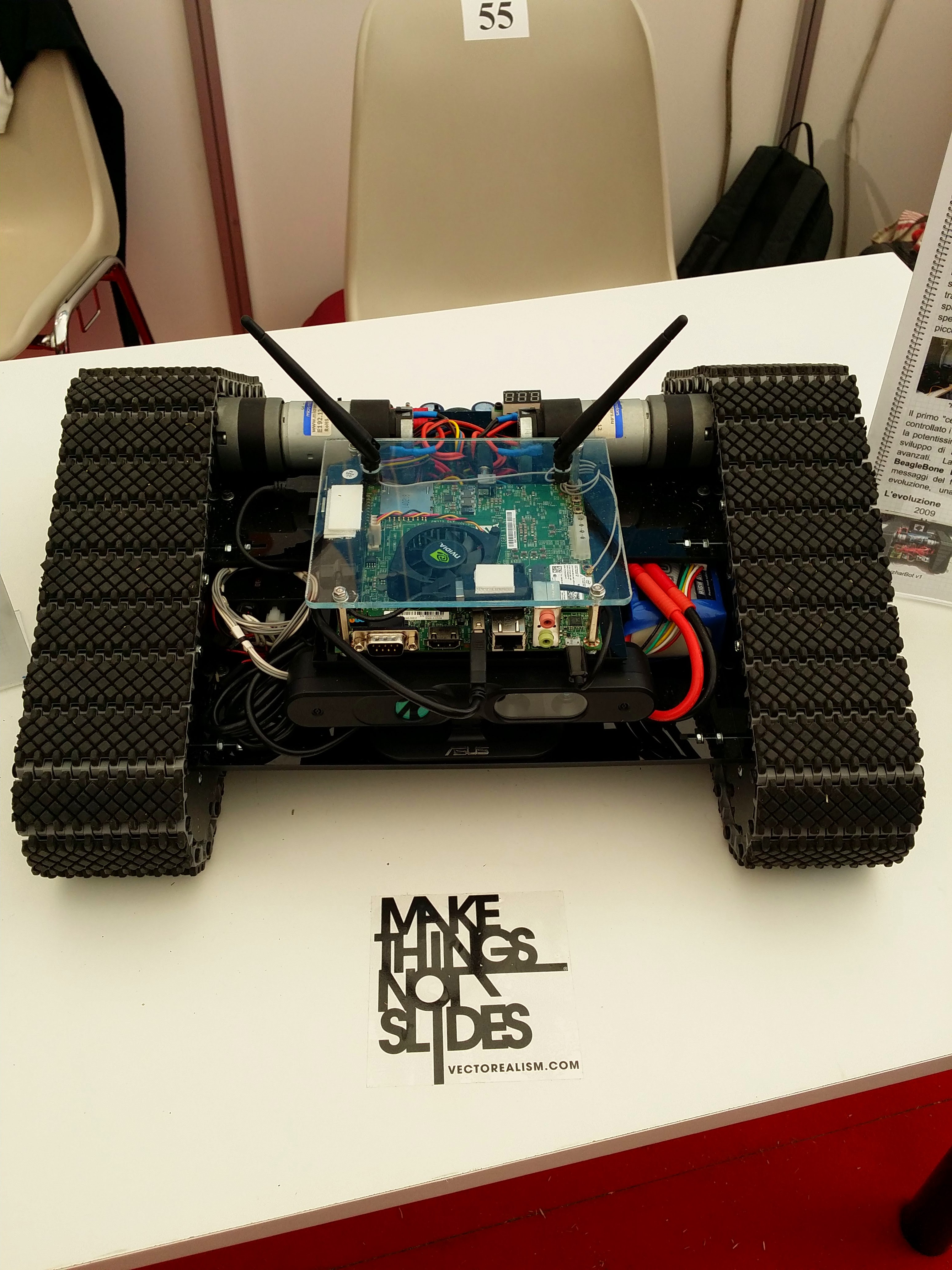

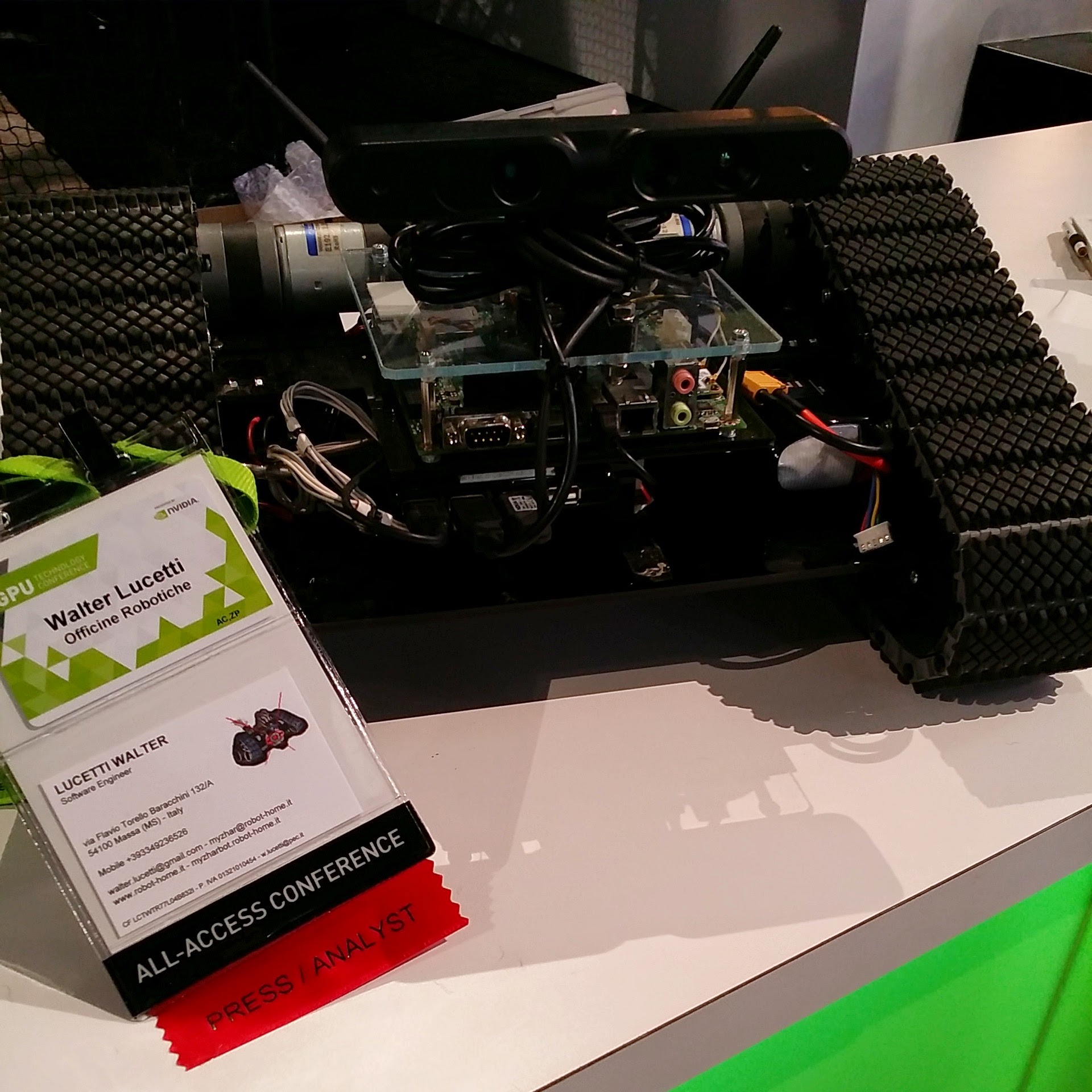

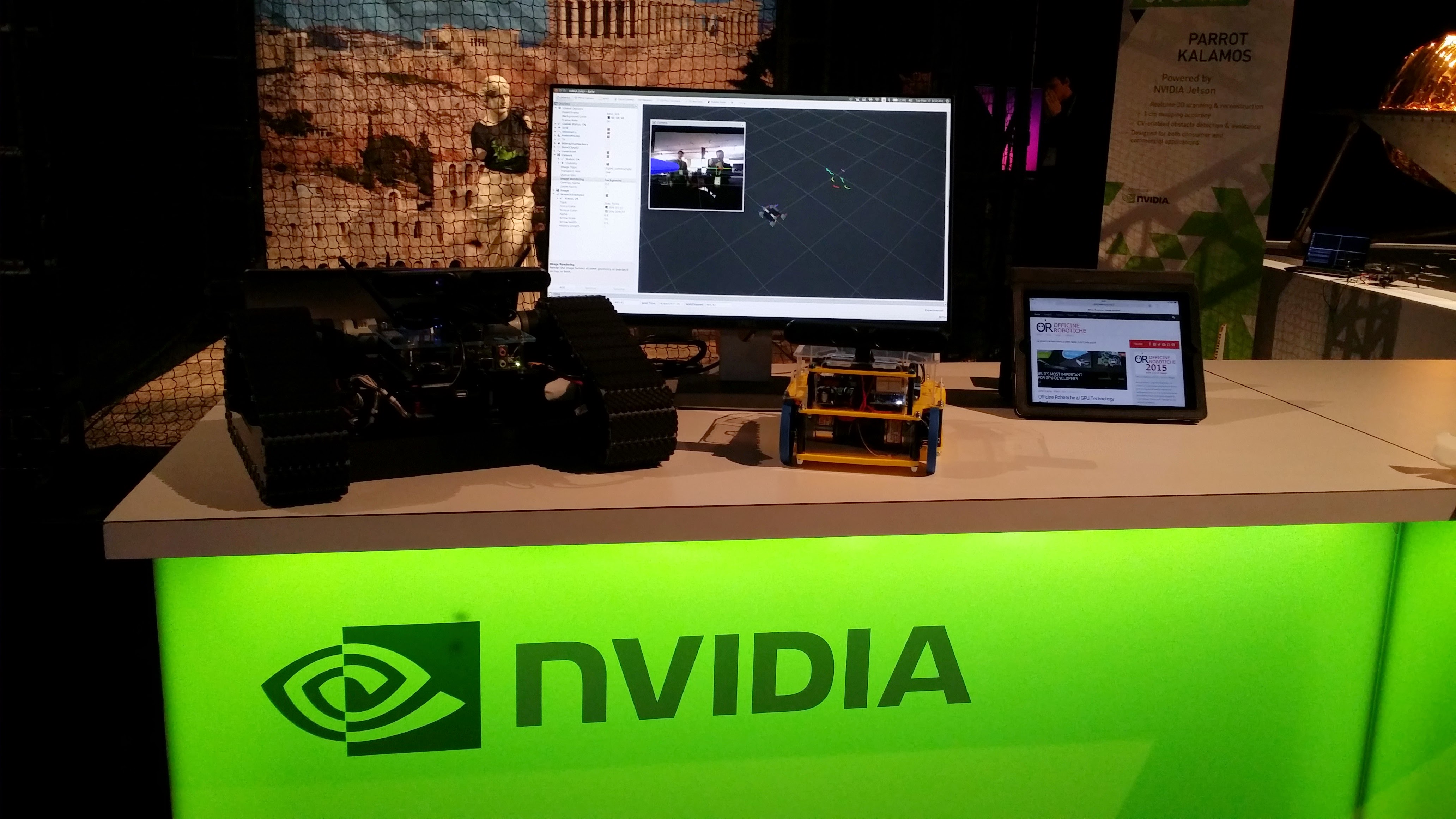

Weeks later, NVIDIA® invited me to showcase MyzharBot at their GTC 2015 booth in San Jose, California. I was thrilled: my first trip to the USA, representing NVIDIA®, and demonstrating my robot to a global audience!

A new chapter was unfolding—a new robot, a powerful brain, and an exciting adventure ahead.

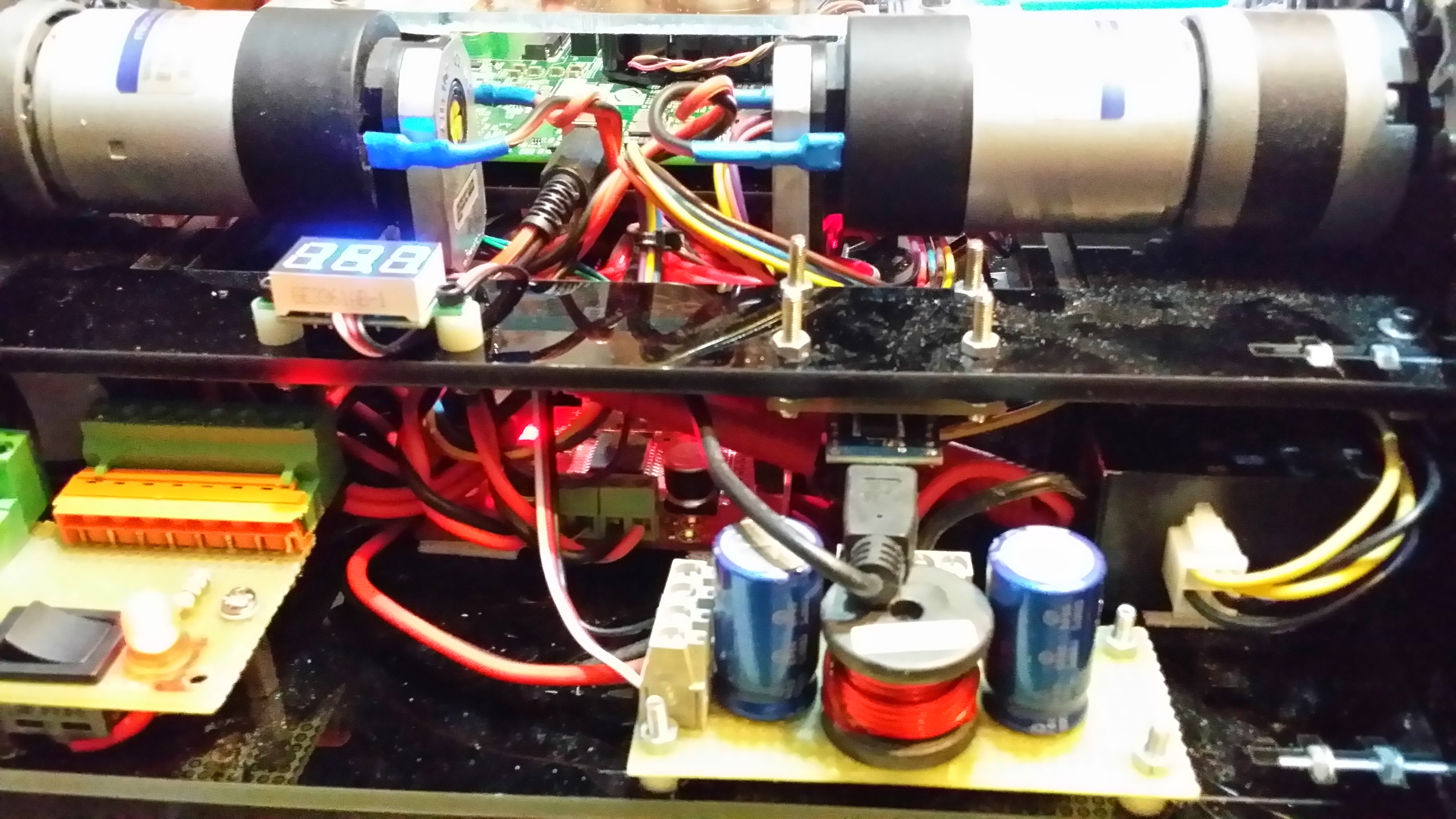

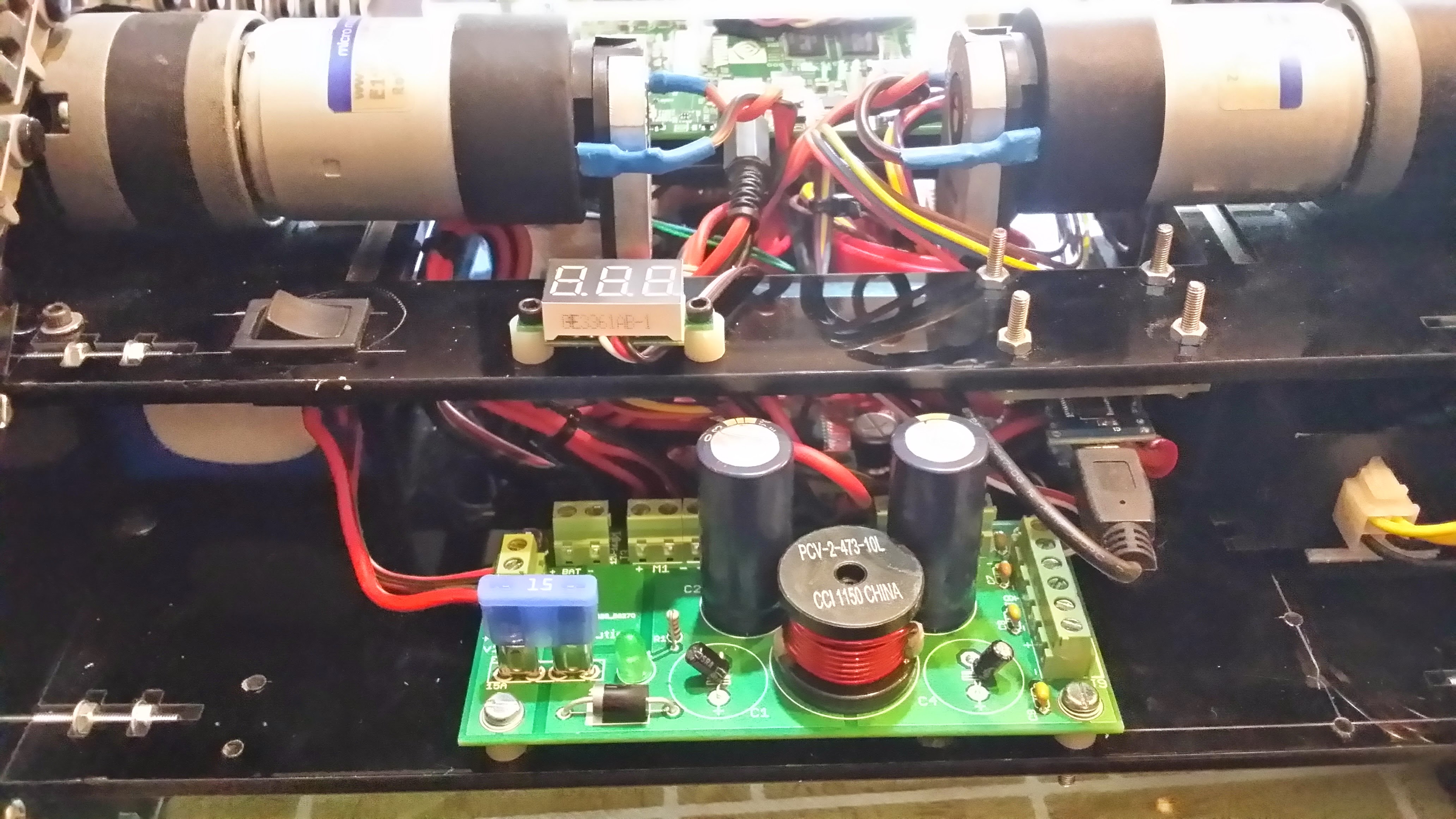

I was not alone on this journey: my old friend Raffaello Bonghi collaborated with me on the firmware for the motor driver board, ensuring seamless integration with the new system. For this reason, I asked NVIDIA® to invite him to GTC 2015 as well, and they gladly accepted. It turned out to be a great opportunity for Raffaello too: he is now a Technical Marketing Engineer at NVIDIA®.

It was an unforgettable experience because we also had the chance to visit San Francisco for two full days after GTC, exploring the city and its iconic landmarks.

Software Overhaul: Embracing ROS

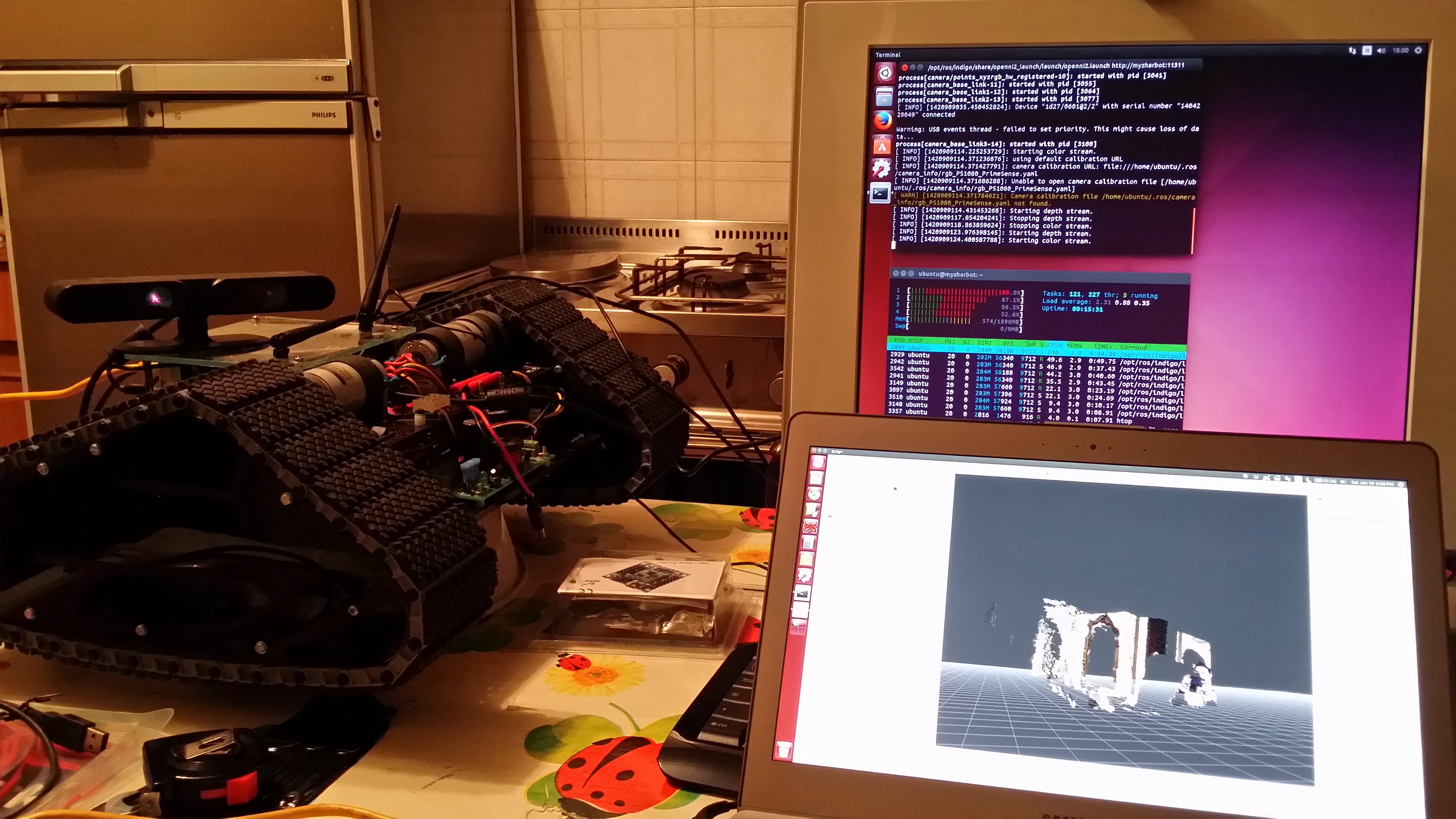

Around the same time, I decided to abandon my custom robotics framework and adopt ROS (Robot Operating System) as MyzharBot’s primary software platform. I no longer remember whether it was ROS Hydro or Indigo, but within weeks I had replicated my previous functionality while leveraging ROS’s extensive ecosystem. Most importantly, I could finally focus on high-level robotics software without worrying about low-level details like data communication, hardware abstraction, or control interfaces: ROS handled it all.

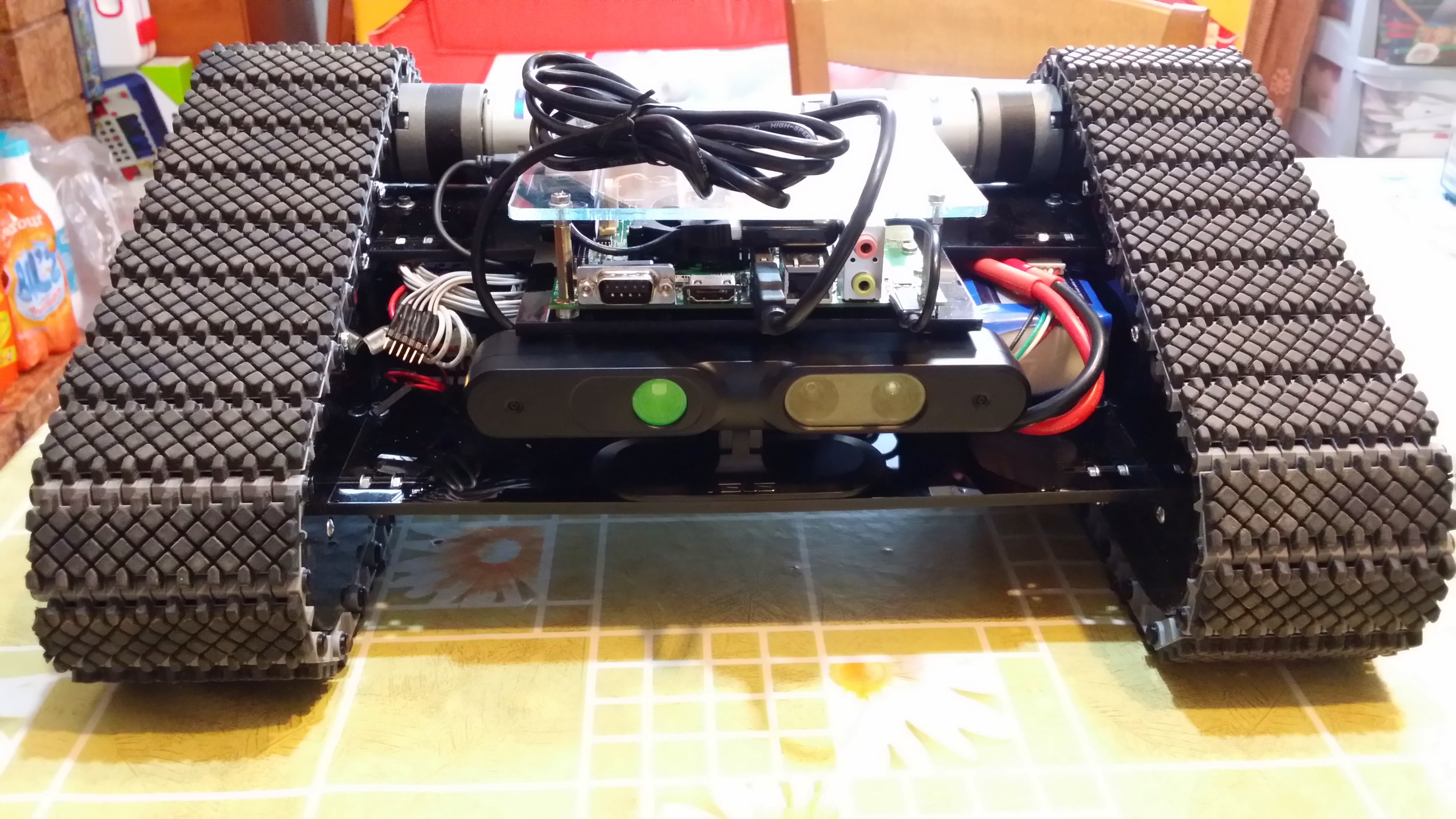

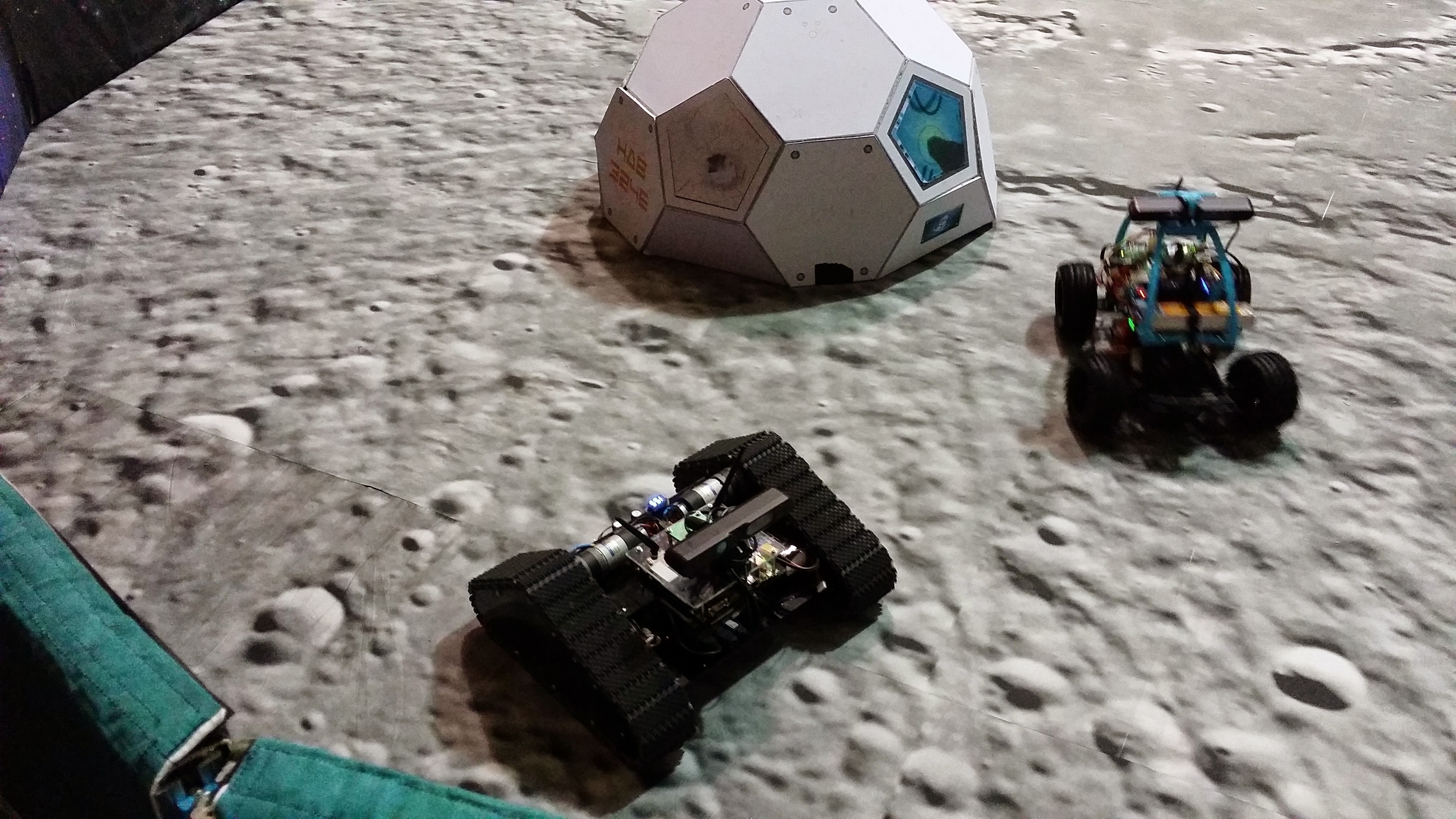

This was the first step toward fully autonomous navigation. I implemented a node that allowed MyzharBot to navigate safely while avoiding obstacles, using 3D data from the Asus Xtion Pro Live RGB-D camera (see below).

The source code is still available on GitHub, in a fork of the original ros_robot_wandering_demo repository that I published under the Officine Robotiche GitHub account. I was a founder and member of Officine Robotiche in those years.
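For readers curious about the idea, here is a minimal sketch in Python of how a depth-based wandering behavior can work. It is not the code from the repository: the function names, the threshold values, and the split of the depth image into three sectors are all assumptions made for illustration. The input is a depth frame in meters, given as a list of rows, where invalid pixels are reported as 0 or NaN.

```python
from typing import List, Tuple

# Hypothetical tuning values, chosen only for this illustration.
STOP_DISTANCE_M = 0.6   # react when something in front is closer than this
CRUISE_SPEED = 0.3      # forward speed in m/s when the path is clear
TURN_RATE = 0.8         # rotation speed in rad/s when avoiding


def sector_min_depths(depth_m: List[List[float]]) -> Tuple[float, float, float]:
    """Return the nearest valid depth in the left, center, and right thirds."""
    width = len(depth_m[0])
    third = width // 3
    bounds = [(0, third), (third, 2 * third), (2 * third, width)]
    mins = []
    for start, end in bounds:
        # `d > 0.0` is False for both 0 and NaN, so invalid pixels are skipped.
        valid = [d for row in depth_m for d in row[start:end] if d > 0.0]
        # Simplification: a sector with no valid data is treated as free space.
        mins.append(min(valid) if valid else float("inf"))
    return mins[0], mins[1], mins[2]


def wander_command(depth_m: List[List[float]]) -> Tuple[float, float]:
    """Return (linear m/s, angular rad/s) for one depth frame."""
    left, center, right = sector_min_depths(depth_m)
    if center > STOP_DISTANCE_M:
        # Nothing close in front: keep driving straight.
        return (CRUISE_SPEED, 0.0)
    # Obstacle ahead: stop and rotate toward the side with more free space.
    # A positive angular value means a counter-clockwise (left) turn.
    angular = TURN_RATE if left >= right else -TURN_RATE
    return (0.0, angular)


if __name__ == "__main__":
    blocked_ahead = [[2.0, 2.0, 0.4, 0.4, 1.0, 1.0]] * 3
    print(wander_command(blocked_ahead))
```

In a ROS node, the two returned values would typically be copied into the `linear.x` and `angular.z` fields of a `geometry_msgs/Twist` message and published to the velocity command topic. A real robot would also need to handle the camera's minimum range, since an object that is too close can produce no valid depth at all.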

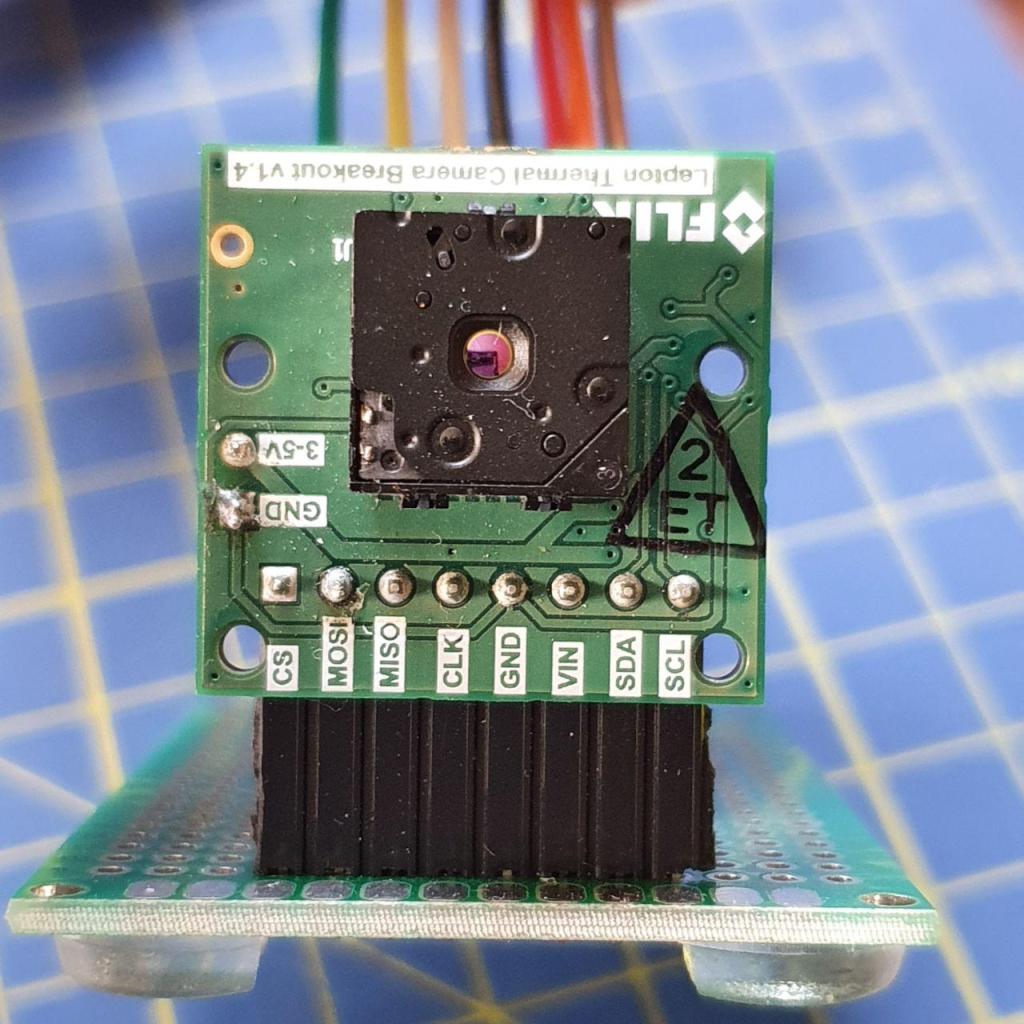

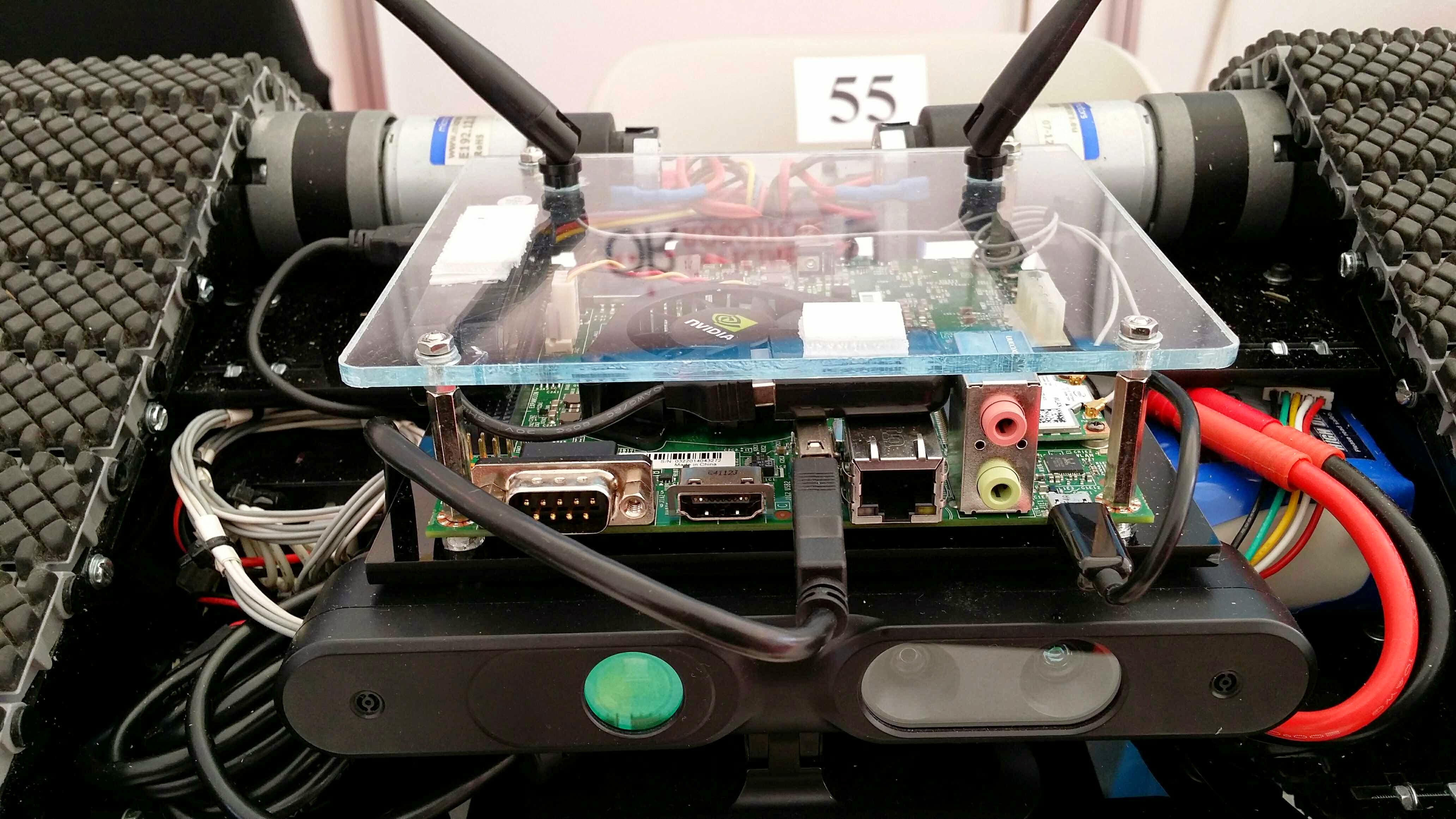

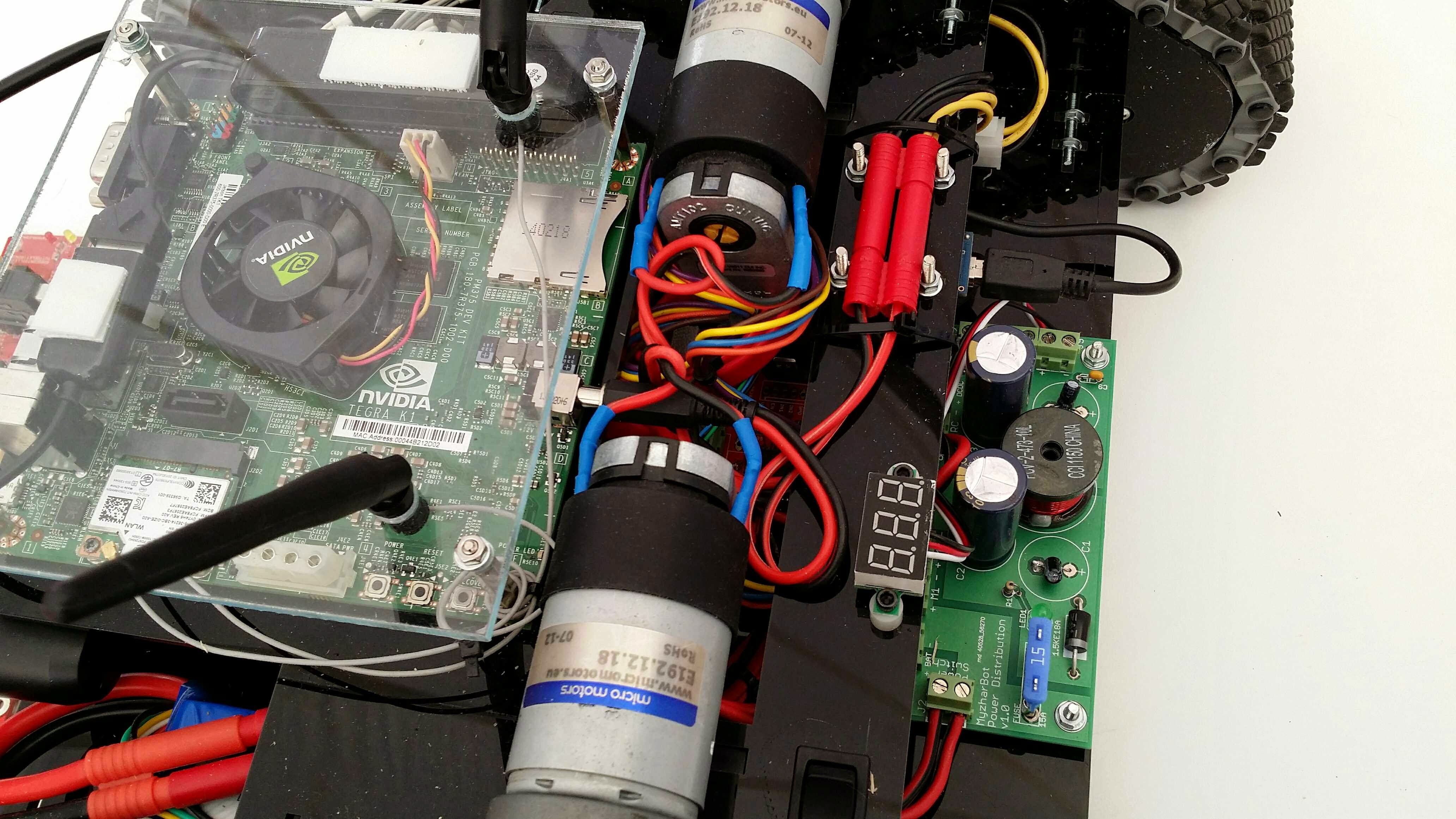

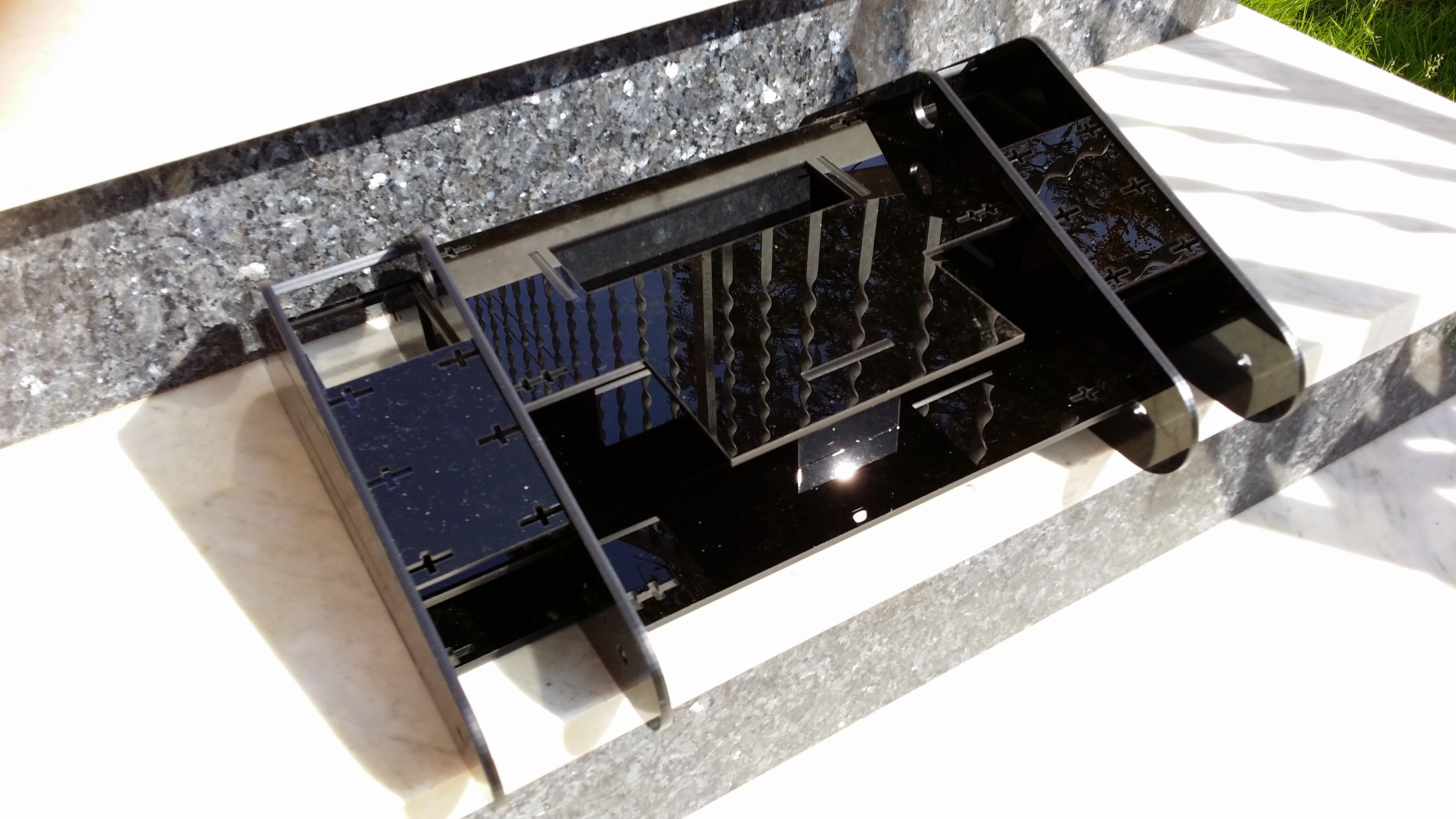

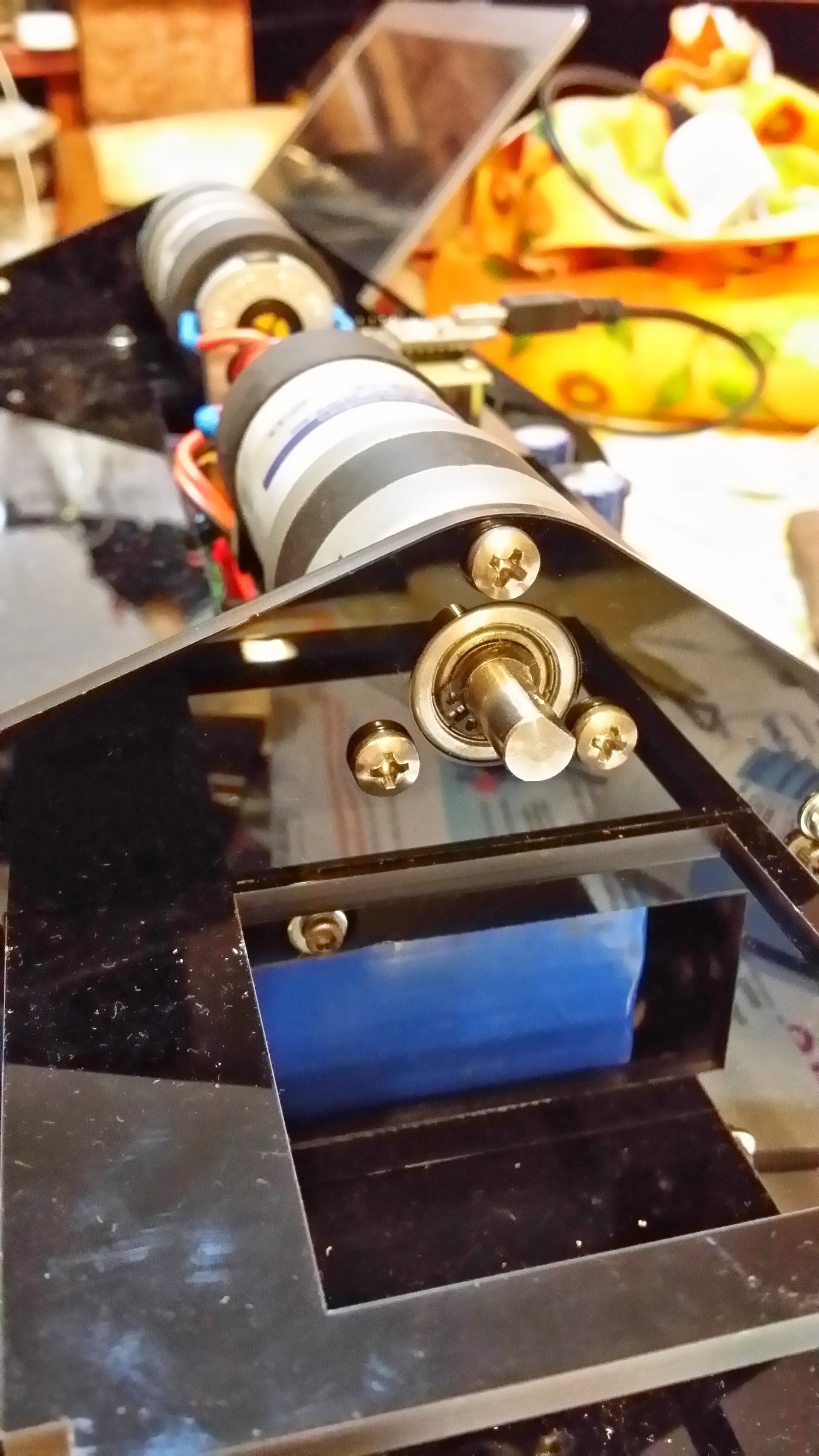

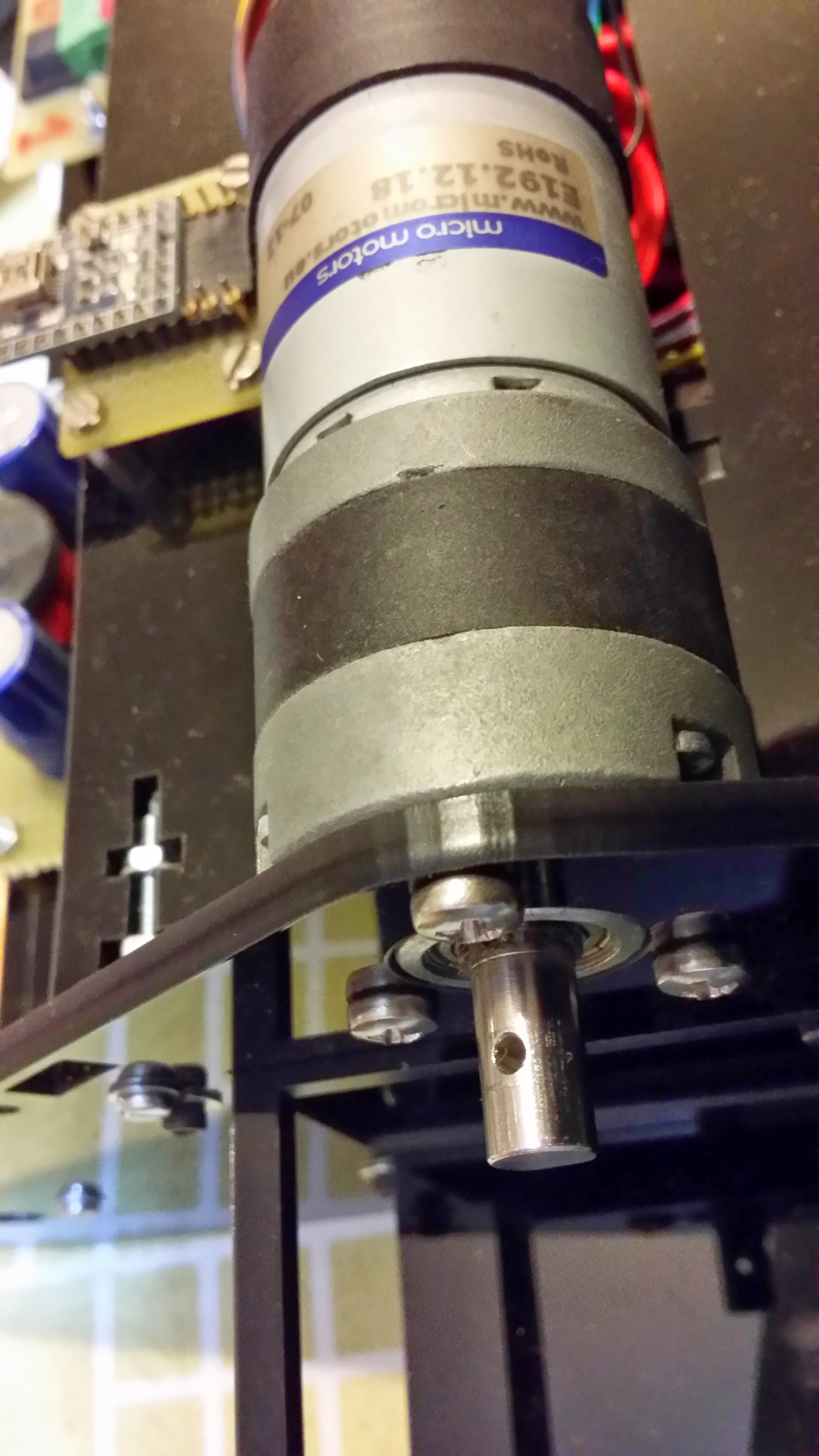

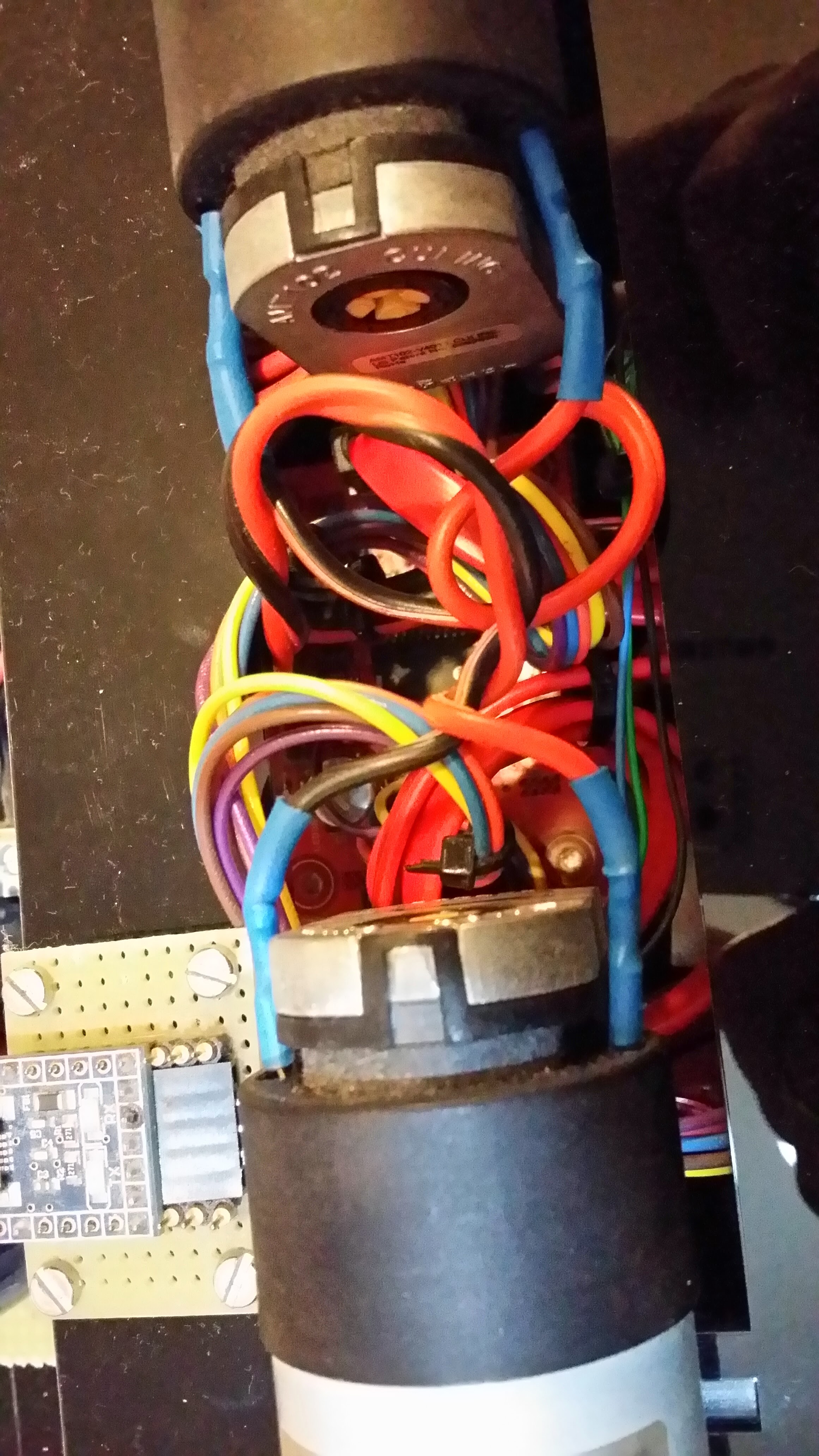

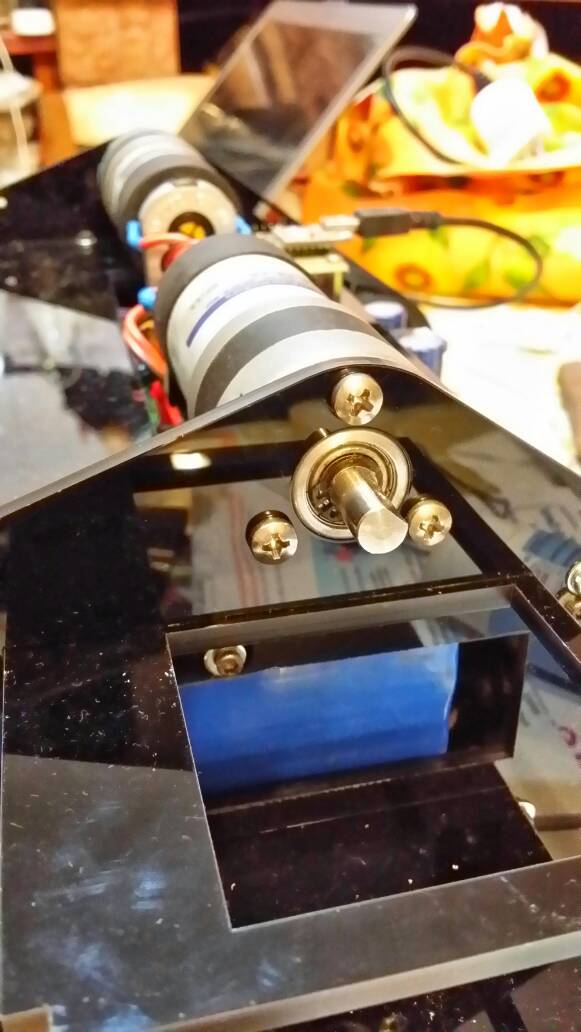

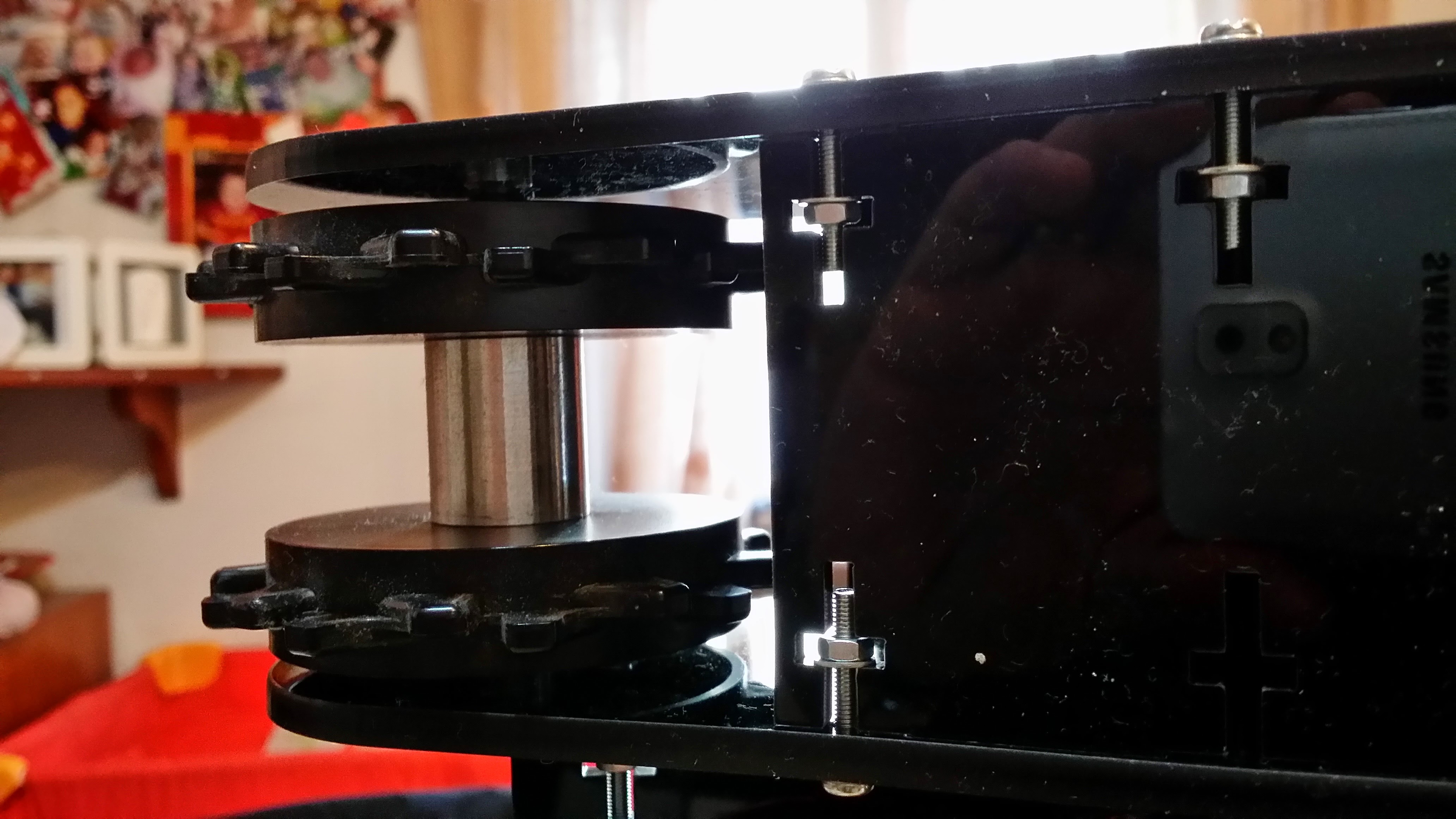

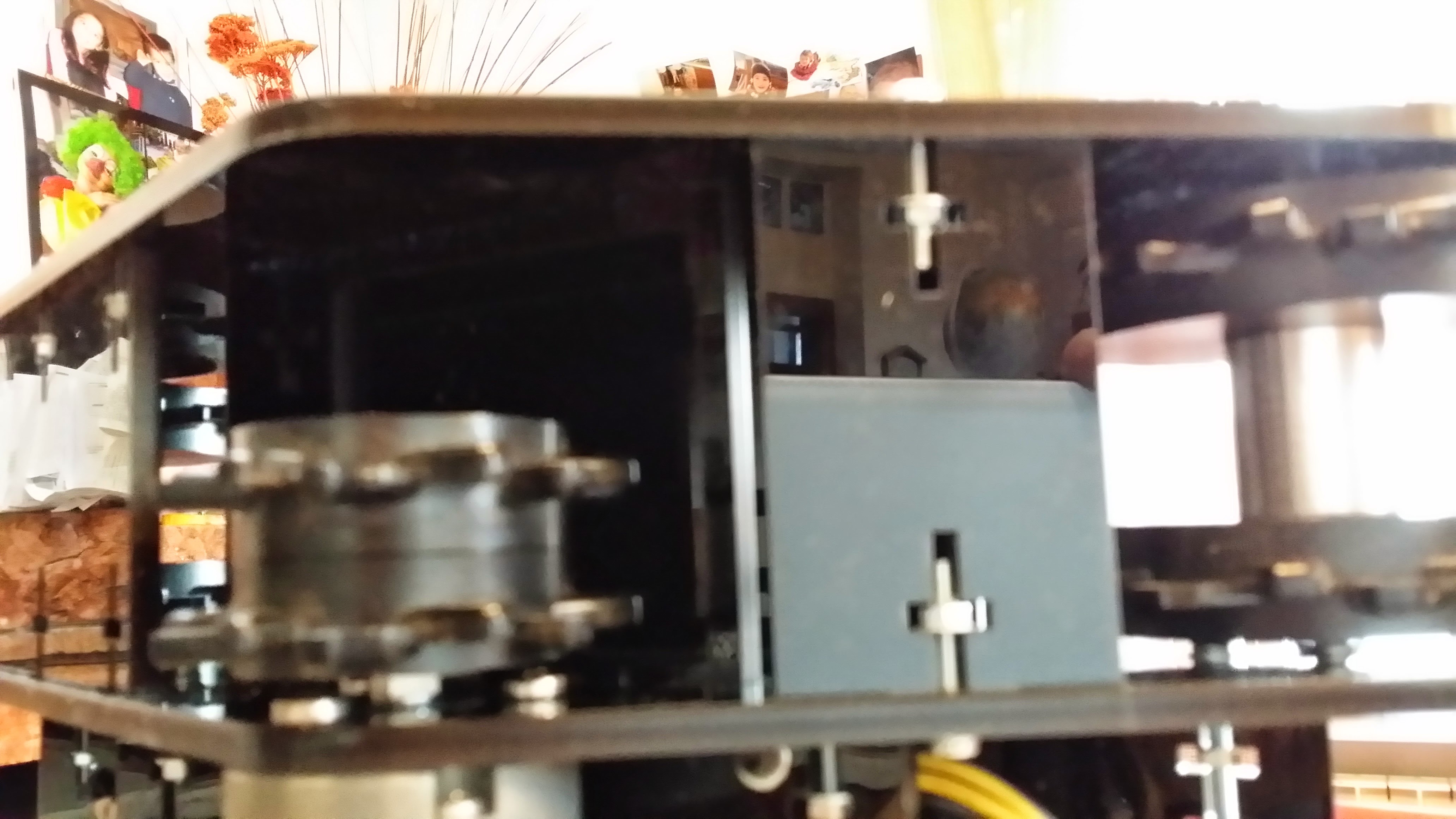

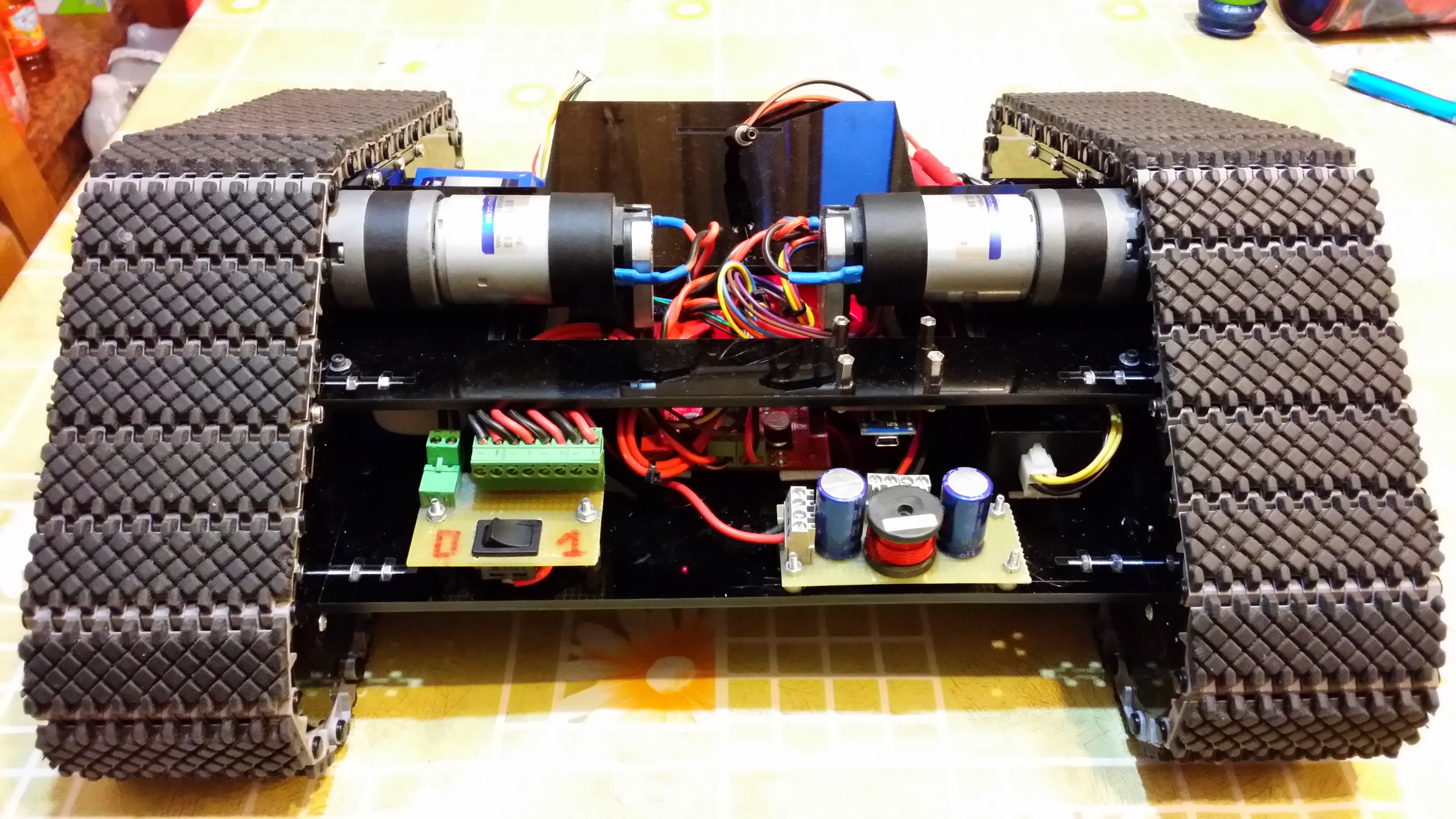

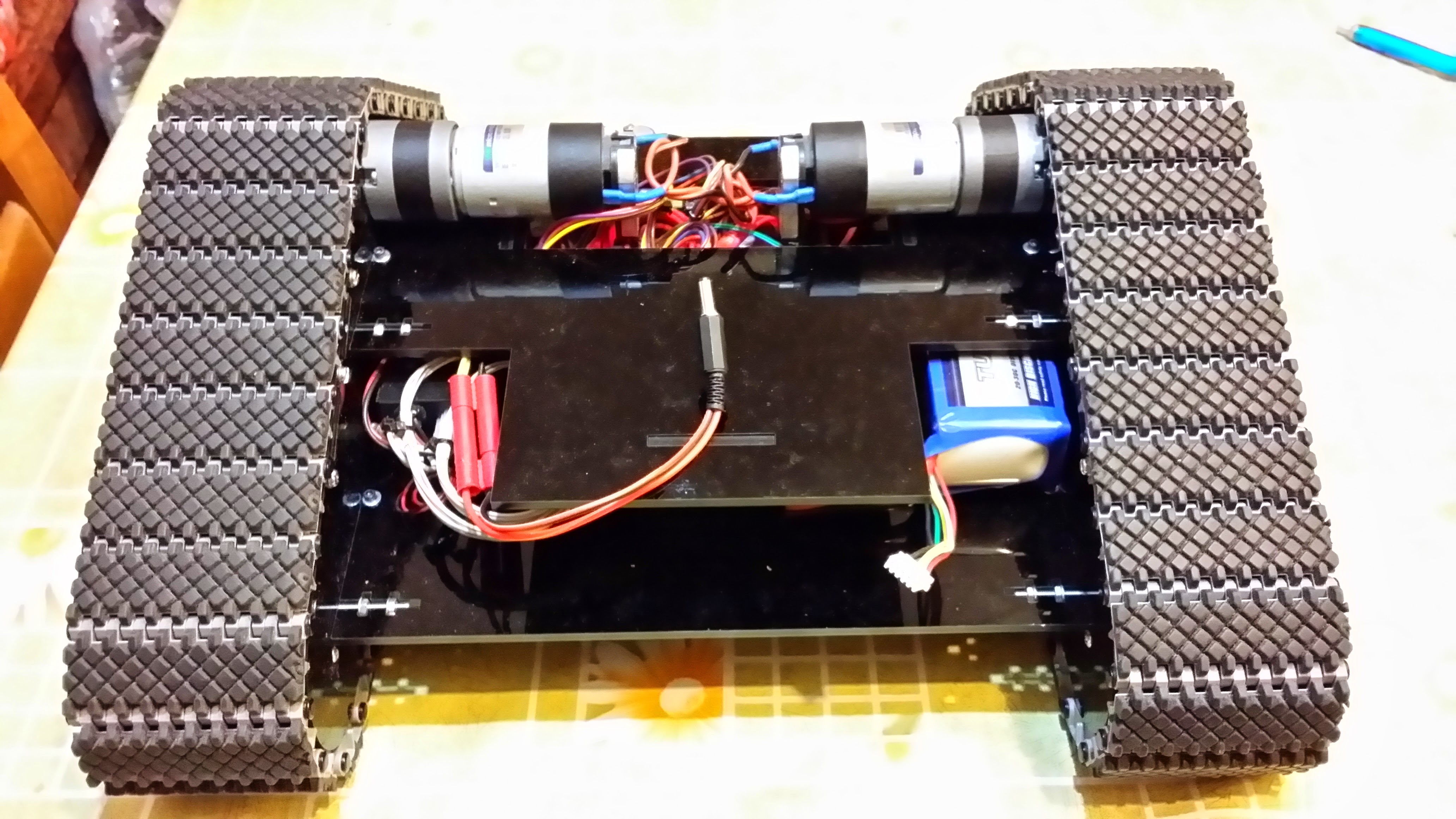

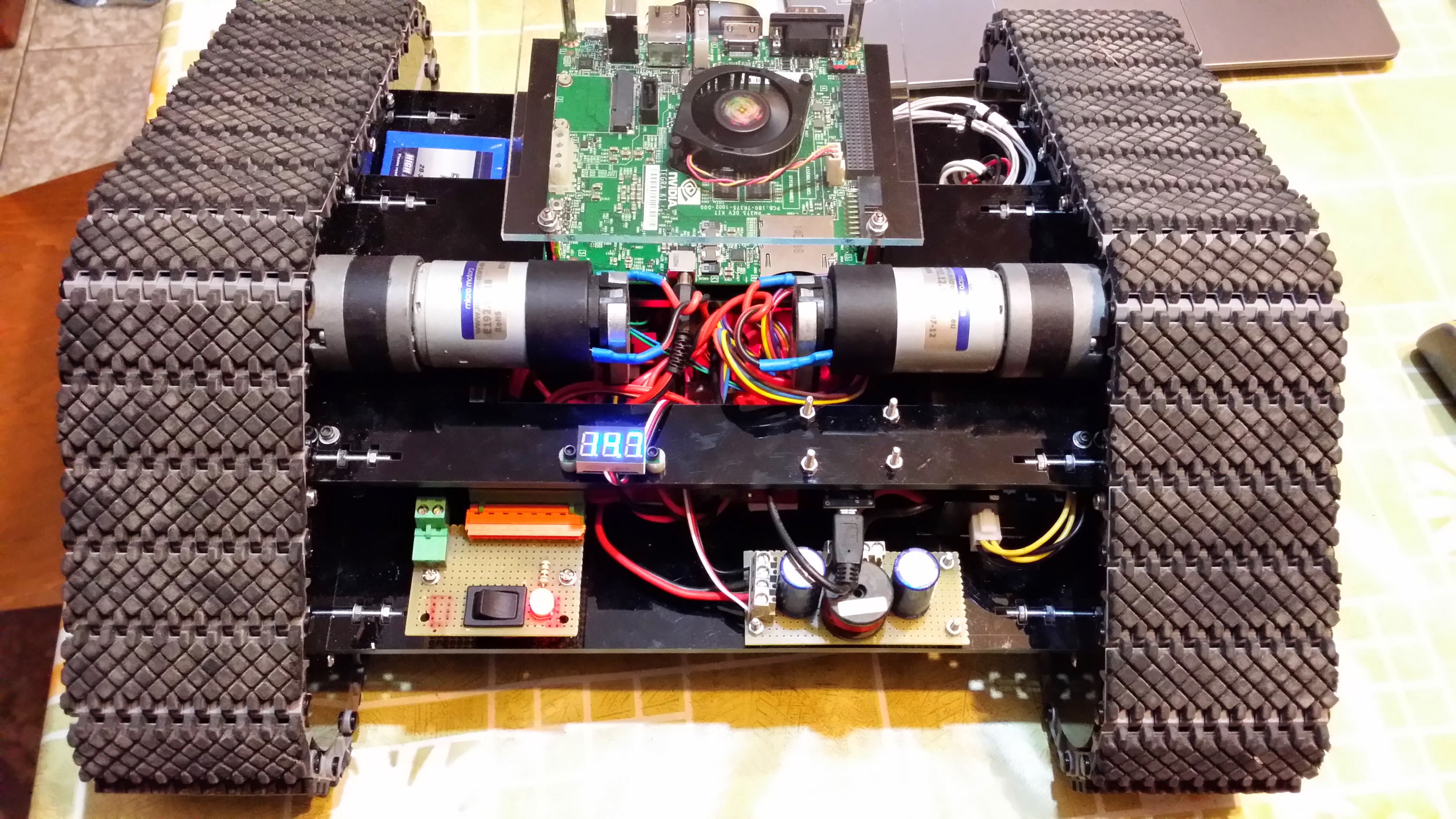

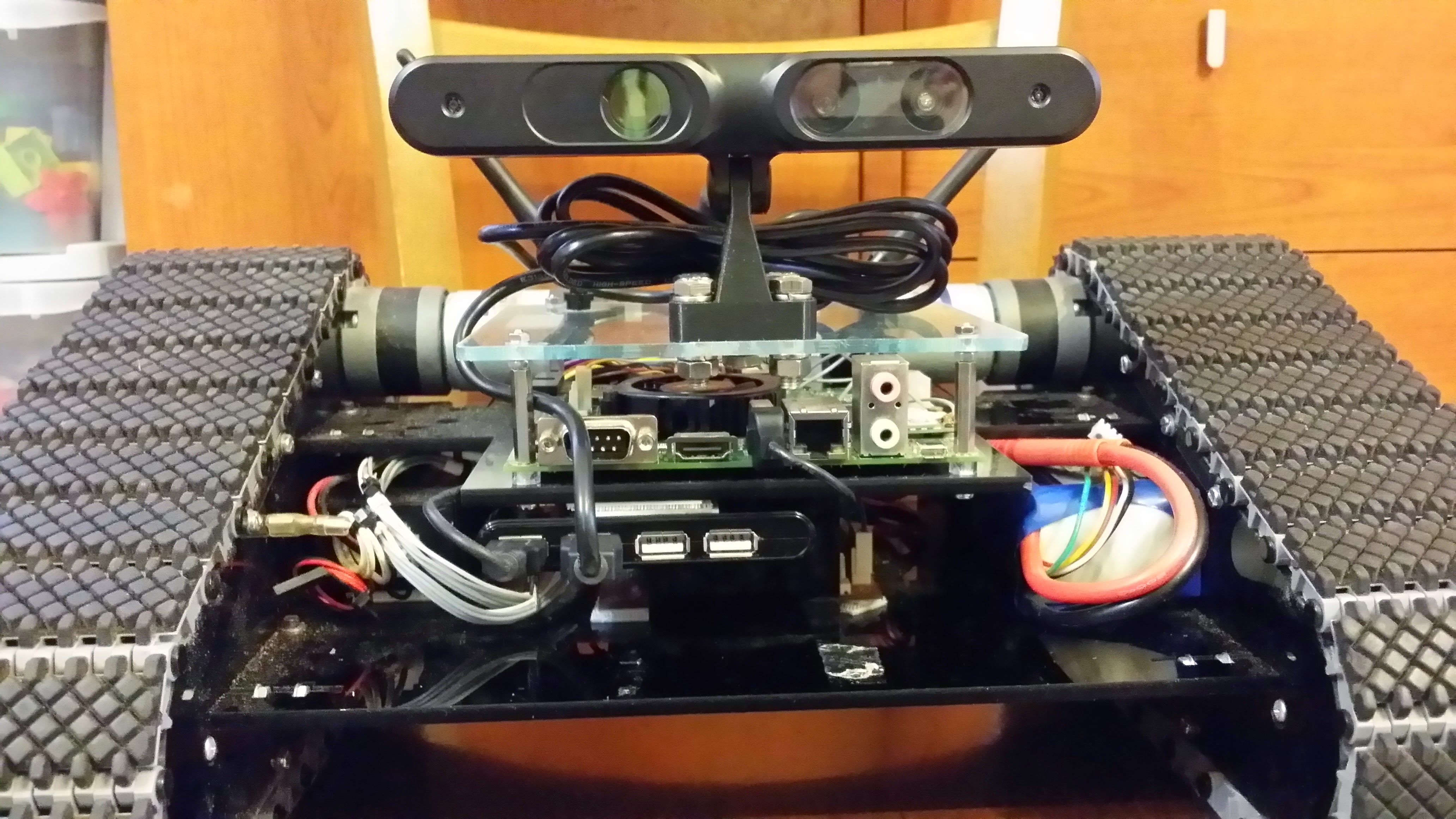

Hardware Upgrades

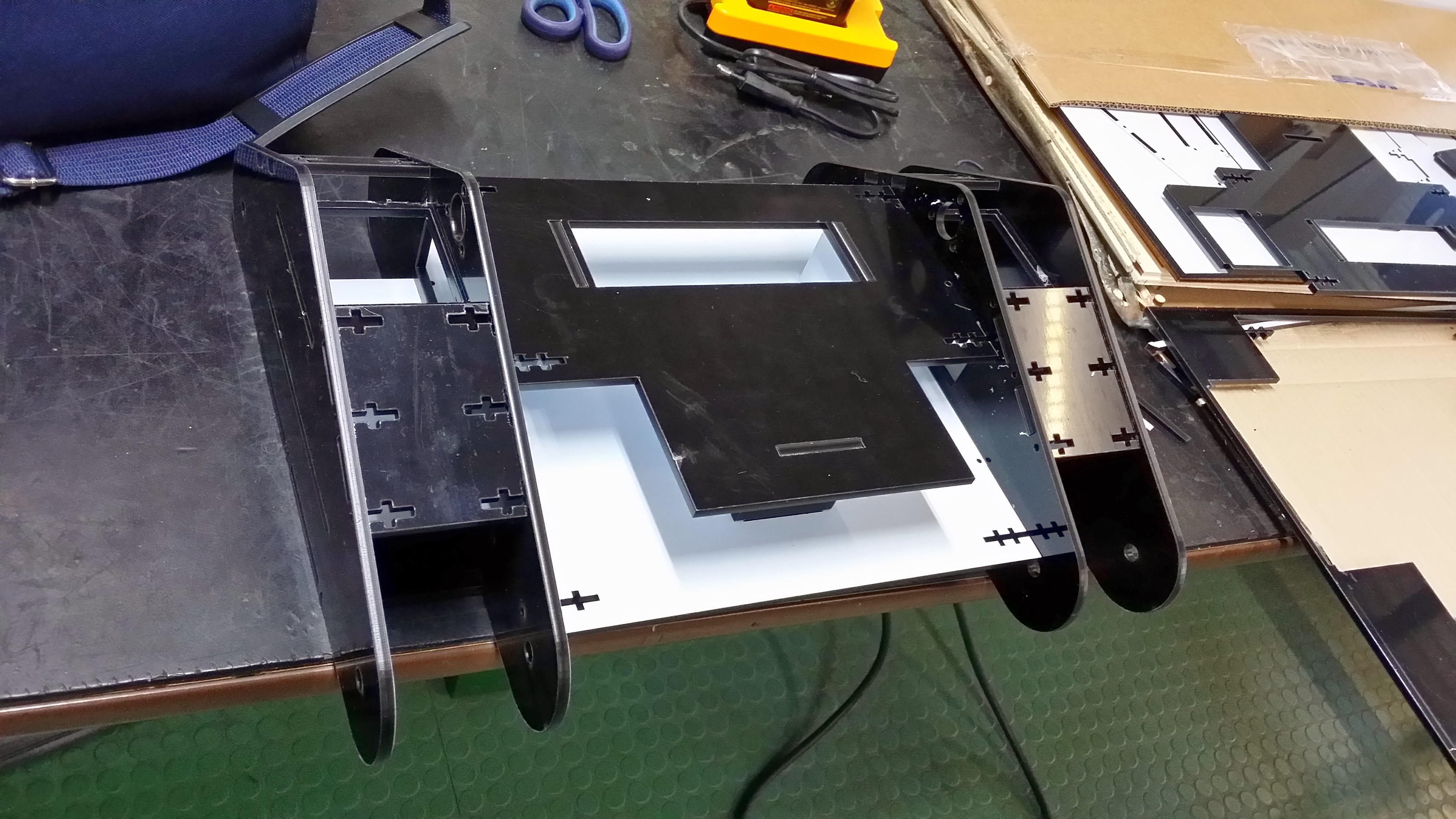

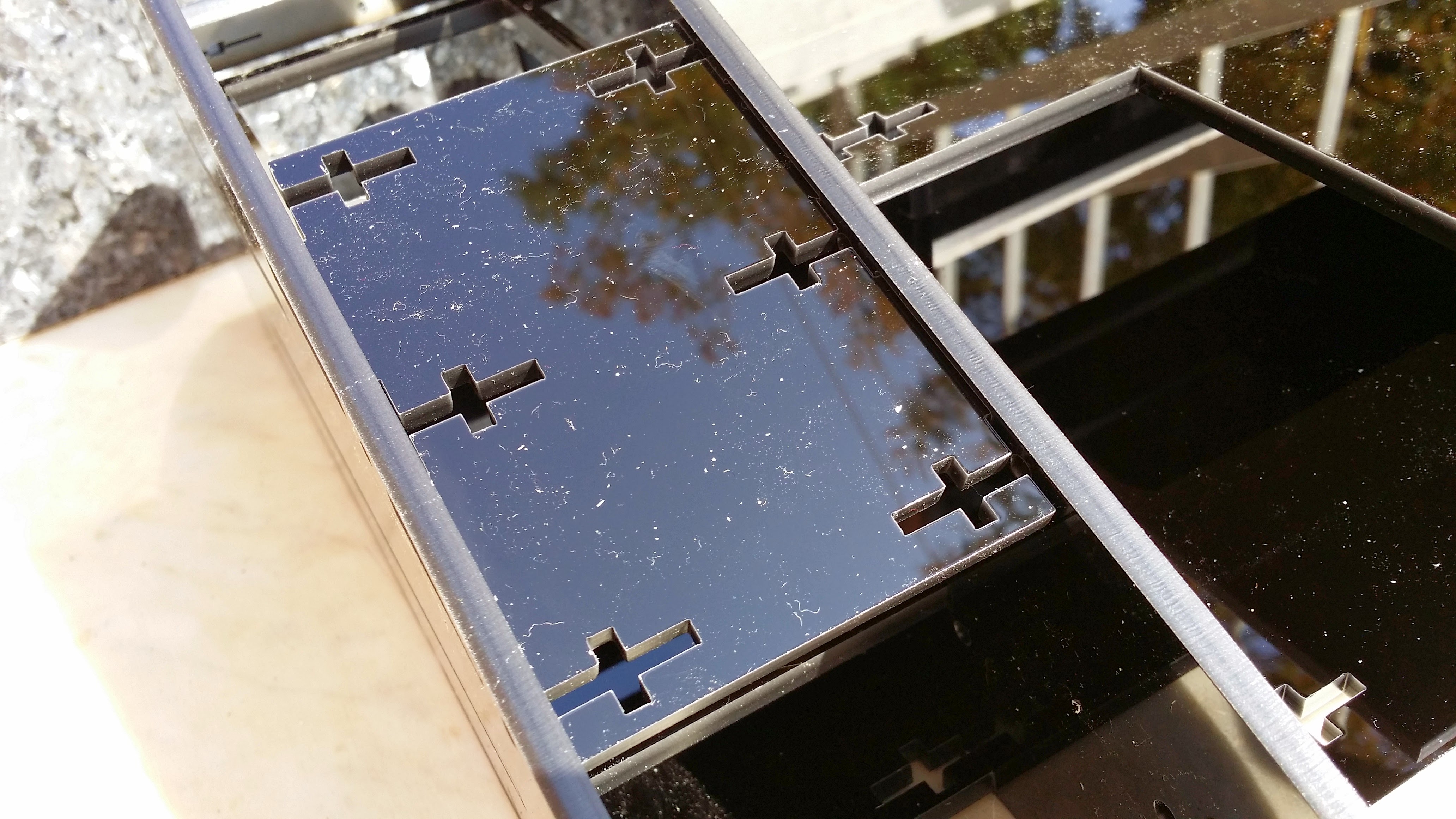

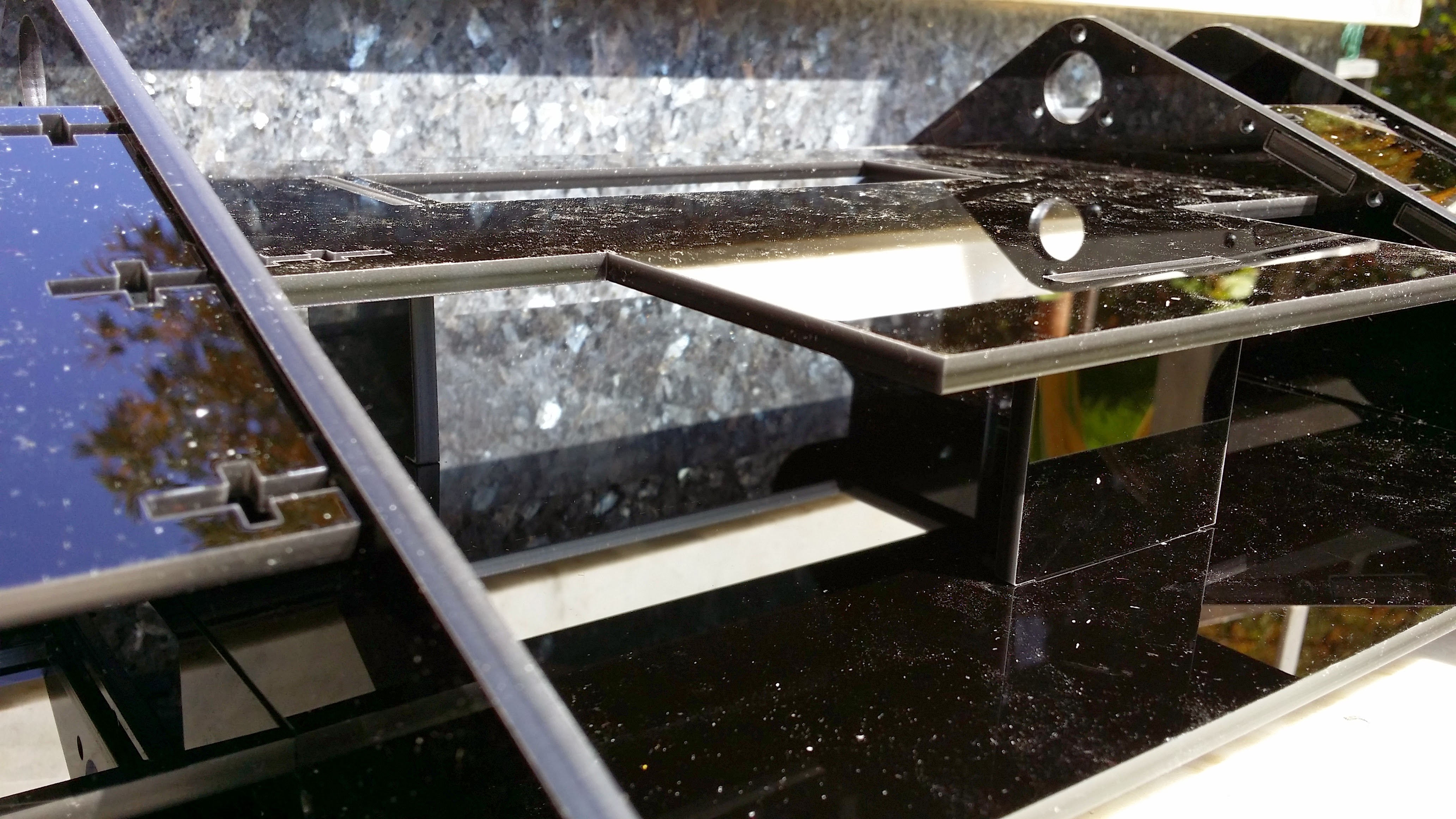

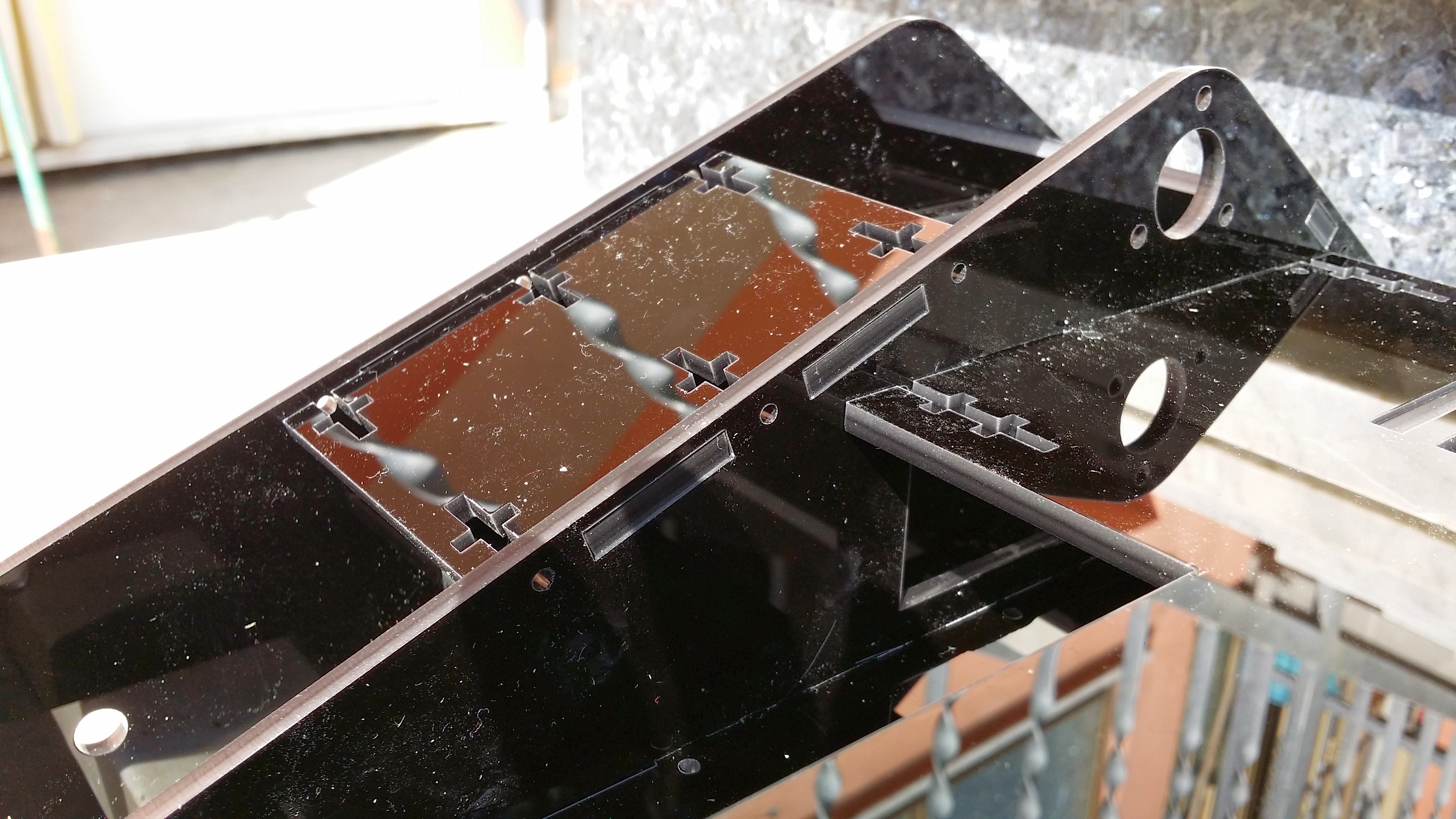

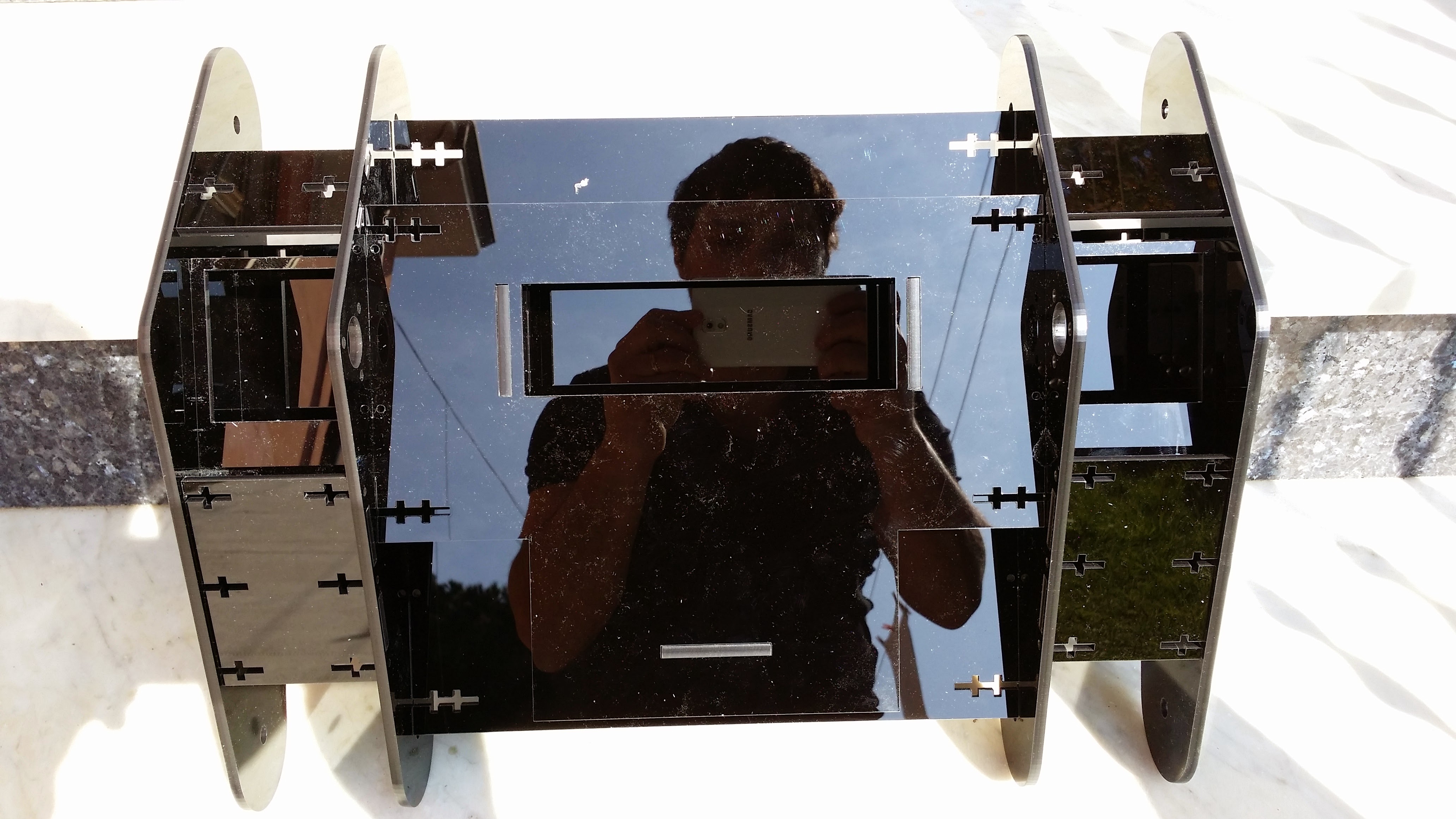

The hardware received significant upgrades. I redesigned the laser-cut plastic chassis to accommodate the Jetson TK1 board and replaced the dual-webcam setup with an Asus Xtion Pro Live RGB-D camera. This addition provided depth information for obstacle detection and mapping at zero cost, thanks to the open-source OpenNI drivers.

An Encounter That Changed My Life

At GTC 2015, during a lunch break while I was manning the NVIDIA® booth alone, a young woman approached holding a silver device with two camera eyes. She introduced herself as Cecile Schmollgruber, co-founder and CEO of Stereolabs, and showed me her revolutionary product: the first ZED Stereo Camera. This high-resolution passive stereo camera featured perfect CMOS sensor synchronization and a powerful CUDA-accelerated SDK: a computer vision enthusiast’s dream.

Cecile and Edwin Azzam, another Stereolabs co-founder and CTO, were announcing the ZED’s launch at GTC with a booth at the conference. Their SDK leveraged CUDA for real-time processing, generating high-resolution depth maps and point clouds with natively synchronized color information.

I was fascinated by the ZED’s potential for robotics, and we exchanged contacts. I promised to connect them with the NVIDIA® team, and a few hours later, I led one of the NVIDIA® managers to their booth. This encounter sparked a long-lasting friendship and collaboration that led me to join Stereolabs in 2018 as a Senior Software Engineer, where I became a key ambassador and contributor to their products and SDKs.

Spoiler alert: the ZED camera was later integrated into MyzharBot-v4.

Below are two of the first ZED cameras released, which Stereolabs donated to me after GTC to use with the next version of MyzharBot. They still work beautifully and I treasure them. Behind them is a Jetson Champion cup, which I love using for breakfast as a daily reminder of how far I’ve come since those early days.

Videos

A couple of videos from the MyzharBot-v3 era:

Photos

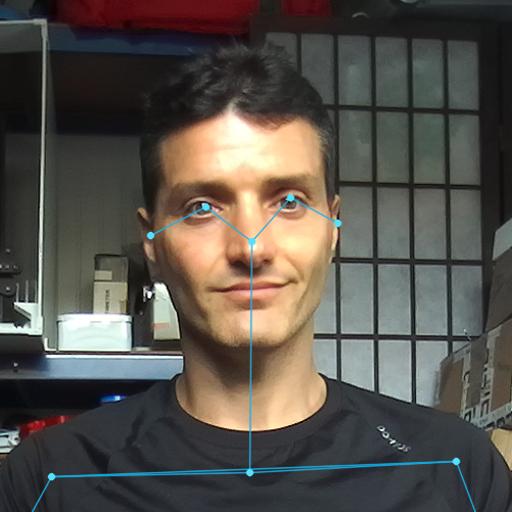

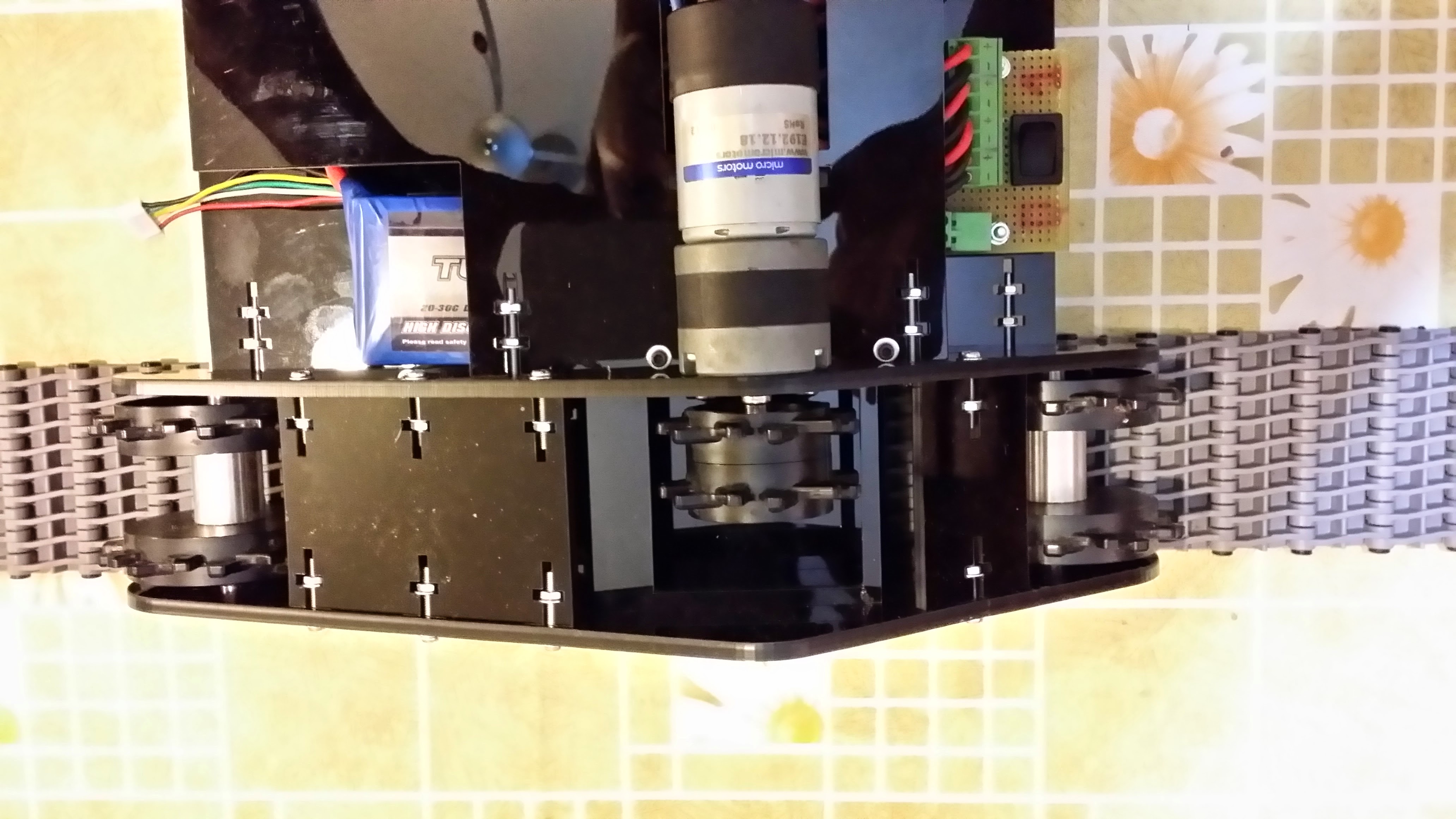

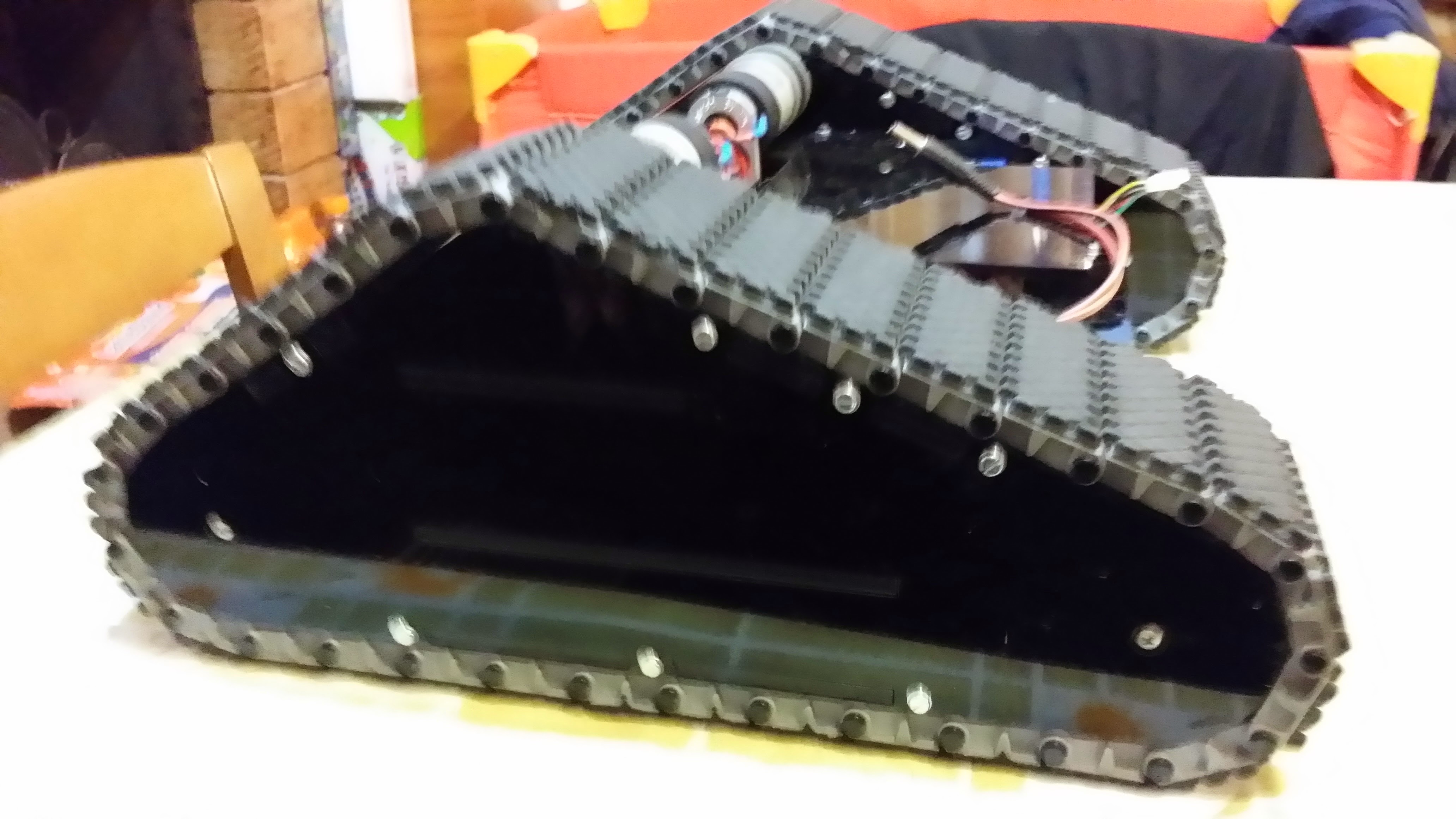

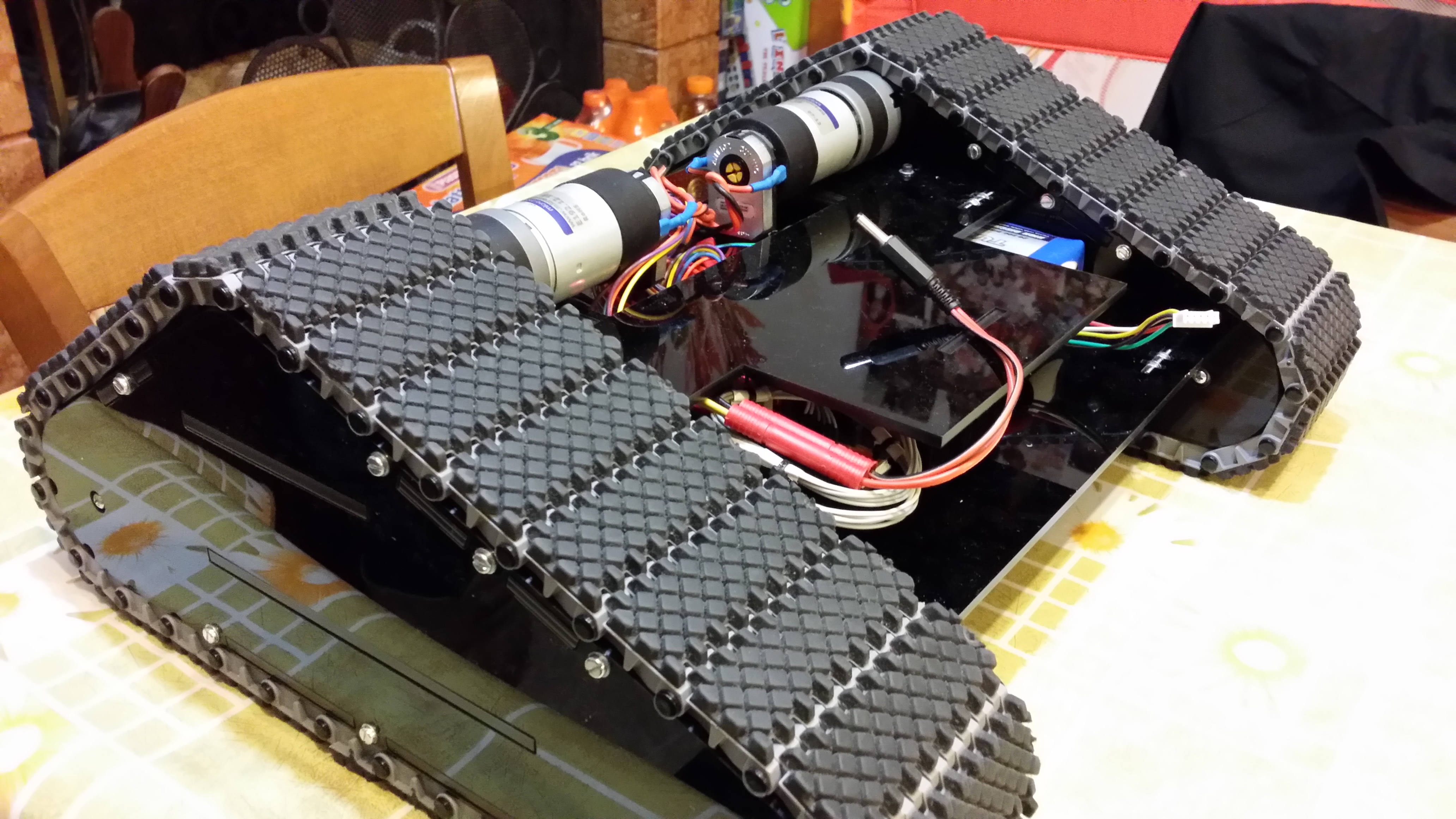

A few memorable moments from the MyzharBot-v3 era: building the robot, developing its software, showcasing it at Maker Faire 2014, and attending GTC 2015.
